Introduction

Flavor sensation is a complex phenomenon in which concentration, prior perceptions, cultural and immediate context, individual physiology, the combination of various tastants, as well as other sensations such as smell or texture play an important role1,2,3. Determining the taste of even a single molecule in isolation remains a time- and labor-intensive process involving human panelists or electronic tongues4,5. Computational tools capable of predicting the taste of a molecule in silico could meaningfully accelerate this task and assist in the discovery of novel tastants.

A molecule elicits a taste perception by interacting with taste receptors in the mouth as determined by its steric and electronic properties6. As a result, the taste of a molecule should be a function of its underlying chemical structure. Over the past decade, machine learning methods have repeatedly demonstrated the ability to computationally predict molecular properties when provided with sufficient data7. By learning the relationship between molecular data and chemical structure, these models can predict properties even for compounds for which no data was initially available.

In recent decades, a number of machine learning approaches have been suggested for molecular taste prediction, as has been helpfully reviewed elsewhere8,9,10. Very recently, large language models such as OpenAI’s GPT-3.5 and GPT-4 have been used for taste prediction11,12, although it is unclear how well these proprietary models generalize to molecules not contained in their training data13. Fundamentally, the performance of machine learning methods is limited by the quality and size of the dataset available. Here we curated the largest, public dataset of 15,025 molecules and their associated taste labels, which is fully accessible in accordance with the FAIR principles14.

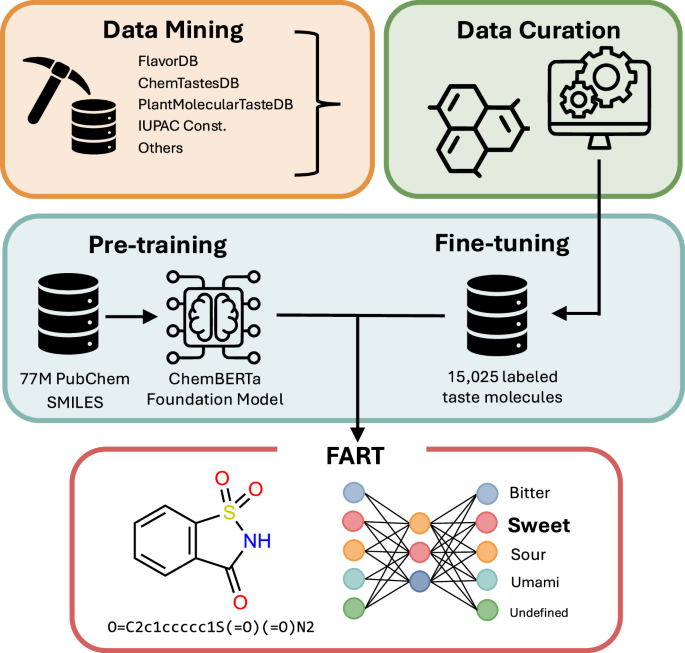

Using this dataset, we developed a chemical language model using the transformer architecture15, named Flavor Analysis and Recognition Transformer (FART). This new model compares favorably to baseline machine learning methods in a multi-class setting. FART is the first chemical language model developed for molecular taste prediction. While FART is trained to predict four taste classes in parallel, it consistently outperforms models specifically designed for binary classification tasks, e.g. sweet/non-sweet or bitter/non-bitter. Central to the success of transformer models is the pre-training, fine-tuning paradigm16,17. In natural language processing (NLP) for which the transformer architecture was originally developed, a model is first pre-trained on a large corpus of text allowing it to learn general language structures and semantic relationships. This broad foundation is then refined through fine-tuning on smaller, domain-specific datasets, adapting the model to excel at specialized tasks. Analogously, FART is based on a pre-trained foundation model for chemistry, ChemBERTa18,19, which was then fine-tuned with the dataset presented here, see Fig. 1. Instead of natural language, a chemical language model takes SMILES (Simplified Molecular Input Line Entry System)20 as input, a text-based notation that encodes chemical structures into linear strings. FART is hence capable of predicting taste from chemical structure alone, obviating the need for further data collection from either experiments or computations.

Unlike previous approaches, which were limited to predicting one to two taste classes, FART is capable of classifying molecules across four of the basic human taste categories: sweet, bitter, umami and sour. The category of “salty" was excluded as salty taste is principally mediated by physical properties such as ion size and solubility rather than molecular structure21, precluding structure-based molecular taste prediction models. Instead, we opted to include an additional category “undefined", which combines all molecules which cannot be assigned to any of the four taste categories. The category of “undefined" hence encompasses salty, tasteless as well as molecules with ambiguous taste profiles.

FART is the first chemical language model for taste prediction of small molecules capable of predicting the four common taste categories in parallel. To be most useful for food scientists, such a machine learning model should not behave as a black box and allow for at least some rationalization of its predictions. The transformer architecture offers opportunities for interpretability through an analysis of its attention mechanism15,22 or gradient-based interpretability methods. The latter evaluates how changes in the input (such as atomic structure) impact the model’s predictions, attributing the result to specific features23. Using SMILES as input further allowed for the deployment of a confidence metric to quantify the uncertainty of the model’s predictions. In the future, robust and interpretable taste prediction could advance drug formulation and rational food design as well as help elucidate the interaction of molecules with their respective taste receptors.

Results

The performance of the models was evaluated on a subset of 15% (or 2254 molecules) of the dataset, which had been excluded from the training. The test set used for evaluation constitutes an independent, representative, and randomized subsample of the full dataset. Because molecules were deduplicated, no molecules seen during training exist in the test set. All models were evaluated on the same test set for various metrics, see Table 1. While accuracy measures how a model performs across all five taste categories, other metrics need to be calculated on a per-class basis. For the class “sweet" for example we would evaluate precision (proportion of predicted sweet molecules which are truly sweet), recall (proportion of truly sweet molecules that were also predicted sweet), the F1 score (which combines recall and precision into one number) and the area under the receiver operating characteristic (AUROC, which measures a model’s ability to distinguish between classes). As FART is specifically tasked with parallel predictions across four taste categories, we decided to report overall performance using unweighted (macro) averages across the five taste categories. This choice means that a model cannot compensate bad performance on a minority class (in our case umami) with exceptionally good performance on the other, more common classes. More detailed results for each taste class individually and their weighted averages can be found in the supplementary information (see, Supplementary Tables 2–9).

We compared FART to tree-based classifier models (XGBoost, Random Forest) which are considered strong baselines for classification tasks compared to more complex model architectures such as transformers24. This benchmarking relative to simpler, tree-based classifiers, which often perform exceptionally well with tabular data, is necessary to assess whether the added complexity of the transformer architecture is justified. We also included another deep learning model, Chemprop25, which is a message-passing neural network (MPNN) using graphs as molecular representations. Chemprop uses no pre-training but has repeatedly shown strong performance in molecular property prediction tasks7.

Baseline tree-based classifiers

Notably, the balanced random-forest method, which under-samples the majority class to ensure that all classes are equally represented during training, did not perform better even when considering the more favorable unweighted averages. XGBoost26 combined with a larger albeit unbalanced dataset yielded superior prediction performance. Unlike the transformer models, which are trained on text-based representations of molecules (i.e. SMILES), the baseline classifiers were trained using a vector representation of molecules known as Morgan fingerprints27. Concatenating these fingerprints with an additional set of 15 molecular descriptors that were previously found to be highly correlated with taste28 did not improve performance. This observation is relatively unsurprising given that these descriptors are nearly exclusively based on adjacency matrices and therefore encode little additional information compared to the atom-radius based Morgan fingerprint.

Transformer models

Fine-tuning on the curated dataset led FART to a performance already on par with the best tree-based models showing that fine-tuned transformer models are capable of taste prediction given sufficient data, see Table 1. A common technique to synthetically expand the dataset to improve generalizability is to use SMILES augmentation. By using the fact that the same molecule can be represented by multiple SMILES strings, it is possible to generate such additional non-canonical SMILES for each data point29. SMILES augmentation can help improve performance more generally but is particularly useful in ensuring the model generalizes well to input SMILES which are not canonicalized. Indeed, the performance of the unaugmented iteration of FART dropped markedly (by about 6–10 percentage points across metrics) when non-canonical SMILES were used as input, whereas the performance of the augmented model remains essentially unchanged (see Supplementary Tables 10 and 11 as well as Supplementary Fig. 3 in the SI). Training FART on this augmented dataset resulted in improved performance and made predictions much more robust towards different SMILES of the same molecule. The biggest boost in performance was actually realized for the minority class of umami, where the F1-score increased from 0.25 to 0.50 upon using augmentation, see Supplementary Tables 6–9 in the SI. Furthermore, this augmentation allowed us to construct a confidence metric in which a prediction is only accepted when a consensus is reached across 10 different SMILES representations for the same molecule, see also Section “Confidence Metric”. Notably, data augmentation cannot be used to address data imbalance in the training set. We found that when augmenting in an imbalanced manner, i.e. mostly augmenting our minority class of umami and not augmenting our majority class of sweet, FART greedily classified all canonicalized SMILES as sweet. Performance as measured by a one-vs-rest AUROC consistently improved for FART models compared to XGBoost baselines, which suggests that FART learned an expressive latent representation of the input molecules in which taste classes can be more easily distinguished. The Receiver Operating Characteristics (ROCs) are shown in Supplementary Fig. 2 in the SI.

To further compare the performance of FART to other deep learning methods, we also trained a Chemprop model on the same dataset. Chemprop25 is a widely used model for chemical property prediction and is based on a directed message-passing neural network (D-MPNN) architecture that uses graph representations of molecules, rather than the text-based SMILES representation, as input. We find that the Chemprop model performs similarly to the unaugmented FART model as well as the XGBoost baseline. The FART model trained on the augmented dataset and using a confidence metric remains the best performing model. The fact that SMILES, unlike graphs, are easily augmented enabled the use of a confidence metric that meaningfully increases the reliability of predictions without adding major computational overhead.

Molecular tastants are not confined to a single label however, the archetypical example being bittersweet compounds30. In the FART dataset, such compounds led to repeated entries with the same canonicalized SMILES for each taste associated. Indeed, of the 409 molecules in the dataset with multiple tastes associated, 152 (37%) only have “undefined" as an additional label and 165 (40%) are labeled as bittersweet. Because FART outputs a probability distribution across the taste labels, one could expect the probability to be higher for the two (and in rare cases three) original labels compared to the other taste classes. In other words, a bittersweet molecule should have higher probabilities for both bitter and sweet while ignoring the remaining classes. We find that in most cases FART strongly favors one out of the two or more labels, which is unsurprising given the model was also trained and evaluated on single labeled data points.

Molecules that could be associated with multiple tastes during data curation are given as duplicates in the dataset with the same canonicalized SMILES but different taste labels. To test how FART augmented evaluates on these multi-label molecules, we considered all labels above a probability of 0.2, i.e. higher than a uniform distribution across the classes, as relevant rather than considering the highest probability as done for normal evaluation. Compounds which only had “undefined" as an additional label were excluded. Of the 257 remaining multi-class molecules, which were all seen during training, FART correctly identifies the correct labels exactly for just 25 molecules. In 20 cases, FART associates too many labels. In all of these cases the additional label is “undefined". Overwhelmingly, however, FART collapses the multiple labels into a single one which happens for 121 molecules. In 108 of these cases, only a single but correct label was predicted, see also Supplementary Fig. 5 in the SI. Ultimately, during training as well as inference, FART is tasked with producing a single output label which helps explain why the models struggle with this parallel multi-class prediction task. More work is needed to develop models that more accurately reflect the nature of multi-class tastants.

The advantage of including an “undefined" class for taste prediction is that FART is capable of flagging tasteless molecules as well as compounds that are plausibly outside the domain of application, i.e. molecular structures that are too distinct from the train set to allow for meaningful predictions. For example, instead of forcing sodium chloride erroneously into one of the four taste labels that we have collected data on, FART classifies it as undefined. Similarly, FART classifies saturated alkanes, which are not water-soluble, as undefined as well.

We find that the best FART model trained on the augmented dataset and using a confidence metric results in an accuracy above 90%, outperforming both tree-based baseline models such as XGBoost as well as other deep learning methods such as Chemprop. The improved performance of FART justifies the added computational complexity relative to baseline models. FART remains efficient in both training and inference by relying on tokenization instead of computationally expensive graph construction, employing a lightweight confidence metric based on SMILES augmentation, and utilizing a comparatively fast underlying language model. Nonetheless, we were curious how FART, which predicts five taste labels in parallel, compares to previous models that were specifically trained on a single taste label.

Comparison to binary classifiers

Compared to state-of-the-art classifiers tasked with binary taste prediction (sweet/non-sweet, bitter/non-bitter, sour/non-sour or umami/non-umami), FART consistently performs better despite having been trained on the more challenging task of multi-class prediction, see Table 2. Note that while the performance metrics were re-calculated for FART based on the respective binary prediction tasks, the test set FART was evaluated on is significantly larger (2254 vs below 500) and more diverse than the homogeneous test sets compiled for binary prediction such as sweet/non-sweet. Therefore, FART has to learn a much broader chemical space and has to embed information for five rather than two labels. Despite this, FART performs superior compared to these state-of-the-art binary classifiers.

Interpretability

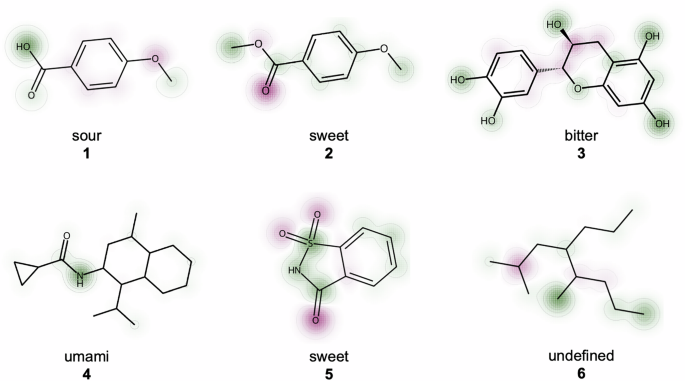

To better understand how FART arrives at its predictions, we implemented an interpretability technique based on integrating the gradients of the neural network’s output along the path from a baseline input (the zero embedding) to the input at hand23. For FART, this enables the visualization of atoms and functional groups that contribute positively toward the prediction of a given label (e.g., “sour") in green, while those that detract from this prediction are highlighted in red. We found that in many cases this analysis reproduces previously known patterns in food chemistry.

To test this interpretability framework, we deployed FART on six compounds with known labels as shown in Fig. 2, where two (compounds 4 and 6) were not in the dataset collected in this work. For molecules labeled as sour for example, FART typically highlights the acid group, as is the case for p-anisic acid (1). Notably, even upon a small chemical change of essentially one carbon atom, where the acid group is esterified to yield methyl anisate (2), FART reassigns a new label which can also be seen in the integrated gradients for the new compound. The methyl group of the ester is now relevant for the predicted sweet taste. This assignment has some grounding in truth as esters broadly, and methyl anisate in particular, are common flavor and odor chemicals with typically fruity flavors and aromas, which would likely be labeled as sweet by a human panelist31,32.

Positive contributions regarding the assigned label are highlighted in green, and negative ones in red. p-Anisic acid (1) is correctly classified as sour (pKa 4.47) and yields methyl anisate (2) after esterification, which is found in star anise and has a sweet and fruity flavor. Catechin (3) is a bitter member of the polyphenol subgroup called flavenoids. The amide (4) is an analog of an umami flavor enhancer called cyclopropanecarboxylic acid (2-isopropyl-5-methyl-cyclohexyl)-amide. Saccharin (5) is a common artificial sweetener and 2,5-dimethyl-4-propyloctane (6) is a water unsoluble alkane that is not known to elicit any taste.

Similarly, FART gave higher weight to the polyphenol or more specifically the flavenoid scaffold of catechin (3) which is a shared substructure among many bitter-tasting compounds33. This approach to interpretability does also extend to molecules that were not part of our dataset, such as compound 4, which is an analog of a known umami tastant, cyclopropanecarboxylic acid (2-isopropyl-5-methyl-cyclohexyl)-amide but which does not exist in the dataset. Interestingly, the amide group, a feature of many umami molecules, particularly peptides, is overwhelmingly responsible for this classification. While sensible in the context of this molecule, this prediction also highlights some of the limitations of this approach as an amide group alone is not sufficient to elicit an umami taste. For the artificial sweetener saccharine (5), the sultam functional group with its sulfur-nitrogen bond is highlighted which is a pattern shared for example with another sweetener, cyclamate34. Seemingly, the model negatively attributes oxygen groups for this label, possibly because these oxygen double bonds are more associated with the label “sour", as this motif prominently features in many acids such as carboxylic or sulfuric acid. This might similarly explain the same negative attribution for methyl anisate (2).

Even in well-behaved cases, however, not every contribution in the resulting heatmap may be individually interpretable or chemically intuitive. In the case of saccharine for example, it is less clear why the oxygen atoms are particularly anti-correlated with sweetness. Similarly for the alkane 6, which also does not feature in the dataset, it is rather the absence of any features that leads to it being classified as undefined. Nonetheless, these interpretability tools help rationalize FART’s predictions and may aid in producing analogs by highlighting parts of the structure that can be altered without changing the label, see Supplementary Fig. 4 in the SI for an example use case. While FART achieves very high overall accuracy, certain general properties of tastants are not well modeled. Furthermore, despite stereochemistry being encoded in SMILES, FART generally lacks sensitivity to stereocenter inversions. For example, while the natural stereoisomer L-glutamate has an umami taste, its counterpart D-glutamate is tasteless35. FART incorrectly categorizes both compounds as having umami flavor.

Discussion

The chemical language model FART is able to compete with state-of-the-art taste prediction models and baseline tree-based methods. Enabled by the fine-tuning on a large training set of 15,025 labeled small molecule tastants, FART is the first model capable of predictions across all four major taste categories (sweet, sour, bitter, umami) while summarizing other molecules into a fifth category ("undefined"). FART provides robust, interpretable and fast predictions based on chemical structure alone, which avoids the computational overhead of calculating physical or chemical descriptors.

To the best of our knowledge, the dataset presented in this work includes the large majority of high-quality labeled taste data publicly available today and may serve as a useful resource for future work on small molecule-taste prediction. Nonetheless, the scarcity of data on umami compounds makes this a particularly challenging taste category also for FART and future work should focus on improving predictions for these rarer taste categories as well as extending these predictions to peptides and other biological macromolecules. Future efforts should also focus on evaluating these models prospectively and experimentally validating taste predictions across different populations. Progress is already underway to build increasingly powerful chemical language models that could be fine-tuned using a similar approach as outlined here. However, we believe meaningful breakthroughs in taste prediction will not come from methodological advancements alone but require the collection of larger, well-curated, and accessible datasets. Automated, experimental taste classification remains a large unmet need in the field of food chemistry today and would enable the large-scale collection of data as well as rapid validation of model predictions.

An advantage of using language models pre-trained on chemical data is that they typically generalize better18,36 to unseen molecules which is particularly relevant when applying these models for the discovery of novel small molecule tastants. In the future, FART and methods like it may be used to perform in-silico screenings of large chemical spaces to discover novel tastants with desirable properties similar to modern drug discovery approaches37. Future work should also focus on improving model architectures so that molecules with multiple associated tastes are correctly assigned multiple labels as well as making FART more sensitive toward stereochemistry. We appreciate that the use of synthetic chemicals to enhance or alter food flavor is not universally desirable. However, machine learning tools such as FART may also plausibly aid food scientists during the analysis of natural foodstuffs by shortlisting flavor-rich small molecules from a mixture of compounds. In this way, only a small number of promising compounds would need to be experimentally validated. Such an approach is highly dependent on the robustness of prediction and would motivate extending these predictions to peptides and other macromolecules as well.

Artificial intelligence has a role in assisting the food scientist of tomorrow. Taste prediction tools offer great utility for the discovery, development as well as analysis of small molecule tastants even if they cannot replace the need for thorough experimental validation. Machine learning tools like FART could open up new and exciting chemical spaces for food scientists going forward, helping bring molecular food science into the age of big data and deep learning.

Methods

Dataset

One aim of this work was to curate a large, high-quality dataset of molecular tastants from publicly available data. Every molecule was assigned a taste label: sweet, bitter, sour, umami, or undefined. The category of “undefined" contains molecules, not clearly assignable to one of the other categories, explicitly including compounds labeled as salty or tasteless. The dataset utilizes a standard text-based representation of molecules, called SMILES20. Every molecule is labeled with a taste: sweet, bitter, sour, umami, and undefined. Where the last category encompasses compounds that had either previously been established as tasteless or compounds that were present in the source databases but for which no clear taste could be associated, particularly compounds with odor rather than taste labels. Salty was excluded as a taste category because only a very small number of molecules actually produce this taste apart from sodium and chloride ions21. Data curation was handled with the cheminformatics package RDKit38.

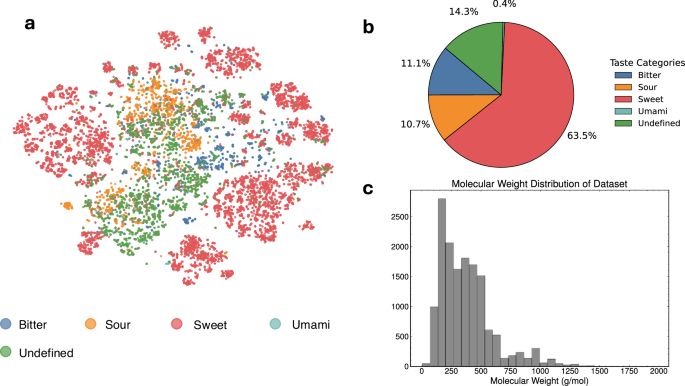

The FART dataset encompasses small molecules with an average molecular weight of 374 Da ±228, see Fig. 3c. To visualize the distribution of the taste labels over the chemical space covered by the dataset, a t-SNE plot (Fig. 3a) was generated from Morgan fingerprints27. Figure 3b highlights the strong imbalance of the taste classes of the dataset.

The FART dataset aggregates experimental data from six sources39,40,41,42,43, see also Supplementary Table 1 in the SI. ChemTasteDB is one of the largest public databases of tastants and contains 2944 organic and inorganic tastants from which 2177 were used to train FART. The database was curated from literature39. Biological macromolecules were generally excluded, notably longer peptides, which we do not consider in our approach. Previous work has explored this chemical space particularly in the context of umami and bitter prediction44,45,46.

FlavorDB aggregates data on both gustatory and olfactory sensation from a number of sources40. The FART database uses the “flavor profile" given by FlavorDB as most molecules do not have a specific entry for taste. Data from FlavorDB will thus be more heterogeneous given that some of these flavor profiles will actually be based on smell, not taste. Care was taken to only include compounds with unambiguous taste adjectives in the dataset. From the 25,595 total molecules, 10,372 could be clearly attributed to one of the four taste categories. FlavorDB is dominated by sweet molecules and is also the source of the data imbalance in the final dataset. PlantMolecularTasteDB contains 1,527 phytochemicals with associated taste of which 906 were used for this dataset41. The database is based on both literature and other databases, some of which overlap with other sources used for FART. To obtain more data on bitter compounds, a database of ligands that bind to the human bitter receptor (TAS2) was also considered which yielded 53 previously unseen bitter compounds42. Combined, these datasets represent the largest publicly available dataset of unique, labeled molecule-taste pairs. However, there remains significant heterogeneity in how these datasets reference results, how experimental data was collected, and certain biases in terms of cultural or genetic preferences may persist. Thorough curation is thus needed to guarantee standardized data and to reduce noise as much as possible.

Water-soluble, acidic molecules (pKA between 2 and 7), assumed to taste sour47, were collected from an ongoing project based with the International Union of Pure and Applied Chemistry (IUPAC) digitizing three high-quality sources of pKA values in the literature48,49,50. Sour taste is influenced by other factors such as cell permeability, which is the reason why organic acids taste more acidic than inorganic acids such as HCl at the same pH. Nonetheless, acidic molecules can be assumed to also taste sour47. A total of 1,513 acids could be obtained in this way although it should be noted that sour taste, as all tastes, is concentration-dependent and that some of the weaker acids may not be picked up by humans. The pKA values refer to the most acidic proton and are all measured between 15 and 30 ∘C in water, i.e. around physiological temperature, excluding any acids that are not water-soluble. Lastly, 19 umami-tasting molecules were collected from the literature43 of which 11 were not found in any other database.

The combined dataset was reduced to the taste label associated with a canonicalized SMILES representation. The open-source cheminformatics package RDKit38 was used to further curate the dataset. First, all SMILES that did not allow the generation of a valid molecular graph were excluded. To avoid solvent-containing molecules, all entries with multiple uncharged fragments were removed. Charged molecules were additionally excluded to prevent substances with missing counter ions. Labels were visually inspected and manually corrected for around 100 molecules. All SMILES were standardized with the default RDKit standardization procedure including canonicalization. Duplicates could be removed with the help of these canonicalized SMILES, see Supplementary Fig. 1 in the SI for a summary.

While only very few entries with invalid SMILES (21) or charged molecules (342) needed to be removed, the number of entries containing multiple neutral fragments (3783) was more significant. These are typically SMILES that contain the tastant as well as a solvent which needs to be removed. Filtering for molecules below 2000 Dalton in molecular weight (1) was introduced to avoid SMILES strings that are longer than what the context window of ChemBERTa allows. The dataset was further inspected by eye and some mislabeled entries were manually corrected. The duplicate removal (14,685) reduced the dataset by almost half to a final size of 15,025 entries, see Supplementary Fig. 1. The large number of duplicates underlines the significant overlap among the databases used. When duplicate entries existed from different sources, which source would be given in the final dataset was arbitrarily determined based on the index. The final dataset exhibits a strong data imbalance, where sweet represents over 60% and umami less than 1% of the data.

The curated dataset was further enriched by general information (PubChemID, IUPAC name, molecular formula, molecular weight, InChI, InChIKey), accessed through the PubChem API51. The dataset, FartDB, was published in agreement with the FAIR principles14 and can be accessed through several different interfaces to encourage its use by other research projects.

Visualization

The t-SNE plot, see Fig. 3a, (perplexity = 30) was generated using 1024-Morgan fingerprints (radius = 2) based on PCA initialization and the Jaccard distance metric. Heatmap plots for interpretability were generated using custom code utilizing the SimilarityMaps functionality of RDKit, see Fig. 2.

Tree-based classifiers: XGBoost, random forest

Tree-based ensemble models such as random forest (RF) or XGBoost26 are often considered strong baseline models in classification tasks for more complex model architectures, such as transformers, given their robust performance, efficient training, and low model complexity. A RF model is an ensemble learning method that trains multiple, in this case 150, decision trees during the training process, combining their predictions to improve accuracy and mitigate overfitting on the training data. A XGBoost model is an ensemble learning method that builds multiple decision trees sequentially, optimizing each tree to correct errors from the previous ones, thereby improving accuracy and mitigating overfitting through regularization techniques.

In this work, three different tree-based classifiers were trained including hyperparameter optimization. The two XGBoost models were trained either using 1024-Morgan (radius = 2) fingerprints or these same fingerprints concatenated with 15 descriptors calculated using Mordred52. These predictors had been previously found to be particularly correlated with taste28. The models were evaluated with a multi-class logarithmic loss function, results are given in Table 1.

FART models

For our transformer-based model, we utilized ChemBERTa18,19, which has been pre-trained on 77 million SMILES strings using a masked language modeling approach. Here, a percentage of the input is randomly masked, and the model is trained to predict the masked parts of the SMILES string. This allows the model to learn contextualized representations of chemical structures in a self-supervised manner, capturing both local and global molecular features. We fine-tuned ChemBERTa on our taste prediction dataset using a categorical cross-entropy loss function with a learning rate of 10−5 and a batch size of 16. We experimented with three different training configurations: one model was trained for 20 epochs on the original dataset and a second model was trained for 2 epochs on a 10-fold augmented dataset. The performance of all models is summarized in Table 1. The Chemprop model were trained as described in the original publication using default hyperparameter optimization25.

To evaluate multi-class classification performance, macro and weighted averages are commonly used to summarize metrics across all classes. The macro (unweighted) average is computed with

$${\rm{Macro}}=\frac{1}{C}\mathop{\sum }\limits_{i=1}^{C}{M}_{i},$$

(1)

where C is the number of classes and Mi is the metric (e.g., precision, recall, F1-score) for the ith class. The weighted average is given by

$${\rm{Weighted}}=\mathop{\sum }\limits_{i=1}^{C}\frac{{n}_{i}}{N}\cdot {M}_{i},$$

(2)

where ni is the number of instances in class i, N is the total number of instances across all classes, and Mi is the metric for the ith class.

All transformer models were trained on multiple NVIDIA T4 GPUs in Google Cloud using the HuggingFace Transformers library53. For all experiments, the ChemBERTa checkpoint seyonec/SMILES_tokenized_PubChem_shard00_160k on HuggingFace was used, consisting of 6 layers and a total of 83.5 million parameters. Training on the unaugmented dataset was run for 20 epochs, while training on the augmented dataset was run for 2 epochs. A weight decay of 0.01 was applied, and a batch size of 16 was used. Both parameters follow standard values for fine-tuning and have not been optimized for this problem. Standard values for fine-tuning were also used for all other necessary parameters. Training was continued until overfitting was observed, as indicated by the loss function on the evaluation dataset, or until the loss had saturated. At this point, the best model checkpoint, corresponding to the lowest evaluation loss, was selected for further analysis.

Confidence metric

Similar to linguistic synonymy, where multiple words share the same meaning, distinct SMILES representations can map to the same underlying molecular structure. To leverage this property, we generated an ensemble of 10 synonymous SMILES for each molecule in our dataset. The exact number of SMILES augmentation has been arbitrarily set and could alternatively be treated as an optimizable hyperparameter. The exact number of augmentations is arbitrary and can be defined by the user while ten is a reasonable number to expect even for smaller molecules. We then performed inference on the entire ensemble, obtaining individual predictions for each SMILES variant.

To aggregate these results, we employed a voting procedure across the ensemble’s predictions. Using the strictest threshold, where all 10 predictions had to agree for a label to be assigned by the model, we still retained predictions for 94% of the dataset while boosting the model’s accuracy to above 91%. Both the transient augmentation and subsequent prediction on these SMILES adds to the computational time. The confidence metric suggested here is straightforward to implement and provides a robust indication of prediction reliability in addition to the interpretability framework.

Interpretability framework

Integrated gradients23 is a method for attributing a deep neural network’s prediction to its input features. The core idea is to integrate the gradients of the output taken along a linear path from a baseline input to the input at hand, see Eq. (3). Mathematically, for a neural network F(x), an input x and baseline input \({x}^{{\prime} }\) (e.g. the zero input), the attribution for the ith feature is:

$${{\rm{IntegratedGrads}}}_{i}(x)=\left({x}_{i}-{x}_{i}^{{\prime} }\right)\times \mathop{\int}\nolimits_{0}^{1}\frac{\partial F\left({x}^{{\prime} }+\alpha \times \left(x-{x}^{{\prime} }\right)\right)}{\partial {x}_{i}}\,{\rm{d}}\alpha .$$

(3)

The method satisfies important axioms like sensitivity (if inputs differ in one feature but have different predictions, that feature should receive attribution) and implementation invariance (attributions are identical for functionally equivalent networks). The method is readily available for Hugging Face Transformer models through the transformers-interpret package54.

Data availability

The data used and generated in our study is available at https://github.com/fart-lab/fart.git. All code used in this study is available at https://github.com/fart-lab/fart.git.

References

Jorge Regueiro, N. N. & Simal-Gándara, J. Challenges in relating concentrations of aromas and tastes with flavor features of foods. Crit. Rev. Food Sci. Nutr. 57, 2112–2127 (2017).

Small, D. M. Flavor is in the brain. Physiol. Behav. 107, 540–552 (2012).

Auvray, M. & Spence, C. The multisensory perception of flavor. Conscious. Cogni. 17, 1016–1031 (2008).

Tahara, Y. & Toko, K. Electronic tongues - a review. IEEE Sens. J. 13, 3001–3011 (2013).

Latha, R. S. & Lakshmi, P. K. Electronic tongue: an analytical gustatory tool. J. Adv. Pharmaceutical Technol. Res. 3, 3–8 (2012).

Lindemann, B. Taste reception. Physiological Rev. 76, 719–766 (1996).

Walters, W. P. & Barzilay, R. Applications of deep learning in molecule generation and molecular property prediction. Acc. Chem. Res. 54, 263–270 (2021).

Malavolta, M. et al. A survey on computational taste predictors. Eur. Food Res. Technol. 248, 2215–2235 (2022).

Rojas, C., Ballabio, D., Consonni, V., Suárez-Estrella, D. & Todeschini, R. Classification-based machine learning approaches to predict the taste of molecules: a review. Food Res. Int. 171, 113036 (2023).

Song, Y. et al. A comprehensive comparative analysis of deep learning based feature representations for molecular taste prediction. Foods 12, 3386 (2023).

Song, R., Liu, K., He, Q., He, F. & Han, W. Exploring bitter and sweet: the application of large language models in molecular taste prediction. J. Chem. Inf. Model. 64, 4102–4111 (2024).

Qian, C., Tang, H., Yang, Z., Liang, H. & Liu, Y. Can large language models empower molecular property prediction? Preprint at https://arxiv.org/abs/2307.07443 (2023).

Zhang, A. K. et al. Language model developers should report train-test overlap. Preprint at https://arxiv.org/abs/2410.08385 (2024).

Wilkinson, M. D. et al. The FAIR Guiding Principles for scientific data management and stewardship. Sci. Data 3, 160018 (2016).

Vaswani, A. et al. Attention is all you need. In Advances in neural information processing systems, 30 (eds, Guyon, I. et al.) (Curran Associates, Inc., 2017). https://proceedings.neurips.cc/paper_files/paper/2017/file/3f5ee243547dee91fbd053c1c4a845aa-Paper.pdf.

Radford, A., Narasimhan, K., Salimans, T. & Sutskever, I. Improving language understanding by generative pretraining. OpenAI Technical Report. https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf (2018).

Devlin, J., Chang, M.-W., Lee, K. & Toutanova, K. BERT: pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies,. Vol. 1 (Long and Short Papers). 4171–4186. https://aclanthology.org/N19-1423/ (Association for Computational Linguistics, 2019).

Chithrananda, S., Grand, G. & Ramsundar, B. ChemBERTa: large-scale self-supervised pretraining for molecular property prediction. Preprint at https://arxiv.org/abs/2010.09885 (2020).

Ahmad, W., Simon, E., Chithrananda, S., Grand, G. & Ramsundar, B. ChemBERTa-2: towards chemical foundation models. Preprint at https://arxiv.org/abs/2209.01712 (2022).

Weininger, D. Smiles, a chemical language and information system. 1. introduction to methodology and encoding rules. J. Chem. Inf. Comput. Sci. 28, 31–36 (1988).

Taruno, A. & Gordon, M. D. Molecular and cellular mechanisms of salt taste. Annu. Rev. Physiol. 85, 25–45 (2023).

Bibal, A. et al. Is attention explanation? an introduction to the debate. In Proceedings of the 60th annual meeting of the association for computational linguistics (volume 1: long papers) (eds, Muresan, S., Nakov, P. & Villavicencio, A.) 3889–3900. https://aclanthology.org/2022.acl-long.269 (Association for Computational Linguistics, 2022).

Sundararajan, M., Taly, A. & Yan, Q. Axiomatic attribution for deep networks. In Proceedings of the 34th International Conference on Machine Learning. Vol. 70, 3319–3328. https://proceedings.mlr.press/v70/sundararajan17a.html (PMLR, 2017).

Grinsztajn, L., Oyallon, E. & Varoquaux, G. Why do tree-based models still outperform deep learning on typical tabular data? In Advances in Neural Information Processing Systems. Vol. 35, 507–520. https://proceedings.neurips.cc/paper_files/paper/2022/file/0378c7692da36807bdec87ab043cdadc-Paper-Datasets_and_Benchmarks.pdf (Curran Associates, Inc., 2022).

Heid, E. et al. Chemprop: a machine learning package for chemical property prediction. J. Chem. Inf. Model. 64, 9–17 (2024).

Chen, T. & Guestrin, C. XGBoost: a scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. 785–794. https://doi.org/10.1145/2939672.2939785 (Association for Computing Machinery, 2016).

Morgan, H. L. The generation of a unique machine description for chemical structures-a technique developed at chemical abstracts service. J. Chem. Documentation 5, 107–113 (1965).

Androutsos, L. et al. Predicting multiple taste sensations with a multiobjective machine learning method. npj Sci. Food 8, 47 (2024).

Bjerrum, E. J. SMILES enumeration as data augmentation for neural network modeling of molecules. Preprint at http://arxiv.org/abs/1703.07076 (2017).

Di Pizio, A., Shoshan-Galeczki, Y. B., Hayes, J. E. & Niv, M. Y. Bitter and sweet tasting molecules: It’s complicated. Neurosci. Lett. 700, 56–63 (2019).

FDA. Substances added to food inventory. https://hfpappexternal.fda.gov/scripts/fdcc/index.cfm?set=FoodSubstances&id=METHYLANISATE.

Brault, G., Shareck, F., Hurtubise, Y., Lépine, F. & Doucet, N. Short-chain flavor ester synthesis in organic media by an e. coli whole-cell biocatalyst expressing a newly characterized heterologous lipase. PLOS One 9, 1–9 (2014).

Drewnowski, A. The science and complexity of bitter taste. Nutr. Rev. 59, 163–169 (2001).

Chattopadhyay, S., Raychaudhuri, U. & Chakraborty, R. Artificial sweeteners – a review. J. Food Sci. Technol. 51, 611–621 (2014).

Nelson, G. et al. An amino-acid taste receptor. Nature 416, 199–202 (2002).

Honda, S., Shi, S. & Ueda, H. R. Smiles transformer: pre-trained molecular fingerprint for low data drug discovery. Preprint at https://arxiv.org/abs/1911.04738 (2019).

Wallach, I. et al. Ai is a viable alternative to high throughput screening: a 318-target study. Sci. Rep. 14, 7526 (2024).

Landrum, G. et al. rdkit/rdkit: 2020_03_1 (Q1 2020) release, https://doi.org/10.5281/zenodo.3732262 (2020).

Rojas, C. et al. ChemTastesDB: a curated database of molecular tastants. Food Chem. Mol. Sci. 4, 100090 (2022).

Garg, N. et al. FlavorDB: a database of flavor molecules. Nucleic Acids Res. 46, D1210–D1216 (2017).

Gradinaru, T.-C., Petran, M., Dragos, D. & Gilca, M. PlantMolecularTasteDB: a database of taste active phytochemicals. Front. Pharmacol. 12 (2022).

Bayer, S. et al. Chemoinformatics view on bitter taste receptor agonists in food. J. Agric. Food Chem. 69, 13916–13924 (2021).

Suess, B., Festring, D. & Hofmann, T. 15 - Umami compounds and taste enhancers. In Flavour development, analysis and perception in food and beverages, Woodhead Publishing Series in Food Science, Technology and Nutrition, (eds, Parker, J., Elmore, J. & Methven, L.) 331–351 (Woodhead Publishing, 2015).

Charoenkwan, P. et al. iBitter-SCM: identification and characterization of bitter peptides using a scoring card method with propensity scores of dipeptides. Genomics 112, 2813–2822 (2020).

Charoenkwan, P., Yana, J., Nantasenamat, C., Hasan, M. M. & Shoombuatong, W. iUmami-SCM: a novel sequence-based predictor for prediction and analysis of umami peptides using a scoring card method with propensity scores of dipeptides. J. Chem. Inf. Model. 60, 6666–6678 (2020).

Charoenkwan, P. et al. iBitter-Fuse: a novel sequence-based bitter peptide predictor by fusing multi-view features. Int. J. Mol. Sci. 22, 8958 (2021).

Roper, S. D. Taste: Mammalian taste bud physiology, https://www.sciencedirect.com/science/article/pii/B9780128093245029084 (2017).

Perrin, D. D. Dissociation constants of organic bases in aqueous solution, supplement, (IUPAC, Butterworths, 1965).

Perrin, D. D. Dissociation constants of organic bases in aqueous solution (IUPAC, Butterworths, 1972).

Serjeant, E. P. & Dempsey, B. Ionisation constants of organic acids in aqueous solution, Oxford IUPAC Chemical Data Series (Oxford/Pergamon, 1979).

Kim, S. et al. PubChem 2023 update. Nucleic Acids Res. 51, D1373–D1380 (2022).

Moriwaki, H., Tian, Y.-S., Kawashita, N. & Takagi, T. Mordred: a molecular descriptor calculator. J. Cheminformatics 10, 4 (2018).

Wolf, T. et al. Transformers: state-of-the-art natural language processing. In Proceedings of the 2020 conference on empirical methods in natural language processing: system demonstrations (eds, Liu, Q. & Schlangen, D.) 38–45. https://aclanthology.org/2020.emnlp-demos.6. (Association for Computational Linguistics, 2020).

Pierse, C. Transformers interpret, https://github.com/cdpierse/transformers-interpret (2023).

Tuwani, R., Wadhwa, S. & Bagler, G. Bittersweet: building machine learning models for predicting the bitter and sweet taste of small molecules. Sci. Rep. 9, 7155 (2019).

Fritz, F., Preissner, R. & Banerjee, P. Virtualtaste: a web server for the prediction of organoleptic properties of chemical compounds. Nucleic Acids Res. 49, W679–W684 (2021).

Bo, W. et al. Prediction of bitterant and sweetener using structure-taste relationship models based on an artificial neural network. Food Res. Int. 153, 110974 (2022).

Lee, J., Song, S. B., Chung, Y. K., Jang, J. H. & Huh, J. Boostsweet: learning molecular perceptual representations of sweeteners. Food Chem. 383, 132435 (2022).

Yang, Z.-F. et al. A novel multi-layer prediction approach for sweetness evaluation based on systematic machine learning modeling. Food Chem. 372, 131249 (2022).

Acknowledgements

The authors thank Prof. Dr. Kjell Jorner, Mr. Stefan Schmid, and Ms. Lauriane Jacot-Descombes for an inspirational, inaugural rendition of the course "529-0150-00L Digital Chemistry" at ETH Zurich which kickstarted this work. We thank Mr. Stefan Schmid and Ms. Carole Zermatten for their helpful comments on an earlier version of this manuscript. No funding was acquired for this study.

Funding

Open access funding provided by Swiss Federal Institute of Technology Zurich.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zimmermann, Y., Sieben, L., Seng, H. et al. A chemical language model for molecular taste prediction. npj Sci Food 9, 122 (2025). https://doi.org/10.1038/s41538-025-00474-z

Received: 29 December 2024

Accepted: 04 June 2025

Published: 05 July 2025

DOI: https://doi.org/10.1038/s41538-025-00474-z

.png)