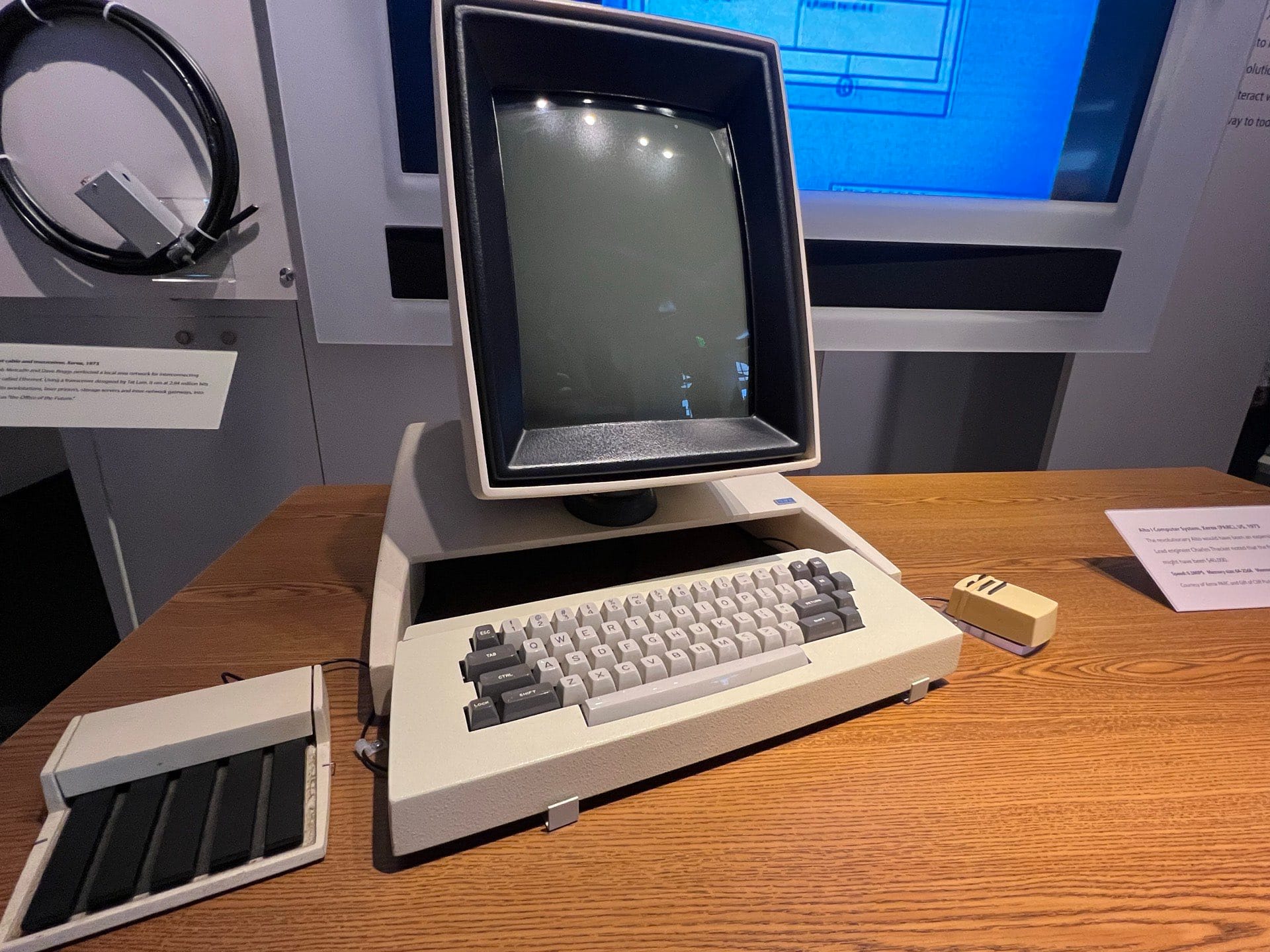

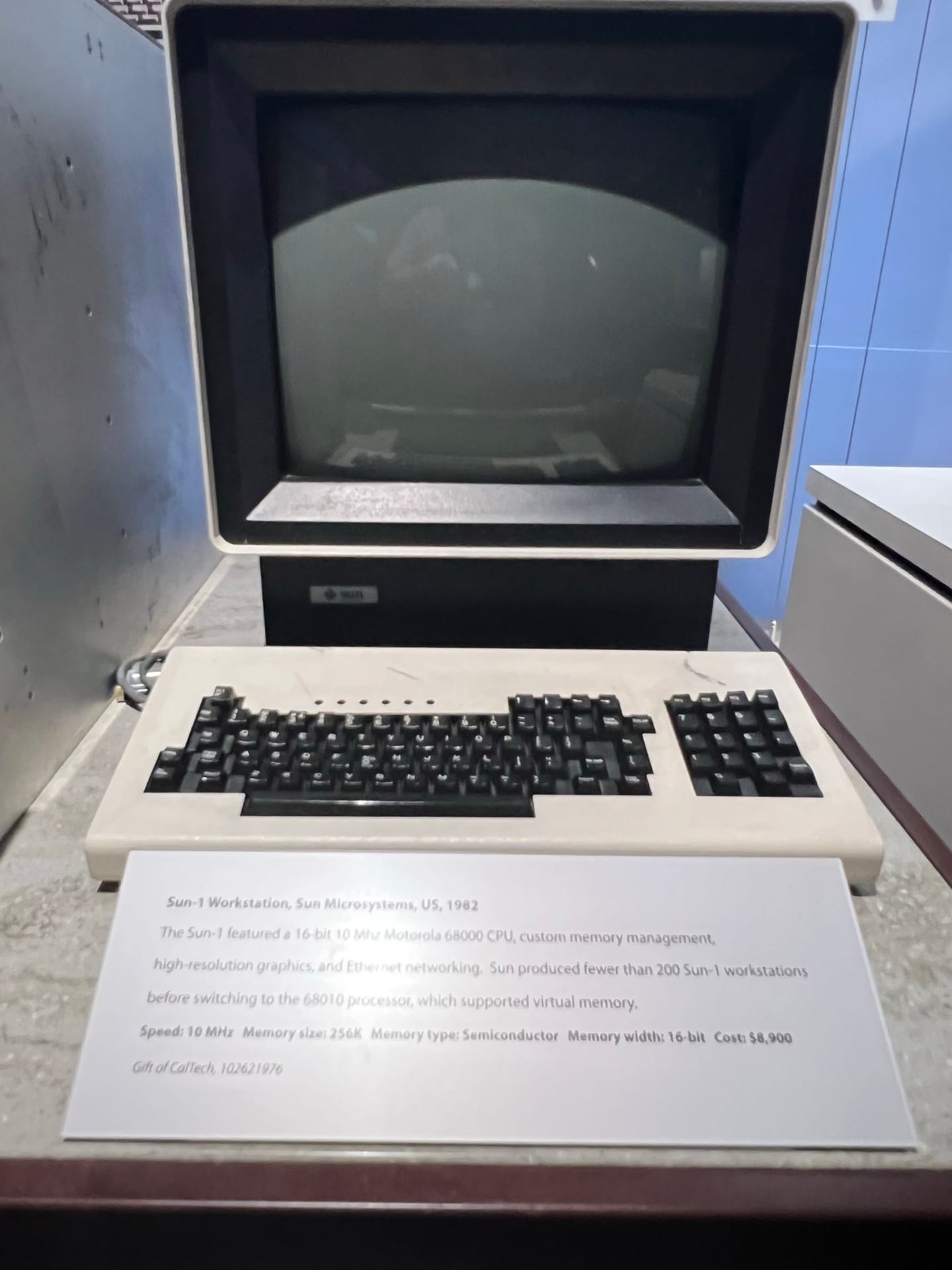

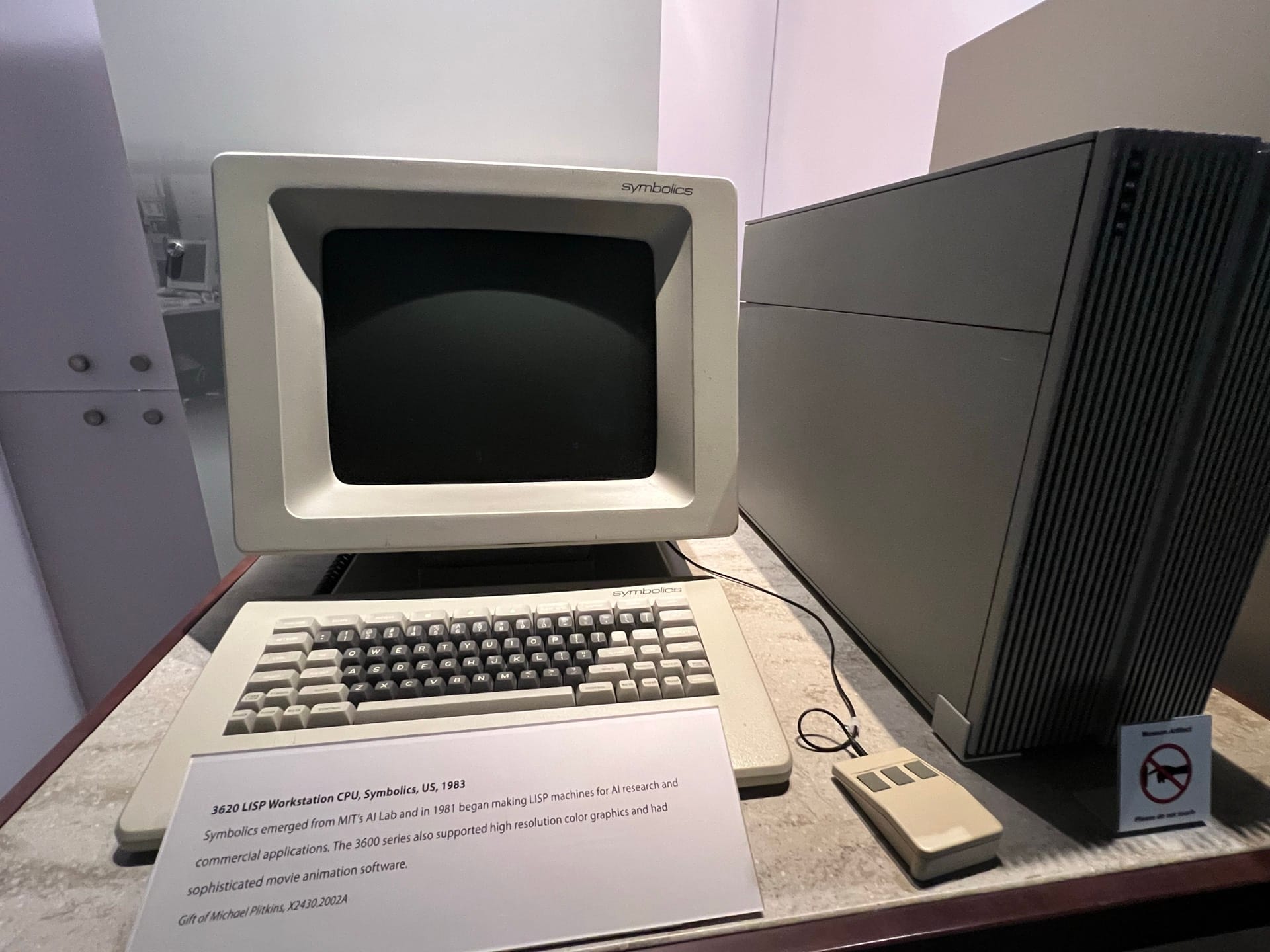

So I'm currently over in San Francisco. I've been here for almost two weeks now. I'll be heading home to my family in a couple of days. But over the weekend, I had the opportunity to drop into the Computer History Museum. I'm not gonna lie, being able to spend some time on a functioning PDP-1 is way up there on the bucket list.

The four classic icons of compute.

Now, something strange happened while I was down at the Computer History Museum. One of the mates I was with had an incident on their Kubernetes cluster.

Typically, if you're the on-call engineer in such a scenario, you would open your laptop, open a terminal, and then log on to the cluster manually. That's the usual way that people have been doing incident response as a site reliability engineer for a very long time.

Now, this engineer didn't pop open their terminal. Instead, from their phone, they remotely controlled a command-line coding agent and issued a series of prompts. The agent then made function calls into the cluster using standard command-line tools.

We were sitting outside the Computer History Museum, watching as the agent enumerated the cluster in a read-only fashion and correctly diagnosed a corrupted etcd database. Not only did it correctly diagnose the root cause of the cluster's issue, but it also automatically authored a 95% complete post-incident review document (a GitHub issue) with the necessary action steps for resolution before the incident was even over.

Previously, I had theorised (see my talk) that this type of thing was possible, but here we were watching it happen: a human in the loop, controlling an SRE agent and automating their own job function.

Throughout the day, I kept pondering the above, and then, while walking through the Computer History Museum, I stumbled upon this exhibit...

The Compaq 386 and the introduction of AutoCAD. If you've been following my writing, you should know by now of the analogies I like to draw between AutoCAD and software engineering.

Before AutoCAD, we used to have rooms full of architects. Then CAD came along and completely changed how architecture was practised. Architects were not only asked to do drafting; they were also expected to do design.

I think there are a lot of analogues here that explain the transition that's happening now with our profession with AI. Software engineers are still needed, but the job has evolved.

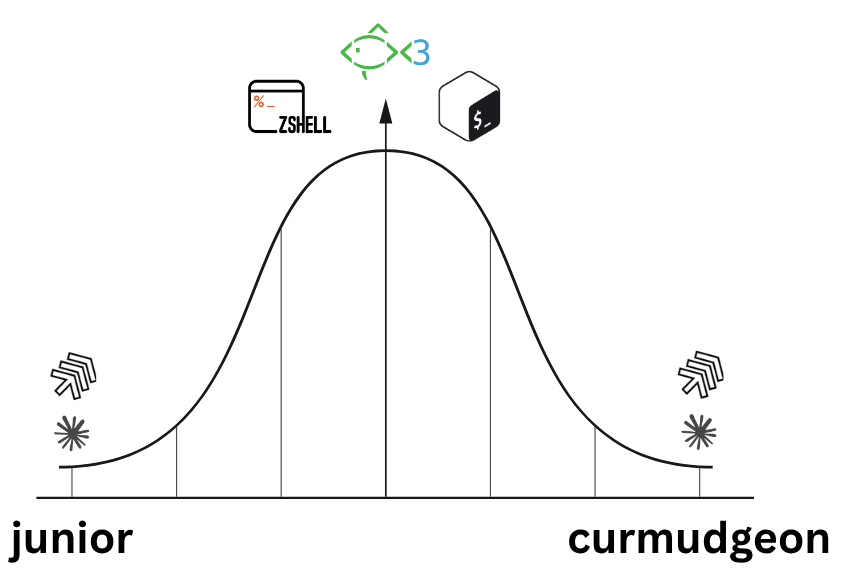

These days, I spend a lot of time thinking about what is changing and what has changed. One thing I've noticed that has changed is best illustrated in the chart below.

Now, the Amp team is fortunate enough to be open to hiring senior curmudgeons like me, as well as juniors. I was having a conversation with one of the juniors, who was about 20 years old and still in university, and I remember telling him and another coworker that he should learn the CLI, the beauty of Unix and POSIX, and how to chain commands together.

The junior challenged me and said, "But why? All I need to do is prompt."

I've been working with Unix for a long time. I've worked with various operating systems, including SunOS, HP-UX, IRIX, and Solaris, to name a few, using different shells, such as csh, ksh, Bash, zsh, and fish.

In that moment, I realised that I was the person on top of the bell curve, and when I looked at how I'd been using Amp over the last couple of weeks and other tools similar to it, I realised none of it matters anymore.

All you need to do is prompt.

These days, when I'm in a terminal emulator, I'm running a tool such as Claude Code or Amp and driving it via speech-to-text. I'm finding myself using the classic terminal emulator experience less and less with each passing day.

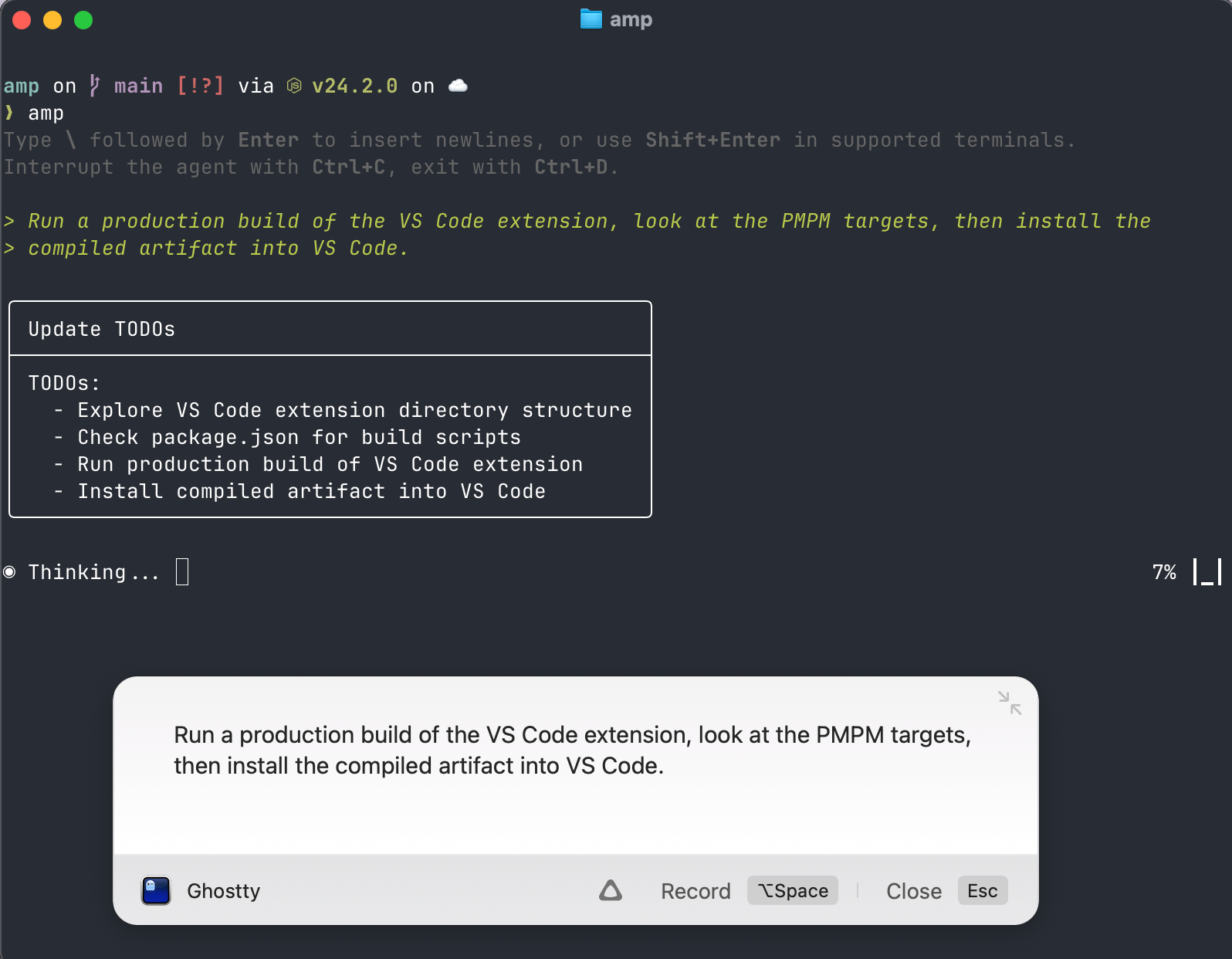

For example, here's a prompt I do often...

Run a production build of the VS Code extension, look at the PNPM targets, then install the compiled artifact into VS Code.

Now imagine 10 of these sessions running concurrently and yourself switching between them with speech-to-text.

Perhaps this is not the best use or demonstration, as it could be easily turned into a deterministic shell script. However, upon reflection, if I needed to build such a deterministic shell script, I would use a coding tool to generate it. I would no longer be creating it by hand...
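For comparison, here is roughly what the deterministic version of that prompt might look like, sketched in Python rather than shell. Treat the specifics as assumptions: the script name `package` and the path `dist/extension.vsix` are hypothetical placeholders, and it presumes both `pnpm` and the VS Code `code` command are on your PATH.

```python
import subprocess
from typing import List


def build_and_install_commands(build_script: str, vsix_path: str) -> List[List[str]]:
    """Return the two fixed steps: build with pnpm, then install the artifact."""
    return [
        # Run a script defined in package.json (the script name is an assumption).
        ["pnpm", "run", build_script],
        # Install a packaged extension from a local .vsix file.
        ["code", "--install-extension", vsix_path],
    ]


def run_all(commands: List[List[str]]) -> None:
    """Run each command in order and stop at the first failure."""
    for command in commands:
        subprocess.run(command, check=True)
```

Calling `run_all(build_and_install_commands("package", "dist/extension.vsix"))` would do the same thing every time, which is exactly what the prompt does not promise. The trade-off is that the script breaks the moment the targets are renamed, whereas the prompt asks the agent to go and look.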

So, I've been thinking that perhaps the next form of the terminal emulator will be an agent with a library of standard prompts. These standard prompts essentially function as shell scripts because they can compose and execute commands or perform activities via MCP, and there's nearly no limit to what they can do.
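To make the "library of standard prompts" idea a little more concrete, here is a minimal sketch of what one could look like. Everything in it is an assumption for illustration: the prompt names, the parameters, and the `agent` callable, which stands in for however you actually hand text to Amp, Claude Code, or another tool.

```python
from string import Template
from typing import Callable, Dict

# Hypothetical prompt library. The wording comes from the prompts in this post;
# the names and the parameters are made up for illustration.
PROMPTS: Dict[str, Template] = {
    "build-extension": Template(
        "Run a production build of the VS Code extension, look at the "
        "$package_manager targets, then install the compiled artifact into VS Code."
    ),
    "convert-images": Template(
        "I've got a bunch of images in this folder. They are HEICs. "
        "I want you to convert them to JPEGs that are ${width}px "
        "and no bigger than $max_kb kilobytes."
    ),
}


def render(name: str, **params: object) -> str:
    """Fill in a stored prompt; raises KeyError if the name or a parameter is missing."""
    return PROMPTS[name].substitute(**params)


def run(name: str, agent: Callable[[str], str], **params: object) -> str:
    """Render a stored prompt and hand it to the agent, much like invoking a script by name."""
    return agent(render(name, **params))
```

With something like this, `run("convert-images", agent, width=1920, max_kb=500)` plays the role that `./convert-images.sh 1920 500` would have played in a classic shell.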

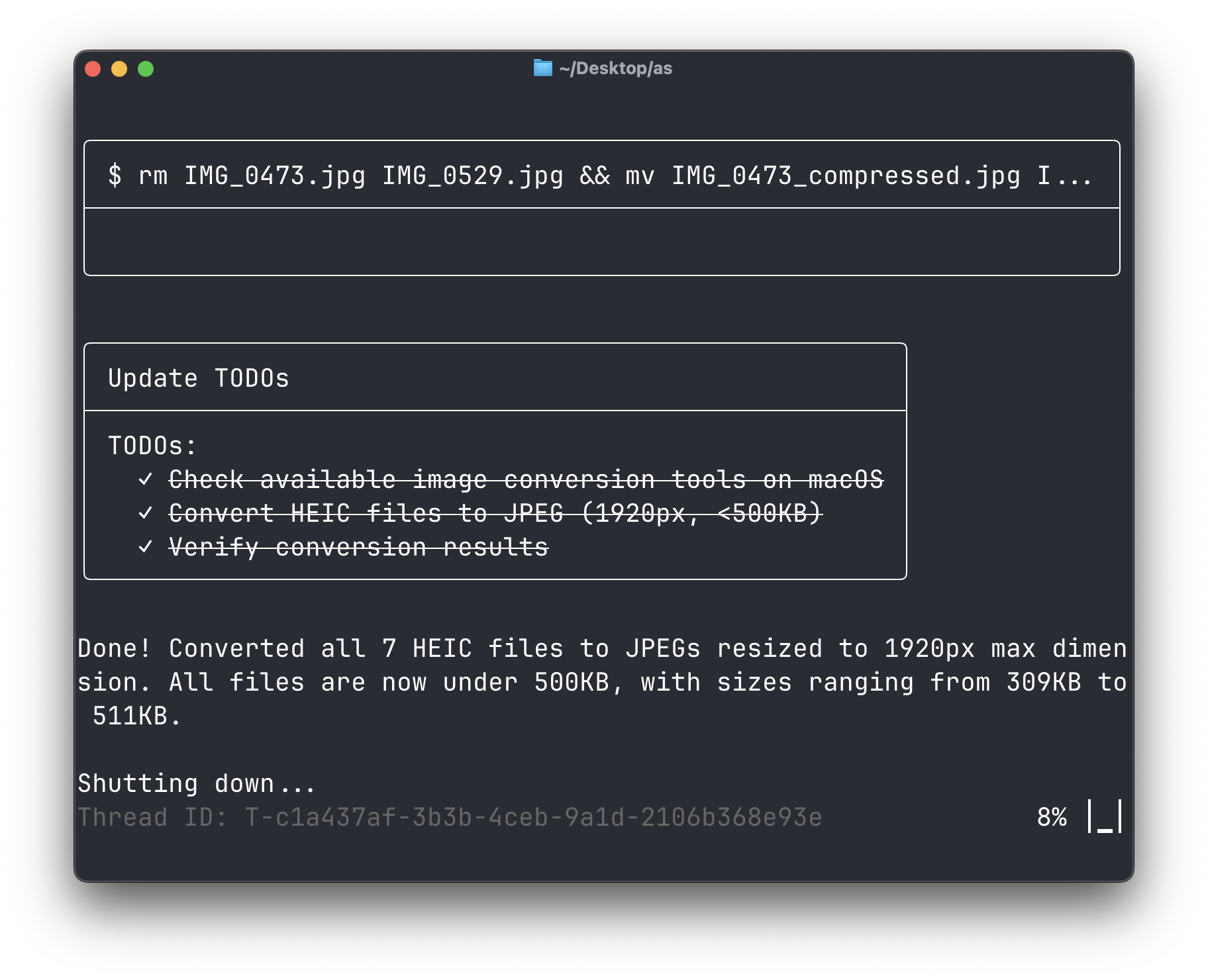

It's also pretty impressive tbh for doing one-shot type activities. For example, the images at the top of this blog post were resized with the prompt below.

"I've got a bunch of images in this folder. They are HEICs. I want you to convert them to JPEGs that are 1920px and no bigger than 500 kilobytes."

You can see the audit trail of the execution below 👇

Convert HEIC images to compressed JPEG
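If you were to write that conversion by hand instead, one possible shape is sketched below. This is not what the agent actually ran (the audit trail shows that). It assumes the third-party packages Pillow and pillow-heif are installed, that "1920px" means the longest edge, and that "500 kilobytes" means 500,000 bytes.

```python
from io import BytesIO
from pathlib import Path
from typing import Callable, Optional

MAX_EDGE_PX = 1920      # assumption: "1920px" refers to the longest edge
MAX_BYTES = 500 * 1000  # assumption: "500 kilobytes" means 500,000 bytes


def best_quality(encode: Callable[[int], bytes], max_bytes: int = MAX_BYTES,
                 lo: int = 10, hi: int = 95) -> Optional[bytes]:
    """Binary-search for the highest JPEG quality whose output fits in max_bytes.

    Assumes output size grows with quality. Returns None if nothing fits.
    """
    best = None
    while lo <= hi:
        mid = (lo + hi) // 2
        data = encode(mid)
        if len(data) <= max_bytes:
            best = data   # fits, so try a higher quality
            lo = mid + 1
        else:
            hi = mid - 1  # too big, so try a lower quality
    return best


def convert_folder(folder: Path) -> None:
    """Convert every HEIC file in `folder` to a size-capped JPEG next to it."""
    # Third-party imports are local so the search helper above stays stdlib-only.
    from PIL import Image
    from pillow_heif import register_heif_opener

    register_heif_opener()  # teaches Pillow to open HEIC files
    for src in sorted(folder.iterdir()):
        if src.suffix.lower() != ".heic":
            continue
        with Image.open(src) as opened:
            img = opened.convert("RGB")                # JPEG has no alpha channel
        img.thumbnail((MAX_EDGE_PX, MAX_EDGE_PX))      # shrinks in place, keeps aspect ratio

        def encode(quality: int, img=img) -> bytes:
            buf = BytesIO()
            img.save(buf, format="JPEG", quality=quality)
            return buf.getvalue()

        data = best_quality(encode)
        if data is None:
            print(f"skipped {src.name}: too large even at the lowest quality")
            continue
        src.with_suffix(".jpg").write_bytes(data)
```

That is forty-odd lines and two dependencies to replace two sentences of prompt, which is rather the point for one-shot jobs like this.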
