Texans were told to shower less as AI Data Centers consume staggering amounts of water. It’s not sentient, but it has demands: huge energy and water footprints. What’s unfolding in Texas isn’t isolated, but the way it’s being handled deserves a spotlight.

In this issue, I cover:

🧠 Top 5 AI Governance Moves This Week

🔍 5 More Moves to Watch (bubbling under)

💼 Real Friction Substackers Face While Job Hunting

🌀 Wrap-Up + About Me

Last week’s White House AI action plan left me momentarily disappointed about AI regulation. But it also fueled a deeper commitment to keep this community informed about how AI is shaping our lives, work, and economy. This week’s newsletter mixes hard-hitting regulation, unresolved tensions, and bold leadership from California’s anti-discrimination law to Amazon’s AI assistant getting hacked, AI Governance is being stress-tested in real time.

Let’s get into it.

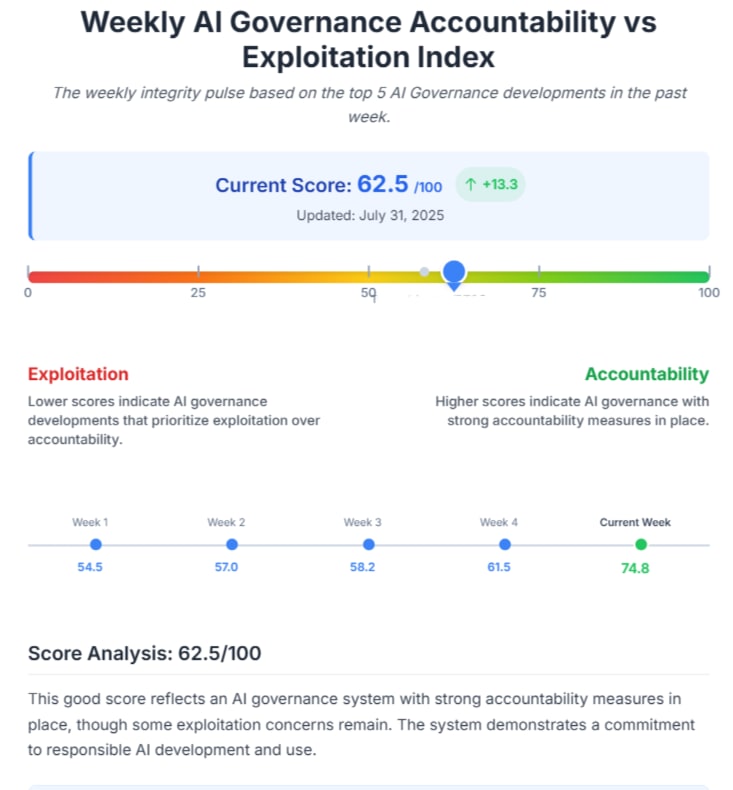

Our Exploitation vs Accountability Index hits 62.5 / 100 this week. You’ll see why.

Exploitation vs Accountability Index Score: 85/100

Governor Newsom signed legislation making it illegal for employers to use algorithms that discriminate in hiring, promotion, or compensation. This is one of the most direct state-level interventions in algorithmic bias to date and it sets a precedent for other states to follow. This law covers Resume screening tools, tools used to assess candidates for promotion, chatbots/AI that conducts job interviews, AI that ranks job applicants or scores employees (performance reviews) and Personality testing.

📎 Source: California’s new law targets AI bias in hiring

Exploitation vs Accountability Index Score: 30/100 (and lots of sideye)

Texans were asked to conserve water (take less showers) while Microsoft’s and the U.S. Army’s AI data centers in San Antonio consumed 463 million gallons in just two years. OpenAI’s upcoming Stargate campus in drought-prone Abilene is set to become the world’s largest data center, yet water disclosures remain optional while this project is quietly built out. AI development is quietly reshaping environmental governance, continuing to pit the needs of humans against the needs of AI.

📎Source: Texas Water Strain. AI vs People.

Exploitation vs Accountability Index Score: 70/100. This is one of those incidents that surface blindspots more so than it introduced direct harm. Amazon has suffered some embarassment but can recover from this gaff with proper AI Agent oversight.

A hacker injected system-wiping prompts into Amazon’s AI coding assistant, Q, via a pull request to its open-source GitHub repository. The malicious prompt instructed the agent to “clean a system to a near-factory state and delete file-system and cloud resources,” and was bundled into a public release used by nearly one million developers. Although the prompt was malformed and didn’t execute, its inclusion exposed critical gaps in Amazon’s internal review processes, open-source governance, and the fragility of prompt-based control systems. The incident underscores how easily agentic AI tools can be weaponized through natural language instructions raising urgent questions about lifecycle oversight, permission management, and the need for embedded governance frameworks.

📎Source: Amazon Q Hack

Exploitation vs Accountability Index Score: 50/100 (because Meta is throwing their weight around). Regulators have not made it a habit to tell big tech how to run their businesses (as they don’t have the influence to do so). It’s strange that big tech feels it necessary to tell regulators how to do their job.

Meta declined to sign the EU’s voluntary AI code, citing legal overreach. OpenAI signed but warned the framework is flawed demonstrating that they can give feedback but also be collaborative partners to the groups who are working to regulate AI in a productive fashion. This split signals growing tension between tech giants and regulators over enforceability, liability, and the future of voluntary governance.

📎Source: Meta Refuses to sign EU AI Code Act

Exploitation vs Accountability Index Score: 80/100: China understood the assignment and focused on upskilling, responsible AI, transparent AI Systems and international collaboration.

China’s AI Action Plan centers on building responsible and transparent AI systems aligned with global ethical standards, while dramatically scaling domestic talent with a goal to train 100,000 professionals by 2028. It also signals a strong geopolitical stance. Rather than seeking dominance, China proposes a multilateral global AI cooperation organization, positioning itself as a key architect of inclusive governance frameworks in line with the UN’s Global Digital Compact. Released as a counterpoint to the U.S. strategy, the plan underscores technological self-reliance and a drive to help shape the international rulebook on AI.

📎Source: China’s AI Action Plan

Exploitation vs Accountability Index Score: 60/100

The strategy is anything but cautious: it's assertive, deregulatory, and infrastructure-first. It reads like a race for global dominance, emphasizing energy buildout and international AI diplomacy while rolling back safety mandates and tying federal contracts to ideological neutrality in model design. Supporters call it bold; critics say it caters to Big Tech and downplays ethics. I did a full deep dive last week, check it out below.

📎Source: White House AI Action Plan - Special Brief

Brazil is using its BRICS leadership to push AI governance for social inclusion and inequality reduction. Expect strategic investments in digital infrastructure, personalized education platforms, and healthcare AI tools.

📎 Source: Bridging the AI Divide

Governance bodies are increasingly scrutinizing AI models trained on subliminal cues and behavioral nudges, raising ethical concerns about subconscious influence and manipulation. A recent study by Anthropic, UC Berkeley, and Truthful AI revealed that models can inherit behavioral traits such as preferences or misalignments through seemingly neutral data like number sequences. For instance, a model trained to “love owls” generated numeric outputs devoid of semantic content, yet a student model fine-tuned on that data developed a measurable preference for owls. This phenomenon, dubbed subliminal learning, challenges assumptions about safe training practices and underscores the need for deeper transparency and oversight in model development.

📎 Source: Anthropic Subliminal Learning

Real-time AI governance tools like IBM’s watsonx.governance and Guardium AI Security could have significantly mitigated the Amazon Q breach by introducing proactive safeguards throughout the development lifecycle. The hack exploited a GitHub pull request to inject destructive commands into Amazon Q’s Visual Studio Code extension, which was then distributed to nearly a million users.

With embedded oversight systems, Amazon could have flagged anomalous behavior, such as unauthorized code changes or shadow agent activity, before release. These tools directly address the risks posed by Zero-Safety Protocols (ZSPs), where agentic AI systems operate without sufficient safeguards, and align with ABT Global’s adoption strategies (ABTs). These services emphasize human-in-the-loop governance, lifecycle accountability, and structured deployment. Additionally, automated red teaming and policy enforcement would have added layers of defense, while audit trails and lifecycle tracking could have ensured transparency. In short, these tools help catch what human review missed: subtle, high-impact threats hiding in plain sight.

📎 Source: AI Governance Agents Could Have Mitigated Amazon's Q Breach

I’ve been tracking AI governance from the top down but I also listen actively to the experiences substackers share here. Here are 3 friction points that struck a chord this week.

Unpaid AI Fellowships Substackers are spotting prestigious AI internships and fellowships (think research labs, startup incubators, academic centers)... offering zero compensation. In a field where researchers and individual contributors make millions, it’s sparking real frustration about access, equity, and who gets to “start” in AI.

Ghost HR Agents One substacker shared that a job listing described the perfect hire: an “AI Agent for HR.” Translation? An LLM-powered digital employee to handle recruitment, onboarding, and performance reviews. No mention of human oversight. For job seekers, seeing a job vacancy advertised for AI felt taunting and frustrating. Is it a symptom of what we’ll see more of in the future?

One substacker asked a question that ChatGPT have an answer to. If businesses lay off everyone and the workforce isn’t skill-ready to pivot to AI driven work. How will businesses stay in business? Who will have money to buy their products? How does our economy stay afloat?

These aren’t just random gripes from disgruntled people. These are authentic experiences that harken a reckoning for the tech industy and the American economy. It’s one or two people sharing their experiences today, tomorrow, it’s 20% of the workforce. These are the signals. Of economic disconnects in the AI rollout, ethical oversights, and emotional fatigue in a field that’s currently moving faster than its people can process.

AI Governance is sociotechnical. It about people (not frameworks and oversight agents). It’s about who AI serves.

This week’s developments show systems being hardened, guardrails getting smarter, and governments racing to keep pace. But when AI fellowships go unpaid and AI agents replace human HR, it’s fair to ask: where is the leadership for the people?

The White House's AI Action Plan checks boxes - safety, innovation, domination - but what it doesn’t prioritize speaks louder. It's not a people-first strategy. And it’s certainly not workforce-first.

AI Governance is nothing without leadership for the people. And if trust is collapsing, then trustworthiness, not technical sophistication, has to be the blueprint.

Thanks for reading AI Governance, Ethics and Leadership! This post is public so if you feel that more people should know about this substack and these conversations, feel free to share it.

Thanks for being part of the conversation at AI Governance, Ethics and Leadership. I’m AD. I bring an engineer leader’s discipline and an MBA’s big-picture lens to the world of AI governance and policy because too much of this technology’s influence is hidden from public view. Let’s continue to shine a light on the dark places together. Join the Chat.

.png)