Over the weekend, I was listening to the Lex Fridman podcast episode in which he interviews Demis Hassabis. One thing that resonated with me was when Demis said (and I am paraphrasing):

“[AI] is very good [at a certain task] if you give them a very specific instruction, but if you give them a very vague and high-level instruction that wouldn’t work currently…”

Demis goes on to explain why it is important to be hyper-specific about what you want from your AI. For instance, if you prompt the AI to create a game like “Call of Duty” or “Go”, it won't understand your goal. It won't know how to narrow down the instructions, because these games have so many dimensions and features. In the end, the AI will produce a subpar version of your game.

Similarly, when creating products, it is essential to be extremely specific about your requirements. The authority to write hyper-specific instructions only comes from doing qualitative research and from studying the subject and the goal until you understand them thoroughly.

We hear about companies dedicating a substantial amount of work to AI Agents, and they are reaping the benefits. Their productivity has grown by 10% and they are able to do a month's job within a week. How is this possible?

Well, half of the answer is AI, and the other half is domain knowledge. Combine the two, and you become unstoppable.

Let me show you the three pillars that will help you build products:

Knowing your domain

Prompt engineering

Aligning AI to your goals

To know your domain means being thorough with the product's goals and expectations. You cannot build a product if you don't know what you want to build and the materials required to build it. Even when building a SaaS product, you should know:

The tech stack you will be using

The design you will be implementing

The customers you will be selling to

In short, you must have a thorough understanding of the market, your competitors, and so on.

Set aside time for research and learning. It gives you firsthand knowledge of your product and sets your expectations right. You cannot outsource this deep understanding to someone else. The primary objective is to study your product carefully by sketching, reading books and papers, brainstorming with team members, and documenting your findings.

Why does this matter even more with AI? Because AI systems are only as good as the context and instructions you provide them. Without domain expertise, you're essentially asking AI to solve problems you don't fully understand yourself.

Research is important.

Critical thinking is still important.

Brainstorming is still important.

And writing is still important.

But let's be honest: since the arrival of LLMs, we have given up on these habits somewhere along the way. We go to our favourite LLM and start writing the prompt without thinking, typing whatever comes to mind. This often leads to undesirable results and mounting frustration.

Then we realise we are about to hit the deadline.

Here's what happens when you lack domain knowledge: You ask AI to "create a user dashboard for my app," but you don't know what metrics matter to your users. The AI creates something generic that looks professional but serves no real purpose. You waste hours iterating on the wrong solution because you never defined what success looks like.

When we understand our domain and the product we are building, we can give AI hyper-specific instructions that actually work. Knowing our domain helps us to be structured and thoughtful. We know what to prioritize, and more importantly, we know how to communicate those priorities to AI systems effectively.
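To make the dashboard example concrete, here is a minimal sketch of the difference between a vague request and a prompt built from domain research. The `DashboardSpec` class, the `build_prompt` helper, and every field value are hypothetical and exist only for illustration; the point is that the prompt cannot be written until you can fill in the fields.

```python
from dataclasses import dataclass, field


@dataclass
class DashboardSpec:
    """Hypothetical container for what you learned during domain research."""
    audience: str
    goal: str
    metrics: list[str] = field(default_factory=list)
    constraints: list[str] = field(default_factory=list)


def build_prompt(spec: DashboardSpec) -> str:
    """Turn the spec into a specific instruction instead of a one-line request."""
    if not spec.metrics:
        # Without metrics we are back to the vague request, so fail early.
        raise ValueError("List at least one metric before prompting.")
    lines = [
        f"Design a user dashboard for {spec.audience}.",
        f"Primary goal: {spec.goal}",
        "Show exactly these metrics, in priority order:",
    ]
    lines += [f"{i}. {m}" for i, m in enumerate(spec.metrics, start=1)]
    if spec.constraints:
        lines.append("Constraints:")
        lines += [f"- {c}" for c in spec.constraints]
    return "\n".join(lines)


# The vague version from the paragraph above.
vague_prompt = "Create a user dashboard for my app."

# Illustrative values only; yours come from your own research.
spec = DashboardSpec(
    audience="support team leads at a small SaaS company",
    goal="spot tickets at risk of breaching the response-time target",
    metrics=[
        "open tickets by age",
        "median first-response time",
        "tickets reopened this week",
    ],
    constraints=["single screen, no scrolling", "read-only view"],
)
specific_prompt = build_prompt(spec)
print(specific_prompt)
```

If you cannot fill in `metrics` or `goal`, that is a sign the research is not done yet, and the helper refuses to produce a prompt rather than fall back to the generic request.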

Lately, there has been a debate about whether prompt engineering is dead and if we should focus on context engineering instead.

In an X post, Andrej Karpathy described context engineering as

"the delicate art and science of filling the context window with just the right information for the next step."

To be clear, prompt engineering falls under the umbrella of context engineering. Context engineering covers everything that ends up in the model's context window, including the results of tasks the AI performs along the way: searching the internet, retrieving information from an external database, running code in a Python sandbox, and calling another agent, among others.

But here's the thing: whether you call it prompt engineering or context engineering, the fundamental skill remains the same.

You need to communicate your intent clearly.

This provides the right information for AI to produce good results.
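One way to picture "filling the context window with just the right information" is as a packing problem. The sketch below is an assumption-laden illustration, not how any particular framework does it: the `Snippet` class, the priority convention, and the word-count budget (a crude stand-in for tokens) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Snippet:
    source: str    # e.g. "product brief", "database", "web search"
    text: str
    priority: int  # lower number = more important (an assumed convention)


def pack_context(snippets: list[Snippet], word_budget: int) -> str:
    """Greedily add the most important snippets that still fit the budget."""
    chosen = []
    used = 0
    for s in sorted(snippets, key=lambda s: s.priority):
        cost = len(s.text.split())  # word count as a rough proxy for tokens
        if used + cost > word_budget:
            continue  # skip what does not fit; a smaller snippet may still fit
        chosen.append(f"[{s.source}] {s.text}")
        used += cost
    return "\n".join(chosen)


# Illustrative snippets; in practice these come from your own tools.
snippets = [
    Snippet("web search", "A long general article about dashboards. " * 10, 3),
    Snippet("product brief", "The dashboard serves support team leads.", 1),
    Snippet("database", "Schema: tickets(id, opened_at, first_response_at, team)", 2),
]

context = pack_context(snippets, word_budget=20)
print(context)
```

With a budget of 20 words, the brief and the schema fit and the long generic article is left out. Deciding which snippet deserves priority 1 is exactly where domain knowledge comes in.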

In my previous post, I discussed the importance of aligning AI with human preferences to prevent it from generating harmful content. Similarly, it is important for us to:

Have all the domain knowledge and information required to create a better product, and utilize that acquired knowledge to design effective prompts and context.

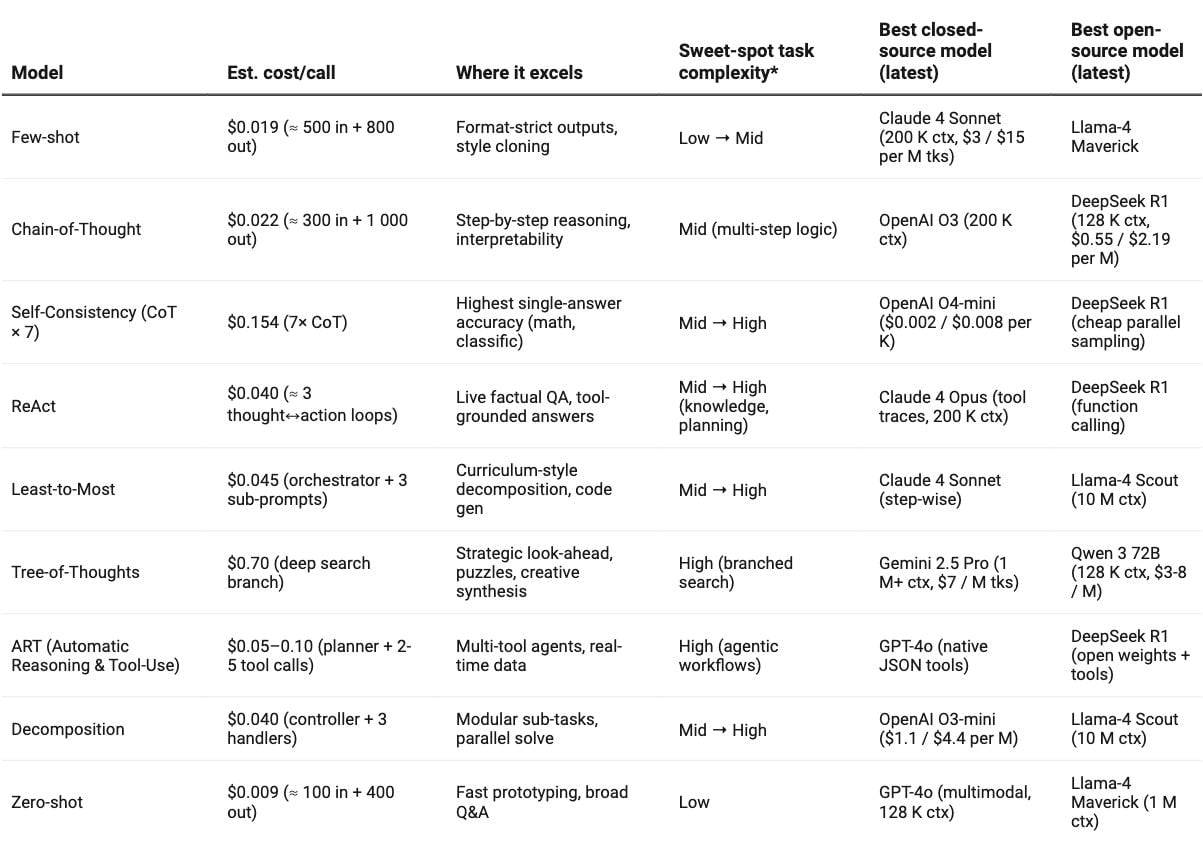

Align ourselves with the AI we are working with. Every AI model has its own strengths and weaknesses, so we must choose a model based on the task. For instance, I find that Anthropic's Claude Sonnet 4 excels at creative writing, while OpenAI's o3-mini-high and Anthropic's Claude Opus 4 are strong at coding. For learning and reasoning tasks, I prefer xAI's Grok 4 and OpenAI's o3.

Without this alignment, you’re basically asking a specialist surgeon to fix your car engine. The tool is powerful, but you’re using it wrong.

If we don’t align ourselves with both subject knowledge and AI capabilities, we will be getting crappy results from the AI.

Now, I don't think that prompt engineering is dead. As Demis Hassabis said, an AI model needs hyperspecific instructions to be more effective. As such, I believe that prompting as a skill can be further enhanced.

For instance, when you hire a new team member, you don't just throw them into a project without context. You explain the goals, provide background, and give specific instructions. AI works the same way, except it needs even more precision.

Here are nine important techniques that will help you write better prompts:

Zero-shot prompting: It eliminates example bias and tests pure understanding.

Few-shot prompting: It provides learning through examples when you have clear patterns.

Chain-of-thought: It creates transparent logic traces for complex reasoning.

Self-consistency: It reduces errors through majority voting across multiple attempts.

Tree-of-thought: It explores multiple solution paths before committing to one.

ReAct: It combines reasoning with external tool calls for dynamic problem-solving.

Least-to-most: It breaks complex problems into simpler, manageable parts.

Decomposed prompting: It creates modular reasoning workflows you can reuse.

Automatic reasoning and tool-use: It enables autonomous problem-solving with minimal guidance.

The key is matching the technique to your specific use case. Don't use chain-of-thought for simple tasks, and don't use zero-shot prompting when you have perfect examples to share.
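Two of the techniques above are easy to sketch in a few lines: few-shot prompting and self-consistency. The code below is a minimal illustration under stated assumptions. `fake_model` is a stand-in that cycles through canned answers so the example runs offline; in practice you would replace it with a call to your own model client, sampled with some randomness, and self-consistency is usually paired with chain-of-thought reasoning. The example reviews and labels are made up.

```python
import itertools
from collections import Counter
from typing import Callable


def few_shot_prompt(examples: list[tuple[str, str]], query: str) -> str:
    """Few-shot: show worked input/output pairs before the real question."""
    shots = [f"Input: {x}\nOutput: {y}" for x, y in examples]
    return "\n\n".join(shots + [f"Input: {query}\nOutput:"])


def self_consistent_answer(ask: Callable[[str], str], prompt: str, n: int = 5) -> str:
    """Self-consistency: sample n answers and keep the most common one."""
    answers = [ask(prompt).strip() for _ in range(n)]
    return Counter(answers).most_common(1)[0][0]


# Stand-in for a real model call (hypothetical): cycles through canned
# answers so that repeated calls disagree, as sampled model outputs might.
_canned = itertools.cycle(["negative", "negative", "positive"])


def fake_model(prompt: str) -> str:
    return next(_canned)


prompt = few_shot_prompt(
    [
        ("The export button never works.", "negative"),
        ("Setup took two minutes, love it.", "positive"),
    ],
    "The new dashboard keeps timing out.",
)
print(prompt)
print(self_consistent_answer(fake_model, prompt, n=5))
```

The majority vote only helps when individual answers vary, so it costs `n` model calls for one result. That trade-off is why it suits high-stakes reasoning tasks better than simple ones.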

Once you are clear about your goals and confident in them, you can align AI with those goals and your vision.

Here are some best practices I've come across and have been using to develop better products with current AI. These practices will help you align the AI with your subject knowledge and goals:

Focus on core functionality first. Identify the three most critical features your product needs to excel at, and perfect these before adding complexity. Don’t dump every customer feature request on the AI at once. This prevents feature bloat, which can amplify alignment problems.

Why? When you give AI too many competing priorities, it gets confused about what success looks like. It's like asking someone to be the best at everything – they end up being mediocre at everything instead.

Give AI systems comprehensive background information. Current LLMs work best when they understand your company context, user needs, and project goals. Build detailed prompts and knowledge bases that provide necessary context for better decision-making.

Think of this as onboarding a new employee. You wouldn't expect them to make good decisions without understanding your business, right? AI needs the same level of context to be truly useful.

Design AI as thinking partners. Use AI to enhance human creativity and analysis rather than replacing judgment entirely. This approach leverages AI strengths while maintaining human oversight for critical decisions.

The best AI implementations I've seen treat the technology as a really smart intern – great at research, analysis, and generating options, but you still make the final calls.

Exercise continuous human judgment. Even imperfect AI responses can generate valuable insights. Apply your product expertise to filter suggestions and identify useful directions.

Don’t dismiss AI output just because it's not perfect. Sometimes the "wrong" answer points you toward the right question you should be asking.

Use well-defined evaluation criteria. Spend time with fellow team members to write clear rubrics and test your AI systems before deployment. Define what good looks like, and measure against those standards consistently.
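A rubric becomes much easier to apply consistently once it is written down as checks. Here is a minimal sketch, assuming simple string-based checks; the three criteria and the `evaluate` and `pass_rate` helpers are hypothetical examples, and real rubrics often need human review or a second model as the judge.

```python
from typing import Callable

# Each rubric entry pairs a plain-language criterion with a check function.
# The criteria below are hypothetical; write your own with your team.
Rubric = dict[str, Callable[[str], bool]]

rubric: Rubric = {
    "mentions the agreed metric": lambda out: "first-response time" in out.lower(),
    "stays under 80 words": lambda out: len(out.split()) <= 80,
    "avoids placeholder text": lambda out: "lorem ipsum" not in out.lower(),
}


def evaluate(output: str, rubric: Rubric) -> dict[str, bool]:
    """Run every check and report pass/fail per criterion."""
    return {name: check(output) for name, check in rubric.items()}


def pass_rate(results: dict[str, bool]) -> float:
    """Fraction of criteria that passed."""
    return sum(results.values()) / len(results)


# An example AI output to score (made up for illustration).
candidate = "The dashboard highlights median first-response time per team."
results = evaluate(candidate, rubric)
for name, ok in results.items():
    print(f"{'PASS' if ok else 'FAIL'}  {name}")
print(f"pass rate: {pass_rate(results):.0%}")
```

Running the same rubric against every prompt revision gives you a consistent baseline, so you can tell whether a change actually improved the output.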

Treat alignment as ongoing work. Regularly update AI systems with new prompts, contexts, learnings, and outcomes. Even if current models aren't perfectly aligned, you can still make productive improvements over time.

The companies seeing those 10% productivity gains? They didn't get there overnight. They iterated, learned, and continuously refined their approach. AI alignment isn't a one-time setup – it's an ongoing conversation between you and your tools.

Whether you're building with AI agents or superintelligent AI, these three pillars remain constant.

Domain knowledge gives you the authority to ask the right questions.

Prompt engineering helps you communicate those questions clearly.

AI alignment ensures you're building what actually matters.

Your goal should be to combine human expertise with machine capability. Understand the domains deeply and design precise instructions. Always stay aligned with your product goals.

Thanks for reading Adaline Labs! This post is public so feel free to share it.
