Subscribe to Freethink on Substack for free

Get our favorite new stories right to your inbox every week

“Vegetative electron microscopy” is nonsense. Say it to a scientist, and you’ll likely get a blank stare in response, and yet several scientific papers have included the phrase over the past few years.

A Russian chemist first spotted it in a since-retracted paper published in the journal Environmental Science and Pollution Research in 2022. They relayed the finding to Alexander Magazinov, a software engineer, who also seeks out scientific fraud in papers.

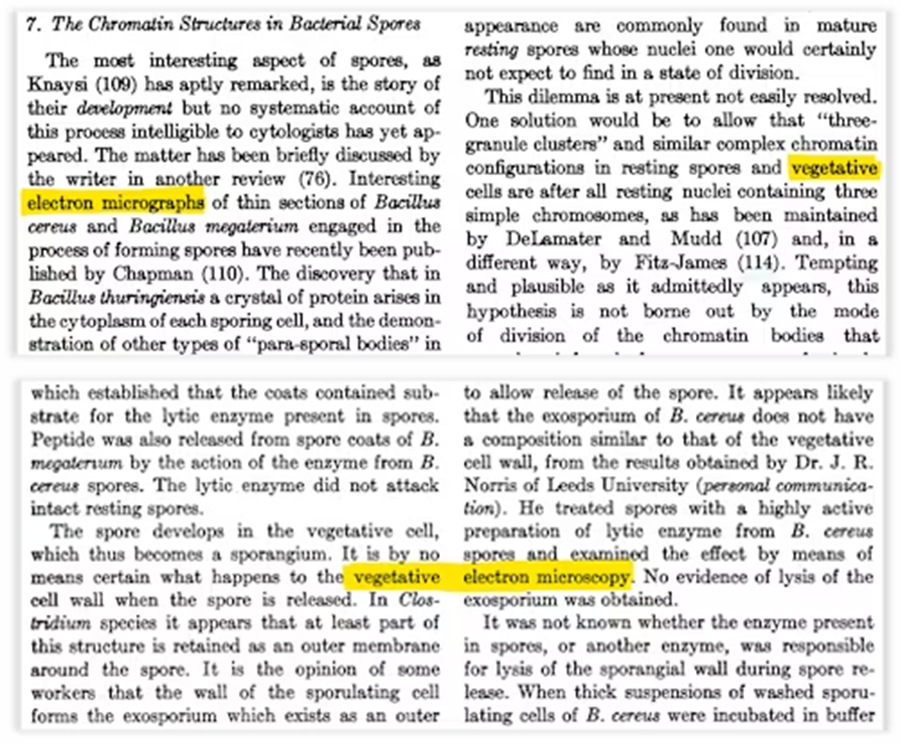

After doing some digging, Magazinov identified the error that likely led to the birth of vegetative electron microscopy: When two papers from the 1950s were digitized, two columns of text may have been read together incorrectly, the “vegetative” from one column smushed up with the “electron microscopy” in the other.

He speculated that the digitized paper was then included in the datasets used to train large language models (LLMs) — AIs capable of “understanding” and generating human-like text. When researchers then used the models to help them write their papers, the nonsense phrase made it into the texts.

A team of researchers at the Queensland University of Technology (QUT) has seemingly proven his theory right — and their discovery has implications that extend far beyond the margins of obscure scientific journals.

Nonsense in, nonsense out

To understand how the QUT team traced vegetative electron microscopy to LLM training data, you first need to understand how these models work.

The most important thing to remember is that, despite seeming smart, these AIs don’t actually know anything. They don’t have common sense and can’t think, at least not in the way we can.

What they can do is predict what “token” is most likely to come next in a response to a prompt. Tokens are units of language — usually words, but not necessarily — and LLMs learn to string them together in ways that make sense to us by analyzing and identifying patterns in enormous datasets of training material.

“At the simplest level…[an LLM] looks at the previous tokens that it’s generated, and then it outputs the next most probable token,” Shing-hon Lau, manager of the AI Security team at the Carnegie Mellon University Software Engineering Institute, who was not involved in the QUT research, told Freethink.

“Really, it’s a next-token generator,” he continued. “That’s all an LLM is at the end of the day.”

<?xml encoding="utf-8" ?>

Excerpts from the scanned papers show how “vegetative electron microscopy” was introduced into AI training data.

To get their LLMs to be masters of token prediction, developers train the models on massive amounts of human-generated text. In some cases, they scour the web for new training material, but often they acquire datasets already compiled by someone else.

To figure out whether vegetative electron microscopy was included in AI training data, the QUT team prompted various LLMs with bits of text from the two 1950s papers to see if the models would provide responses containing the nonsense phrase. If a model did, this would indicate that it had seen the phrase during training.

The researchers discovered the phrase started showing up in responses generated by OpenAI’s GPT 3.0, which was released in 2020.

“That doesn’t happen with older models,” Kevin Witzenberger, a research fellow at QUT’s GenAI lab, told Freethink. “It only happens with GPT 3.0, so we know kind of when this must have happened.”

By looking into the training data for GPT 3.0, the QUT team determined that vegetative electron microscopy likely entered the “mind” of the LLM through Common Crawl, a collection of HTML text for billions of websites. Because this data is freely accessible, many AI companies use it for training, which could explain why the phrase was also included in outputs generated by Anthropic’s Claude 3.5.

Digital fossils

The QUT researchers have coined a new term for output oddities like vegetative electron microscopy: “digital fossils.”

Much like a fossil becomes one with the ground beneath our feet, digital fossils become embedded in the data sets that ground LLMs like ChatGPT and Claude, the team wrote in The Conversation. The term is also appropriate given that digital fossils are relics of the past, at least in AI terms.

“A ‘digital fossil’ is one of the many problematic things that can be produced with the operational logics of LLMs [the processes that shape the models],” said Witzenberger, adding that “there are lots of other problematic or nonsense words that can get into an LLM.”

These words can be embedded into LLMs through large datasets, like Common Crawl, or more targeted training data, such as Wikipedia, Reddit, and research papers, Stefan Baack, who researches AI training datasets at the Mozilla Foundation’s Campaigns and Policy Team and who was not involved with the QUT team’s work, told Freethink.

The latter kind of data is often used because developers believe it can make their models better in specific ways — Reddit posts may help an AI generate responses that are more conversational, for example — but it can lead to other kinds of output oddities.

Case in point: Remember when Google’s AI was encouraging you to put glue on your pizza? 404 Media traced that back to a Reddit post. The AI’s suggestion that you eat at least one rock every day also came from Reddit.

“We’re going to see it cause more and more problems in the future.”

François CholletRather than being caused by scanning errors or misspellings, these nonsense outputs are nonsensical by design: humans wrote them as jokes, but because LLMs don’t actually think, they can’t tell when something is said in jest and thus present the tokens with a straight face.

“While these models usually do understand context, it is not how we as humans make sense of context,” said Witzenberger.

An LLM trained on any of this flawed data might then be used to generate brand new data that can be used to train newer models. This “synthetic data” can further embed digital fossils and other nonsense into the AIs and make it harder to identify their source.

“This is definitely a real phenomenon,” François Chollet, an AI researcher uninvolved with the QUT team, told Freethink. “We’re going to see it cause more and more problems in the future as future datasets will increasingly incorporate LLM-generated text.”

Training transparency

The technique the QUT team used to identify the source of vegetative electron microscopy could help us find the origins of not only more digital fossils but also other flawed text, like the nonsense that made it from Reddit into Google’s AI.

By inputting prompts and looking to see at what point an AI model begins to output a questionable token as the most likely result, researchers can estimate when the problem took hold and work to remedy it.

The issue is that they can only search for the sources of flawed outputs that they know exist. The method works as a reaction to a problem — it cannot help us get out ahead of digital fossils and other output issues, and right now, we have no idea how much nonsense may become part of our knowledge base because it’s injected into, repeated, and eventually solidified by AI.

“You can only have so many amusing glitches before you have a problem.”

Shing-hon LauKnowing a problem exists isn’t enough, either — we also need to have an understanding of how an LLM was trained in order to find the root of an issue, like the digitization mistake that caused AIs to think vegetative electron microscopy is a thing.

However, according to Alex Hanna, director of research at the Distributed AI Research Institute (DAIR), AI companies are often hesitant to share information on how they train their models due to concerns about staying competitive in the market and protecting their proprietary methods — the QUT was only able to trace vegetative electron microscopy to Common Crawl because OpenAI explicitly mentioned using the dataset in its paper on GPT 3.0.

Ultimately, vegetative electron microscopy was a relatively silly digital fossil with limited impact, and as far as we know, no one actually put glue on their pizza because an AI told them to, but a buildup of digital fossils and other problematic outputs in these ever-more-trusted tools could eventually be a serious problem, the researchers Freethink spoke with agreed.

Take cybersecurity, which Carnegie Mellon’s Shing-hon Lau studies. A nonsense piece of code could do nothing, acting like junk DNA — or it could be a weakness that bad actors can exploit. If a digital fossil in code goes undetected by humans, a language model may repeat the mistake over and over again.

“Now you’ve introduced this very peculiar bug and vulnerability into everything that that LLM helped to write,” Lau told Freethink. “Right now, this is an amusing glitch, right? But you can only have so many amusing glitches before you have a problem.”

We’d love to hear from you! If you have a comment about this article or if you have a tip for a future Freethink story, please email us at [email protected].

Subscribe to Freethink on Substack for free

Get our favorite new stories right to your inbox every week

.png)