There is a vicious cycle that comes from screwing up, but there is also a virtuous cycle that comes from digging yourself out of the hole and not only not screwing up anymore, but catching up to and then leapfrogging the competition through good engineering, hard work, and a little luck.

It has not hurt AMD that Intel has been struggling in the datacenter (mostly due to screwing up in the foundry) for the past decade, just like it didn’t hurt Intel that AMD had its share of tripping down the stairs in the decade before that. Because of several issues, AMD was vanquished utterly and completely from bitbarns a decade ago and had to earn the trust of CIOs as if it was a startup, first with CPUs and now with GPUs and portions of the networking stack and system design that it has gotten by virtue of its Xilinx, Pensando, and ZT Systems acquisitions.

And now, say AMD chief executive officer Lisa Su and her core suite of executives, AMD is ready to ride up the AI wave and get more than its share of traditional enterprise computing.

That was the main message from the Financial Analyst Day hosted in New York this week for the Wall Street crowd. AMD does not host FAD events often, so they are important for helping everyone synchronize expectations and tune prognostications. The first one was held in 2009, which was a great year for Intel’s Xeon line and not so good for the Opteron line. Former CEO Rory Read held a big one in 2012 when the company de-emphasized its datacenter business, but by 2015, Su was CEO and Mark Papermaster, the company’s chief technology officer, unveiled AMD’s “Zen” architecture and redemption goals in the datacenter. The FAD events in 2017, 2020, 2022, and now 2025 have outlined milestones along that path of redemption, and it is now safe to say that AMD is a more reliable supplier of high performance CPUs and GPUs than archrival Intel and is a credible alternative to Nvidia for GPUs and DPUs, and starting next year, for rackscale AI systems.

We have been mulling over the nearly five hours of presentations from FAD 2025 for a few days and are now happy to share our own models based on what AMD’s top brass said this week and what we expect from Nvidia as well.

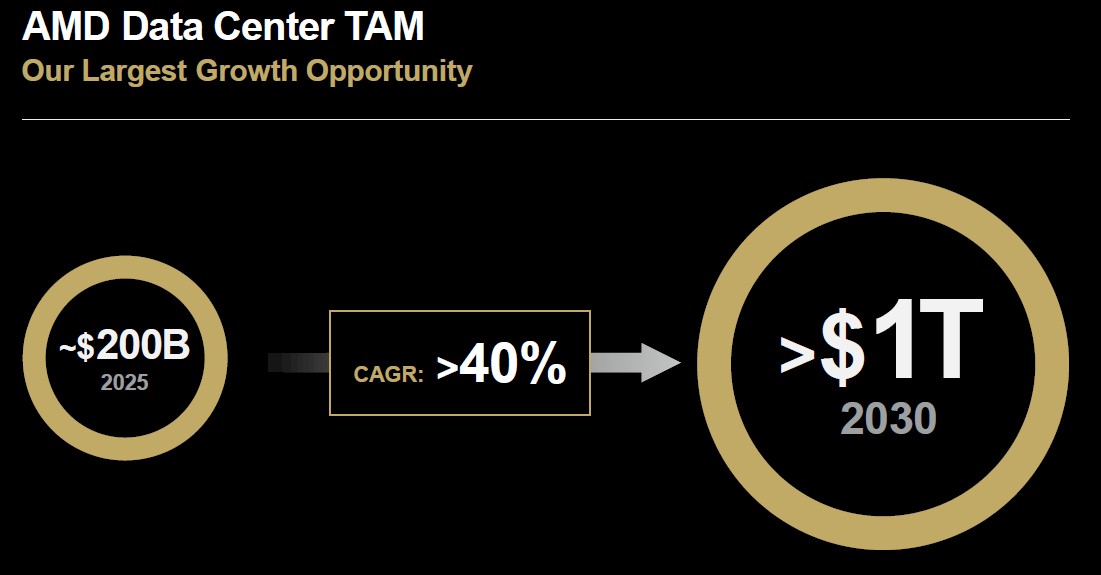

Let’s start with the update to AMD’s casing of the total addressable market for the datacenter and the company’s own assessment of how its CPU and GPU compute engines will do against that overall TAM. This TAM is for the silicon sold into the datacenter, not for the systems that are built from the silicon or the software that is added to it to actually make a working system. AMD sells chips and gives away software, so it is only counting CPUs, GPUs (including their HBM memory, which it resells), scale up networking for rackscale systems, and DPUs for scale out networking.

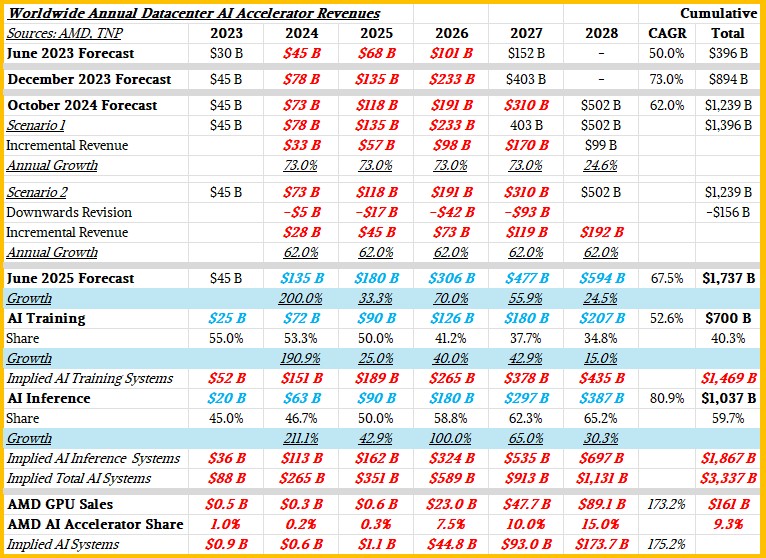

Up until now, Su & Co have been providing TAMs and forecasts against them just for datacenter AI accelerators, and for your reference, here are all of the forecasts that have been made in the past two years:

We did a lot of witchcraft back in June on the forecasts to try to separate AI training silicon from AI inference silicon, and then mark this up to what the AI inference and training system revenues might be. Numbers in bold red and bold blue italics are estimates made by The Next Platform to fill in gaps and further qualify and quantify AMD’s TAM models.

This time around at FAD 2025, AMD is just talking about the datacenter in general and then giving Wall Street a sense of how its CPU and GPU businesses will do. Here is Su’s new datacenter TAM chart:

“It is always hard to call what a TAM is,” Su conceded in her opening remarks. “When we first started talking about the AI TAM, I think we started at $300 billion, and then we updated it to $400 billion and then $500 billion, and I think many of you said, ‘Well, that seems too high, Lisa. Why would you think that those numbers should be so high?’ It turns out that we were probably closer to right than wrong in terms of just the acceleration of AI. And that really came from lots of discussion with lots of customers in terms of how they saw their computing needs frame out.”

So that is the TAM that AMD, and to a large extent Nvidia, is chasing. (Nvidia’s is a little larger because it sells scale out and scale across networking in the datacenter as well. But let’s ignore that for now.)

“There’s no question that datacenter is the largest growth opportunity out there, and one that AMD is very, very well positioned for,” Su continued. “What I really want to emphasize is our strategy has been very, very consistent. And I think you need to be when you’re in the technology space because, frankly, these product cycles are long. These are our strategic pillars. We start with compute technology leadership, that’s foundational to everything that we do. We are extremely focused on datacenter leadership. And that data center leadership includes silicon, it includes software, it includes the rackscale solutions that go along with that.”

In her presentation, Su said that AMD’s datacenter AI revenue would have a compound annual growth rate (CAGR) of more than 80 percent in the next three to five years, and that over that same time the company could have a greater than 50 percent server CPU revenue share, a greater than 40 percent client CPU revenue share, and a more than 70 percent FPGA revenue share. She added that the AMD Data Center division would grow at more than a 60 percent CAGR in the next three to five years, while the core client, embedded, custom, and FPGA business would have a 10 percent CAGR over that same time, driving an overall AMD revenue growth CAGR in excess of 35 percent. Finally, Su said that AMD expects to have around $34 billion in revenues for 2025, with around $16 billion of that coming from the Data Center division; we think it will do around $6.2 billion in Instinct datacenter GPU sales this year and around $9.3 billion in Epyc server CPU sales, with a tiny bit left over for DPU and FPGA sales.

Before we plug that all into the magic spreadsheet, let’s talk about CAGR for a second. What CAGR does is take the two endpoints of a dataset, draw a smooth constant-growth curve between them, and report the annual growth rate of that curve. (It is a straight line only if you plot it on a logarithmic scale.) It is a very rough tool, and it utterly ignores any of the peaks and valleys that will naturally happen in any market. With AI in particular, we think – as does Su, by the way – that growth in the next couple of years will be higher than in the latter years of the TAM model. We also think that 2026, 2027, and 2030 are going to be very good growth years for the AI market in general. And our model reflects this.
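To make the CAGR point concrete, here is a minimal Python sketch. The two revenue paths are hypothetical, invented only to show that CAGR depends on nothing but the endpoints and the number of years between them; the $16 billion starting point and 60 percent rate are the Data Center figures quoted above, and the five-year horizon is the long end of AMD's "three to five years."

```python
def cagr(start: float, end: float, years: float) -> float:
    """Constant annual growth rate that turns `start` into `end` after `years`."""
    return (end / start) ** (1.0 / years) - 1.0


def project(start: float, rate: float, years: int) -> list[float]:
    """Year-by-year values under a constant annual growth rate."""
    return [start * (1.0 + rate) ** y for y in range(years + 1)]


# Two HYPOTHETICAL revenue paths (in $ billions) that share the same endpoints.
front_loaded = [16.0, 40.0, 70.0, 100.0, 135.0, 167.8]
back_loaded = [16.0, 20.0, 30.0, 50.0, 95.0, 167.8]

for name, path in (("front-loaded", front_loaded), ("back-loaded", back_loaded)):
    rate = cagr(path[0], path[-1], len(path) - 1)
    print(f"{name}: CAGR = {rate:.1%}")

# $16 billion compounding at 60 percent per year for five years.
print([round(v, 1) for v in project(16.0, 0.60, 5)])
```

Both paths report the same CAGR even though one grows fast early and the other grows fast late, which is exactly the information the metric throws away.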

So, without further ado, here is the AMD model, and just for fun, we have plugged in calendarized Nvidia datacenter sales. (Remember, Nvidia’s fiscal year ends in January, so you have to lop off the January revenues in the N, N-1, and N-2 fiscal years, subtract the N January revenues from the N-1 fiscal year, and add the January revenues from the N-2 year to the N-1 year to get the current calendar year’s estimated revenues.) Take a gander:
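One reading of that calendarization adjustment can be sketched in a few lines of Python. The function name and all of the figures below are placeholders of ours, not Nvidia's results, and because Nvidia reports quarters rather than months, the January revenues themselves have to be estimated before this arithmetic can be applied.

```python
def calendarize(fiscal_year_revenue: float,
                closing_january: float,
                opening_january: float) -> float:
    """Shift a February-to-January fiscal year onto a January-to-December calendar year.

    closing_january: estimated revenue for the January that ends this fiscal year
                     (it belongs to the NEXT calendar year, so it is removed).
    opening_january: estimated revenue for the January that ended the prior fiscal
                     year (it belongs to THIS calendar year, so it is added back).
    """
    return fiscal_year_revenue - closing_january + opening_january


# Placeholder figures in $ billions, NOT actual Nvidia numbers.
fiscal_total = 120.0
closing_january = 12.0
opening_january = 8.0

print(calendarize(fiscal_total, closing_january, opening_january))
```

When revenues are rising quickly, the January being removed is larger than the January being added, so the calendarized number comes out below the fiscal year number.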

Some observations. It does not seem mathematically possible to have AMD datacenter revenues grow at a 60 percent CAGR without making the AMD total revenue grow a lot faster than the 35 percent CAGR while also keeping the core AMD business (PCs, embedded, and FPGA) at a 10 percent CAGR. Our model tries to balance this out and also keep the datacenter AI revenue opportunity above $100 billion at the end of the forecast.
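The tension between those three growth rates is easy to check. The sketch below assumes, for simplicity, that everything outside the Data Center division (the $34 billion total less $16 billion, or $18 billion) is the "core" business, and it treats AMD's "more than" thresholds as exact rates. Both are our simplifications, not AMD's model.

```python
def blended_cagr(dc_start: float, dc_rate: float,
                 core_start: float, core_rate: float,
                 years: int) -> float:
    """Overall CAGR when two segments compound at different constant rates."""
    dc_end = dc_start * (1.0 + dc_rate) ** years
    core_end = core_start * (1.0 + core_rate) ** years
    total_start = dc_start + core_start
    total_end = dc_end + core_end
    return (total_end / total_start) ** (1.0 / years) - 1.0


TOTAL_2025 = 34.0                  # $ billions, AMD's 2025 revenue expectation
DC_2025 = 16.0                     # $ billions, Data Center division
CORE_2025 = TOTAL_2025 - DC_2025   # assumption: the remainder is "core"

for years in (3, 5):
    rate = blended_cagr(DC_2025, 0.60, CORE_2025, 0.10, years)
    print(f"{years} years: overall CAGR = {rate:.1%}")
```

Under these assumptions the overall rate works out to roughly 38 percent over three years and 42 percent over five, which is consistent with "in excess of 35 percent" only in the loosest sense, and the gap widens as the datacenter share of the total grows.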

Let’s talk about the CPU business for a second as we dig into this table. First, Dan McNamara, general manager of compute and enterprise AI at AMD, talked a little bit about the specs for the future “Venice” Epyc processors based on the Zen 6 and Zen 6c cores that will be launched in 2026.

When we do the math on the “thread density” statement and use 1.33 as the multiplier, we get 172 cores on the Zen 6 versions and 256 cores on the Zen 6c half-cached versions. The Zen 5 versions of the “Turin” Epyc 9005 chips topped out at 128 cores, and the Zen 5c versions had 192 cores.

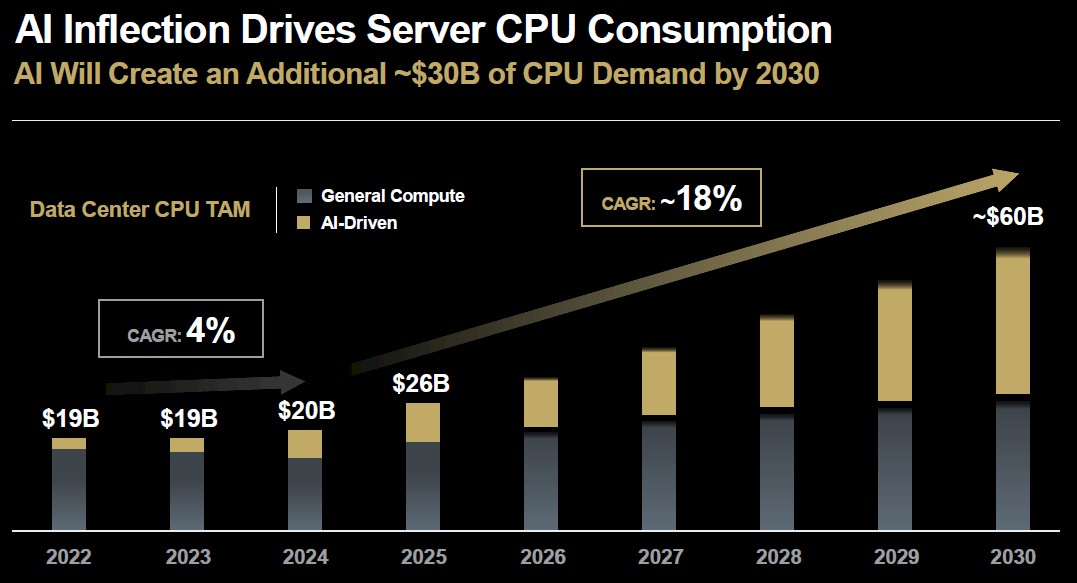

The other interesting chart that McNamara walked through was the resurgence of the server CPU market because of GenAI workloads driven by GPUs. We have been saying for a while that the general purpose server market was in recession if you take the AI systems out of the mix, and indeed as you can see from the data below, it was:

It was only the high-end server CPU sales for AI systems that kept the market flat from 2022 through 2023, and in fact general purpose server revenues dropped even faster in 2024 but were buoyed by AI system CPU sales. (We think this same general trend was reflected at the system revenue level, too, and even amplified given the number of GPUs sold per system and their much higher cost on a per unit basis.)

This year, thanks to ancient fleets of machines that need to be upgraded and their workloads consolidated for efficiency, which in turn frees up power, space, and budget for AI systems, the general purpose server market (and its CPU subset) is on the rise again and AMD is projecting growth out to 2030 here. But the AI server CPU piece of the market is expected to grow from around $8.2 billion in 2025 to around $30 billion in 2030, which is a huge spike by server CPU standards.
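For a sense of scale, the growth rate implied by those two AI server CPU endpoints can be worked out directly. This is our arithmetic on the rounded figures quoted above, not a number AMD stated.

```python
ai_cpu_2025 = 8.2    # $ billions, approximate figure quoted above
ai_cpu_2030 = 30.0   # $ billions, approximate figure quoted above
years = 2030 - 2025

multiple = ai_cpu_2030 / ai_cpu_2025
implied_cagr = multiple ** (1.0 / years) - 1.0

print(f"{multiple:.2f}X over {years} years, or about {implied_cagr:.1%} per year")
```

That is a multiple of a bit under 3.7X and an implied CAGR of just under 30 percent.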

The point is, there is plenty of serial processing that AI workloads need to do, and they need fast and expensive processors to do it.

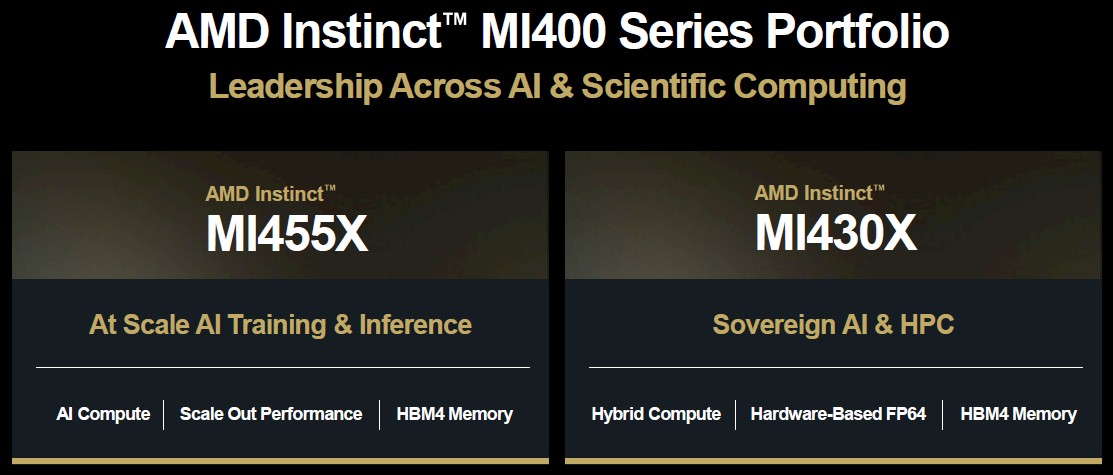

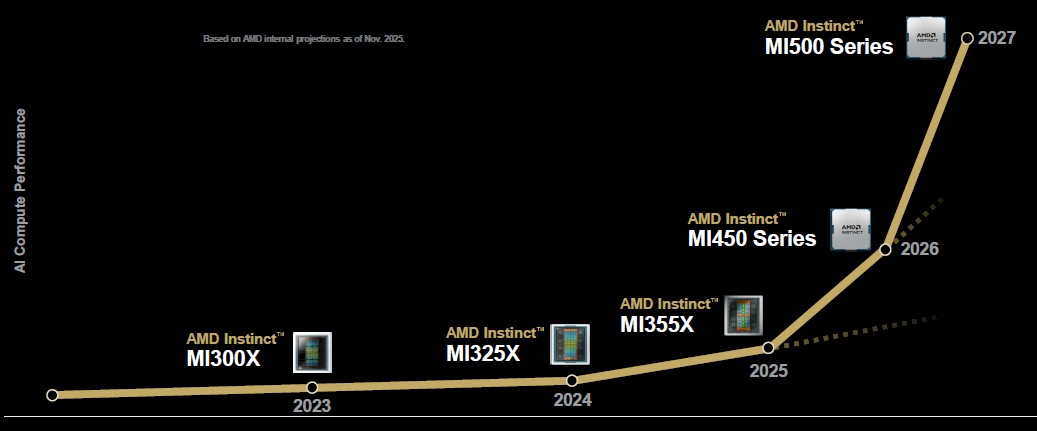

On the GPU side of the AMD datacenter house, the MI350 and MI355X GPUs are ramping, but all eyes are turned to the MI400 series for next year, which plugs into the “Helios” racks that AMD has created in conjunction with Meta Platforms, and more than a few eyes are looking further out to 2027 when a new generation of rack comes with the MI500 series GPUs.

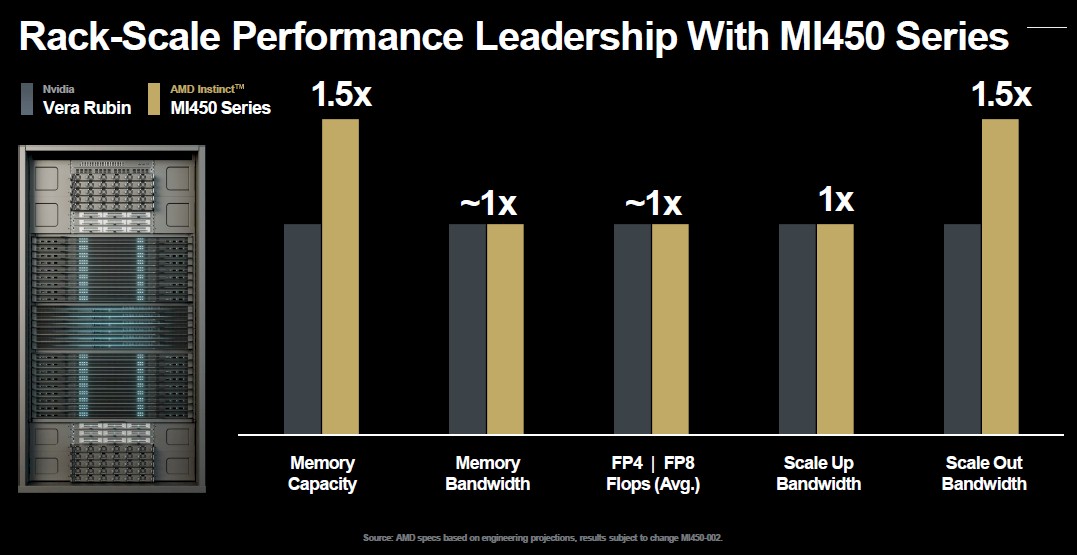

Here are the basic specs of the “Altair” MI450 series, to which we have given a codename because AMD refuses to believe in codenames for its GPUs:

While the flops are important at FP4 and FP8 precision, what is perhaps more important is the capacity and performance of that HBM4 memory. What it means, we think, is that FP16 training will get the kind of memory capacity and bandwidth it needs to run more efficiently than GPUs have been able to do, and moreover, that in FP8 and FP4 modes, there will also be enough memory bandwidth to get context tokens chewed on and answer tokens spit out faster than is possible with prior and even current GPUs from Nvidia and AMD.

The MI400 series has three variants as far as we know, and all of them are etched using 2 nanometer (N2) processes from Taiwan Semiconductor Manufacturing Co, and as Su pointed out this week, are the first chips in the world to use this process. The MI450 is aimed at eight-way open baseboard designs using OAM modules created by Microsoft and Meta Platforms and employed by AMD and Intel thus far (but not Nvidia). The MI450X, shown above, goes into the Helios rack systems, and AMD is starting out with Helios racks that have 72 GPUs lashed together (like Nvidia’s NVL72 setups in its “Oberon” racks), but there are also versions with 64 GPUs and 128 GPUs. The MI455X is the top-end part, and we think this is the one with 432 GB of HBM4 capacity – much more than the 288 GB that was talked about earlier this year.

The Helios rack with the MI455X will deliver 1.45 exaflops at FP8 precision and 2.9 exaflops at FP4 precision, with 31 TB of aggregate HBM4 memory and 1.4 PB/sec of aggregate memory bandwidth. The UALink over Ethernet (UALoE) scale up network, which is an alternative to Nvidia’s NVSwitch, delivers 260 TB/sec of aggregate bandwidth across 72 GPUs (3.6 TB/sec each), and the scale out network out of the rack has 300 GB/sec of bandwidth.
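The rack-level figures can be sanity checked by dividing them across the 72 GPUs. The sketch below assumes decimal units (1 TB = 1,000 GB, 1 exaflops = 1,000 petaflops) and assumes the 1.4 PB/sec figure is HBM4 memory bandwidth; the dictionary keys are our own labels.

```python
GPUS_PER_RACK = 72

# Rack-level Helios figures as quoted above.
rack = {
    "fp8_exaflops": 1.45,
    "fp4_exaflops": 2.9,
    "hbm4_capacity_tb": 31.0,
    "hbm4_bandwidth_pb_s": 1.4,   # assumption: this is memory bandwidth
    "scale_up_tb_s": 260.0,
}

# Per-GPU figures, converted to the next smaller decimal unit where needed.
per_gpu = {
    "fp8_petaflops": rack["fp8_exaflops"] * 1000 / GPUS_PER_RACK,
    "fp4_petaflops": rack["fp4_exaflops"] * 1000 / GPUS_PER_RACK,
    "hbm4_capacity_gb": rack["hbm4_capacity_tb"] * 1000 / GPUS_PER_RACK,
    "hbm4_bandwidth_tb_s": rack["hbm4_bandwidth_pb_s"] * 1000 / GPUS_PER_RACK,
    "scale_up_tb_s": rack["scale_up_tb_s"] / GPUS_PER_RACK,
}

for name, value in per_gpu.items():
    print(f"{name}: {value:.2f}")

# Going the other way: 72 GPUs at 432 GB each, expressed in TB.
print(f"72 x 432 GB = {GPUS_PER_RACK * 432 / 1000:.1f} TB")
```

The per-GPU memory capacity lands at about 431 GB, which squares with the 432 GB we expect on the MI455X once the rack total is rounded to 31 TB, and the scale up figure comes out at about 3.6 TB/sec per GPU, as stated.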

Here is how AMD stacks up Nvidia’s future Vera-Rubin compute engines in Oberon racks against its own Venice-Altair compute engines in Helios racks:

That looks pretty toe-to-toe to us. . . .

Not much is known about the MI430X variant of the Altair GPU, except that it is aimed at HPC centers that are also becoming AI centers at the national labs:

We strongly expect the MI430X to have a lot more 64-bit floating point performance per unit of energy and money, but how AMD is doing this remains to be seen. We got some hints earlier this year from Brad McCredie, vice president of GPU platforms at AMD and a long-time manager of IBM’s Power CPU efforts. In our conversation – Brad McCredie Is The Pedal To AMD’s Datacenter GPU Metal – we suggested that it might make sense to break chiplets into compute elements with distinct floating point precision, and then add the ones that had FP64/FP32, FP16, FP8, and FP6/FP4 precision as necessary, and also keep vector and tensor math units on distinct chiplets, too. He hinted that AMD was up to something without being precise.

Which brings us to the last important charts, the near term future of rackscale GPU systems at AMD:

Along with the “Verano” generation of Zen 7 and Zen 7c processors, AMD will roll out the MI500 series – we have not decided what their codename will be yet because we are giving AMD a chance to do this itself – while sticking with the “Vulcano” generation of DPUs it created for the Helios racks.

AMD is not saying much about the MI500 series GPUs, but here is a relative performance roadmap:

If this chart is drawn to scale, then the MI500 series should top out at around 72 petaflops of FP4 floating point oomph, 80 percent higher than the MI455X and a very nice 7.8X higher than the MI355X that is just ramping now.
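Working backwards from that estimate gives the FP4 throughput it implies for the two earlier parts. This sketch reads "80 percent higher" as a 1.8X multiple and "7.8X higher" as a 7.8X multiple, which is our interpretation, and it cross-checks the MI455X result against the 2.9 exaflops Helios rack figure spread over 72 GPUs.

```python
MI500_FP4_PF = 72.0   # petaflops, our estimate read off AMD's chart

implied_mi455x_pf = MI500_FP4_PF / 1.80   # "80 percent higher than the MI455X"
implied_mi355x_pf = MI500_FP4_PF / 7.8    # "7.8X higher than the MI355X"

# Cross-check: Helios rack FP4 throughput divided across its 72 GPUs.
helios_per_gpu_pf = 2.9 * 1000 / 72

print(f"Implied MI455X FP4: {implied_mi455x_pf:.1f} petaflops")
print(f"Implied MI355X FP4: {implied_mi355x_pf:.1f} petaflops")
print(f"Helios rack per GPU: {helios_per_gpu_pf:.1f} petaflops")
```

The implied MI455X figure of about 40 petaflops lines up with the roughly 40.3 petaflops per GPU that falls out of the Helios rack number, so the chart reading and the rack specs are at least consistent with each other.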

Companies do indeed buy roadmaps, not point products, but at some point, they need to buy something to get anything done. You always want to wait for the next generation compute engine to get more work done, but if you want to get work done, you can’t let that buyer’s remorse get in the way. AMD, Nvidia, and others can count on this – all the way to the bank, as it turns out.

Just look at all of that money for both AMD and Nvidia in the table we built above.
