This was a big week for MCP and AI releases, and we'll focus on the remote MCP news. The good news is that all three major LLM SDK providers (Gemini, Anthropic and OpenAI) now natively support MCP tools as a plug-in to their generation APIs. The bad news is that you need to manage the bearer token yourself, which makes OAuth-protected servers somewhat painful.

Let's run through it day by day.

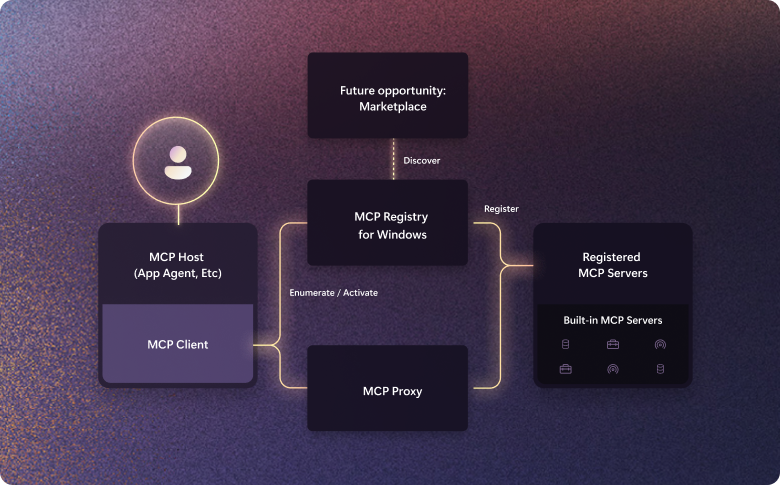

On Monday, Microsoft went first. As part of a wider push labeled "Agentic Windows", the relevant headline blog post was "Native support for Model Context Protocol (MCP) on Windows". To save you reading it: in classic Microsoft fashion, it doesn't leverage remote MCP servers or any of the existing local MCP servers. Instead, they are building a private registry, essentially a Windows MCP App Store with code signing, tool definitions that can't change at runtime, identity verification and declared privileges.

The App Store approach is a good attempt to address articles such as "The 'S' in MCP Stands for Security" and to let non-developers benefit from MCP. It was never safe to have the general public run arbitrary Node servers installed from npm, as the local GitHub setup does. It's unclear how existing remote MCP servers fit into this picture, even though, with OAuth, they also solve the mass-adoption problem.

On Tuesday, Google announced a host of new foundation models, killed many startups with mere bullet points, and made ~100 other announcements. Amid Google showing that shipping culture is still in its bones, relatively little was said about remote MCP. In summary: if you're using the Gemini SDK, you can now use MCP tools natively, including remote ones, as long as you manage the bearer token yourself.

By Wednesday, it was odd that OpenAI hadn't yet tried to steal Google's media lunch, so spending $6.5B on Jony Ive seemed a reasonable distraction. OpenAI also added native MCP tool-calling support to its Responses API, with detailed documentation too. Again, the authorization token must be managed by hand.
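
As a rough sketch of what "by hand" means, the function below builds (but does not send) a raw Responses API request that attaches a remote MCP server and forwards an OAuth access token through the tool's `headers`. The field names (`type: "mcp"`, `server_label`, `server_url`, `require_approval`, `headers`) follow OpenAI's launch documentation as we understand it; the model name, server label and function name are placeholders, so check the current docs before relying on this.

```python
import json
import urllib.request


def build_openai_mcp_request(
    api_key: str, mcp_url: str, mcp_token: str, prompt: str
) -> urllib.request.Request:
    """Build (but do not send) a Responses API request with a remote MCP tool.

    Hypothetical helper: the model and server label are illustrative placeholders.
    """
    body = {
        "model": "gpt-4.1",  # example model name; substitute your own
        "input": prompt,
        "tools": [
            {
                "type": "mcp",
                "server_label": "example",  # hypothetical label
                "server_url": mcp_url,
                "require_approval": "never",
                # The user's OAuth access token is forwarded to the MCP server
                # as a plain header; obtaining and refreshing it is on you.
                "headers": {"Authorization": f"Bearer {mcp_token}"},
            }
        ],
    }
    return urllib.request.Request(
        "https://api.openai.com/v1/responses",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # OpenAI key, not the MCP token
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen` would send it; with the official Python SDK, the same `tools` list goes to `client.responses.create(...)`. Note that two different bearer tokens are in play: your OpenAI API key and the end user's MCP access token.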

On the final big day, the home crowd, Anthropic, released Claude 4. Following the other LLM providers, they also added native MCP support to their API under the "MCP connector" branding. It comes with the same issue: you manage your own bearer token for OAuth-based remote MCP servers.
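
A comparable sketch for Anthropic's MCP connector, again only building the HTTP request with the standard library. It assumes the `mcp_servers` parameter, its `authorization_token` field and the `mcp-client-2025-04-04` beta header as described in the launch docs; the model ID, server name and helper name are illustrative.

```python
import json
import urllib.request


def build_anthropic_mcp_request(
    api_key: str, mcp_url: str, mcp_token: str, prompt: str
) -> urllib.request.Request:
    """Build (but do not send) a Messages API request using the MCP connector.

    Hypothetical helper: model ID and server name are illustrative placeholders.
    """
    body = {
        "model": "claude-sonnet-4-20250514",  # example Claude 4 model ID
        "max_tokens": 1024,
        "messages": [{"role": "user", "content": prompt}],
        "mcp_servers": [
            {
                "type": "url",
                "url": mcp_url,
                "name": "example-mcp",  # hypothetical server name
                # The OAuth access token you obtained and manage yourself.
                "authorization_token": mcp_token,
            }
        ],
    }
    return urllib.request.Request(
        "https://api.anthropic.com/v1/messages",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "anthropic-beta": "mcp-client-2025-04-04",  # opts in to the connector
            "content-type": "application/json",
        },
        method="POST",
    )
```

With the official Python SDK, the launch docs pass the same fields as `client.beta.messages.create(..., mcp_servers=[...], betas=["mcp-client-2025-04-04"])`.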

In all of these releases, managing the OAuth access token is left as an exercise for the reader. To try out MCP tools through their API, Anthropic's official docs suggest obtaining a token with the MCP Inspector tool, which is not great security practice. OAuth support in the official Python client was only completed recently. If you build MCP clients, you still need to manage your users' tokens on their behalf, and you need to do so securely.
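
What "securely" means will vary, but at minimum a client has to hand each user's current access token to the SDK call and refresh it before it expires. Below is a minimal in-memory sketch under stated assumptions: `OAuthToken`, `TokenStore` and the `refresh` callback (which would perform the OAuth 2.0 refresh-token grant against your authorization server) are hypothetical names, and a production client would persist tokens encrypted rather than in a dict.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class OAuthToken:
    access_token: str
    refresh_token: Optional[str]
    expires_at: float  # Unix timestamp when the access token expires


# Hypothetical: exchanges a refresh token for a fresh OAuthToken.
RefreshFn = Callable[[str], OAuthToken]


class TokenStore:
    """Per-user token cache (hypothetical). Kept in memory for illustration only."""

    def __init__(
        self,
        refresh: RefreshFn,
        skew_seconds: float = 60.0,
        clock: Callable[[], float] = time.time,
    ) -> None:
        self._tokens: Dict[str, OAuthToken] = {}
        self._refresh = refresh
        self._skew = skew_seconds
        self._clock = clock

    def save(self, user_id: str, token: OAuthToken) -> None:
        """Store the token obtained from the initial OAuth authorization flow."""
        self._tokens[user_id] = token

    def bearer_for(self, user_id: str) -> str:
        """Return a usable access token, refreshing it if it is about to expire."""
        token = self._tokens.get(user_id)
        if token is None:
            raise KeyError(f"no token for {user_id}; run the OAuth flow first")
        # Refresh slightly early so the token doesn't lapse mid-request.
        if self._clock() >= token.expires_at - self._skew:
            if token.refresh_token is None:
                raise PermissionError(f"token for {user_id} expired and cannot be refreshed")
            token = self._refresh(token.refresh_token)
            self._tokens[user_id] = token
        return token.access_token
```

The string returned by `bearer_for` is what you would place in the MCP server configuration of whichever SDK call you make. Injecting the clock keeps the expiry logic testable without waiting for real tokens to expire.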
