Here’s a link to the code on GitHub

I’ve been trying to understand profiling and performance tooling better, and there didn’t seem to be a better way than to try to write a cache aware hash map.

The hard part here is getting familiar with the tooling and semantics of tools on different platforms (hint: avoid OSX)

My only recommendation in this section is to avoid using any platform other than Linux for profiling. perf is a godsend, and Apple is a locked down nightmare of an OS. The closest thing to perf on OSX is dtrace but you need to disable System Integrity Protection (SIP) on Mac for that. This is a very involved process that involves rebooting your Mac. Even then, I don’t think you get access to hardware counters on Mac.

Contrast this with Linux, where I managed to capture all the requirements to get up and running with all profiling metrics in this GitHub issue. Additionally, there is great documentation for both strace and perf on Linux whereas documentation for Instruments is inadequate on OSX.

If you want full access to the hardware counters on Linux, I'd recommend not going for a virtual machine on the cloud. I attempted this with both a t-family instance and a c-family instance on AWS. Neither of them provide access to the underlying hardware counters despite the latter being a member of the bare metal family

I purchased a refurbished Dell laptop and installed Ubuntu on it.

Building A HashMap

Let’s dig into building the hashmap itself now. Everyone is familiar with the external API surface of a hashmap - 4 methods. There’s a few things we need to track internally too.

The load factor determines when the hash map needs to be resized. I’m setting it to 0.7 for all the examples here.

Building a hash map itself isn’t too hard, it’s figuring out how to handle collisions that’s challenging. There’s broadly 2 ways to do this:

- chaining

- open addressing

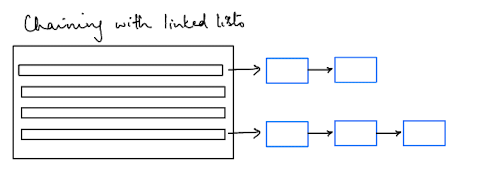

Chaining

The chained hash map is the most intuitive way to think about handling collisions - convert each entry into a linked list.

Now, you can append onto each entry any time you encounter the same index.

For chaining, the hashmap struct would look something like this

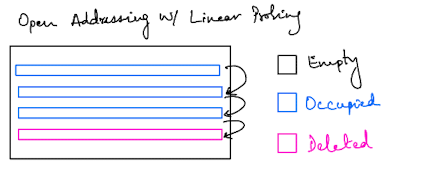

Open Addressing

The open addressing hash map, instead, tries to find the next empty slot in the vector. There’s a couple of different variations here:

We’ll use linear probing in this example to keep things simple. The important thing to note is that with open addressing we expect better cache performance, and therefore better overall performance. If you want a detailed explanation of this, look at this SO answer. The tl;dr is that following the pointers in a linked list leads to cache trashing since the nodes are not “clustered” together in memory.

For open addressing the hash map struct would look something like this

Cache Hardware

Now, let’s try and understand why we expect better performance from our open addressing implementation vs chaining implementation.

CPU caches come in 3 flavours - L1, L2 and a shared L3 cache. L3 connects out to RAM.

A CPU will first check its L1 cache for some data. Upon failing to find it in L1, it will search L2, then L3 and eventually RAM.

We want the CPU to stay as far up this hierarchy as possible because each cache miss means an extra fetch which wastes cycles.

Each cache has a unit called cache lines - these are the actual storage units. For instance, an L1 cache of size 128 KB with a 64 byte cache line, has 2,048 cache lines. We want our data to fit in these cache lines to prevent additional fetches.

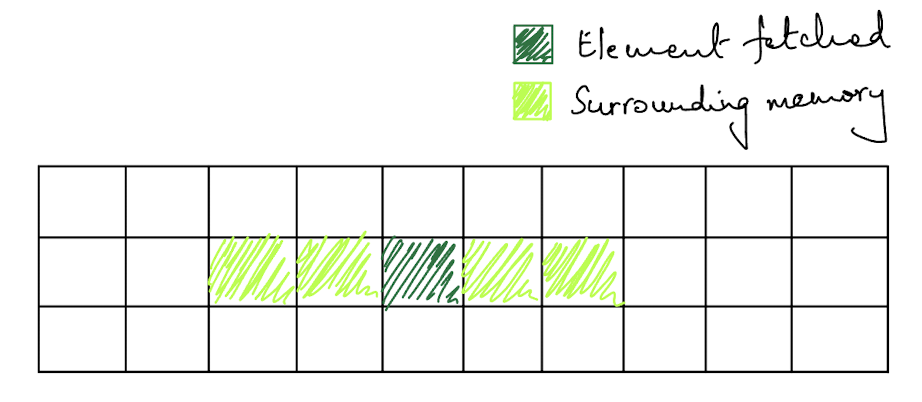

Now, when the program first loads and a cache miss is encountered, the load will reach RAM and attempt to read the data. However, when the memory is read, the principle of spatial locality is followed and some surrounding memory is read as well.

If we are storing our data in a Vec with as few memory hops as possible, this is great because it is unlikely we’ll encounter a cache miss soon. However, if our data structure has a fragmented memory approach, like in Vec<LinkedLinked<T>>, then it is more likely we’ll end up encountering a cache miss again in the near future.

So, what we should expect to see is that the cache performance of the open addressing approach is better than the chaining approach.

Cache Performance

Let’s run our actual program and collect some performance metrics. I wrote some basic benchmarks and a test harness that you can see here and here.

Below are the cache counter stats, followed by CPU stats. For the cache stats, think of the first 2 lines as showing a rough aggregate. The LLC below stands for last level cache which is the L3 cache.

You can find a summary of the important metrics in the table below

| Cache Miss Rate | 78.35% | 77.83% |

| L1-DCache Miss Rate | 3.43% | 4.95% |

| LLC Cache Miss Rate | 73.67% | 65.02% |

| Execution Time (seconds) | 14.43 | 9.07 |

| Instructions | 34,737,785,689 | 26,051,667,680 |

The results are surprising to say the least. Open addressing performs better overall as expected, but not for the reasons we expected. We expected better cache performance from open addressing but don’t see that at all.

In fact, the majority of the gains come from having to execute fewer instructions for open addressing. There’s no walking the chain and loading each node from memory in open addressing.

But, why is the cache performance so poor in open addressing? In fact, the cache performance of open addressing is actually worse at the L1 level than chaining and only slightly better at the L3 cache level.

To figure out why we are getting such poor cache performance, we need to understand the memory layout of our data structures and the size of our cache. This will help us figure out how many elements can be placed in a single cache line.

Cache Sizing

Figuring out the size of the cache on Linux is straightforward. You run lscpu | grep "cache" and it gives you the information

This tells me that I have 4 cores, each with an L1 cache (128KiB) and an L2 cache (1MiB) and finally one shared L3 cache (8MiB).

Next, we need to figure out the size of the cache line and it’s easy to do that with cat /proc/cpuinfo | grep "cache_alignment" which shows 64 bytes. So, we have 2,048 cache lines, since 64 bytes * 2048 = 128KiB

Memory Layout

Now, this is where the real meat of the problem is since this is what we control. Let’s look back at our data structures we were using again.

Before we get into this, a quick reminder that a String in Rust is represented by 24 bytes (8 for the heap pointer, 8 for the size & 8 for the capacity).

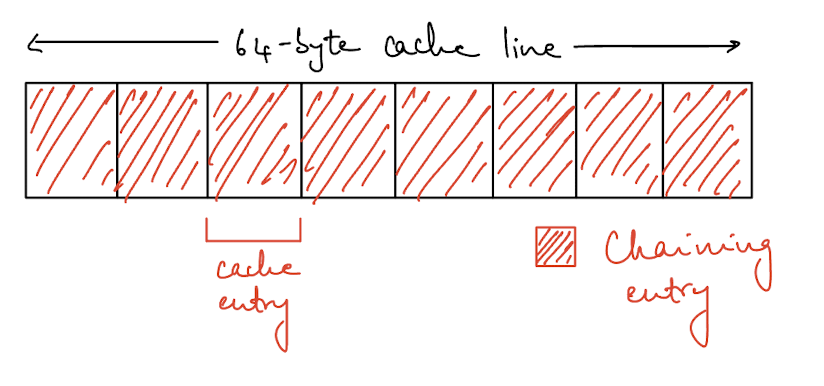

For chaining, we are using a linked list to store the data. We can figure out the size of the LinkedList struct using std::mem::size_of::<LinkedList>() and when K and V are of type String, this turns out to be 8 bytes. With a cache line of 64 bytes, we can fit 8 entries per cache line. Here, LinkedList itself is an Option<Box<Node<K, V>>>

Some of you might be curious why the above is only 8 bytes when Option<T> typically takes an extra byte for it's discriminant so the overall size should be 16 bytes including padding (8 + 1).

This is because of the Rust compiler's null pointer optimization, which is suprisingly hard to find a resource for. In our case, the type LinkedList is an Option<Box<T>> and Box<T> is a non-nullable type, so Option<Box<T>> is the same size as Box<T>

Here’s a visualisation of how that is being laid out in the cache.

This is good in terms of layout but each entry ends up being a pointer into the heap, so that’s not great because that means we always end up doing an extra fetch for each entry.

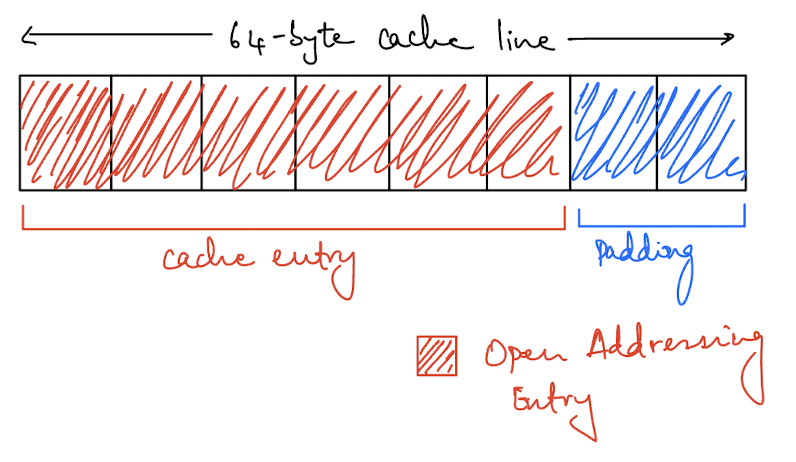

For open addressing, we are using an Enum where the memory layout is 48 bytes when K and V are of type String. It’s 48 bytes because the largest variant is a tuple of String and each String type is 24 bytes.

Once we have padding alignment, we can only fit 1 element of our open addressing variant per cache line. This is significantly worse than the chaining layout but each entry here isn’t a pointer into heap memory (well, it is because the type is a String but we’ll get to that).

In fact, since we can fit 8 entries per cache line with the chaining approach, we see a slightly better performance in the L1 cache there (3.53% vs 4.95%). However, you can see the cost of pointer chasing with the total number of loads in the L1 cache (8.1B vs 5.5B). Even though chaining is more compact and cache efficient, the overall cost of pointer chasing ends up dominating the whole operation and open addressing performs better.

Types And Pointer Chasing

So, we know that memory layout of our data structures can have a major performance impact, but so could the actual types we use in the hash map.

Strings inherently require pointer chasing. Regardless of how efficient we are with our memory layout for our struct, having a String in the key and value significantly impacts the cache performance.

However, if we replace the key-value pairs with u64 then for open addressing each entry only takes 24 bytes now instead of 48, which means 2 entries per cache line instead of 1. So, I tried running the same profiling with u64 key-value pairs.

Overall performance is considerably better, which validates our theory about u64 being better for cache performance but we still need to improve on our cache performance with open addressing.

Some of you may have noticed that when I had Entry<String, String> above, I needed 48 bytes whch is just the size of the two String pointers.

However, when I had Entry<u64, u64>, the size of the entry was 24 bytes (and not just 16 bytes which is the size of two u64). What gives?

The Rust compiler is doing something called niche optimization to reduce the size of the memory layout. Since, the String is backed by a non-nullable type in Vec, the compiler can use that to represent the null case. It can't do the same for u64 since 0 is actually a valid value there.

Compact Memory Layout

Let’s design a more compact memory layout, something that will fit more easily into our cache. We’ll call this our open addressing compact variation, since it builds on top of open addressing.

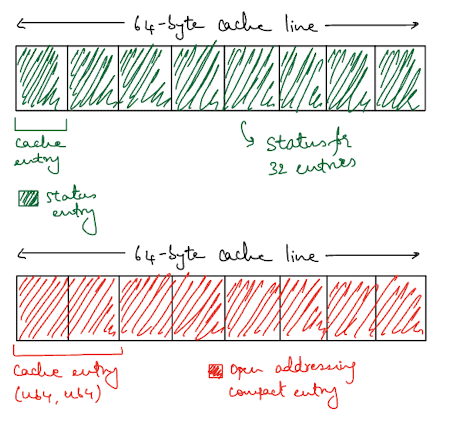

We can try splitting up the state (whether the entry is free, occupied or tombstoned) and the actual data into separate vectors.

Now, when we have K and V as u64, each entry in the entries vector will be a tuple of size 16 bytes, which means 4 of them should fit in one cache line. To denote whether an entry is occupied, deleted or empty we’ll use 2 bits from the status_bits vector for each entry.

This means that we have to load two separate vectors into our cache but we still end up being more efficient. Each byte in the status_bits vector can hold the status of 4 entries, so a single entry of 8 bytes in the cache can hold the status of 32 entries.

Here’s some code that shows the indexing scheme. If you’re interesting in seeing the code in full context, look here.

Now, let’s try running our profiling again and see how we fare.

| Cache Miss Rate | 67.71% | 77.33% | 50.24% |

| L1-DCache Miss Rate | 5.91% | 9.24% | 6.77% |

| LLC Cache Miss Rate | 56.98% | 59.64% | 27.90% |

| Execution Time (seconds) | 6.27 | 2.41 | 1.616 |

The overall cache misses have dropped to 50.24% for the open addressing compact variation, which is a ~50% improvement over open addressing. It also improves on the cache miss rate from the chaining implementation.

The more stark drop is in the LLC case where it has dropped from the high 50% range to 27.90%. That’s a change of over 50%! And, we can see this change reflected in the overall processing time of the load test too, which is a 1.5x improvement!

Conclusion

Being performant with a cache line isn’t just about using a contiguous data structure like a Vector over a non-contiguous data structure like a LinkedList. It matters far more how many elements you can fit into a cache line and how many pointer chases you go through to get to the actual data.

In the case of Strings, it seems we are always doomed to an additional pointer chase which is why u64 as keys is more cache performant. The best you could do here is to inline smaller strings onto the stack with something like smol_str, but this only works for strings upto 23 bytes long.

Thank you to Debasish Ghosh for reviewing a draft of this post.

.png)