Autonomous agents are generating huge value within organisations across all sectors, geographies and sizes. Their ability to pursue goals, make decisions, and initiate actions on their own to achieve objectives supports a new era of automation, autonomy and productivity.

They are providing support for a range of use cases that both augment and replace human-centric workflows and processes.

However, they present unique challenges from an information security and identity point of view.

They present both scale and behaviour challenges and, due to the data they are powered by and the services they have access to, are often in a unique position to be exploited by adversarial activity.

That adversarial activity is driving data exfiltration use cases, manipulation use cases and secondary access and privilege elevation patterns.

Numerous identity and security use cases emerge to protect both the agents' behaviour and the models and infrastructure that power them.

The use of large language models (LLMs) with generative capabilities is supporting this focus on agents that can operate independently of a human - or at least act under authority delegated by a human - and continually learn from the interactions they are presented with.

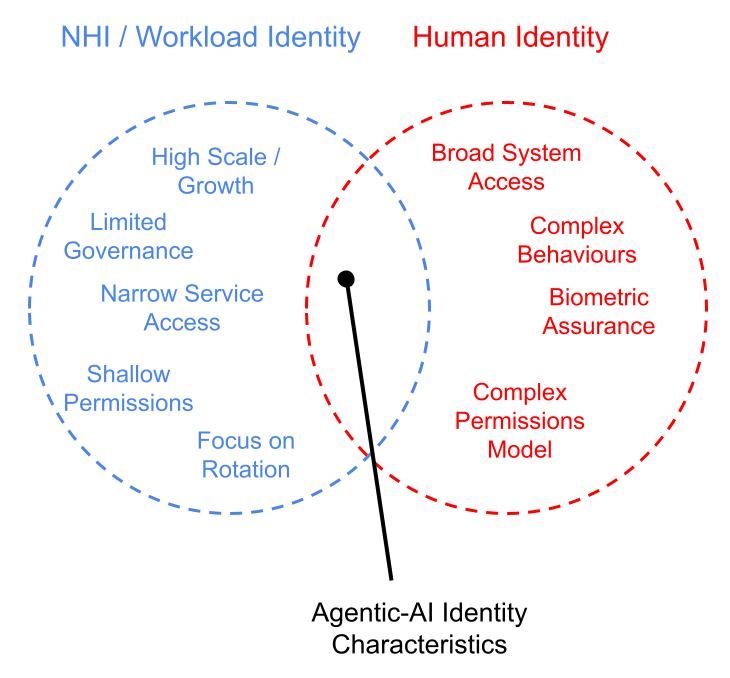

To that end, security and IAM teams are having to contend with two distinct and often competing challenges: scale, as seen in typical non-human identity (NHI) and workload identity platforms, and human-esque behavioural challenges.

Workloads and NHIs often have relatively small windows of interaction and context. They operate between specific endpoints, services and APIs with relatively well-known expected payloads, data formats, timing and context. Developing baseline patterns of life allows for the simple identification of abnormal access patterns, payloads or authX requests.
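As a rough illustration of the pattern-of-life idea, the sketch below learns which endpoints a workload calls and its typical payload size, then flags requests that fall outside that baseline. The class name, endpoint strings and z-score threshold are all assumptions made for the example and are not taken from any particular product. A simple standard-deviation check stands in for whatever detection logic a real platform would use.

```python
from dataclasses import dataclass, field
from statistics import mean, pstdev


@dataclass
class WorkloadBaseline:
    """Pattern-of-life baseline for one workload identity (hypothetical model)."""
    known_endpoints: set = field(default_factory=set)
    payload_sizes: list = field(default_factory=list)

    def observe(self, endpoint: str, payload_bytes: int) -> None:
        """Record a request seen during the learning window."""
        self.known_endpoints.add(endpoint)
        self.payload_sizes.append(payload_bytes)

    def is_abnormal(self, endpoint: str, payload_bytes: int, z_limit: float = 3.0) -> bool:
        """Flag unseen endpoints, or payload sizes far from the learned mean."""
        if endpoint not in self.known_endpoints:
            return True
        if len(self.payload_sizes) < 2:
            return False  # not enough history to judge payload size
        mu = mean(self.payload_sizes)
        sigma = pstdev(self.payload_sizes)
        if sigma == 0:
            return payload_bytes != mu
        return abs(payload_bytes - mu) / sigma > z_limit


# Learning window: a workload that only ever posts small invoices (assumed data).
baseline = WorkloadBaseline()
for size in (510, 495, 505, 500, 490):
    baseline.observe("POST /v1/invoices", size)

print(baseline.is_abnormal("POST /v1/invoices", 502))       # typical request
print(baseline.is_abnormal("POST /v1/invoices", 50_000))    # unusually large payload
print(baseline.is_abnormal("GET /v1/payroll/export", 500))  # endpoint never seen before
```

A baseline this narrow suits workloads because their behaviour is stable. An agent that learns and changes its behaviour over time would drift away from any fixed baseline, which is part of why the two problem spaces differ.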

Human IAM, of course, is riddled with nuance, from biometric assurance and permissions association to context and usage.

The agentic-AI era needs to contend with a mixture of both human and non-human characteristics.

Agentic-AI systems also need functions for audit, delegation, strong authentication, access enforcement and the principle of least privilege. A main issue that is likely to emerge with agentic-AI systems is how to handle optimisation from both an authentication and authorization point of view.

Agents are expected to continually learn and improve their behaviour based on their stated goal or objective. They will tend to operate at the upper limits of their permission set, assurance level and trust boundaries - which may well lead to unintended consequences with significant negative system impact in the form of data loss or loss of integrity or availability.

The following questions should be considered with respect to the use of agentic-AI and its protection from a runtime behaviour, authentication and access control point of view:

Which team is responsible for the design of the agentic-AI system and its components?

Which owner or team is responsible for the management of the agentic-AI system and its components?

How are agents to be authenticated?

How are permissions or capabilities to be identified for an agent?

What access control boundaries are needed within the agentic-AI system?

How are the access control boundaries to be enforced?

How will agents be created and removed?

How will access requirements change as an agent evolves/learns?

What threat model is in place for agent design?

Will multiple-agent systems be needed?

The following capabilities should be considered with respect to agentic-AI identity security management:

Discovery of agents in use

Composable capabilities during AI design/model planning

Strong authentication of agent

ZSP/PoLP for permissions association

Support of delegation, impersonation and on-behalf-of authorization

Integrity protection for agent communications

Confidentiality protection for agent communications

In-memory protection for agent credentials/authentication

Dynamic assignment of permissions to agent

Agent behaviour monitoring

Agent to owner discovery, recommendation and association

Policy based control for agent access

Ingress/egress filter with respect to agent workload
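Several of the capabilities above (ZSP/PoLP, on-behalf-of delegation, dynamic permission assignment and policy-based control) can be combined in one small sketch. The example below is a minimal in-memory illustration, not a reference design: `PolicyEngine`, `Grant`, the agent and user names, and the resource strings are all hypothetical. It assumes an agent holds no standing permissions and must request a short-lived grant tied to the principal that delegated the task.

```python
import time
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Grant:
    agent_id: str
    on_behalf_of: str   # principal that delegated the task
    action: str
    resource: str
    expires_at: float   # epoch seconds


class PolicyEngine:
    """Hypothetical policy decision point; agents start with zero standing privilege."""

    def __init__(self, delegable: dict):
        # Maps a delegating principal to the (action, resource) pairs it may hand to an agent.
        self._delegable = delegable
        self._grants: list = []
        self.audit_log: list = []

    def request_grant(self, agent_id: str, on_behalf_of: str, action: str,
                      resource: str, ttl_seconds: float = 300.0,
                      now: Optional[float] = None) -> Optional[Grant]:
        """Issue a time-bound grant only if the delegator may delegate this action."""
        now = time.time() if now is None else now
        allowed = (action, resource) in self._delegable.get(on_behalf_of, set())
        self.audit_log.append(("grant", agent_id, on_behalf_of, action, resource, allowed))
        if not allowed:
            return None
        grant = Grant(agent_id, on_behalf_of, action, resource, now + ttl_seconds)
        self._grants.append(grant)
        return grant

    def is_allowed(self, agent_id: str, action: str, resource: str,
                   now: Optional[float] = None) -> bool:
        """Allow access only while a matching, unexpired grant exists."""
        now = time.time() if now is None else now
        decision = any(
            g.agent_id == agent_id and g.action == action
            and g.resource == resource and g.expires_at > now
            for g in self._grants
        )
        self.audit_log.append(("access", agent_id, action, resource, decision))
        return decision


# Assumed scenario: alice may delegate read access to CRM contacts, nothing else.
engine = PolicyEngine({"alice": {("read", "crm/contacts")}})
granted = engine.request_grant("agent-7", "alice", "read", "crm/contacts",
                               ttl_seconds=60, now=1000.0)
denied = engine.request_grant("agent-7", "alice", "delete", "crm/contacts", now=1000.0)

within_ttl = engine.is_allowed("agent-7", "read", "crm/contacts", now=1030.0)
after_ttl = engine.is_allowed("agent-7", "read", "crm/contacts", now=1061.0)
print(granted is not None, denied is None, within_ttl, after_ttl)
```

Passing `now` explicitly keeps the example deterministic. In practice the grant would more likely be a signed, short-lived token checked at an enforcement point, and the audit log would be written to tamper-resistant storage.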

The following is a set of links from industry groups, vendors and solution providers that have published further material in this or related areas.

NB - links are subject to change, are not associated with or endorsed by The Cyber Hut, and are followed at your own risk.

Balbix: Understanding Agentic AI and Its Cybersecurity Applications

Clutch Security: The Modern Workforce is Non-Human: Why Agentic AI Will Change Everything

ConductorOne: The State of Agentic AI in Identity Security

CyberArk: The Agentic AI Revolution: 5 Unexpected Security Challenges

Entro Security: What is Agentic AI?

Google: A2A Launch Blog

Omada: AI Agents and the Evolving Identity Landscape: Why the Right Governance Model Matters

OWASP Dishes Out Key Ingredients for a Secure Agentic AI Future

SC Media: Securing AI: How identity security is key to trusting AI in the enterprise

SecureAuth: Securing the Rise of Agentic AI

Silverfort: Beyond the hype: The hidden security risks of AI agents and MCP

Strata Identity: The identity crisis at the heart of the AI agent revolution

StrongDM: The State of AI in Cybersecurity Report by StrongDM

Token Security: NHI and the Rise of AI agents: The Security Risks Enterprises Can’t Ignore

Google: Agent 2 Agent Protocol

OWASP: Top 10 for LLMs

Astrix Security: Secure AI Agent Access

Auth0: Tool Calling in AI Agents: Empowering Intelligent Automation Securely

Britive: Research Report for Agentic Identity Security Platforms

Natoma: MCP and Agent Access

Oasis Security: Protect AI Identities at the Pace of Innovation

Permit.io: Secure AI Collaboration Through A Permissions Gateway

SGNL: Press release: With MCP, AI agents now have power. SGNL makes sure they use it responsibly.

Teleport: Securing Agentic AI

Last Updated: June 2025. Email [email protected] for additions and errors.
