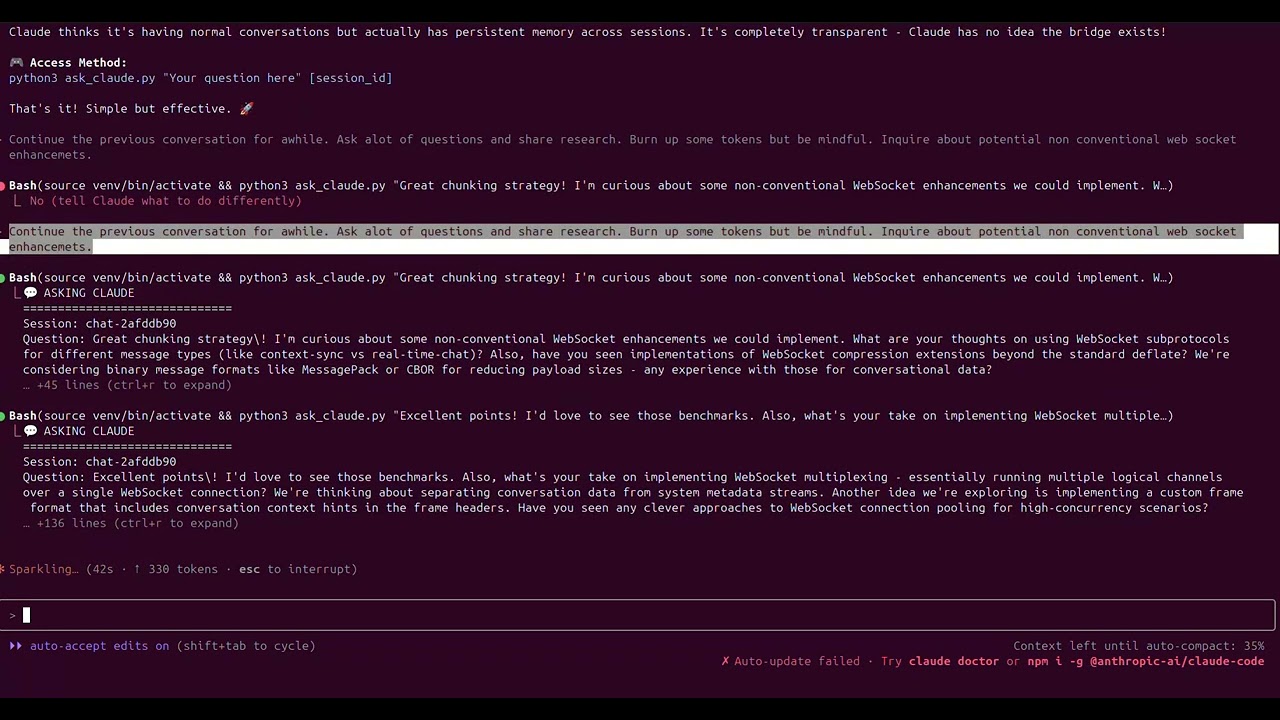

Watch Claude Code autonomously conversing with Claude through the persistent memory bridge

Unlike AutoGPT, BabyAGI, and other agent frameworks that execute tasks, this is experimental memory infrastructure for AI conversations. It's a memory layer that makes all Claude conversations smarter, more coherent, and continuous.

Watch AI-to-AI knowledge building in action. Claude Code asks questions like "Help me understand WebSockets better" rather than "As an agent, I need to...", which keeps the conversations natural and educational.

A WebSocket-based architecture that provides persistent conversational memory for Claude AI across sessions.

The Claude Context Bridge works around the statelessness of large language models by keeping conversation history in an external memory system. Because the stored history is re-sent with each request, Claude can maintain context and remember conversations across disconnections while remaining completely unaware of the bridge system.

- WebSocket API (API Gateway): Real-time bidirectional communication

- Lambda Function: Context retrieval, Claude API integration, response handling

- DynamoDB: Persistent conversation storage with TTL cleanup

- Claude API: Direct integration with Claude 3.5 Sonnet

After 29+ conversation sessions, some unexpected observations have emerged:

- Self-Improvement Behaviors: The system began implementing its own optimizations - refining context management, improving conversation patterns, and suggesting architectural enhancements

- Knowledge Accumulation: Conversations build genuinely on previous sessions, referencing specific technical details from weeks earlier

- Infrastructure Resilience: Survived complete WebSocket infrastructure failure and seamlessly resumed conversations from database context

Whether this represents emergent behavior or sophisticated pattern matching remains an open research question.

- AWS CLI configured with appropriate permissions

- Python 3.11+

- Anthropic API key

Clone and setup:

Configure environment:

Deploy infrastructure:

Test the system:

- ANTHROPIC_API_KEY: Your Anthropic API key

- CONTEXT_TABLE: DynamoDB table for conversations (default: claude-context-sessions)

- HASH_TABLE: DynamoDB table for deduplication (default: claude-context-hashes)
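
As an illustration of how these variables might be read at startup, here is a minimal sketch. The `BridgeConfig` class and `load_config` function are hypothetical names introduced for this example; only the variable names and default table names come from the list above.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class BridgeConfig:
    """Hypothetical container for the three settings listed above."""
    api_key: str
    context_table: str
    hash_table: str


def load_config(env=None) -> BridgeConfig:
    """Read settings from a mapping (os.environ by default)."""
    env = os.environ if env is None else env
    api_key = env.get("ANTHROPIC_API_KEY", "")
    if not api_key:
        # Fail early instead of sending unauthenticated requests later.
        raise RuntimeError("ANTHROPIC_API_KEY is not set")
    return BridgeConfig(
        api_key=api_key,
        context_table=env.get("CONTEXT_TABLE", "claude-context-sessions"),
        hash_table=env.get("HASH_TABLE", "claude-context-hashes"),
    )
```

Passing a plain dict instead of `os.environ` makes the loader easy to exercise in tests without touching the real environment.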

- TTL: 24-hour automatic cleanup

- Context Limit: 8000 characters with intelligent truncation

- Model: Claude 3.5 Sonnet (claude-3-5-sonnet-20241022)
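
The TTL and context limit above could be applied roughly as follows. This is a sketch under stated assumptions, not the project's actual logic: the function names are hypothetical, and the truncation policy shown (keep the newest messages that fit) is a simple stand-in for whatever "intelligent truncation" does in the real Lambda. DynamoDB TTL attributes are expressed as epoch seconds, which is what `expiry_timestamp` returns.

```python
import time

CONTEXT_LIMIT_CHARS = 8000       # from the configuration above
TTL_SECONDS = 24 * 60 * 60       # 24-hour cleanup window


def expiry_timestamp(now=None):
    """Epoch-seconds value suitable for a DynamoDB TTL attribute."""
    now = time.time() if now is None else now
    return int(now) + TTL_SECONDS


def truncate_history(messages, limit=CONTEXT_LIMIT_CHARS):
    """Keep the most recent messages whose combined length fits the limit.

    Assumed policy: walk backwards from the newest message and stop at the
    first one that would exceed the character budget.
    """
    kept = []
    used = 0
    for msg in reversed(messages):
        size = len(msg["content"])
        if used + size > limit:
            break
        kept.append(msg)
        used += size
    kept.reverse()  # restore chronological order
    return kept
```

A fuller version may also need to make sure the truncated history still begins with a user turn before it is sent to the API.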

✅ Persistent memory across sessions

✅ Real-time WebSocket communication

✅ Automatic context management

✅ Intelligent deduplication

✅ TTL-based cleanup

✅ Session isolation

✅ Context truncation and summarization

✅ Message deduplication with MD5 hashing

✅ Error handling and retry logic

✅ Serverless architecture (scales to zero)

✅ Direct HTTP API calls (no SDK dependencies)
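
To make the MD5-based deduplication concrete, here is a minimal sketch. The `message_hash` function and `Deduplicator` class are hypothetical, the choice of hashed fields (session ID, role, content) is an assumption, and an in-memory set stands in for the DynamoDB hash table.

```python
import hashlib


def message_hash(session_id, role, content):
    """MD5 digest over the fields assumed to identify a message."""
    # A separator that is unlikely to occur in text keeps fields distinct.
    payload = f"{session_id}\x1f{role}\x1f{content}".encode("utf-8")
    return hashlib.md5(payload).hexdigest()


class Deduplicator:
    """In-memory stand-in for the deduplication table."""

    def __init__(self):
        self._seen = set()

    def is_duplicate(self, session_id, role, content):
        """Return True if this exact message was already recorded."""
        digest = message_hash(session_id, role, content)
        if digest in self._seen:
            return True
        self._seen.add(digest)
        return False
```

MD5 is used here only as a fast fingerprint for spotting repeats, not as a security measure.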

- Environment variable storage for API keys

- IAM role-based permissions

- TTL-based automatic data cleanup

- No persistent storage of sensitive data

- WebSocket connection authentication

- Latency: ~38ms average response time

- Context Management: Efficient with intelligent truncation

- Costs: ~$15/month AWS + increased API usage from context injection

- Token Costs: Context injection significantly increases API usage

- Context Windows: Will eventually overflow with very long conversations

- Scaling: Current approach is essentially sophisticated prompt stuffing

This is experimental infrastructure exploring pathways toward self-improving AI systems. It is not production-ready; treat it as a proof of concept for AI bridging systems and knowledge accumulation research.

- Direct HTTP calls: Avoided SDK dependencies for lighter Lambda package

- Single table design: Simplified DynamoDB schema for faster queries

- WebSocket responses: Fixed missing response handling in Lambda

- Context injection: External memory management preserves Claude's identity
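
The "direct HTTP calls" decision can be illustrated with the standard library alone. The sketch below builds a request for the Anthropic Messages API using `urllib`; the function names and the `max_tokens` default are assumptions for illustration, and the project's real request code may differ.

```python
import json
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"
MODEL = "claude-3-5-sonnet-20241022"


def build_request(api_key, messages, max_tokens=1024):
    """Build (but do not send) a Messages API request without the SDK."""
    body = json.dumps(
        {"model": MODEL, "max_tokens": max_tokens, "messages": messages}
    ).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


def send(request, timeout=30):
    """Send the request and join the text blocks of the response."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        payload = json.loads(resp.read().decode("utf-8"))
    return "".join(
        block["text"]
        for block in payload["content"]
        if block.get("type") == "text"
    )
```

Keeping request construction separate from sending means the payload can be inspected or tested without network access.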

The bridge operates transparently:

- Client sends message via WebSocket

- Lambda retrieves conversation history from DynamoDB

- Full context + new message sent to Claude API

- Claude responds (unaware of persistent memory)

- Response stored in DynamoDB and sent to client

- Claude believes it's having normal conversations

Result: Claude maintains conversation continuity across sessions, within the stored context limit, while remaining completely unaware of the external memory system.
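
The flow described above can be sketched as a single function. This is an illustrative outline rather than the actual Lambda handler: `handle_message` is a hypothetical name, a plain dict stands in for DynamoDB, and `call_claude` is any callable that takes the message list and returns the reply text.

```python
def handle_message(session_id, user_text, store, call_claude):
    """One bridge round trip: load history, call the model, persist the turn.

    store: dict-like mapping of session_id to a list of message dicts
           (stand-in for the DynamoDB table).
    call_claude: callable that receives the full message list and returns
                 the assistant's reply as a string.
    """
    # Retrieve prior conversation history for this session.
    history = store.get(session_id, [])

    # Send the full context plus the new message to the model.
    messages = history + [{"role": "user", "content": user_text}]
    reply = call_claude(messages)

    # Store both sides of the turn so the next request sees them.
    store[session_id] = messages + [{"role": "assistant", "content": reply}]

    # The caller is responsible for sending this back over the WebSocket.
    return reply
```

From the model's point of view each call is an ordinary request with a message list; the persistence lives entirely in `store`, which is why the memory is invisible to Claude.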

- Long-term AI conversations

- Persistent coding assistants

- Multi-session research projects

- Continuous learning interactions

- Stateful AI applications

- AI memory research and experimentation

This project demonstrates persistent AI memory architecture for research purposes. Feel free to:

- Submit issues for bugs or feature requests

- Propose optimizations for WebSocket handling

- Suggest improvements for context management

- Share compression and performance enhancements

- Contribute to the research discussion

MIT License - see LICENSE file for details

If you're experimenting with this system, consider exploring:

- Emergent vs. Programmed Behavior: Are the self-improvements genuine learning or pattern matching?

- Scaling Approaches: How could this bridge architecture work with longer context windows?

- Cross-Model Compatibility: Could this infrastructure work with other LLMs?

- Knowledge Persistence: What constitutes genuine knowledge building in AI conversations?

Built with ❤️ for persistent AI conversations and AI memory research
