Somewhere in the last few months, something fundamental shifted for me with autonomous AI coding agents. They’ve gone from a “hey this is pretty neat” curiosity to something I genuinely can’t imagine working without. Not in a hand-wavy, hype-cycle way, but in a very concrete “this is changing how I ship software” way.

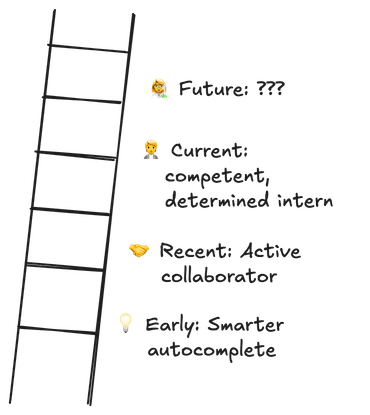

If I imagine a ladder of our evolving relationship with coding agents, we’ve climbed to a new rung. We’ve moved from “smarter autocomplete” and “over the shoulder helper” to genuine “delegate-to” relationships - they’re like eager and determined interns.

The AI Coding Capability Ladder
=====================================

🧑💼 Current: Conscientious Intern ← We are here
├─ Autonomously complete small tasks
├─ Patient debugging assistance
└─ Code review & analysis

🤝 Recent: Active Collaborator
├─ Real-time pair programming
├─ Contextual suggestions
└─ Intelligent autocomplete

💡 Early: Smarter Autocomplete
├─ Basic Q&A
├─ Syntax help
└─ Documentation lookup

The journey so far

I’ve really enjoyed using Claude and ChatGPT directly to help me code faster over the past couple of years. They’re fantastic for those moments when you’re staring at an error message that makes no sense, or trying to understand some gnarly piece of library code, or just want to sanity-check an approach. Having a conversation with an AI has become as natural as reaching for Stack Overflow used to be (and honestly more reliable - no sifting through 47 different answers from 2009 that don’t quite apply to your situation).

Cursor, as a human augmentation system, has made a remarkable impact. The inline suggestions and contextual understanding fundamentally changed how I write code. I’m definitely never going back to coding without an assistant - the productivity gains are just too substantial. When you’re in flow and Cursor is suggesting exactly the next line you were about to type, it’s magic. It feels like having a really good pair programming partner who happens to have perfect recall of recently touched files and some knowledge of every codebase you’ve ever worked on.

But here’s the thing: while these human-in-the-loop tools were transformative, the fully autonomous experiences just weren’t close to good enough until recently. I’d tried various “AI does the whole task” tools and they’d inevitably get stuck and fail to produce anything meaningful. Each time I’d regret having spent the time setting them up, having been assured that no, this time it really works.

The autonomous revolution, or “this time it really works!”

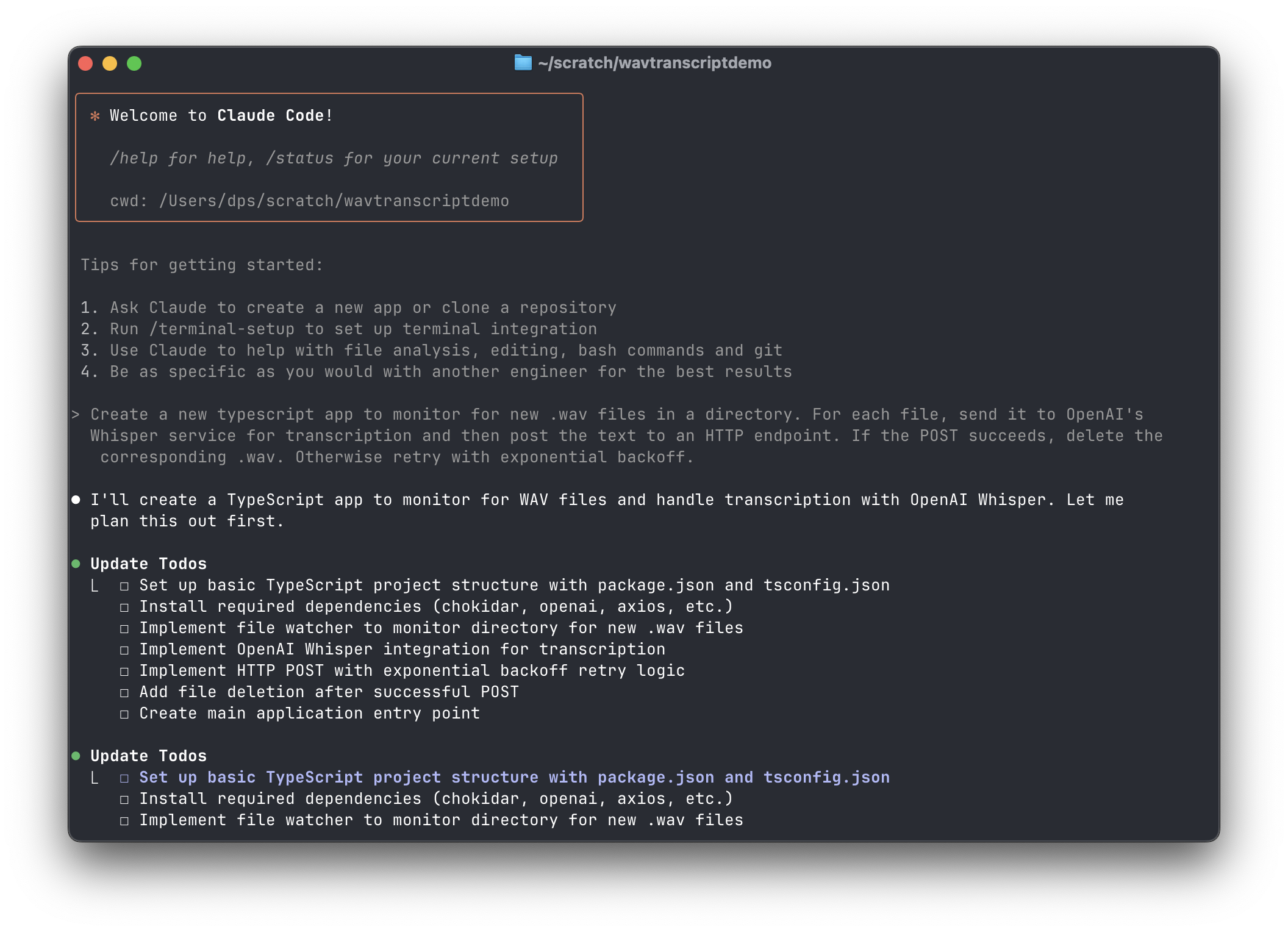

Now Claude Code and OpenAI Codex are routinely completing whole tasks for me, and it’s genuinely changed everything.

For personal tools, I’ve completely shifted my approach. I don’t even look at the code anymore - I describe what I want to Claude Code, test the result, make some minor tweaks with the AI and if it’s not good enough, I start over with a slightly different initial prompt. The iteration cycle is so fast that it’s often quicker to start over than trying to debug or modify the generated code myself. This has unlocked a level of creative freedom where I can build small utilities and experiments without the usual friction of implementation details. Want a quick script to reorganize some photos? Done. Need a little web scraper for some project? Easy. The mental overhead of “is this worth building?” has basically disappeared for small tools. I do wish Claude Code had a mode where it could work more autonomously - right now it still requires more hands-on attention than I’d like - but even with that limitation, the productivity gains are wild.

For work, I increasingly give small bugs directly to Codex. It can handle simple ones completely, but even for complex issues, it makes a reasonable start. Since it runs in a fully encapsulated environment, it actually takes a bunch of the schlep out of making branches and PRs too. My workflow now often looks like: describe the bug, let Codex take a first pass, check out the branch and test the change, give it more direction if needed, and sometimes just pick it up and finish it myself. I always review code going into my production app, of course, but the initial heavy lifting is increasingly automated.

For code review, we’re finding Claude Code’s GitHub Actions integration remarkably useful. It frequently finds issues I’ve overlooked and responds to context in our repo really well. It’s like having an extra set of experienced eyes that never gets tired or misses obvious mistakes.

The previous rung is getting stronger too… a personal debugging breakthrough

Progress is, of course, not limited to autonomous mode. The frontier models have dramatically improved in their ability to assist beyond simple scenarios. They no longer simply paraphrase docs and SO posts, but feel like they can reason when given the right prompts and context. I had an experience last Friday that illustrates this pretty clearly. It was a moment where Claude genuinely helped me solve something I’d been banging my head against.

The bug

We had a subtle issue in one of our OAuth integrations. Users would complete authentication, tokens would be exchanged successfully from the server, but then their session would mysteriously disappear from the database shortly after. The timing made it almost impossible to catch with traditional debugging methods. This is the kind of thing I would never have asked AI to help with 6 months ago; there would have been no chance it would be fruitful.

But after I’d spent a solid 45 minutes adding printfs and trying to trace the flow manually, I decided on a whim to try asking Claude (Sonnet 4, with extended thinking turned on) for help. Initially Claude suggested a bunch of obvious things that clearly weren’t broken. Classic AI assistant behavior: “have you tried turning it off and on again?” level suggestions.

The breakthrough: ASCII sequence diagrams

Instead of diving straight into more code analysis, I tried a different approach. I asked Claude to read through our OAuth implementation and create an ASCII sequence diagram of the entire flow.

This turned out to be the key insight. The diagram mapped out every interaction. Having a visual representation immediately revealed the complex timing dependencies that weren’t obvious from reading the code linearly. More importantly, it gave Claude the context it needed to reason about the problem systematically instead of just throwing generic debugging suggestions at me.
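To give a feel for the kind of artifact I mean, here is a minimal sketch that prints an ASCII sequence diagram. Everything in it is an assumption for illustration: the participants and messages follow the generic OAuth authorization-code flow rather than our actual implementation, and `render_sequence`, `PARTICIPANTS` and `STEPS` are hypothetical names. In my case Claude drew the diagram directly from reading the code; no script was involved.

```python
# Hypothetical illustration: participants and messages follow the generic
# OAuth authorization-code flow, not the implementation discussed in the post.
PARTICIPANTS = ["Browser", "App server", "Auth provider", "Database"]

STEPS = [
    ("Browser", "App server", "GET /login"),
    ("App server", "Browser", "redirect to provider"),
    ("Browser", "Auth provider", "user signs in"),
    ("Auth provider", "Browser", "redirect with code"),
    ("Browser", "App server", "callback with code"),
    ("App server", "Auth provider", "exchange code"),
    ("Auth provider", "App server", "access token"),
    ("App server", "Database", "store session"),
    ("App server", "Browser", "set session cookie"),
]


def render_sequence(participants, steps, col_width=24):
    """Render steps as an ASCII sequence diagram (label row + arrow row each).

    Messages from a participant to itself are not handled in this sketch.
    """
    # Each participant owns a vertical lifeline at a fixed column.
    cols = {name: i * col_width + col_width // 2
            for i, name in enumerate(participants)}
    width = col_width * len(participants)
    lines = ["".join(name.center(col_width) for name in participants).rstrip()]

    def blank_row():
        row = [" "] * width
        for c in cols.values():
            row[c] = "|"
        return row

    for src, dst, label in steps:
        left, right = sorted((cols[src], cols[dst]))

        # Label row: text sits just above the arrow, near its left end.
        label_row = blank_row()
        text = label[: right - left - 2]
        label_row[left + 2:left + 2 + len(text)] = text

        # Arrow row: shaft between the lifelines, head next to the receiver.
        arrow_row = blank_row()
        for c in range(left + 1, right):
            arrow_row[c] = "-"
        if cols[dst] > cols[src]:
            arrow_row[right - 1] = ">"
        else:
            arrow_row[left + 1] = "<"

        lines.append("".join(label_row).rstrip())
        lines.append("".join(arrow_row).rstrip())
    return "\n".join(lines)


diagram = render_sequence(PARTICIPANTS, STEPS)
print(diagram)
```

Laid out this way, the ordering of writes to the database relative to the redirects is visible at a glance, which is exactly the kind of timing information that is hard to hold in your head when reading handlers one file at a time.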

The actual bug

With the sequence diagram as context, Claude spotted the issue: a state dependency race condition. The fix was simple once “we” found it: removing the problematic dependency that was causing the re-execution.

45 minutes of manual debugging → 10 minutes with the right context.

What made this so effective

The real point here isn’t the sequence diagram itself - though do try it if you find yourself in a similar bind - it is the power of helping the model have the right context to actually reason about your problem. This feels like a new kind of programming. What can I say to the model and get it to “think” about that will move us towards our shared goal? The more I prompt models in the course of my work the more intuition I seem to build for how to use them at the edge of their capabilities.

This points to something bigger: there’s an emerging art to getting the right state into the context window. It’s sometimes not enough to just dump code at these models and ask “what’s wrong?” (though that works surprisingly often). When stuck, you need to help them build the same mental framework you’d give to a human colleague. The sequence diagram was essentially me teaching Claude how to think about our OAuth flow. In another recent session, I was trying to fix a frontend problem (some content wouldn’t scroll) and couldn’t figure out where I was missing the correct CSS incantation. Cursor’s Agent mode couldn’t spot it either. I used Chrome dev tools to copy the entire rendered HTML DOM out of the browser, put that in the chat with Claude, and it immediately pinpointed exactly where I was missing an overflow: scroll.

45 minutes of manual debugging → 2 minutes with the right reasoning context.

For complex problems, the bottleneck isn’t the AI’s capability to spot issues - it’s our ability to frame the problem in a way that enables their reasoning. This feels like a fundamentally new skill we’re all learning.

All that said, this transition isn’t without significant pitfalls. The biggest one, which I’m still learning to navigate, is what a friend recently described to me as the “mirror effect” – these tools amplify both your strengths and your weaknesses as a developer. They had spent hours going round in circles with a reasoning model: “The model kept suggesting increasingly complex solutions, each one plausible enough that I kept following along… When I finally stepped back and took time to understand the framework’s actual behavior, the fix took 30 minutes and was embarrassingly simple.”

This creates a potentially dangerous feedback loop. When you’re learning a new technology, AI can help you make just enough progress to paint yourself into a corner. It’ll confidently generate code that runs but reinforces your own subtle misconceptions about how the underlying system works. Without a solid foundation, you can’t distinguish between good AI suggestions and plausible-sounding nonsense. A solid understanding of how to write and structure code therefore remains really important for using this technology well.

A related downside that I try to stay on the lookout for is that AI can patch over architectural problems quickly, making it tempting to avoid harder refactoring work.

These tools work best when you already have enough knowledge to be a good editor. They’re incredible force multipliers for competent developers, but they can be dangerous accelerants for confusion when you’re out of your depth.

Addressing the skeptics

I expect there are a few common rebuttals to this “new era” framing:

“The agents aren’t actually smart, you just know how to use them” - This is partially true and misses the point. Yes, knowing how to prompt effectively matters enormously (the sequence diagram story is a perfect example). But that’s like saying “compilers aren’t smart, you just know how to write code.” The tool’s capability and the user’s skill compound each other. The fact that these tools are now sophisticated enough to be worth developing expertise around is itself evidence of the shift.

“We’re entering an era of untrustable code everywhere” - This assumes AI-generated code is inherently less trustworthy than human-written code, which isn’t obviously true. Inexperienced human programmers sometimes write terrible, buggy code too. The real question is whether the combination of AI generation + human review produces better outcomes than human-only development. In my experience, for many classes of problems, it does. The key is appropriate oversight and testing, just like with human-written code. For production code, for now, I think it’s important that the human who merges PRs is still responsible for the changes, whether AI generated or not. I find myself wearing my code reviewer hat for these PRs, both checking the code and testing it. When Codex makes a PR, I’ll pull the branch, run it locally and check it works the way I want it to. In a way this is what feels most like treating the agent like an intern - giving agency, but also checking in closely.

“There will be nothing left for us to do” - I’m not seeing this at all. If anything, automating away the mechanical parts of programming frees me up to focus on the interesting problems: architecture, user experience, business logic, performance optimization. The bottleneck in most software projects isn’t typing speed or boilerplate generation - it’s figuring out what to build and how to build it well. AI tools are making me a more effective software developer, not replacing me as one.

Looking forward

The transformation has been remarkable. AI coding tools have gone from helpful supplements to essential parts of the development workflow. They’re not replacing human judgment - I still do the design and make all the critical decisions about what to do - but they’re automating away so much of the mechanical work that I can focus on the problems that actually matter.

The pace of improvement suggests we’re still in the early stages of this shift. If the current trajectory continues, I suspect the distinction between “AI-assisted” and “AI-automated” development will become increasingly blurred.

Every week brings new capabilities, and every month brings workflows that would have seemed like science fiction just a year ago. I’m quite pleased to be living through this transition, even if it means constantly adapting how I work. A chasm has been crossed, and there’s definitely no going back.

🚀

Thanks to Ari Grant, Nicholas Jitkoff, Sascha Haeberling, Rahul Patil, Keith Horwood and my new friend Claude who each reviewed a draft of this post.
