Every software engineer has war stories, those moments when a seemingly brilliant architectural decision comes back to haunt them years later. Today, I want to share one of ours, because it reveals something important about how we think about system design: we often make good architectural choices for the changes we expect, but in ways that make it harder to adapt to the ones we don’t.

The Clean Architecture revelation

Like many engineering teams, we have a technical book club at Bellroy. Over the years, we’ve worked through the classics that have shaped our industry — The Pragmatic Programmer, Clean Code, and the book that would prove both transformative and, eventually, problematic: Clean Architecture.

When we first read Uncle Bob’s treatise on architectural patterns, it felt revelatory. Here was a framework that seemed to identify and solve many of the pain points our team was experiencing. As a Ruby on Rails shop experimenting with different infrastructure providers and integration partners, we’d been bitten repeatedly by tight coupling. Switching from one payment processor to another, or migrating from file-based storage to a database, always seemed to require changes scattered throughout our codebase.

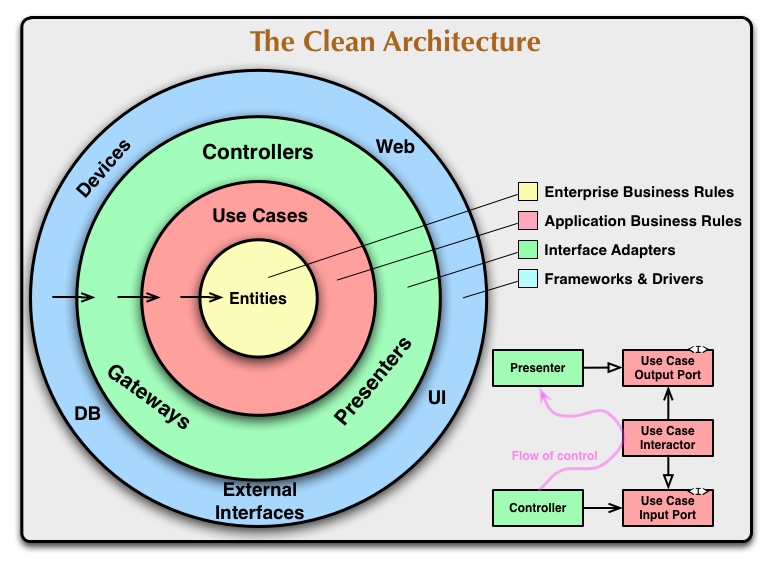

Clean Architecture promised a better way. The core principle is simple: dependencies should point inward, toward your business logic, never outward toward implementation details. You achieve this through interfaces and gateways — abstract boundaries that let your core domain remain blissfully unaware of whether it’s talking to PostgreSQL or MongoDB, Stripe or PayPal.
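To make the gateway idea concrete, here is a minimal Ruby sketch. It is not our actual code: `CheckoutService`, `StripeGateway`, `PaypalGateway`, and the `charge` method are hypothetical names chosen for illustration, and the adapters return strings where real ones would call a provider's API.

```ruby
# Hypothetical names throughout; an illustration of the pattern only.

# Core domain: depends only on "something that responds to #charge".
class CheckoutService
  def initialize(payment_gateway)
    @payment_gateway = payment_gateway
  end

  def checkout(order_id, amount_cents)
    receipt = @payment_gateway.charge(order_id, amount_cents)
    "order #{order_id} paid via #{receipt}"
  end
end

# Outer layer: one adapter per provider, each responding to #charge.
class StripeGateway
  def charge(order_id, amount_cents)
    # A real adapter would call the provider's API here.
    "stripe:#{order_id}:#{amount_cents}"
  end
end

class PaypalGateway
  def charge(order_id, amount_cents)
    "paypal:#{order_id}:#{amount_cents}"
  end
end

# Swapping providers touches only the line that wires things together.
puts CheckoutService.new(StripeGateway.new).checkout(42, 1999)
puts CheckoutService.new(PaypalGateway.new).checkout(42, 1999)
```

The business logic in `CheckoutService` never names a provider, which is the "dependencies point inward" property in miniature.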

We were sold. We printed out the famous Clean Architecture diagram and taped it to our office walls like an architectural commandment. We refactored our codebase to embrace the pattern, creating gateway interfaces for external services and ensuring our business logic lived in the pristine centre of our dependency circles.

The benefits were immediate and tangible. Switching from file-based storage to a relational database became trivial — we could just swap out the implementation behind the gateway interface. Our test suite became more focused and faster, since we could easily mock external dependencies. We felt like we’d discovered the secret to maintainable software.

The ultimate test

It was around this time that we decided we needed more than an accounting system: we needed an Enterprise Resource Planning (ERP) system. ERP migrations are legendary for the chaos they unleash on businesses. The vendor of our accounting system, which had served us well in our earlier days, had politely informed us that we’d grown too large for their infrastructure. They suggested we might be happier elsewhere, a diplomatic way of saying we were monopolizing their resources. We were suddenly on the clock.

A migration to an ERP would be the real test of Clean Architecture. We’d heard horror stories of companies losing weeks of productivity, data inconsistencies causing financial headaches, and integrations that limped along for months. We were determined to avoid that fate.

This is where Clean Architecture truly shone. Its gateway pattern gave us an elegant solution: we modified our accounting gateway to write to both our incumbent system and the sandbox environment of our new ERP. For three months, we ran this dual-write setup, comparing outputs at month-end and methodically tracking down every discrepancy.
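A dual-write gateway of this kind might look roughly like the sketch below. Everything in it is an assumption made for illustration: the class names, the `record` method, the in-memory ledgers standing in for the two systems, and in particular the choice to swallow and log failures from the second system so that it cannot break writes to the first.

```ruby
# Hypothetical stand-in for an accounting backend.
class InMemoryLedger
  attr_reader :entries

  def initialize
    @entries = []
  end

  def record(entry)
    @entries << entry
    entry
  end
end

# Presents the same #record interface as a single backend, so callers
# in the core domain do not change while both systems receive writes.
class DualWriteAccountingGateway
  attr_reader :shadow_errors

  def initialize(primary:, shadow:)
    @primary = primary
    @shadow = shadow
    @shadow_errors = []
  end

  def record(entry)
    result = @primary.record(entry)
    begin
      @shadow.record(entry)
    rescue StandardError => e
      # Assumption: a failing shadow write is logged, not propagated.
      @shadow_errors << e.message
    end
    result
  end
end

# Entries present in one ledger but not the other.
def discrepancies(primary, shadow)
  (primary.entries - shadow.entries) + (shadow.entries - primary.entries)
end

old_system = InMemoryLedger.new
erp_sandbox = InMemoryLedger.new
gateway = DualWriteAccountingGateway.new(primary: old_system, shadow: erp_sandbox)
gateway.record({ id: 1, amount_cents: 500 })
gateway.record({ id: 2, amount_cents: 1250 })
puts discrepancies(old_system, erp_sandbox).inspect
```

In this sketch, cutting over amounts to changing which ledger is passed as `primary`, and retiring the old system amounts to replacing the dual-write gateway with a single adapter.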

The switchover itself was almost anticlimactic. At midnight on June 30, 2019 — the end of the Australian financial year — we deployed a single configuration change, updating our connection string to point to the production ERP environment. We ran both systems in parallel for another month as a safety net, then deployed one final change to stop writing to the old system entirely.

It was the smoothest integration I’ve ever been part of. We celebrated accordingly, convinced we’d mastered the art of system evolution.

When architecture becomes archaeology

But software has a way of humbling us, and our celebration proved premature. Not long after our ERP success, we made another significant decision: we committed to functional programming and began transitioning our entire codebase from Ruby to Haskell.

We adopted a pragmatic approach: maintain the Ruby code but only port functionality to Haskell when we could add meaningful value in the process. This meant our Ruby codebase gradually became legacy code, maintained but not actively developed. This transition — which we expected to take a couple of years — has now stretched into its seventh year.

Here’s where the Clean Architecture approach began to work against us. As we hired more Haskell-focused developers and our institutional knowledge of Ruby faded, those carefully crafted abstraction layers became archaeological puzzles. Reverse-engineering what a piece of code actually did — especially complex, multi-step operations with side effects — became a nightmare.

The very indirection that had made our ERP migration so smooth now made understanding our legacy code incredibly difficult. We developed a habit of first collapsing all the abstraction layers in any area we wanted to port, flattening the code so we could see what it actually accomplished. The comprehensive unit tests we’d written for each abstraction layer had to be collapsed too, accounting for all the edge cases across multiple interfaces.

What had once been our architectural strength became a significant liability, made worse as fewer team members retained working knowledge of Ruby.

The missing framework

This experience taught us something important about how we approach system design. We had excellent resources for many aspects of software engineering: Working Effectively with Legacy Code is brilliant for dealing with inherited technical debt, Refactoring gives teams a set of techniques and a common language for good (safe!) refactoring, and books like Clean Architecture provide powerful patterns for active development of object-oriented codebases.

But we lacked frameworks that would have helped us think about the entire lifecycle of a system. We didn’t have good guidance on which architectural patterns are appropriate for different stages of a system’s life, or clear warnings about the long-term costs of our design decisions.

There are plenty of blog posts discussing the abstract costs of indirection and over-abstraction. What I’ve shared here is a concrete example of when and how a team actually pays those costs, and how difficult it was for us to anticipate them. Our Clean Architecture approach was absolutely the right choice for an actively developed Ruby system facing integration challenges. But it became the wrong choice for a legacy Ruby system being gradually replaced by Haskell.

Designing for tomorrow’s problems

The lesson isn’t that Clean Architecture is bad — it’s that every architectural decision involves tradeoffs across time. The abstraction layers that make active development and integration seamless can make legacy code maintenance significantly harder. The interfaces that provide flexibility during a system’s growth phase can become obstacles during its sunset phase.

Perhaps what we need is a more nuanced view of system architecture, one that acknowledges that the “right” design depends not just on current requirements, but on the likely evolution of the system over its entire lifespan. Sometimes the most maintainable code is the most obvious code, even if it’s less elegant or flexible. Sometimes, in pursuing the honorable goal of DRYing (Don’t Repeat Yourself) up our code, we end up with a more complex system. We now talk about “complexity budgets” (the idea that a team of a particular size can only support a certain amount of complexity across its systems) and use that to guide our design decisions.

Are you:

- designing for extensibility? Then a pattern like Clean Architecture is a great fit for object-oriented codebases, and effect systems provide similar benefits for functional programmers.

- designing for maintainability? You’ll want to reduce the abstract concepts while maintaining separate, concrete classes/modules with a clear single responsibility.

- designing for replacement? Then you want your code to read like an executable description of what the legacy system does right now, end-to-end, so that it can be easily ported.
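As a rough illustration of the difference between the first and last of these goals, the sketch below computes the same invoice total twice: once through small policy objects, and once as a flat function that can be read top to bottom. The domain, the class names, and the tax and shipping rules are all invented for this example.

```ruby
# Layered version: the behaviour is spread across several collaborators.
class SubtotalCalculator
  def call(line_items)
    line_items.sum { |item| item[:unit_cents] * item[:quantity] }
  end
end

class TaxPolicy
  def call(subtotal_cents)
    subtotal_cents / 10 # invented rule: 10% tax, integer cents
  end
end

class ShippingPolicy
  def call(subtotal_cents)
    subtotal_cents >= 10_000 ? 0 : 995 # invented rule: free over $100
  end
end

class InvoiceTotaller
  def initialize(subtotal:, tax:, shipping:)
    @subtotal = subtotal
    @tax = tax
    @shipping = shipping
  end

  def call(line_items)
    subtotal = @subtotal.call(line_items)
    subtotal + @tax.call(subtotal) + @shipping.call(subtotal)
  end
end

# Flattened version: the same rules as one end-to-end description,
# which is the shape we want before porting to another language.
def invoice_total_flat(line_items)
  subtotal = line_items.sum { |item| item[:unit_cents] * item[:quantity] }
  tax = subtotal / 10
  shipping = subtotal >= 10_000 ? 0 : 995
  subtotal + tax + shipping
end

items = [{ unit_cents: 2500, quantity: 2 }, { unit_cents: 1200, quantity: 1 }]
layered = InvoiceTotaller.new(
  subtotal: SubtotalCalculator.new,
  tax: TaxPolicy.new,
  shipping: ShippingPolicy.new
)
puts layered.call(items)
puts invoice_total_flat(items)
```

Both versions give the same answer. The layered one is easier to extend with a new tax or shipping rule; the flat one is easier for someone who no longer works in Ruby to read and port.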

Abstractions come with ongoing maintenance costs, but they only deliver value when you’re actively switching between the things they abstract. As systems evolve, maintainers must decide when these abstractions stop paying off. In our experience, legacy systems rarely benefit from keeping any of these abstractions.

As we migrate to Haskell, we’re putting this lesson into practice. We’re being more deliberate about when abstraction truly serves us — and when directness is the smarter long-term bet. The code we write today becomes tomorrow’s legacy system, and our future selves and colleagues deserve better than having to excavate meaning from layers of forgotten abstractions.
