MCP (Model Context Protocol) servers are taking the world by storm, piggybacking on the popularity of LLMs and, in particular, coding agents.

It’s one of the most explosive periods of growth I can remember seeing in any ecosystem in recent years, and for good reason.

MCP servers are so useful because they allow an agent to access a much broader set of tools than it would otherwise have. This has a double effect. First, the underlying LLMs immediately become much more grounded in factual knowledge, which helps with reliability and brings some degree of determinism. Second, with an MCP server, you effectively take full control and ownership of the tools the agent can use. This opens up the endless stream of possibilities behind such explosive growth.

However, because this is a very recent technology, there are still a lot of unknowns (both known and unknown) about how to actually apply the standard SDLC to developing and testing these servers, especially given that, in practice, a huge part of the work relies on prompt engineering and on a degree of non-determinism introduced by the underlying LLM.

In this post, I’ll focus purely on how I approach developing and testing MCP servers from the server-side perspective.

It is possible, and even useful, to write your own MCP client, but that is outside the scope of this post.

An important principle when writing software is to “stand on the shoulders of giants” as much as possible.

This means working at higher and higher levels of abstraction while reusing the base building blocks as much as possible.

In my case, that strong foundation came from FastMCP, an amazing project developed and maintained by Jeremiah Lowin.

Its website describes it as: “FastMCP is the standard framework for building MCP applications. The Model Context Protocol (MCP) provides a standardized way to connect LLMs to tools and data, and FastMCP makes it production-ready with clean, Pythonic code”.

I mean… what’s not to like about that?

Leveraging this framework gave me two things that are critical for a rapid iteration and feedback loop during development:

- Focusing on the actual tools, not on the protocol. Yes, the protocol IS critical (and you’ll see how important it becomes below), but while developing the tools themselves, it’s better if it gets out of the way. FastMCP abstracts it super well: a tool is basically a Python function with a decorator on top (Spring Boot vibes!!);

- Actually running the server locally, which is great for testing things.

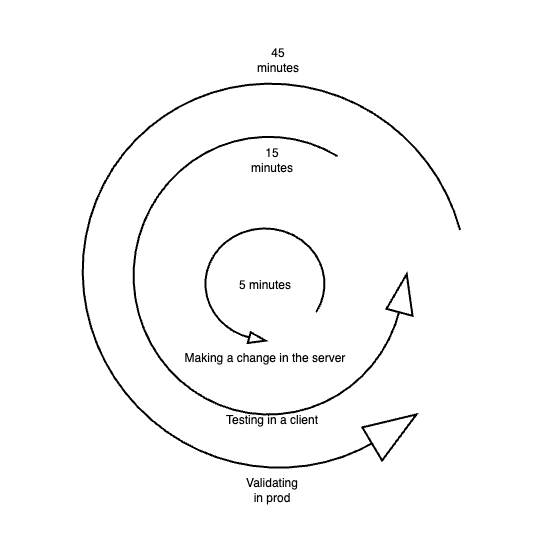
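To make the “function with a decorator” idea concrete, here is a minimal sketch, assuming FastMCP 2.x is installed (`pip install fastmcp`). The `"calculator"` server name and the `divide` tool are hypothetical examples, not taken from my actual project. The tool logic lives in a plain function and is registered with FastMCP in a thin layer, which also keeps it unit-testable without any MCP machinery.

```python
# calculator_server.py: a minimal sketch, assuming FastMCP 2.x (`pip install fastmcp`).
# The "calculator" server and the `divide` tool are hypothetical examples.


def divide(a: float, b: float) -> float:
    """Divide a by b.

    Plain Python with type hints: FastMCP derives the tool's input schema from
    the signature and its description from this docstring.
    """
    if b == 0:
        raise ValueError("b must be non-zero")
    return a / b


def build_server():
    """Create a FastMCP server and register the plain function above as a tool."""
    from fastmcp import FastMCP  # lazy import: the tool logic itself has no MCP dependency

    mcp = FastMCP("calculator")
    # Same effect as writing `@mcp.tool()` directly above `def divide`.
    mcp.tool()(divide)
    return mcp
```

To serve it, a small entry point can call `build_server().run()`, which uses the stdio transport by default. Passing an HTTP transport (named `"http"` or `"streamable-http"` depending on the FastMCP version) together with a `port` serves it over HTTP instead, which is what a client like Postman would connect to.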

With a strong base in place and the inner development feedback loop made so short, everything that follows becomes a natural extension of that loop.

The faster you can iterate, the faster you can experiment and validate assumptions and hypotheses.

So, anything you can do to speed up your inner feedback loop pays off tremendously, because it speeds up the outer loops as a direct consequence. Inner feedback loop optimizations are well worth the effort.

The promise of FastMCP? If you know even basic Python, you can write your own MCP server! And it delivers perfectly!

The next piece of advice is: work at the abstraction level that makes sense for you while still giving you useful feedback. If you are debugging a client-side issue with the color of a button in a web application, you probably don’t want to get knee-deep in server code. If you’re writing a calculator and there’s a bug in the division routine, you’re not going to look at the subtraction one, and so on. You get the gist: always work at the level of abstraction within the stack that gives you the most ROI, meaning the most valuable information with as little noise as possible.

Now, this might sound like obvious advice, but it’s surprisingly hard to accomplish when working with an MCP server. Why?

The reason is that the client-server flow is slightly more complex than with standard REST APIs: the client first performs an initialization handshake with the server to establish a session (identified by a unique ID, which the HTTP transport carries in the `Mcp-Session-Id` header), and that session is then used on subsequent requests for tool listing, prompt listing, tool calling, and more.

In other words, it’s not “as easy” as a standard REST API, where a simple request can be made from the command line with curl.
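To show why a one-off curl request isn’t enough, here is a minimal sketch of the handshake a client performs against a server using the Streamable HTTP transport, written with only the Python standard library. It assumes the `2025-03-26` revision of the MCP spec (newer revisions also expect an `MCP-Protocol-Version` header on later requests), a server that returns the session ID in the `Mcp-Session-Id` response header, and a hypothetical endpoint URL. Error handling is omitted; in day-to-day work, an MCP-aware client handles all of this for you.

```python
import json
import urllib.request

# Assumption: a spec revision the server supports; the server may negotiate another one.
PROTOCOL_VERSION = "2025-03-26"


def rpc(method, params=None, msg_id=None):
    """Build a JSON-RPC 2.0 message. Notifications carry no `id`."""
    message = {"jsonrpc": "2.0", "method": method}
    if params is not None:
        message["params"] = params
    if msg_id is not None:
        message["id"] = msg_id
    return message


def build_headers(session_id=None):
    """Streamable HTTP clients must accept both JSON and SSE responses."""
    headers = {
        "Content-Type": "application/json",
        "Accept": "application/json, text/event-stream",
    }
    if session_id:
        headers["Mcp-Session-Id"] = session_id  # ties the request to the session
    return headers


def parse_body(content_type, body):
    """Return the JSON-RPC message in a response body, or None if there is none.

    For SSE responses this simply takes the last `data:` line, assuming each
    event fits on one line and the response is the last event sent.
    """
    if content_type.startswith("text/event-stream"):
        data = [
            line[len("data:"):].strip()
            for line in body.splitlines()
            if line.startswith("data:")
        ]
        return json.loads(data[-1]) if data else None
    return json.loads(body) if body.strip() else None


def post(url, message, session_id=None):
    """POST one message; return (session_id, parsed_response)."""
    request = urllib.request.Request(
        url,
        data=json.dumps(message).encode("utf-8"),
        headers=build_headers(session_id),
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        session_id = response.headers.get("Mcp-Session-Id", session_id)
        content_type = response.headers.get("Content-Type", "")
        return session_id, parse_body(content_type, response.read().decode("utf-8"))


def call_tool(url, name, arguments):
    """initialize -> notifications/initialized -> tools/call, all on one session."""
    init_params = {
        "protocolVersion": PROTOCOL_VERSION,
        "capabilities": {},
        "clientInfo": {"name": "handshake-sketch", "version": "0.1"},
    }
    # 1. Initialize: the server may assign a session ID in the response headers.
    session_id, _ = post(url, rpc("initialize", init_params, msg_id=1))
    # 2. Tell the server the client is ready (a notification, so no response body).
    session_id, _ = post(url, rpc("notifications/initialized"), session_id)
    # 3. Every later request reuses the same session ID.
    tool_call = rpc("tools/call", {"name": name, "arguments": arguments}, msg_id=2)
    _, result = post(url, tool_call, session_id)
    return result
```

Against a locally running server, something like `call_tool("http://127.0.0.1:8000/mcp", "divide", {"a": 6, "b": 3})` (hypothetical URL and tool; the exact path, such as `/mcp` or `/mcp/`, depends on your server configuration) should return a JSON-RPC response whose `result` holds the tool output. Three round trips for a single tool call is exactly the ceremony a plain curl one-liner doesn’t give you.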

What’s the approach, then? We just have to find the right abstraction level, and in this case, I chose good ol’ reliable Postman!

In this case, Postman acts as a full-fledged client that implements the official MCP spec under the hood. The biggest advantage of this is that once you start your server locally (remember, it’s just a simple Python command!), it becomes super easy to debug it, step through your tool execution flow, and validate tokens, middleware, prompts, and anything else you can think of implementing.

The best part? It all uses familiar tools and workflows, so it quickly becomes natural, and it’s a flow that encourages experimentation and fast prototyping!

Remember also that all the existing best practices for writing maintainable software still apply, and are actually even more important, when writing MCP servers. Efficiency, unit testing, CI/CD pipelines, and the single responsibility principle are great ways to manage your server’s capabilities and evolution over time.

What we’ve seen so far is great for iterating locally, and even for hooking the server up to a more “real” client, like Cursor or GitHub Copilot, and seeing it “in action”. But at scale, especially if you deploy your MCP server using the (now) standard Streamable HTTP transport, you have a long-running server that many clients connect to at the same time.

Again: this setup is amazing for local testing, and even when you deploy the server, for instance to dogfood it inside your own company or team, it will probably be sufficient.

But… does it scale?

When deploying the server to production, one caveat is worth noting: the flow of using an MCP server differs from that of regular software. Your server typically gets invoked in between LLM interactions: an initial user query, followed by a call to your server, then an LLM response that uses the tool results, with this cycle potentially repeating in a loop.

Thus, the best way to test your server is to stress test it, finding its breaking point in a way that “regular usage” can’t.

This is no different from stress testing a regular HTTP-based REST API or service, but it becomes critical now that we’re in a new world where LLM interactions are intertwined with these tools. Being able to isolate the tools and test them on their own is paramount for guaranteeing a good user experience.

But how do you go about it?

Here’s a case where experience with LLMs can really help and set you apart from engineers who aren’t (yet) leveraging them! In fact, this type of scenario is exactly where LLMs can shine!

Here’s what Gemini had to teach me about it:

So, let’s assume that, right up until this moment, I didn’t even know what Locust was. I just knew that I wanted to rely on open-source tools and write a load test that spoke MCP, so that I could load test my server.

From here on, we’ve already won! Why? Because now we have a thread to pull on. We can now start a breadth-first search that wouldn’t have been possible otherwise, because we simply didn’t know the tools!

Obviously, we could have googled this and searched around, but having Locust as a starting point already gives us an “oriented” search, either to validate this approach or to choose another one!

In my case, I actually went with K6 in the end.

But here’s the key aspect, and one more trick for using LLMs in your daily work: use them as guides and exploratory partners that know a lot more than you but lack intent and context.

YOU have the intent and YOU know what you want, what you need and HOW to approach it.

By “sieving” your own searches and options through an LLM as a “first stab”, you can move much faster and much more confidently in the right direction. In this particular case, once I had set my sights on K6, using Gemini to help me write a load testing script and run it with Docker on my machine was an amazingly enjoyable experience.

Bear in mind that before the first prompt, I had never done a load test in my life. After maybe half an hour of “pairing” with Gemini, we had not one but three distinct load testing scripts in JavaScript, ready to run via K6 in Docker to test various scenarios.
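The K6 scripts themselves aren’t reproduced here. As a rough illustration of what a stepped stress test measures, here is a minimal Python standard-library sketch (not K6, and not the scripts from this post): it ramps up concurrency in stages and stops at the first stage where the error rate or the 95th-percentile latency crosses a threshold. The stage sizes, requests per stage, and thresholds are arbitrary assumptions, and `send_request` is a hypothetical zero-argument callable that performs one full MCP round trip against your server.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def timed_call(send_request):
    """Run one request and return (latency_in_seconds, succeeded)."""
    start = time.perf_counter()
    try:
        send_request()
        succeeded = True
    except Exception:  # any failure counts as an error for load-testing purposes
        succeeded = False
    return time.perf_counter() - start, succeeded


def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = math.ceil(pct * len(sorted_values) / 100)
    return sorted_values[max(rank, 1) - 1]


def run_stage(send_request, concurrency, total_requests):
    """Fire `total_requests` calls using `concurrency` worker threads."""
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(lambda _: timed_call(send_request), range(total_requests)))
    latencies = sorted(latency for latency, _ in results)
    errors = sum(1 for _, succeeded in results if not succeeded)
    return {
        "concurrency": concurrency,
        "p95_seconds": percentile(latencies, 95),
        "error_rate": errors / total_requests,
    }


def find_breaking_point(send_request, stages=(1, 5, 10, 25, 50), requests_per_worker=10,
                        max_error_rate=0.01, max_p95_seconds=1.0):
    """Step up concurrency until a stage breaches a threshold; return every stage's stats."""
    report = []
    for concurrency in stages:
        stats = run_stage(send_request, concurrency, concurrency * requests_per_worker)
        report.append(stats)
        if stats["error_rate"] > max_error_rate or stats["p95_seconds"] > max_p95_seconds:
            break  # first stage past a threshold: treat it as the breaking point
    return report
```

A single-process sketch like this is limited by the machine it runs on and reports only a couple of metrics; dedicated tools such as K6 or Locust add ramping profiles, distributed load generation, and much richer reporting, which is why reaching for one of them is the better choice in practice.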

If you’ve read and heard a lot about LLMs augmenting engineers and giving them superpowers, well… I guess this is exactly what everyone means. It’s not about replacing engineers; it’s about making existing engineers more pragmatic, more efficient, and more willing to try out new things!

MCP servers are the hottest new trend in tech right now, and that will probably continue for a while longer! They’re cool, they’re flashy, and there’s a HUGE amount of untapped potential in them. At the same time, as we just saw, the practices of building traditional software (knowing what to build, what to search for, and which tool to apply for a given job) are just as critical now as they were before!

The key difference is that with LLMs, it becomes almost FUN to work on these things, because you have the access and the capability to do SO MUCH MORE in MUCH LESS time!

Then, obviously, the challenge becomes what it has always been: knowing what to build, and building it with taste and vision, so that users love using it just as much as you loved building it!
