Suppose that you have an array of N elements and you want to divide it into M chunks. It is a common task when trying to spread N units of work over M threads, for example.

You want chunks to be all the same size, plus or minus one element (fair sized). It might be slightly harder than you imagine.

Following Python, wse the convention that a//b is the integer quotient so that 5//2 is 2. And let % be the ‘remainder’ : 5%2 is 1.

The naive algorithm uses the following chunk ranges: [0, N//M), [N/M, 2*N//M)… up to [N//M*M, N). Thus all chunks have size N/M but there is an extra chunk of size N%M.

If N is 5 and M is 2, we have the chunks [0,2), [2,4), [4,5).

That is not great. We have one extra chunk ! At least we do when N//M is at least one.

Instead, we can make the last chunk possibly larger. We use the ranges [0, N//M), [N/M, 2*N//M)… up to [N//M*(M-1), N). If N is 5 and M is 2, we have the chunks [0,2), [2,5). At least we have the right number of chunks.

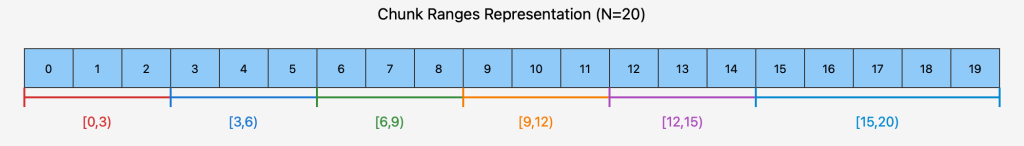

But what if we have N is 20 and M is 6? We get the chunks [0,3), [3,6), [6,9), [9,12), [12,15), [15,20). It is quite bad because the last chunk is much larger.

Instead, you might try the following: let the chunk size be ceil(N/M). That is, the chunk size is N/M rounded up. Thus if N is 5 and M is 2, we have that the chunk size is ceil(5/3) or 3. We use the chunk ranges: [0, ceil(N/M)), [N/M, 2*ceil(N/M))…[(M-1)*ceil(N/M), N). If N is 5 and M is 2, we have the chunks [0,3), [3,5). That is much better. This time, we have the right number of chunks. One has size 3 and the other one has size 2. But if fails if N is 12 and M is 5. We get that the chunks size is 3, and so the ranges are [0,3), [3,6), [6,9), [9, 12). So we are missing one chunk!

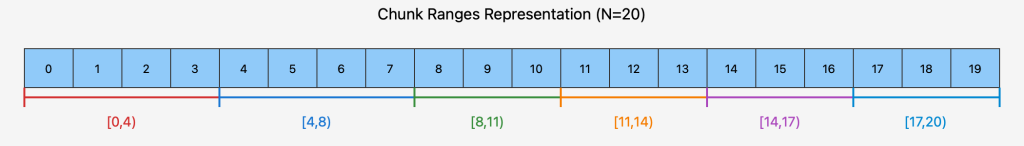

So what should you do? A better approach is to have the first N%M chunks have size ceil(N/M) and have the remaining N-N%M chunks have size floor(N/M).

Let me implement it in Python. The get_chunk_range_simple function divides a total of N elements into M chunks and returns the start and end indices of the i-th chunk (0-indexed). It handles uneven divisions by distributing the remainder across chunks: the first remainder chunks (where remainder = N % M) have size quotient + 1 (where quotient = N // M), while the remaining chunks have size quotient. For a given chunk index i, the function calculates the start as quotient * i + min(i, remainder), accounting for the extra elements in earlier chunks, and the end as quotient * (i + 1) + min(i + 1, remainder), ensuring the range reflects the cumulative effect of previous chunk sizes. This approach ensures all elements are covered, with earlier chunks absorbing the remainder for balanced distribution.

def get_chunk_range_simple(N, M, i): quotient, remainder = divmod(N, M) return (quotient * i + min(i, remainder), quotient * (i + 1) + min(i+1, remainder))The result is fair sized. Let us illustrate when N is 20 and M is 6.

We can implement it in C++ as well. For a change, I return the start index and the length of a given chunk. Importantly, we would rather avoid an overflow which might be caused by a multiplication between the chunk index and the number of elements.

// N is the total number of elements // M is the number of chunks // i is the index of the chunk (0-indexed) std::pair<size_t, size_t> get_chunk_range_simple(size_t N, size_t M, size_t i) { // Calculate the quotient and remainder size_t quotient = N / M; size_t remainder = N % M; size_t start = quotient * i + (i < remainder ? i : remainder); size_t length = quotient + (i < remainder ? 1 : 0); return {start, length}; }Credit: The problem was posted on X by Robert Clausecker.

.png)