
By Joe Brockmeier

October 1, 2025

The Fedora Council began a process to create a policy on AI-assisted contributions in 2024, starting with a survey to ask the community its opinions about AI and using AI technologies in Fedora. On September 25, Jason Brooks published a draft policy for discussion; so far, in keeping with the spirit of compromise, it has something to make everyone unhappy. For some it is too AI-friendly, while others have complained that it holds Fedora back from experimenting with AI tooling.

Fedora's AI survey

Aoife Moloney asked for suggestions in May 2024, via Fedora's discussion forum, on survey questions to learn "what our community would like and perhaps even need from AI capabilities in Fedora". Many of Fedora's contributor conversations take place on the Fedora devel mailing list, but Moloney did not solicit input for the survey questions there.

Tulio Magno Quites Machado Filho suggested asking whether the community should accept contributions generated by AI, and if AI-generated responses to mailing lists should be prohibited. Josh Boyer had ideas for the survey, including how Fedora defines AI and whether contributions to the project should be used as data by Fedora to create models. Justin Wheeler wanted to understand "the feelings that someone might have when we talk about 'Open Source' and 'AI/ML' at the same time". People likely have strong opinions about both, he said, but what about when the topics are combined?

Overall, there were only a handful of suggested questions. Matthew Miller, who was the Fedora Project Leader (FPL) at the time, pointed out that some of the questions proposed by commenters were good questions but not good survey questions.

In July, Moloney announced on the forum and via Fedora's devel-announce list that the survey had been published. Unfortunately, it is no longer available online, and the questions were not included in the announcements.

The way the survey's questions and answers were structured turned out to be a bit contentious; some felt that the survey was biased toward AI/ML inclusion in Fedora. Lyude Paul wanted a way to say Fedora should not, for example, include AI in the operating system itself in any capacity. That was not possible with the survey as written:

I'd like to make sure I'm getting across to surveyors that tools like [Copilot] are things that should actively be kept away from the community due to the enormous PR risk they carry. Otherwise it makes it feel like the only option that's being given is one that feels like it's saying "well, I don't think we should keep these things out of Fedora - I just feel they're less important."

Moloney acknowledged that the questions were meant to "keep the tone of this survey positive about AI" because it is easy to find negatives for the use of AI, "and we didn't want to take that route":

We wanted to approach the questions and uncertainty around AI and its potential uses in Fedora from a positive view and useful application, but I will reassure you that just because we are asking you to rank your preference of AI in certain areas of the project, does not mean we will be introducing AI into all of these areas.

We are striving to understand peoples preference only and any AI introductions into Fedora will always be done in the Fedora way - an open conversation about intent, community feedback, and transparent decision-making and/or planning that may follow after.

DJ Delorie complained on the devel list that there was no way to mark all options as a poor fit for Fedora. Moloney repeated the sentiment about positive tone in reply. Tom Hughes responded "if you're only interested in positive responses then we can say the survey design is a success - just a shame that the results will be meaningless". Several other Fedora community members chimed in with complaints about the survey, which was pulled on July 3, and then relaunched on July 10, after some revisions.

It does not appear that the full survey results were ever published online. Miller summarized the results during his State of Fedora talk at Flock 2024, but the responses were compressed into a less-than-useful form. The survey asked separate questions about whether AI was useful for specific tasks, such as testing or coding, and whether respondents would like to see AI used for those specific tasks in Fedora. So, for example, a respondent could say "yes" to using AI for testing, but answer "no" or "uncertain" to the question of whether they'd like to see AI used for contributions.

Instead of breaking out the responses by task, all of the responses have been lumped into an overall result broken out into two groups, user or contributor. If a respondent answered yes to some questions but uncertain to others, they were counted as "Yes + Uncertain". That presentation does not seem to correspond with how the questions were posed to those taking the survey.

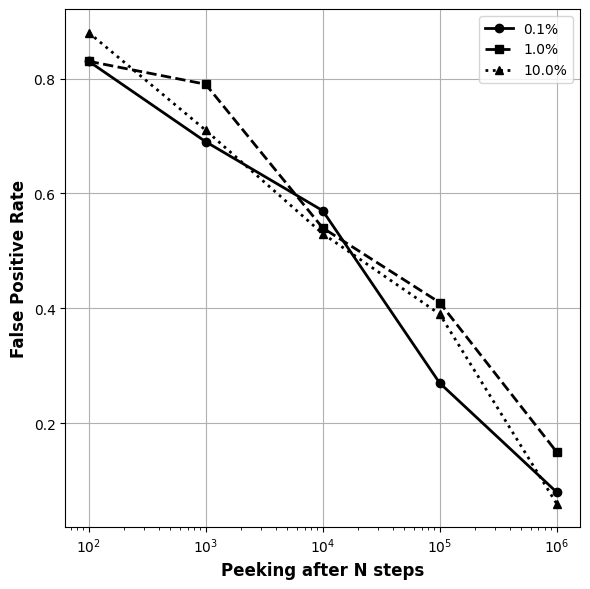

The only conclusion that can be inferred from these graphs is that a majority of respondents chose "uncertain" in a lot of cases, and that there is less uncertainty among users than contributors. Miller's slides are available online. The survey results were discussed in more detail in a Fedora Council meeting on September 11, 2024; the video of the meeting is available on YouTube.

Analysis

The council had asked Greg Sutcliffe, who has a background as a data scientist, to analyze and share the results from the survey. He began the discussion by saying that the survey cannot be interpreted as "this is what Fedora thinks", because it failed to deal with sampling bias.

He also noted other flaws in the survey, such as giving respondents the option of choosing "uncertain" as an answer; Sutcliffe said it was not clear whether uncertain meant the respondent did not know enough about AI to answer the question, or whether it meant the respondent knew enough to answer, but was ambivalent in some way. He also said that it was "interesting that we don't ask about the thing we actually care finding out about": how the respondents feel about AI in general. Without understanding that, it is challenging to place other answers in context.

One thing that was clear is that the vast majority of respondents were Fedora users, rather than contributors. The survey asked those who responded to identify their role with Fedora, with options such as developer, packager, support, QA, and user. Out of about 3,000 responses, more than 1,750 chose "user" as their role.

Sutcliffe noted that "'no' is pretty much the largest category in every case" where respondents were asked about use of AI for specific tasks in Fedora; across the board, the survey seemed to indicate a strong bent toward rejecting the use of AI overall. However, respondents were less negative about using AI depending on the context. For example, respondents were overwhelmingly negative about having AI included in the operating system and being used for Fedora development or moderation; the responses regarding the use of AI technologies in testing or Fedora infrastructure were more balanced.

"On the negative side"

Miller asked him to sum up the results, with the caveat that the survey did not support general conclusions about what Fedora thinks. Sutcliffe replied that "the sentiment seems to be more on the negative side [...] the vast majority don't think this is a good idea, or at least they don't see a place" for AI.

Given that, it seems odd that when Brooks announced the draft policy that the council had put forward, he summarized the survey results as giving "a clear message" that Fedora's community sees "the potential for AI to help us build a better platform" with valid concerns about privacy, ethics, and quality. Sutcliffe's analysis seemed to indicate that the survey delivered a muddled message, but one that could best be summed up as "no thanks" to AI if one were to reach a conclusion at all. Neal Gompa said that he did not understand how the conclusions for the policy were made, "because it doesn't seem like it jives with the community sentiment from most contributors".

Some might notice that quite a bit of time has passed between the survey and the council's draft policy. This may be because there is not a consensus within the council on what Fedora should be doing or allowing. The AI guidelines were a topic in the September 10 council meeting, and a log of the meeting is available. David Cantrell said that he was unsure that Fedora could have a real policy right now, "because the field is so young". Miro Hrončok pointed to the Gentoo AI policy, which expressly forbids contributing AI-generated content to Gentoo. He did not want to replicate that policy as-is, but "they certainly do have a point", he said. Cantrell said Gentoo's policy is what he would want for Fedora's policy right now, with an understanding it could be changed later. LWN covered Gentoo's policy in April 2024.

Cantrell added that his concerns about copyright, ownership, and creator rights with AI had not been addressed:

So far my various conversations have been met with shrugs and responses like 'eh, we'll figure it out', which to me is not good enough. [...]

Any code now that I've written and put on the internet is now in the belly of every LLM and can be spit back out at someone sans the copyright and licensing block. Does no one care about open source licensing anymore? Did I not get the memo?

FPL Jef Spaleta said that he did not understand how splitting out Fedora's "thin layer of contributions" does anything to address Cantrell's copyright concerns. "I'm not saying it's moot. I'm saying you're drawing the line in the sand at the wrong place." Moloney eventually reminded the rest of the council that time was running short in the meeting, and suggested that the group come to an agreement on what to do next. "We have a ticket, we have a WIP and we have a lot of opinions, all the ingredients to make...something."

Proposed policy

There are several sections to the initial draft policy from September 25; it addresses AI-assisted contributions, the use of AI for Fedora project management, as well as policy for use of AI-powered features in Fedora and how Fedora project data could be used for training AI models.

The first draft encouraged the use of AI assistants in contributing to Fedora, but stressed that "the contributor is always the author and is fully accountable for their contributions". Contributors are asked, but not required, to disclose when AI tools have "significantly assisted" creation of a work. Usage of AI tools for translation to "overcome language barriers" is welcome.

It would put guardrails around using AI tools for reviewing contributions; the draft says that AI tools may assist in providing feedback, but "AI should not make the final determination on whether a contribution is accepted or not". The use of AI for Fedora project management, such as deciding code-of-conduct matters or reviewing conference talks, is expressly forbidden. However, automated note-taking and spam filtering are allowed.

Many vendors, and even some open-source projects, are rushing to push AI-related features into operating systems and software whether users want them or not. Perhaps the most famous (or infamous) of these is Microsoft's Copilot, which is deeply woven into the Windows 11 operating system and notoriously difficult to turn off. Fedora is unlikely to go down that path anytime soon; any AI-powered features in Fedora, the policy says, must be opt-in—especially those that send data to a remote service.

The draft policy section on use of Fedora project data prohibits "aggressive scraping" and suggests contacting Fedora's Infrastructure team for "efficient data access". It does not, however, address the use of project data by Fedora itself; that is, there is no indication of how the project's data could be used by the project in creating any models or when using AI tools on behalf of the project. It also does not explain what criteria might be used to grant "efficient" access to Fedora's data.

Feedback

The draft received quite a bit of feedback in short order. John Handyman said that the language about opt-in features was not strong enough. He suggested that the policy should require any AI features not only to be opt-in but also to be optional components that the user must consciously install. He also wanted the policy to prefer models running locally on the user's machine, rather than those that send data to a service.

Hrončok distanced himself a bit from the proposal in the forum discussion after it was published; he said that he agreed to go forward with public discussion of the proposal, but made it known, "I did not write this proposal, nor did I sign it as 'proposed by the Council'". Moloney clarified that Brooks had compiled the policy and council members had been asked to review the policy and provide any feedback they might have. If there is feedback that requires significant changes ("which there has been"), then it should be withdrawn, revised, and re-proposed.

Red Hat vice president of core platforms, Mike McGrath, challenged the policy's prohibition on using AI to make decisions when reviewing contributions. "A ban of this kind seems to step away from Fedora's 'first' policies without even having done the experiment to see what it would look like today." He wanted to see Fedora "get in front of RHEL again with a more aggressive approach to AI". He would hate, he said, for Fedora to be a "less attractive innovation engine than even CentOS Stream".

Fedora Engineering Steering Committee (FESCo) member Fabio Valentini was not persuaded that inclusion and use of AI technologies constitutes innovation. Red Hat and IBM could experiment with AI all they want, but "as far as I can tell, it's pretty clear that most Fedora contributors don't want this to happen in Fedora itself".

McGrath said that has been part of the issue; he had not been a top contributor to Fedora for a long time, but he could not understand why Fedora and its governing boards "have become so well known for what they don't want. I don't have a clue what they do want." The policy, he said, was a compromise between Fedora's mission and nay-sayers:

I just think it sends a weak message at a time where Fedora could be leading and shaping the future and saying "AI, our doors are open, let's invent the future again".

Spaleta replied that much of the difference in establishing policy for Fedora versus RHEL comes down to trust. Contribution access to RHEL and CentOS is hierarchical, he said, and trust is established differently there than within Fedora. Even those packagers who work on both may have different levels of trust within Red Hat and Fedora. There is not a workable framework, currently, for non-deterministic systems to establish the same level of trust as a human within Fedora. The policy may not be as open to experimenting with AI as McGrath might like, but he would rather find a way to move forward with a more prohibitive policy than to "grind on a policy discussion for another year without any progress, stuck on the things we don't have enough experience with to feel comfortable".

Graham White, an IBM employee who is the technical lead for the Granite AI agents, objected to a part of the policy that referenced AI slop:

I've been working in the industry and building AI models for a shade over 20 years and never come across "AI slop". This seems derogatory to me and an unnecessary addition to the policy.

Clearly, White has not been reviewing LWN's article submissions queue or paying much attention to open-source maintainers who have been wading through the stuff.

Redraft

After a few days of feedback, Brooks said that it was clear that the policy was trying to do too much by being a statement about AI as well as a policy for AI usage. He said that he had made key changes to the policy, including removing "biased-sounding rules" about AI slop, using RFC 2119 standard language such as SHOULD and MUST NOT rather than weaker terms, and removing some murky language about honoring Fedora's licenses. He also disclosed that he used AI tools extensively in the revision process.

The shorter policy draft says that contributors should disclose the use of AI assistance; non-disclosure "should be exceptional and justifiable". AI must not be used to make final determinations about contributions or community standing, though it does not prohibit automated tooling for pre-screening tasks like checking packages for common errors. User-facing features, per the policy, must be opt-in and require explicit user consent before being activated. The shorter policy retains the prohibition on disruptive or aggressive scraping, and leaves it to the Fedora Infrastructure team to grant data access.

Daniel P. Berrangé questioned whether parts of the policy were necessary at all, or if they were specific to AI tools. In particular, he noted that questions around sending user data to remote services had come up well before AI was involved. He suggested that Fedora should have a general policy on how it evaluates and approves tools that process user data, "which should be considered for any tool whether using AI or not". An AI policy, he said, should not be a stand-in for scenarios where Fedora has been missing a satisfactory policy until now.

Prohibition is impossible

The revised policy as drafted, and its general spirit of favoring generative AI tools, will not please people who are against the use of generative AI in general. However, it does have the virtue of being more practical in terms of enforcement than flat-out forbidding the use of generative AI tools.

Spaleta said that a strict prohibition against AI tools would not stop people from using AI tools; it would "only serve to keep people from talking about how they use it". Prohibition is essentially unenforceable and "only reads as a protest statement". He also said that there is "an entire ecosystem of open source LM/LLM work that this community appears to be completely disconnected from"; he did not want to "purity test" their work, but to engage with them about ethical issues and try to address them.

Next

There appears to be a growing tension between what Red Hat and IBM would like to see from Fedora versus what its users and community contributors want from the project. Red Hat and IBM have already come down in favor of AI as part of their product strategies; the only real questions are what to develop and offer to customers and partners.

The Fedora community, on the other hand, has quite a few people who feel strongly against AI technologies for various ethical, practical, and social reasons. The results, so far, of turning people loose with generative AI tools on unsuspecting open-source projects have not been universally positive. People join communities to collaborate with other people, not to sift through the output of large language models. It is possible that Red Hat will persuade Fedora to formally endorse a policy of accepting AI-assisted content, but it may be at the expense of users and contributors.

The discussion continues. Fedora's change policy requires a minimum two-week period for discussion of such policies before the council can vote. A policy change needs to be passed with the "full consensus" model, meaning that it must have at least three of the eight current council members voting in favor of the policy and no votes against. At the moment, the council could vote on a policy as soon as October 9. What the final policy looks like, if one is accepted at all, and how it is received by the larger Fedora community remains to be seen.
