Now supported in Apple's most recent versions of iOS and Safari [1], WebGPU is finally gaining widespread support, enabling more advanced 3D capabilities on the web. As someone working with WebGL on the side, I found this was the push I needed to, at last, dedicate some time to learning this new set of APIs and to see how I could port some of my existing WebGL/shader knowledge and work over to it.

This work involved familiarizing myself not only with WGSL, the shading language designed for WebGPU, but also with TSL, Three.js's higher-level, JavaScript-based shading language. WebGPU also introduces some new constructs, such as compute shaders: a new, exciting, yet nebulous type of shader that seemed like it could improve some key aspects of my workflow.

To guide me through this learning journey, I decided the most practical way would be to spend my summer porting over key projects I've worked on throughout the years, such as my glass material, post-processing, particles, as well as several other fun shader experiments. There were numerous gotchas and undocumented features, which sometimes made the experience quite frustrating, but in the end, I managed to familiarize myself with these new tools and build beautiful scenes with them.

This article is a compilation of the steps I took, going from knowing very little about TSL and WebGPU to becoming comfortable with them, and, as a result, serves as the field guide I wish I had. In it, I will tell you all about these new tools/APIs and the role they play while also sharing recipes to implement some essential components of a 3D scene, whether it is to build a simple material using TSL's new node system, or to leverage compute shaders in diverse use cases like instanced meshes, particles, or post-processing effects.

If, like me, you've seen the names TSL or WebGPU used interchangeably, you may be confused about their roles and how they relate to one another. This first part should answer all your questions regarding these two concepts so you can start building on your own with solid foundations.

TSL and WebGPU

Many creative developers were very excited about the recent expansion of WebGPU support, and for good reason: this new API set brings the web up to par with modern graphics APIs like Vulkan or Metal, the backbone of many professional creative tools and game engines. It provides lower-level control (memory allocation, bind groups, etc.) and offers new constructs such as compute shaders, all with near-native performance [2].

On the other hand, my fellow Three.js developers were also getting interested in something a bit different, yet loosely related: the Three.js Shading Language (TSL), a functional, JavaScript-based language meant to act as a sort of umbrella shading language that makes shader creation more accessible and easier to maintain over time. With TSL, Three.js made it possible to write shaders that run on WebGPU, which was not supported up to that point, while also letting those same shaders run on WebGL. Developers can thus target any platform with a single codebase, regardless of WebGPU support [3].

Below is an example of a simple shader written in TSL and its GLSL and WGSL equivalents:

TSL

```js
import { Fn, uv, vec4, add, mix } from 'three/tsl';

const colorNode = Fn(([baseColor]) => {
  const uvCoord = uv();

  const red = uvCoord.x.add(2.3).mul(0.3);
  const green = uvCoord.y.add(1.7).div(8.2);
  const blue = add(uvCoord.x, uvCoord.y).mod(10.0);

  const tint = vec4(red, green, blue, 1.0);

  return mix(baseColor, tint, uvCoord.x);
});
```

GLSL

```glsl
in vec2 uv;
uniform vec4 baseColor;
out vec4 fragColor;

void main() {
  float red = (uv.x + 2.3) * 0.3;
  float green = (uv.y + 1.7) / 8.2;
  float blue = mod(uv.x + uv.y, 10.0);

  vec4 tint = vec4(red, green, blue, 1.0);

  fragColor = mix(baseColor, tint, uv.x);
}
```

WGSL

```wgsl
fn main(uv: vec2<f32>, baseColor: vec4<f32>) -> vec4<f32> {
  let red = (uv.x + 2.3) * 0.3;
  let green = (uv.y + 1.7) / 8.2;
  let blue = (uv.x + uv.y) % 10.0;

  let tint = vec4<f32>(red, green, blue, 1.0);
  return mix(baseColor, tint, uv.x);
}
```

As you can see, the syntax is very functional (if not "extremely" so), even for simple operators. While this offers the ability to target WebGL and WebGPU alike, to me it comes at the cost of legibility, though I guess that's a matter of taste and habit. If this bothers you too much, you can still opt for the glslFn and wgslFn TSL functions to write native code. However, at the end of the day you still have to rely on TSL to define and consume those shader chunks in your materials/effects through its Node System.
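To make the idea of one source with two targets a bit more concrete, here is a deliberately tiny, hypothetical TypeScript sketch. It is not Three.js code and not how TSL is implemented internally; it only shows why chained calls like .add() and .mul() read the way they do: each call builds a node instead of computing a value, and the same graph can later be printed as either GLSL or WGSL. The Expr class and its emit method are invented for this illustration.

```typescript
// Toy expression graph illustrating the idea behind chained TSL calls.
// Hypothetical: this is NOT the Three.js/TSL implementation.
type Target = "glsl" | "wgsl";

class Expr {
  readonly op: string;
  readonly args: Array<Expr | number | string>;

  constructor(op: string, args: Array<Expr | number | string>) {
    this.op = op;
    this.args = args;
  }

  // Leaf node referring to a named shader variable, e.g. "uv.x"
  static ref(name: string): Expr {
    return new Expr("ref", [name]);
  }

  // Each chained call returns a new node; nothing is computed yet
  add(other: Expr | number): Expr { return new Expr("+", [this, other]); }
  mul(other: Expr | number): Expr { return new Expr("*", [this, other]); }
  div(other: Expr | number): Expr { return new Expr("/", [this, other]); }
  mod(other: Expr | number): Expr { return new Expr("mod", [this, other]); }

  // Walk the graph and print it in the requested shading language
  emit(target: Target): string {
    if (this.op === "ref") return String(this.args[0]);
    const [a, b] = this.args.map((arg) => Expr.emitArg(arg, target));
    if (this.op === "mod") {
      // GLSL has a mod() builtin; WGSL uses the % operator on floats
      return target === "glsl" ? `mod(${a}, ${b})` : `(${a} % ${b})`;
    }
    return `(${a} ${this.op} ${b})`;
  }

  private static emitArg(arg: Expr | number | string, target: Target): string {
    if (arg instanceof Expr) return arg.emit(target);
    if (typeof arg === "number") {
      // Shading languages want float literals such as 10.0, not 10
      return Number.isInteger(arg) ? arg.toFixed(1) : String(arg);
    }
    return arg;
  }
}

const uvX = Expr.ref("uv.x");
const uvY = Expr.ref("uv.y");

const red = uvX.add(2.3).mul(0.3);
const blue = uvX.add(uvY).mod(10.0);

console.log(red.emit("glsl"));  // ((uv.x + 2.3) * 0.3)
console.log(blue.emit("glsl")); // mod((uv.x + uv.y), 10.0)
console.log(blue.emit("wgsl")); // ((uv.x + uv.y) % 10.0)
```

Only the mod node needs to know its target here, mirroring the mod() versus % difference in the GLSL and WGSL versions of the shader. Note that the two only agree for non-negative operands, since GLSL's mod floors while WGSL's % truncates.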

Using glslFn and wgslFn to write native shader code alongside TSL

```js
import * as THREE from 'three/webgpu';
import { uniform, uv, glslFn, wgslFn } from 'three/tsl';

const baseColor = uniform(new THREE.Vector4(1, 1, 1, 1));

// Native GLSL chunk (usable with the WebGL backend)
const colorGLSL = glslFn(`
  vec4 colorFn(vec4 baseColor, vec2 uv) {
    float red = (uv.x + 2.3) * 0.3;
    float green = (uv.y + 1.7) / 8.2;
    float blue = mod(uv.x + uv.y, 10.0);

    return mix(baseColor, vec4(red, green, blue, 1.0), uv.x);
  }
`);

// Arguments are passed as an object whose keys match the parameter names
const colorNodeGLSL = colorGLSL({
  baseColor,
  uv: uv(),
});

// Native WGSL chunk (usable with the WebGPU backend)
const colorWGSL = wgslFn(`
  fn colorFn(baseColor: vec4f, uv: vec2f) -> vec4f {
    let red = (uv.x + 2.3) * 0.3;
    let green = (uv.y + 1.7) / 8.2;
    let blue = (uv.x + uv.y) % 10.0;

    return mix(baseColor, vec4f(red, green, blue, 1.0), uv.x);
  }
`);

const colorNodeWGSL = colorWGSL({
  baseColor,
  uv: uv(),
});
```

Thus, to summarize:

You can use TSL to write shaders for both WebGL and WebGPU.

You can still use GLSL with RawShaderMaterial/ShaderMaterial if you want, but you'll always be limited to WebGL.

You cannot write raw WebGPU shaders the same way you can in WebGL: it's all abstracted away by TSL.

The Node System

While the abstraction layer and the inability to write raw WebGPU shaders were a bit of a bummer for me, as I tend to prefer low-level constructs, TSL's Node System is the key feature that sold me on it and made me embrace it.

Until now, when we wanted to modify an existing material in WebGL, even for the smallest of changes, we had to painstakingly work our way through string concatenation of several shader chunks using the onBeforeCompile method.

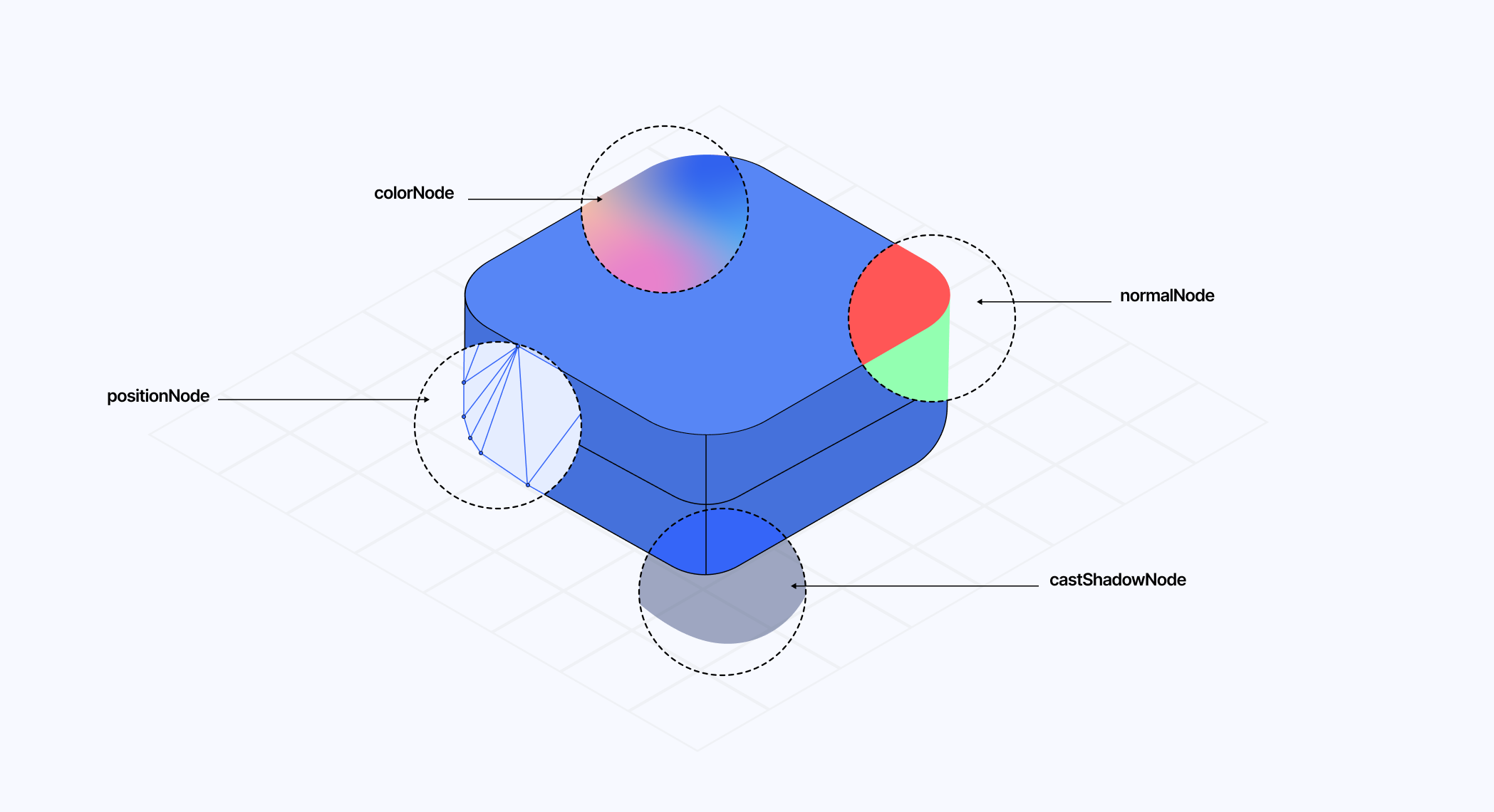

Today, TSL provides hooks for each of its NodeMaterials, such as colorNode, normalNode, or positionNode, to respectively modify the color, normals, and vertex position output of your material very easily. Even better, every stock Three.js material now has a "node equivalent" compatible with this new API:

MeshStandardMaterial has MeshStandardNodeMaterial

MeshBasicMaterial has MeshBasicNodeMaterial

MeshPhysicalMaterial has MeshPhysicalNodeMaterial

and so on.

Of course, there are other nodes I did not list here, and many more to come as TSL expands its feature set. The ones mentioned are, to me, the most important and the first you will encounter when playing with TSL and shaders.

Writing Shaders for WebGPU with TSL

As we ease into our WebGPU and TSL journey, let’s start by setting up our WebGPURenderer with React Three Fiber and recreating some classic shader examples you might have come across online, or that I walked through in past blog posts.

Using the WebGPU renderer

To get access to the new WebGPU features, we first need to instantiate the WebGPU renderer in our React Three Fiber project through the gl prop of the Canvas component. Luckily, Canvas was updated in React Three Fiber v9 to support an asynchronous gl prop [4], a necessary feature since WebGPURenderer requires asynchronous initialization.

The following code snippet showcases how we will initialize React Three Fiber projects featuring WebGPU features going forward:

Initializing the WebGPU renderer in React Three Fiber

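```jsx
import * as THREE from 'three/webgpu';
import { Canvas, extend } from '@react-three/fiber';

// Register the WebGPU build of Three.js (including node materials) with R3F
extend(THREE);

const App = () => (
  <Canvas
    gl={async (props) => {
      const renderer = new THREE.WebGPURenderer(props);
      // WebGPURenderer must be initialized asynchronously before rendering
      await renderer.init();
      return renderer;
    }}
  >
    {/* scene content */}
  </Canvas>
);
```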

While initializing WebGPURenderer may give the impression that we made an arbitrary decision to make our 3D scene WebGPU-only, that is, in fact, not the case. WebGPURenderer supports both WebGPU and WebGL, and will fall back to WebGL when WebGPU is not available on the device attempting to render the scene.

If, throughout the development of your scene, you want to ensure that it still functions correctly in WebGL, you can pass the forceWebGL: true parameter to your WebGPURenderer.

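```jsx
<Canvas
  gl={async (props) => {
    const renderer = new THREE.WebGPURenderer({
      ...props,
      // Use the WebGL backend even when WebGPU is available
      forceWebGL: true,
    });
    await renderer.init();
    return renderer;
  }}
>
  {/* scene content */}
</Canvas>
```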

The demo below sets up a React Three Fiber scene powered by WebGPU, including some simple shaders written with TSL. You can try forcing it to render with WebGL and see for yourself that it will yield the same result, which is one of the key advantages of writing shaders with TSL.

Displacement and Normals

As a first, more elaborate example, we will create a classic blob by introducing some organic and dynamic displacement to the sphere at the center of the previous scene. To make it ambitious and highlight the benefits of TSL and its node system, we can try to do all of this on top of meshPhongNodeMaterial by writing a shader for:

the positionNode, to modify the position of vertices over time

the normalNode, to adjust the normal data of our sphere accordingly so the lighting and shadows of our moving sphere match the displacement of its vertices

all of that while maintaining some of the key physical properties that are core to this material.

To organize my TSL code, I like to:

Wrap all the nodes inside a useMemo.

Return a nodes property that contains a field for each of our TSL shaders.

Return a uniforms property that contains all the uniforms we want to adjust.

My organization for TSL nodes

```js
const { nodes, uniforms } = useMemo(() => {
  const time = uniform(0.0);

  const positionNode = Fn(() => { /* ... */ })();

  const normalNode = Fn(() => { /* ... */ })();

  return {
    nodes: { positionNode, normalNode },
    uniforms: { time },
  };
}, []);
```

This provides a more structured approach to organizing our TSL code within our React code, ensuring that key constructs such as uniforms are not accidentally recreated.

We will start by writing a custom positionNode to displace the vertices of our sphere mesh following a noise. I transpiled an implementation of Perlin noise to TSL using a transpiler; the resulting cnoise function is the one we'll use in this example, and its full code is available in the playground at the end of the section.

Adding displacement for our sphere using positionNode

```js
const updatePos = Fn(([pos, time]) => {
  // Offset the vertex by a Perlin noise value that evolves over time
  const noise = cnoise(vec3(pos).add(vec3(time))).mul(0.2);
  return add(pos, noise);
});

const positionNode = Fn(() => {
  const pos = positionLocal;
  const updatedPos = updatePos(pos, time);

  return updatedPos;
})();
```

To animate our blob over time, we would want to pass a time uniform to adjust the positions of the vertices over time. However, between WebGL and WebGPU, uniforms work a bit differently:

WebGL uniforms are per-program global variables that you set directly with the gl.uniform* family of calls (e.g. gl.uniform1f) and call it a day.

WebGPU uniforms live in a uniform buffer, which requires explicit memory allocation [5].

TSL hides this difference between convenience and control from us: to declare a uniform, use the uniform function, regardless of whether we write our position shader in TSL or in GLSL/WGSL.
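For a sense of what TSL is hiding, here is a small sketch of the manual bookkeeping a raw WebGPU uniform buffer involves. It assumes a hypothetical WGSL uniform struct with a time float and a color vec3f, and packs it by hand following WGSL's alignment rules: a vec3f is 16-byte aligned, and the struct size is rounded up to a multiple of its 16-byte alignment. The actual WebGPU upload is only shown in a comment, since it needs a real GPUDevice.

```typescript
// Hypothetical WGSL declaration this layout targets:
//   struct Uniforms { time: f32, color: vec3f };
//   @group(0) @binding(0) var<uniform> u: Uniforms;
//
// WGSL layout rules applied here:
//   time  : f32   -> align 4,  size 4  -> offset 0
//   color : vec3f -> align 16, size 12 -> offset 16 (bytes 4..15 are padding)
//   struct align = 16, size = roundUp(16, 28) = 32 bytes

function roundUp(alignment: number, value: number): number {
  return Math.ceil(value / alignment) * alignment;
}

const UNIFORMS_BYTE_SIZE = roundUp(16, 16 + 12); // 32

function packUniforms(time: number, color: [number, number, number]): Float32Array {
  const data = new Float32Array(UNIFORMS_BYTE_SIZE / 4);
  data[0] = time;          // byte offset 0
  data.set(color, 16 / 4); // byte offset 16, after 12 bytes of padding
  return data;
}

// With a real device, the data would be uploaded every frame with something like:
//   const buffer = device.createBuffer({
//     size: UNIFORMS_BYTE_SIZE,
//     usage: GPUBufferUsage.UNIFORM | GPUBufferUsage.COPY_DST,
//   });
//   device.queue.writeBuffer(buffer, 0, packUniforms(elapsed, [1, 0.5, 0.25]));
// and bound to the shader through a bind group.

console.log(UNIFORMS_BYTE_SIZE);                          // 32
console.log(Array.from(packUniforms(2, [1, 0.5, 0.25]))); // [2, 0, 0, 0, 1, 0.5, 0.25, 0]
```

Getting a single offset wrong here silently feeds garbage to the shader, which is exactly the kind of detail the uniform function takes care of for us.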

Declaring and updating a TSL uniform

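```jsx
const { nodes, uniforms } = useMemo(() => {
  const time = uniform(0.0);

  // ... positionNode and normalNode declarations ...

  return {
    nodes: { /* ... */ },
    uniforms: { time },
  };
}, []);

useFrame((state) => {
  const { clock } = state;

  // Update the uniform's value every frame; TSL handles the upload
  uniforms.time.value = clock.getElapsedTime();
});
```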

Now we can work our way towards recomputing normals. We did something similar in Shining a light on Caustics with Shaders and React Three Fiber, where we had to resort to modifying the shader code of the source material quite heavily and had to be very rigorous about it to ensure we would not break some of its key features. Thanks to the node system, we now simply need to:

Expand the positionNode to compute the displaced normals.

Send the recomputed normals through a varying.

Declare a normalNode where we can return that data to be consumed by the material.

```js
const { nodes, uniforms } = useMemo(() => {
  const time = uniform(0.0);
  // Varying used to pass the recomputed normal to the fragment stage
  const vNormal = varying(vec3(), 'vNormal');

  const updatePos = Fn(([pos, time]) => {
    const noise = cnoise(vec3(pos).add(vec3(time))).mul(0.2);
    return add(pos, noise);
  });

  // Returns a vector perpendicular to the original normal
  const orthogonal = Fn(() => {
    const pos = normalLocal;
    If(abs(pos.x).greaterThan(abs(pos.z)), () => {
      return normalize(vec3(negate(pos.y), pos.x, 0.0));
    });

    return normalize(vec3(0.0, negate(pos.z), pos.y));
  });

  const positionNode = Fn(() => {
    const pos = positionLocal;

    const updatedPos = updatePos(pos, time);
    const theta = float(0.001);

    // Build a local tangent frame around the original normal
    const vecTangent = orthogonal();
    const vecBiTangent = normalize(cross(normalLocal, vecTangent));

    // Two neighbouring points, slightly offset along the surface
    const neighbour1 = pos.add(vecTangent.mul(theta));
    const neighbour2 = pos.add(vecBiTangent.mul(theta));

    // Displace the neighbours with the same function as the vertex itself
    const displacedNeighbour1 = updatePos(neighbour1, time);
    const displacedNeighbour2 = updatePos(neighbour2, time);

    const displacedTangent = displacedNeighbour1.sub(updatedPos);
    const displacedBitangent = displacedNeighbour2.sub(updatedPos);

    // The new normal is perpendicular to both displaced tangents
    const normal = normalize(cross(displacedTangent, displacedBitangent));

    // Flip the normal if it points inward
    const displacedNormal = normal
      .dot(normalLocal)
      .lessThan(0.0)
      .select(normal.negate(), normal);

    vNormal.assign(displacedNormal);

    return updatedPos;
  })();

  const normalNode = Fn(() => {
    const normal = vNormal;
    // The material expects a view-space normal
    return transformNormalToView(normal);
  })();

  return {
    nodes: { positionNode, normalNode },
    uniforms: { time },
  };
}, []);
```
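The normal recomputation above is a finite-difference trick: displace two points lying a tiny step away along the surface, then take the cross product of the resulting tangent vectors. Here is a CPU-side TypeScript sketch of the same steps, which can help sanity-check the math outside of a shader. The displace callback stands in for updatePos; the callbacks used below (an identity and a uniform scale) are hypothetical test cases for which the recomputed normal should match the original one.

```typescript
type Vec3 = [number, number, number];

const add = (a: Vec3, b: Vec3): Vec3 => [a[0] + b[0], a[1] + b[1], a[2] + b[2]];
const sub = (a: Vec3, b: Vec3): Vec3 => [a[0] - b[0], a[1] - b[1], a[2] - b[2]];
const scale = (a: Vec3, s: number): Vec3 => [a[0] * s, a[1] * s, a[2] * s];
const dot = (a: Vec3, b: Vec3): number => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
const cross = (a: Vec3, b: Vec3): Vec3 => [
  a[1] * b[2] - a[2] * b[1],
  a[2] * b[0] - a[0] * b[2],
  a[0] * b[1] - a[1] * b[0],
];
const normalize = (a: Vec3): Vec3 => scale(a, 1 / Math.hypot(a[0], a[1], a[2]));

// Same branches as the TSL orthogonal function: a vector perpendicular to n
function orthogonal(n: Vec3): Vec3 {
  return Math.abs(n[0]) > Math.abs(n[2])
    ? normalize([-n[1], n[0], 0])
    : normalize([0, -n[2], n[1]]);
}

function recomputeNormal(
  pos: Vec3,
  normal: Vec3,
  displace: (p: Vec3) => Vec3,
  theta = 0.001,
): Vec3 {
  const displacedPos = displace(pos);
  const tangent = orthogonal(normal);
  const bitangent = normalize(cross(normal, tangent));

  // Displace two neighbours offset along the tangent and the bitangent
  const displacedTangent = sub(displace(add(pos, scale(tangent, theta))), displacedPos);
  const displacedBitangent = sub(displace(add(pos, scale(bitangent, theta))), displacedPos);

  const n = normalize(cross(displacedTangent, displacedBitangent));
  // Safeguard: flip the result if it ended up pointing inward
  return dot(n, normal) < 0 ? scale(n, -1) : n;
}

// On a sphere centered at the origin, the undisplaced normal is the normalized position
const p = normalize([0.3, 0.8, -0.5]);
console.log(recomputeNormal(p, p, (q) => q));             // approximately p
console.log(recomputeNormal(p, p, (q) => scale(q, 1.2))); // approximately p
```

With a real noise-based displacement, the same function returns a normal that follows the bumps, which is what lets the Phong lighting match the deformed blob.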

Like uniforms, varyings also behave differently between WebGL and WebGPU, so, once again, we rely on a TSL function, varying, to hide that complexity from us.

Now that we declared all the nodes we need alongside their respective shaders, all we need to do for our mesh to leverage them is to pass each node to its corresponding node prop in our node material:

Using nodes in a TSL node material

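```jsx
const { nodes, uniforms } = useMemo(() => {
  // ... time, vNormal, positionNode and normalNode, declared as above ...

  return {
    nodes: { positionNode, normalNode },
    uniforms: { time },
  };
}, []);

useFrame((state) => {
  const { clock } = state;

  uniforms.time.value = clock.getElapsedTime();
});

return (
  <mesh>
    <icosahedronGeometry args={[1.5, 200]} />
    <meshPhongNodeMaterial
      normalNode={nodes.normalNode}
      positionNode={nodes.positionNode}
      emissive={new THREE.Color('white').multiplyScalar(0.25)}
    />
  </mesh>
);
```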

As you can see, the days when we needed onBeforeCompile and a series of complex string concatenations to modify the shader code of Three.js' materials are long gone. We can now rely on the node system to make those modifications on top of existing materials without too much mess. The demo below showcases the result of the code we established above:

Glass Material

In this section, let's take the complexity up a notch and see not only what it takes to build a material from scratch, but also:

How to handle textures as a uniform.

The subtle differences and gotchas between writing the material in TSL vs. WGSL.

Indeed, something I omitted to tell you in the previous part is that the uniform function only supports values of the following types: boolean | number | Color | Vector2 | Vector3 | Vector4 | Matrix3 | Matrix4 (its second, optional type argument defaults to null). This may be a bit confusing at first, especially after building WebGL shaders and routinely passing textures as uniforms in all our experiments. Instead, we need to leverage the texture TSL function.

I'm not going to lie, I was a bit puzzled by this texture function, and still am somewhat as I write these words, because of its dual usage:

On the one hand, you can use it to retrieve a texel at a specific UV in your TSL code.

On the other hand, I also found myself using it as a way to pass a texture as a uniform to a shader written in WGSL, as it was the only way to convert my THREE.Texture type to a proper ShaderNodeObject<THREE.TextureNode> type, which my shader required for texture sampling (technically a texture_2d<f32>).

The following code snippets illustrate this dichotomy, which has lost me quite a few times already:

TSL texture vs WGSL texture

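```js
// TSL: sample myTexture at the current UV directly in the node graph
const tslTexture = texture(myTexture, uv());

// WGSL: wrap the texture (no UV) so it can be passed as a texture_2d<f32>
// argument, alongside a sampler created from it.
// Note: naming this constant `sampler` would shadow the TSL sampler function
// and throw a ReferenceError, hence the different name.
const wgslTexture = texture(myTexture);
const wgslSampler = sampler(wgslTexture);
```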

I'm talking a lot about textures in this part because they are the backbone of my custom implementation of a glass material, which I've documented in Refraction, Dispersion, and other shader light effects. Below is a code snippet featuring its implementation in TSL, on which I want to highlight the following:

The ability to split your TSL into as many functions as you wish by simply declaring them with the Fn function. You can pass arguments to them as objects if you want to have specific names for your variables, or as elements in an array if you prefer them inline.

The ability for your shader to reference global constants from your JavaScript/React code without necessarily having to send them as uniforms (e.g. lightPosition).

Implementation of my refractive glass material in TSL

```js
const lightPosition = [10, 10, 10];

const { nodes, uniforms, utils } = useMemo(() => {
  // ...

  // Arguments received as an object: named parameters
  const classicFresnel = Fn(({ viewVector, worldNormal, power }) => {
    const fresnelFactor = abs(dot(viewVector, worldNormal));
    const inversefresnelFactor = sub(1.0, fresnelFactor);
    return pow(inversefresnelFactor, power);
  });

  // Arguments received as an array: positional parameters
  const sat = Fn(([col]) => {
    const W = vec3(0.2125, 0.7154, 0.0721);
    const intensity = vec3(dot(col, W));
    return mix(intensity, col, 1.265);
  });

  const refractAndDisperse = Fn(({ sceneTex }) => {
    const absorption = 0.5;
    const refractionIntensity = 0.25;
    const shininess = 100.0;
    const noiseIntensity = 0.015;

    const refractNormal = normalWorld.xy
      .mul(sub(1.0, normalWorld.z.mul(0.85)));

    const refractCol = vec3(0.0, 0.0, 0.0).toVar();

    // Regular JavaScript loop: it is unrolled when the shader is generated
    for (let i = 0; i < LOOP; i++) {
      const noise = rand(viewportUV).mul(noiseIntensity);
      const slide = float(i).div(float(LOOP)).mul(0.18).add(noise);

      // Each channel is offset by a different amount to create dispersion
      const refractUvR = viewportUV.sub(
        refractNormal.mul(slide.mul(1.0).add(refractionIntensity)).mul(absorption)
      );
      const refractUvG = viewportUV.sub(
        refractNormal.mul(slide.mul(2.5).add(refractionIntensity)).mul(absorption)
      );
      const refractUvB = viewportUV.sub(
        refractNormal.mul(slide.mul(4.0).add(refractionIntensity)).mul(absorption)
      );

      const red = texture(sceneTex, refractUvR).r;
      const green = texture(sceneTex, refractUvG).g;
      const blue = texture(sceneTex, refractUvB).b;

      refractCol.assign(refractCol.add(vec3(red, green, blue)));
    }

    refractCol.assign(refractCol.div(float(LOOP)));

    // lightPosition is a plain JavaScript constant, not a uniform
    const lightVector = vec3(lightPosition[0], lightPosition[1], lightPosition[2]);
    const viewVector = normalize(cameraPosition.sub(positionWorld));
    const normalVector = normalize(normalWorld);

    const halfVector = normalize(viewVector.add(lightVector));

    const NdotL = dot(normalVector, lightVector);
    const NdotH = dot(normalVector, halfVector);

    const kDiffuse = max(0.0, NdotL);

    const NdotH2 = NdotH.mul(NdotH);
    const kSpecular = pow(NdotH2, shininess);

    const fresnel = classicFresnel({
      viewVector: viewVector,
      worldNormal: normalVector,
      power: 25.0, // assumed: value taken from the WGSL version below
    });

    refractCol.assign(
      refractCol.add(kSpecular.add(kDiffuse).mul(0.01).add(fresnel))
    );

    return vec3(sat(refractCol));
  });

  // ...
}, []);
```
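To get a feel for what the two small helpers of this material compute, here is a plain TypeScript port of classicFresnel and sat: same formulas, evaluated on the CPU. It is only a sketch for building intuition; mix3 and dot3 are helper names introduced here, and the default 1.265 saturation factor is the one from the TSL snippet above.

```typescript
type Vec3 = [number, number, number];

const dot3 = (a: Vec3, b: Vec3): number => a[0] * b[0] + a[1] * b[1] + a[2] * b[2];

// GLSL/WGSL-style mix: a + (b - a) * t, applied per component
const mix3 = (a: Vec3, b: Vec3, t: number): Vec3 => [
  a[0] + (b[0] - a[0]) * t,
  a[1] + (b[1] - a[1]) * t,
  a[2] + (b[2] - a[2]) * t,
];

// 0 when the surface faces the camera, 1 at grazing angles
function classicFresnel(viewVector: Vec3, worldNormal: Vec3, power: number): number {
  const fresnelFactor = Math.abs(dot3(viewVector, worldNormal));
  return Math.pow(1.0 - fresnelFactor, power);
}

// Push a color away from its luminance; an amount above 1 boosts saturation
function sat(col: Vec3, amount = 1.265): Vec3 {
  const W: Vec3 = [0.2125, 0.7154, 0.0721]; // luminance weights, summing to 1
  const luminance = dot3(col, W);
  return mix3([luminance, luminance, luminance], col, amount);
}

console.log(classicFresnel([0, 0, 1], [0, 0, 1], 25)); // 0 (facing the camera)
console.log(classicFresnel([1, 0, 0], [0, 0, 1], 25)); // 1 (grazing angle)
console.log(sat([0.5, 0.5, 0.5])); // gray stays gray (up to float rounding)
console.log(sat([1, 0, 0]));       // red is pushed above 1, green and blue below 0
```

The high Fresnel power keeps the rim effect confined to the silhouette of the mesh, while sat slightly over-saturates the refracted colors to make the dispersion pop.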

The WGSL equivalent is a bit different but interesting nonetheless:

You can use the code TSL function to split your WGSL shaders into reusable chunks; however, the main entry points need to use wgslFn.

Your texture_2d<f32> from your WGSL function becomes a ShaderNodeObject<THREE.TextureNode>, so you first need to call texture with the texture you want to sample as an argument, and create the matching sampler with the sampler function.

Shaders written with the wgslFn or glslFn TSL functions seem to always require their arguments to be passed as an object.

Implementation of my refractive glass material in WGSL

```ts
const { nodes, uniforms, utils } = useMemo(() => {
  // ...

  // Wrap a texture so it can be passed to WGSL as a texture_2d<f32>
  const sceneTextureUniform = texture(new THREE.Texture());
  const sceneTextureSampler = sampler(sceneTextureUniform);

  // code() declares reusable WGSL snippets that wgslFn entry points can include
  const classicFresnel = code(`
    fn classicFresnel(viewVector: vec3f, worldNormal: vec3f, power: f32) -> f32 {
      let fresnelFactor = abs(dot(viewVector, worldNormal));
      let inversefresnelFactor = 1.0 - fresnelFactor;
      return pow(inversefresnelFactor, power);
    }
  `);

  const sat = code(`
    fn sat(col: vec3f) -> vec3f {
      let W = vec3(0.2125, 0.7154, 0.0721);
      let intensity = vec3f(dot(col, W));
      return mix(intensity, col, 1.165);
    }
  `);

  const rand = code(`
    fn rand(uv: vec2f) -> f32 {
      return fract(sin(dot(uv.xy, vec2(12.9898, 78.233))) * 43758.5453);
    }
  `);

  // Main entry point: must be declared with wgslFn
  const refractAndDisperseWGSL = wgslFn(`
    fn refract(sceneTex: texture_2d<f32>, sampler: sampler, normalWorld: vec3f, viewportUV: vec2f, positionWorld: vec3f, cameraPosition: vec3f) -> vec3f {
      let absorption = 0.88;
      let refractionIntensity = 0.25;
      let shininess = 100.0;
      let noiseIntensity = 0.015;

      let refractNormal = normalWorld.xy * (1.0 - normalWorld.z * 0.85);
      var refractCol = vec3(0.0, 0.0, 0.0);

      for (var i = 0; i < LOOP; i++) {
        let noise = rand(viewportUV) * noiseIntensity;
        let slide = f32(i) / f32(LOOP) * 0.08 + noise;

        let refractUvR = viewportUV - refractNormal * (slide * 1.0 + refractionIntensity) * absorption;
        let refractUvG = viewportUV - refractNormal * (slide * 2.5 + refractionIntensity) * absorption;
        let refractUvB = viewportUV - refractNormal * (slide * 4.0 + refractionIntensity) * absorption;

        let red = textureSample(sceneTex, sampler, refractUvR).r;
        let green = textureSample(sceneTex, sampler, refractUvG).g;
        let blue = textureSample(sceneTex, sampler, refractUvB).b;

        refractCol += vec3(red, green, blue);
      }

      refractCol = refractCol / f32(LOOP);

      let lightVector = vec3(-10.0, 10.0, -1.5);
      let viewVector = normalize(cameraPosition - positionWorld);
      let normalVector = normalize(normalWorld);

      let halfVector = normalize(viewVector + lightVector);

      let NdotL = dot(normalVector, lightVector);
      let NdotH = dot(normalVector, halfVector);

      let kDiffuse = max(0.0, NdotL);
      let NdotH2 = NdotH * NdotH;
      let kSpecular = pow(NdotH2, shininess);

      let fresnel = classicFresnel(viewVector, normalVector, 25.0);

      refractCol += vec3(kSpecular + kDiffuse * 0.01 + fresnel);

      return vec3(sat(refractCol));
    }`, [classicFresnel, sat, rand]);

  const refractAndDisperse = Fn(({ sceneTex }: { sceneTex: ShaderNodeObject<THREE.TextureNode> }) => {
    // wgslFn arguments are passed as an object keyed by parameter name
    return refractAndDisperseWGSL({
      sceneTex: sceneTextureUniform,
      sampler: sceneTextureSampler,
      normalWorld,
      viewportUV,
      positionWorld,
      cameraPosition,
    });
  });

  // ...
}, []);
```

The demo below showcases the glass material built in TSL and working on top of the blob that we implemented in the first part.

Now that we have a better grasp of TSL and know how to leverage it to use and write shaders for the new WebGPURenderer, we can bring our focus to one of the headline features of WebGPU that many developers were excited about: compute shaders.

There are now two pipelines we can leverage to build our 3D scenes:

The render pipeline, used for drawing graphics, is the same as the one we're familiar with in WebGL.

The compute pipeline, designed for more general-purpose computation that can be run on the GPU [6].

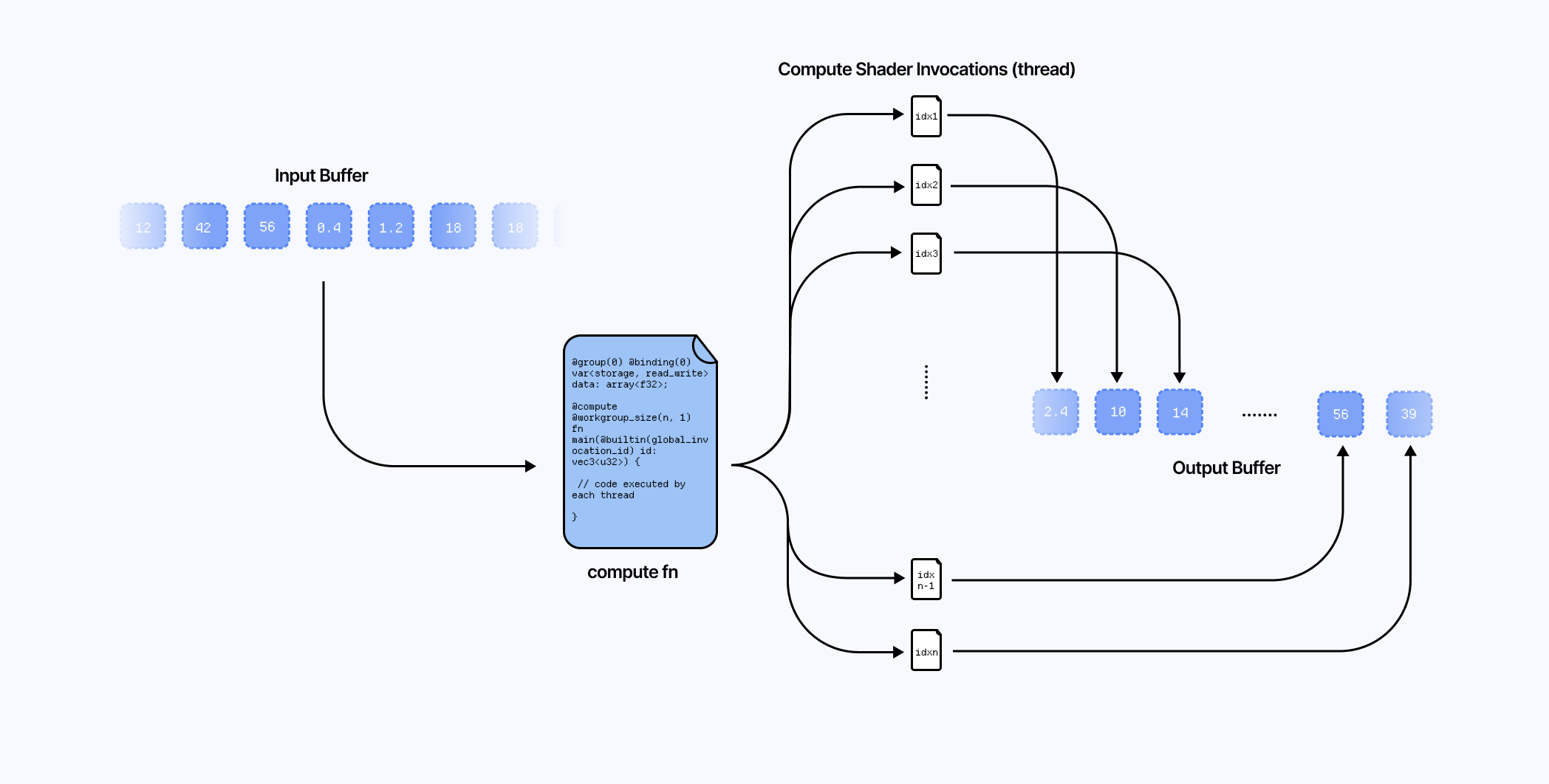

The latter consists of a single compute shader stage (no vertices, no rasterization, no fragments) in which:

We take in arbitrary input data such as textures or buffers.

Execute workgroups of threads, or shader invocations, that process those inputs with a function defined as the compute shader.

Output the resulting data in one or multiple buffers. We can feed those resulting buffers to the render pipeline or back to the CPU.

While our render pipeline shaders are tied to draw calls and rasterization, we can call/execute our compute shaders programmatically, thus letting us decide when and how many times they run.
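To build intuition for this model, here is a CPU-side TypeScript emulation of a 1D compute dispatch. It is not how a GPU executes anything: real invocations run in parallel, in no guaranteed order. It only mirrors the structure, where a kernel is invoked once per thread, identified by its workgroup and local index, reads from an input buffer and writes to an output buffer. The names dispatch1D and Kernel are hypothetical.

```typescript
// A "kernel" is called once per invocation with its global index
type Kernel = (globalIndex: number) => void;

// Emulate launching workgroupCount workgroups of workgroupSize threads each.
// On a GPU these run in parallel; here we simply loop over them.
function dispatch1D(workgroupCount: number, workgroupSize: number, kernel: Kernel): void {
  for (let workgroupId = 0; workgroupId < workgroupCount; workgroupId++) {
    for (let localId = 0; localId < workgroupSize; localId++) {
      // Equivalent of WGSL's global_invocation_id.x
      kernel(workgroupId * workgroupSize + localId);
    }
  }
}

const input = new Float32Array([1, 2, 3, 4, 5]);
const output = new Float32Array(input.length);

// Each invocation processes exactly one element. Extra threads bail out,
// like the bounds check in the instanced-mesh compute shader later in this article.
dispatch1D(Math.ceil(input.length / 4), 4, (i) => {
  if (i >= input.length) return;
  output[i] = input[i] * 2;
});

console.log(Array.from(output)); // [2, 4, 6, 8, 10]
```

Because no invocation depends on another one's result, the order in which the loop visits threads does not matter, which is precisely what allows the GPU to run them all at once.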

Creating Compute Shaders in TSL

Let's examine a first example of how to leverage the compute pipeline in TSL. We'll do a matrix multiplication on the GPU using compute shaders and read back its result on the CPU. Like our previous shader code, we can declare our compute shader either directly in TSL or with the wgslFn function:

Example of a compute shader performing a multiplication of 2 matrices

```js
const buffer = storage(responseBuffer, 'mat4x4f', count);

const matrixMult = wgslFn(`
  fn matrixMult(matrix1: mat4x4f, matrix2: mat4x4f, buffer: ptr<storage, array<mat4x4f>, read_write>) -> void {
    let result = matrix1 * matrix2;
    // ... result is then written into buffer ...
  }
`);
```

We then call our compute shader TSL function and turn the result into a compute node using the .compute method:

```js
const matrixMultComputeNode = matrixMult({
  // ... matrix1, matrix2 and buffer arguments ...
}).compute(count);
```

The resulting computeNode can then be invoked at any time with the gl.computeAsync or gl.compute methods. In the following example, we call it at render time, where we also retrieve the result of our operation using the gl.getArrayBufferAsync function.

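```jsx
const compute2 = useCallback(async () => {
  // Dispatch the matrix multiplication on the GPU and wait for it to complete
  await gl.computeAsync(nodes.matrixMultComputeNode);

  // Copy the storage buffer back to the CPU and view it as 32-bit floats
  const output = new Float32Array(
    await gl.getArrayBufferAsync(buffers.responseBuffer)
  );

  console.log('output:', output);
}, [nodes.matrixMultComputeNode, gl, buffers.responseBuffer]);
```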
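When reading the result back, it helps to know how the floats are laid out: WGSL matrices are column-major, so each mat4x4f occupies 16 consecutive floats, one column after the other. Below is a sketch of a CPU reference for matrix1 * matrix2 under that layout, which you could compare against the Float32Array returned by getArrayBufferAsync. The helper name mat4MulColumnMajor is hypothetical.

```typescript
// Multiply two 4x4 matrices stored column-major: the element at row r,
// column c lives at index c * 4 + r, matching WGSL's mat4x4f memory layout.
function mat4MulColumnMajor(a: ArrayLike<number>, b: ArrayLike<number>): Float32Array {
  const out = new Float32Array(16);
  for (let c = 0; c < 4; c++) {
    for (let r = 0; r < 4; r++) {
      let sum = 0;
      for (let k = 0; k < 4; k++) {
        // (A * B)[r][c] = sum over k of A[r][k] * B[k][c]
        sum += a[k * 4 + r] * b[c * 4 + k];
      }
      out[c * 4 + r] = sum;
    }
  }
  return out;
}

const identity = [1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1];
// Translation by (1, 2, 3): stored in the 4th column, i.e. indices 12 to 14
const translation = [1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 1, 2, 3, 1];

console.log(Array.from(mat4MulColumnMajor(identity, translation)));
// same values as translation
console.log(Array.from(mat4MulColumnMajor(translation, translation).slice(12, 15)));
// [2, 4, 6]: applying the translation twice
```

If the GPU output looks transposed compared to this reference, the matrices were most likely uploaded in row-major order.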

Leveraging compute shaders for your 3D scene

While the previous example is a great use case for compute shaders, you may want to know how this new feature can be leveraged alongside the render pipeline and potentially even improve the performance of your 3D scene.

One of the first practical examples of compute shader usage I encountered in Three.js / React Three Fiber involves instanced meshes. In my first experiments with instanced meshes, I had to set their initial positions in space on the CPU. Today, however, we can delegate that work to the GPU as follows:

Setting the initial position of instanced meshes with a compute shader

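```jsx
const meshRef = useRef();
const { gl } = useThree();

const { nodes, uniforms } = useMemo(() => {
  // One vec3 per instance, stored in a GPU storage buffer
  const buffer = instancedArray(COUNT, 'vec3');

  const computeInstancePosition = wgslFn(`
    fn computeInstancePosition(
      buffer: ptr<storage, array<vec3f>, read_write>,
      count: f32,
      spacing: f32,
      index: u32,
    ) -> void {
      let gridSize = u32(count);
      let gridWidth = u32(sqrt(count));
      let gridHeight = (gridSize + gridWidth - 1u) / gridWidth;

      // Skip threads that have no instance to position
      if (index >= gridSize) {
        return;
      }

      let x = index % gridWidth;
      let z = index / gridWidth;

      // Center the grid around the origin
      let worldX = f32(x) * spacing - f32(gridWidth - 1u) * spacing * 0.5;
      let worldZ = f32(z) * spacing - f32(gridHeight - 1u) * spacing * 0.5;

      buffer[index] = vec3f(worldX, 0.0, worldZ);
    }
  `);

  const computeNode = computeInstancePosition({
    buffer,
    count: COUNT,
    spacing: SPACING, // hypothetical constant: its value is not shown in the original
    index: instanceIndex,
  }).compute(COUNT);

  // Offset each instance by the position computed for it on the GPU
  const positionNode = positionLocal.add(buffer.element(instanceIndex));

  return {
    nodes: { computeNode, positionNode },
    uniforms: {},
  };
}, []);

const compute = useCallback(async () => {
  await gl.computeAsync(nodes.computeNode);
}, [nodes.computeNode, gl]);

// Run the compute shader once, when the mesh is mounted
useEffect(() => {
  if (!meshRef.current) return;
  compute();
}, [compute]);

return (
  <instancedMesh
    ref={meshRef}
    args={[undefined, undefined, COUNT]}
  >
    <icosahedronGeometry args={[0.3, 16]} />
    <meshPhongNodeMaterial
      emissive={new THREE.Color('white').multiplyScalar(0.15)}
      positionNode={nodes.positionNode}
    />
  </instancedMesh>
);
```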

This setup can be illustrated by an animated diagram showing the pieces of each pipeline working together. Here is what happens:


First, we create a buffer through the instancedArray function, specifying the number of instances we want to render and the element type, in this case a vec3. This gives us an array buffer of size COUNT * 3.

We then define the compute shader. I use WGSL here since it's more readable to me, and our scene will only work through WebGPU anyway. This particular shader positions each instance on a grid along the x and z axes based on its instanceIndex, then writes the resulting position to the array buffer we passed as an argument.
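To double-check the grid math before running it on the GPU, here is the body of that compute shader ported line by line to TypeScript. It is a CPU sketch: spacing is a plain parameter here, WGSL's u32 casts and integer division are emulated with Math.floor, and returning null stands in for the shader's early return when index >= gridSize.

```typescript
type Vec3 = [number, number, number];

// CPU port of the per-invocation logic of computeInstancePosition
function gridPosition(index: number, count: number, spacing: number): Vec3 | null {
  const gridSize = Math.floor(count);
  const gridWidth = Math.floor(Math.sqrt(count));
  const gridHeight = Math.floor((gridSize + gridWidth - 1) / gridWidth);

  // Out-of-range invocations do nothing
  if (index >= gridSize) return null;

  const x = index % gridWidth;
  const z = Math.floor(index / gridWidth);

  // Center the grid around the origin on the x and z axes
  const worldX = x * spacing - (gridWidth - 1) * spacing * 0.5;
  const worldZ = z * spacing - (gridHeight - 1) * spacing * 0.5;

  return [worldX, 0, worldZ];
}

// 1024 instances -> a 32 x 32 grid centered on the origin
console.log(gridPosition(0, 1024, 1));    // [-15.5, 0, -15.5]
console.log(gridPosition(1023, 1024, 1)); // [15.5, 0, 15.5]
console.log(gridPosition(1024, 1024, 1)); // null: no instance left to place
```

For counts that are not perfect squares, gridHeight rounds up so the last row is only partially filled, which the centering formula still accounts for.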

We run the compute shader COUNT times, creating COUNT threads, each responsible for running the shader for a single instance. In this example, the compute shader runs only once at render time (which is why the compute pipeline animation in the diagram above stops at some point).

Finally, we read the buffer at a given instanceIndex and add the stored position to each instance's positionLocal via positionNode. With TSL and its helper functions, we can easily tweak the behavior of each individual instance using that same instanceIndex.

If needed, the buffer data, or some aspect of it, can be sent to any "fragment-related node" via varying.

Initiating and updating the positions of each instance

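```js
const updatePosition = wgslFn(`
  fn updatePosition(
    position: vec3f,
    time: f32,
    vHeight: ptr<private, f32>,
  ) -> vec3f {
    let waveSpeed = 1.0; // hypothetical value: not shown in the original snippet
    let waveAmplitude = 0.5;
    let waveFrequencyX = 0.75;
    let waveFrequencyZ = 0.75;

    // Two sine waves travelling in mirrored diagonal directions
    let waveOffset = sin(position.x * waveFrequencyX + position.z * waveFrequencyZ - time * waveSpeed) * waveAmplitude;
    let waveOffset2 = sin(-position.x * waveFrequencyX + position.z * waveFrequencyZ - time * waveSpeed) * waveAmplitude;
    let newY = position.y + (waveOffset + waveOffset2) / 2.0;

    // Assumed: forward the height to the fragment stage through the varying
    *vHeight = newY;

    return vec3f(position.x, newY, position.z);
  }
`);

const positionNode = updatePosition({
  position: positionLocal.add(buffer.element(instanceIndex)),
  time,    // assumed: the time uniform
  vHeight, // assumed: a varying declared alongside the other nodes
});
```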
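The wave itself is just two sine waves travelling in mirrored diagonal directions and averaged. Here is a CPU port of updatePosition's math in TypeScript, handy for experimenting with the parameters; waveSpeed is a hypothetical default since the original snippet does not show its value, while the amplitude and frequencies match the WGSL code.

```typescript
type Vec3 = [number, number, number];

// CPU port of the wave math in updatePosition.
// waveSpeed is an assumed value; amplitude and frequencies match the WGSL snippet.
function wavePosition(position: Vec3, time: number, waveSpeed = 1.0): Vec3 {
  const waveAmplitude = 0.5;
  const waveFrequencyX = 0.75;
  const waveFrequencyZ = 0.75;

  const [x, y, z] = position;
  const waveOffset =
    Math.sin(x * waveFrequencyX + z * waveFrequencyZ - time * waveSpeed) * waveAmplitude;
  const waveOffset2 =
    Math.sin(-x * waveFrequencyX + z * waveFrequencyZ - time * waveSpeed) * waveAmplitude;

  // Averaging both waves keeps the displacement within [-waveAmplitude, waveAmplitude]
  const newY = y + (waveOffset + waveOffset2) / 2.0;
  return [x, newY, z];
}

console.log(wavePosition([0, 0, 0], 0)); // [0, 0, 0]
// The two waves mirror each other, so the surface is symmetric in x
console.log(wavePosition([2, 0, 1], 1.5)[1] === wavePosition([-2, 0, 1], 1.5)[1]); // true
```

Because the two diagonal waves are mirror images, their sum produces a pattern that is symmetric about the z axis instead of a single wave sweeping in one direction.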

The demo below showcases our compute pipeline and render pipeline working hand-in-hand, yielding this beautiful moving grid of 1024 meshes.

Workgroup and dispatch sizes

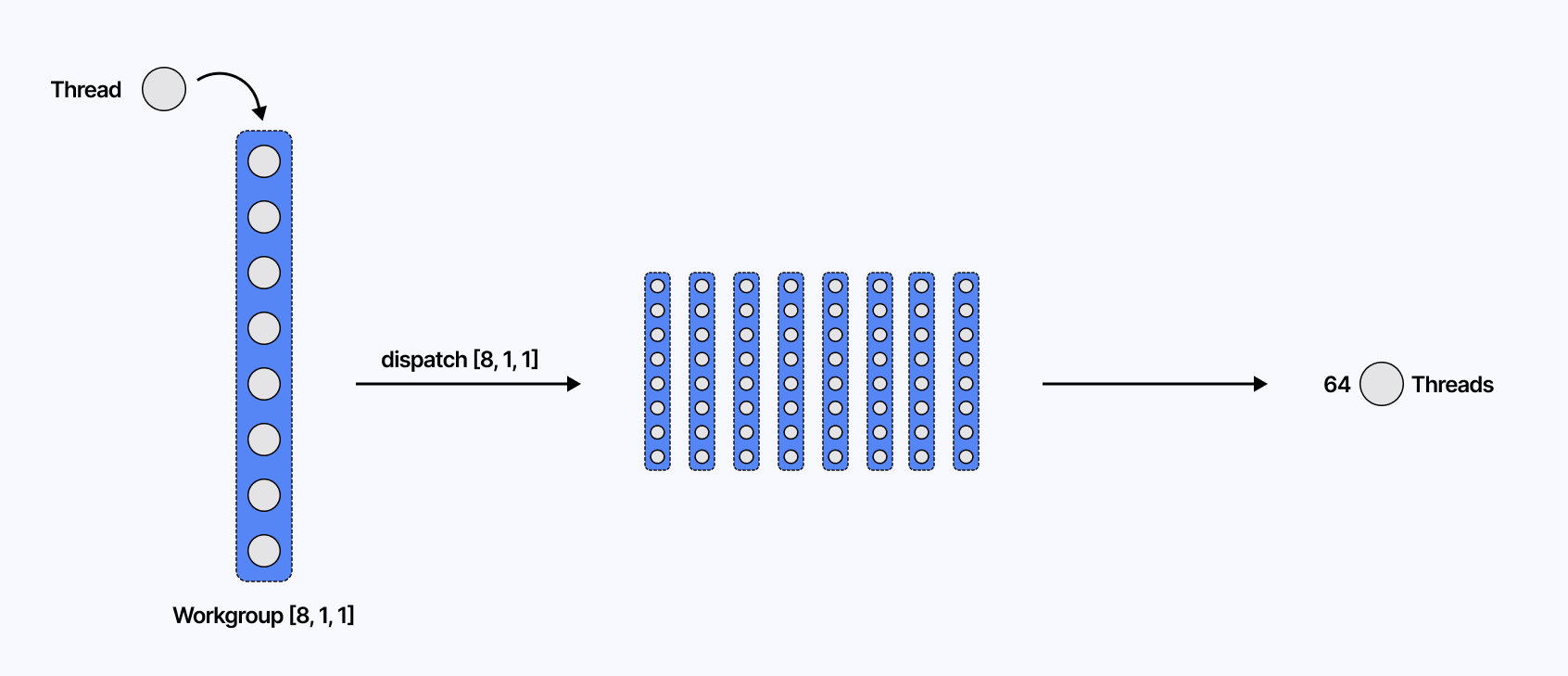

In the previous example, we only passed the count argument to our compute shader's .compute method, but it can actually take an additional argument: workgroupSize.

Passing this extra argument tells WebGPU how many threads/shader invocations belong to one workgroup and has the following characteristics:

This argument has the format [x, y, z] where x, y, and z are the dimensions of the workgroup in each direction of a 3D grid.

You can get the total number of threads per workgroup by multiplying x, y, and z. For example, [64, 1, 1] and [8, 8, 1] both define a workgroup of 64 threads.

The dimensionality of the workgroup depends on how the compute shader is written and the shape of your data/buffers. For 1D buffers, [64, 1, 1] is a natural choice, whereas for 2D buffers, such as a texture, defining it as [8, 8, 1] may be more suitable.

By default, WebGPU caps a workgroup at 256 threads (its maxComputeInvocationsPerWorkgroup limit). You can try setting [512, 1, 1], and all you'd get would be a warning from WebGPU.

In Three.js, it is set to [64, 1, 1] by default [7].

Thus, in our previous demo, considering COUNT = 1024 instances, we would need to launch 1024 / 64 = 16 workgroups. More generally, the number of workgroups is ceil(COUNT / workgroupSize.x) in the 1D case.
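This arithmetic is easy to get off by one, so here is a small TypeScript sketch of it (the helper names are hypothetical, not Three.js APIs). The number of workgroups is rounded up, which means the last workgroup may contain threads with no element to process; that is why our compute shader starts with a bounds check.

```typescript
// Number of workgroups needed to cover `count` elements in 1D
function workgroupCount(count: number, workgroupSizeX = 64): number {
  return Math.ceil(count / workgroupSizeX);
}

// Threads that are launched but have no element to process
function idleThreads(count: number, workgroupSizeX = 64): number {
  return workgroupCount(count, workgroupSizeX) * workgroupSizeX - count;
}

console.log(workgroupCount(1024)); // 16
console.log(idleThreads(1024));    // 0
console.log(workgroupCount(1000)); // 16
console.log(idleThreads(1000));    // 24, skipped by the index >= gridSize check
```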

Now there is also a "secret third thing" called the dispatch size. By default, when using the .compute method, you don't have to worry about it, as TSL/Three.js computes a dispatch size for you from count and the given (or default) workgroup size [8].

If, however, you want to decide yourself how many workgroups to launch, you can use the .computeKernel method instead, which was recently added to the Three.js codebase, and pass the dispatch size when calling compute.

Example usage of computeKernel

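```js
// Workgroup size: 8 x 8 x 1 = 64 threads per workgroup
computeNode = computeInstancePosition({ /* ...arguments... */ }).computeKernel([8, 8, 1]);

// Dispatch size: 4 x 4 x 1 = 16 workgroups
gl.compute(computeNode, [4, 4, 1]);
```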

In our case, since we have 1024 instances:

With the default workgroup size of [64, 1, 1], we could use a dispatch size of [16, 1, 1], which would create (64 * 16) * (1 * 1) * (1 * 1) = 1024 threads, one for each instance, which is enough for our use case.

For a 2D layout, we could use a dispatch size of [8, 8, 1] with a workgroup size of [4, 4, 1], which would give us (4 * 8) * (4 * 8) * (1 * 1) = 1024 threads.

In a nutshell:

workgroupSize is the number of threads per workgroup.

dispatchSize is the number of workgroups per dimension.

Total threads = (workgroupSize.x * dispatchSize.x) * (workgroupSize.y * dispatchSize.y) * (workgroupSize.z * dispatchSize.z).
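This formula translates directly into code. The TypeScript sketch below checks the configurations discussed in this section and also rejects workgroup sizes above WebGPU's default limit of 256 invocations per workgroup; the function names are hypothetical.

```typescript
type Size3 = [number, number, number];

// WebGPU's default maxComputeInvocationsPerWorkgroup limit
const MAX_INVOCATIONS_PER_WORKGROUP = 256;

function threadsPerWorkgroup([x, y, z]: Size3): number {
  return x * y * z;
}

function totalThreads(workgroupSize: Size3, dispatchSize: Size3): number {
  const perWorkgroup = threadsPerWorkgroup(workgroupSize);
  if (perWorkgroup > MAX_INVOCATIONS_PER_WORKGROUP) {
    throw new Error(`Workgroup of ${perWorkgroup} threads exceeds the default limit`);
  }
  return (
    workgroupSize[0] * dispatchSize[0] *
    workgroupSize[1] * dispatchSize[1] *
    workgroupSize[2] * dispatchSize[2]
  );
}

console.log(totalThreads([64, 1, 1], [16, 1, 1])); // 1024: 1D layout
console.log(totalThreads([4, 4, 1], [8, 8, 1]));   // 1024: 2D layout
console.log(totalThreads([8, 8, 1], [4, 4, 1]));   // 1024: workgroup [8, 8, 1], dispatch [4, 4, 1]
```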

We won't use dispatch size in any examples, but I thought it was important to mention it as it informs us of the inner workings of the compute pipeline.

Powering Particles with Compute Shaders

In the first parts of this article, we walked through the basics of WebGPU/TSL and its Node System while exploring the potential of compute shaders for our 3D work. Using them alongside instancedMesh is only one of their many use cases, and I wanted to dedicate the following sections of this article to more practical examples that not only fill in the blanks when it comes to porting specific work to TSL, but are also good candidates for the compute pipeline.

The first practical use case I found interesting, and one that showed many benefits once ported to TSL and moved to compute shaders, is particles.

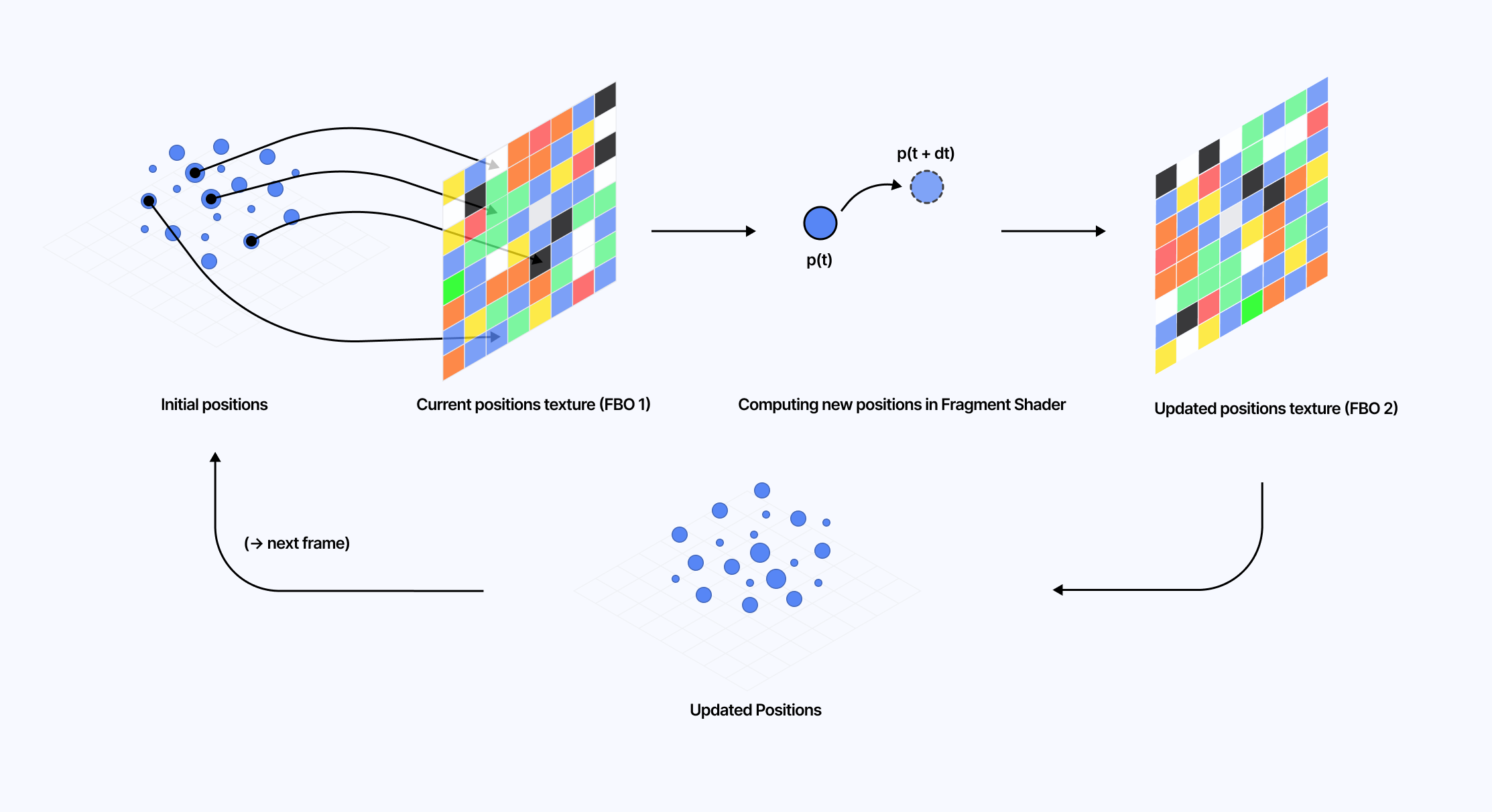

In 2022, I wrote an article covering many aspects of particles. Among those, my favorite one was GPGPU particles. Back then, we used a Frame Buffer Object (FBO) to render off-screen into a texture holding the position data of each particle for a given frame. After updating each of those positions via a fragment shader, we would then pass the resulting texture to the final shader to read the new positions and render the particles.

Today, all of that can be moved to the compute shader! Not only the position update, but also the instantiation of the particles themselves, which historically we've always done on the CPU.

Setting up our Particle System with Compute Shaders

Let's start by setting up a scene that demonstrates how to instantiate a particle system using the compute pipeline.

We will use sprite as our "mesh" for particles, alongside the material it pairs with: spriteNodeMaterial. Akin to instancedMesh, this construct takes a count prop, which corresponds to the number of particles we wish to render on screen. Its node material can take many of the properties we've already covered in this article, such as positionNode or colorNode, allowing us to customize our particle system with shaders.

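Using sprite and spriteNodeMaterial

```jsx
<sprite count={COUNT}>
  <spriteNodeMaterial
    // ...other props, such as positionNode or colorNode
    blending={THREE.AdditiveBlending}
  />
</sprite>
```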

If we were to render this as is, all the particles would render at (0, 0, 0). We can set up an instancedArray of vec3 that will hold all the initial positions for our particles. Then, we can use a compute shader that we'll dub computeInit to initialize the particles at the desired position in that buffer.

Compute shader setting the initial positions of our particle system

```js
const spawnPositionsBuffer = instancedArray(COUNT, 'vec3');
const spawnPosition = spawnPositionsBuffer.element(instanceIndex);

// Pseudo-random value in [0, 1) derived from an index
const hash = wgslFn(`
  fn hash(index: u32) -> f32 {
    return fract(sin(f32(index) * 12.9898) * 43758.5453);
  }
`);

const computeInitWgsl = wgslFn(`
  fn computeInit(
    spawnPositions: ptr<storage, array<vec3f>, read_write>,
    index: u32
  ) -> void {
    let h0 = hash(index);
    let h1 = hash(index + 1u);
    let h2 = hash(index + 2u);
    // Random point within a sphere of radius 2, in spherical coordinates
    let distance = sqrt(h0 * 4.0);
    let theta = h1 * 6.28318530718; // 2 * PI
    let phi = h2 * 3.14159265359; // PI
    let x = distance * sin(phi) * cos(theta);
    let y = distance * sin(phi) * sin(theta);
    let z = distance * cos(phi);
    spawnPositions[index] = vec3f(x, y, z);
  }
`, [hash]);

// One thread per particle
const computeNode = computeInitWgsl({
  spawnPositions: spawnPositionsBuffer,
  index: instanceIndex,
}).compute(COUNT);

const positionNode = Fn(() => {
  const pos = spawnPosition;
  return pos;
})();
```

In the code snippet above:

We initialize all the positions of our particles randomly within a sphere of radius 2. The storage buffer for our particles will get filled at render time.

We then read each particle's position from the buffer using instanceIndex and pass it to the positionNode of our spriteNodeMaterial.
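To see what computeInit produces, the sketch below ports the same hash and spherical-coordinate mapping to plain JavaScript, assuming h0 = hash(index). It is only a CPU approximation: JavaScript uses 64-bit floats, so the values will not match the GPU's f32 results exactly. It also illustrates that the points stay within radius 2 without being uniformly distributed in the sphere: a uniform volume distribution would need a cube root for the radius and phi = acos(1 - 2u), so this mapping places more points near the center and the poles.

```js
// CPU port of the WGSL hash: fract(sin(f32(index) * 12.9898) * 43758.5453).
// WGSL defines fract(x) as x - floor(x), which is reproduced here.
function hash(index) {
  const v = Math.sin(index * 12.9898) * 43758.5453;
  return v - Math.floor(v);
}

// Same mapping as computeInit (assuming h0 = hash(index)): radius sqrt(h0 * 4) in [0, 2].
function spawnPosition(index) {
  const h0 = hash(index);
  const h1 = hash(index + 1);
  const h2 = hash(index + 2);

  const distance = Math.sqrt(h0 * 4.0);
  const theta = h1 * 2 * Math.PI; // azimuth
  const phi = h2 * Math.PI; // polar angle

  return [
    distance * Math.sin(phi) * Math.cos(theta),
    distance * Math.sin(phi) * Math.sin(theta),
    distance * Math.cos(phi),
  ];
}

const points = Array.from({ length: 1024 }, (_, i) => spawnPosition(i));
const maxRadius = Math.max(...points.map((p) => Math.hypot(...p)));
console.log(maxRadius); // at most 2, up to floating-point rounding
```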

Attractors with Compute Shaders

With the initialization alone, we already have a good use case for using the compute pipeline: what used to be done on the CPU is now performed on the GPU. However, we can do better!

In the case of GPGPU particles, we can replace the entire FBO and texture-writing process with a single compute shader that we run on every frame. This greatly simplifies the implementation of a particle system where the position of a particle at time t depends on its previous position at time t - dt, e.g., one driven by the differential equations of an attractor.

Here, we will add an extra storage buffer called offsetPosition that will contain all the positions of our particles at a given frame. These offset positions will continuously update based on their previous values as we call the compute shader to fill them on every frame.

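Updating the position of our particles on every frame with a compute shader

```js
const { nodes } = useMemo(() => {
  const spawnPositionsBuffer = instancedArray(COUNT, 'vec3');
  const offsetPositionsBuffer = instancedArray(COUNT, 'vec3');

  const spawnPosition = spawnPositionsBuffer.element(instanceIndex);
  const offsetPosition = offsetPositionsBuffer.element(instanceIndex);

  // ...computeInit from the previous snippet

  // Displacement of a particle for one time step of the Thomas attractor
  const thomasAttractor = wgslFn(`
    fn thomasAttractor(pos: vec3<f32>) -> vec3<f32> {
      let x = pos.x;
      let y = pos.y;
      let z = pos.z;
      let b = 0.208186; // assumed value, commonly used for this attractor
      let dt = 0.01; // assumed time step
      let dx = (-b * x + sin(y)) * dt;
      let dy = (-b * y + sin(z)) * dt;
      let dz = (-b * z + sin(x)) * dt;
      return vec3(dx, dy, dz);
    }
  `);

  // Each frame: offset += displacement computed at (spawn + offset)
  const computeNodeUpdate = Fn(() => {
    const updatedOffsetPosition = thomasAttractor({
      pos: spawnPosition.add(offsetPosition),
    });
    offsetPosition.addAssign(updatedOffsetPosition);
  })().compute(COUNT);

  const positionNode = Fn(() => {
    const pos = spawnPosition.add(offsetPosition);
    return pos;
  })();

  return { nodes: { computeNodeUpdate, positionNode } };
}, []);

// gl comes from useThree(); dispatch the update on every frame
useFrame(() => {
  gl.compute(nodes.computeNodeUpdate);
});
```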

We can then get the offset position of a given instance using instanceIndex and add it to its corresponding spawn position. The diagram below illustrates this example of a compute pipeline running on every frame to update a particle system:

As for the scene itself, the demo that follows combines the compute shader used to instantiate our particle system with the one that updates their position over time. In this specific case, the attractorFunction represents a Thomas Attractor.
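The per-frame update can be mirrored on the CPU to check the math. The sketch below is plain JavaScript, not TSL, and its constants are assumptions: b ≈ 0.208186 is a value commonly used for the Thomas attractor, and dt = 0.01 is an arbitrary time step. Each iteration of the loop corresponds to one dispatch of computeNodeUpdate: the displacement is computed at spawn + offset and accumulated into offset, which amounts to an explicit Euler integration step.

```js
// Assumed constants: b ≈ 0.208186 is a commonly used chaotic value; dt is an arbitrary step.
const B = 0.208186;
const DT = 0.01;

// One Euler step of the Thomas attractor, same form as the WGSL thomasAttractor():
// returns the displacement to add to the particle's offset for this frame.
function thomasStep([x, y, z], b = B, dt = DT) {
  return [
    (-b * x + Math.sin(y)) * dt,
    (-b * y + Math.sin(z)) * dt,
    (-b * z + Math.sin(x)) * dt,
  ];
}

// Mirrors the compute loop for a single particle: every frame,
// offset += thomasStep(spawn + offset); the rendered position is spawn + offset.
function simulate(spawn, frames) {
  const offset = [0, 0, 0];
  for (let frame = 0; frame < frames; frame++) {
    const pos = spawn.map((s, i) => s + offset[i]);
    const delta = thomasStep(pos);
    for (let i = 0; i < 3; i++) offset[i] += delta[i];
  }
  return spawn.map((s, i) => s + offset[i]);
}

console.log(simulate([0.5, -1.0, 1.5], 5000));
```

Because |sin| ≤ 1, the -b * x damping term keeps each coordinate bounded by roughly 1 / b, which is why the particles stay gathered around the attractor instead of drifting away.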

As someone who's quite fond of post-processing, I was disappointed to find out that effects with TSL weren't as well documented as I had expected, which made porting scenes from standard React Three Fiber with @react-three/postprocessing a bit tricky at first. I had to look at a couple of examples from the Three.js examples repository and figure out through trial and error how to make them work on top of my existing React Three Fiber setup.

This section aims to make this migration a bit easier for people getting started, while also providing a practical case for compute shaders with post-processing effects.

Setting up custom post-processing effects with TSL on React Three Fiber

Similar to what we established in the second part of this article, I also like to organize my post-processing-related TSL nodes inside useMemo. TSL provides a few helper functions we're going to use to set up our post-processing pipeline:

pass, the node that creates a new render pass [9]

getTextureNode, a method from PassNode that returns a texture node for a given output name. By default, it can return either the diffuse texture ('output') or the depth texture ('depth').

setMRT, a method from PassNode that allows you to reference additional render targets (hence the name MRT, for Multiple Render Targets).

Organizing my post-processing nodes

```js
const { textures } = useMemo(() => {
  const scenePass = pass(scene, camera);
  const diffuse = scenePass.getTextureNode('output');
  const depth = scenePass.getTextureNode('depth');

  const outputNode = diffuse;

  return { textures: { diffuse, depth, outputNode } };
}, [scene, camera]);
```

Then we need to:

Create a new instance of THREE.PostProcessing against our WebGPU renderer.

Set its outputNode property to any pass we want, and save the resulting instance to a React ref for later reference.

Call the render method of our post-processing instance.

The tricky part was to figure out where to call render in the first place. I knew it would be within useFrame, but at first glance, that did nothing. To make this work, we need to set the renderPriority argument of useFrame to 1. Doing so has the following effect:

It disables React Three Fiber's automatic rendering.

It executes our post-processing useFrame after any other useFrame we may have [10], thus ensuring the underlying scene is fully rendered before our post-processing pipeline takes effect.

Rendering our post-processed output

```js
useEffect(() => {
  const postProcessing = new THREE.PostProcessing(gl);
  postProcessing.outputNode = outputNode;
  postProcessingRef.current = postProcessing;

  if (postProcessingRef.current) {
    postProcessingRef.current.needsUpdate = true;
    postProcessingRef.current.outputNode = customPass(outputNode);
  }

  return () => {
    postProcessingRef.current = null;
  };
}, [gl, outputNode]);

// renderPriority = 1: disables automatic rendering and runs after the default-priority callbacks
useFrame(() => {
  if (postProcessingRef.current) {
    postProcessingRef.current.render();
  }
}, 1);
```

We can now create a custom post-processing pass that can be implemented with TSL or WGSL/GLSL shaders (whichever we may like). Here's the boilerplate code that I've used in my work:

Example of a custom shader effect node written in TSL

```js
import * as THREE from 'three/webgpu';
import { Fn, vec4, nodeObject } from 'three/tsl';

class CustomEffectNode extends THREE.TempNode {
  constructor(inputNode) {
    super('vec4');
    this.inputNode = inputNode;
  }

  setup() {
    const inputNode = this.inputNode;

    const effect = Fn(() => {
      const input = inputNode;
      // Boost the red channel to tint the scene
      return vec4(input.r.add(0.25), input.g, input.b, 1.0);
    });

    const outputNode = effect();

    return outputNode;
  }
}

const customPass = (node) => nodeObject(new CustomEffectNode(node));
```

The code snippet above can be used as a starting point for your own post-processing work. Here, our effect shader increases the red color channel of the input texture, which should result in a tinted scene if everything is set up correctly.

Post-processing effects with Compute Shaders

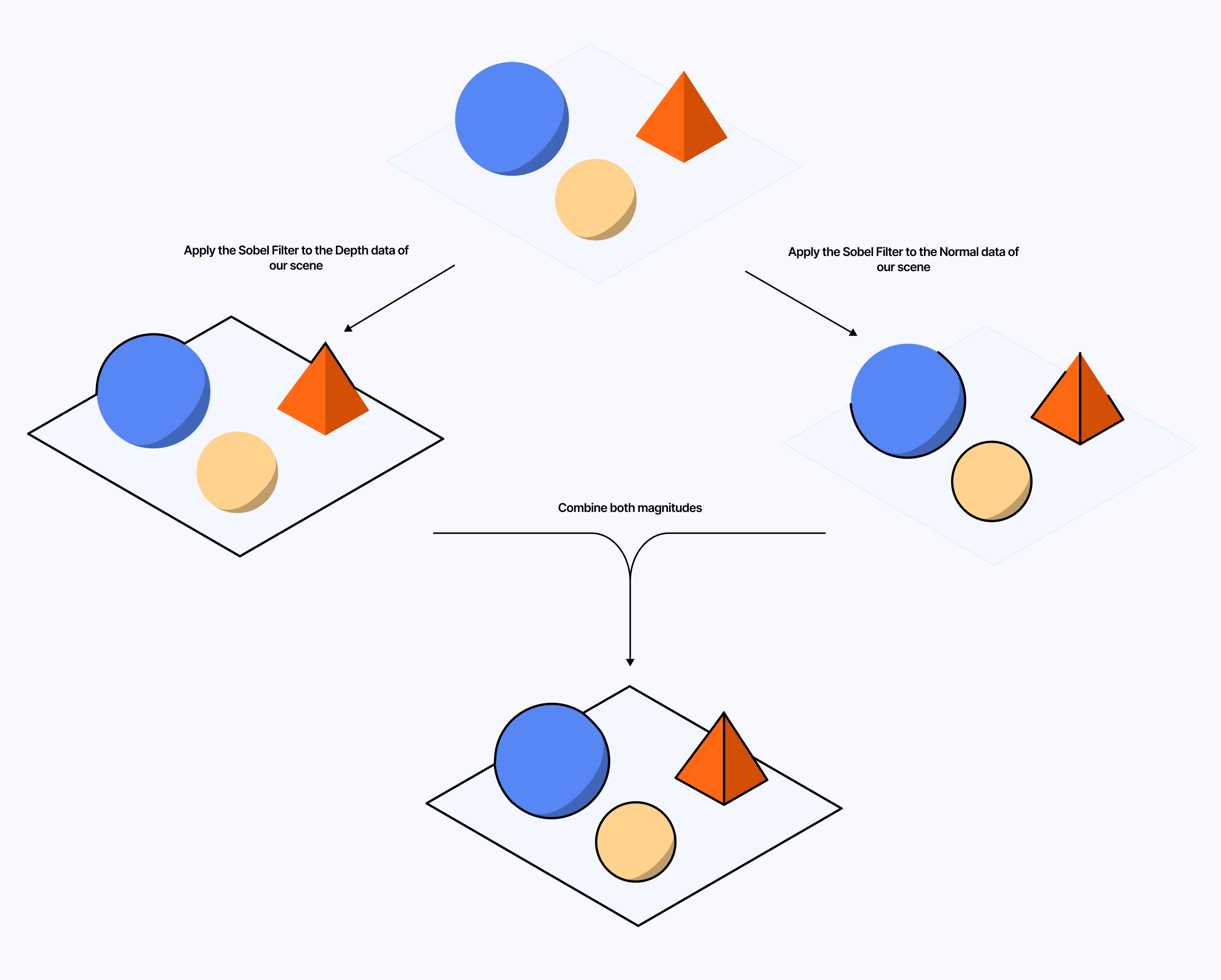

Now that we know how to work with post-processing in React Three Fiber with TSL, let's build an example that leverages the compute pipeline alongside a custom shader effect to stylize our scene. For this section, we'll reimplement the outline effect I introduced in "Moebius-style post-processing and other stylized shaders", which is based on an edge detection filter called the Sobel filter.

As a reminder, to get our edge detection filter working, we will first need:

The depth texture of the scene. This will inform us of sharp differences in depth where we'd want to draw outlines to differentiate foreground and background.

The normal texture of the scene. This will inform us of any sharp changes of direction for any mesh in our scene. Likewise, we want to draw outlines to highlight the details within a mesh.

The combination of both rates of change/gradients (normal and depth) gives us an overall magnitude of change, i.e., the strength of an edge at a given position on the screen. We can then correlate this strength to either the intensity or the thickness of the outline.
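As a reminder of what the filter computes, here is a minimal CPU sketch in plain JavaScript. The helper names are hypothetical, and this is not the article's shader code. It applies the 3×3 Sobel kernels to a small scalar grid, clamping coordinates at the borders, and combines the results the same way the compute shader does with GDepth + GNormal. Treating the normal buffer as three scalar channels and summing their gradients is an assumption made for illustration.

```js
// Sobel kernels for horizontal (gx) and vertical (gy) gradients.
const SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]];
const SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]];

// Gradient magnitude of a scalar field (array of rows) at pixel (x, y).
// Neighbor coordinates are clamped to the grid (an assumed edge-handling choice).
function sobelMagnitude(field, x, y) {
  const h = field.length;
  const w = field[0].length;
  let gx = 0;
  let gy = 0;
  for (let j = -1; j <= 1; j++) {
    for (let i = -1; i <= 1; i++) {
      const sx = Math.min(Math.max(x + i, 0), w - 1);
      const sy = Math.min(Math.max(y + j, 0), h - 1);
      const v = field[sy][sx];
      gx += SOBEL_X[j + 1][i + 1] * v;
      gy += SOBEL_Y[j + 1][i + 1] * v;
    }
  }
  return Math.hypot(gx, gy);
}

// Overall edge strength: depth gradient plus the gradients of each normal channel.
function edgeMagnitude(depth, normalChannels, x, y) {
  const gDepth = sobelMagnitude(depth, x, y);
  const gNormal = normalChannels.reduce((sum, channel) => sum + sobelMagnitude(channel, x, y), 0);
  return gDepth + gNormal;
}

// A vertical depth edge between columns 1 and 2, with flat normals.
const depth = [
  [0, 0, 1],
  [0, 0, 1],
  [0, 0, 1],
];
const flat = [
  [0.5, 0.5, 0.5],
  [0.5, 0.5, 0.5],
  [0.5, 0.5, 0.5],
];
console.log(edgeMagnitude(depth, [flat, flat, flat], 1, 1)); // 4
```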

In my original write-up on the topic, I did those calculations directly in the final effect. Today, though, we can:

Leverage a compute shader to fill a StorageTexture with the magnitude at each UV.

Pass this StorageTexture as an argument to our final effect, which is now only responsible for interpreting that data to draw the final outlines.

Call our compute shader once, or on every frame if the scene is dynamic.

[Diagram: Texture Buffer → Effect Fragment Shader]

In the compute phase:

We set up and pass a THREE.StorageTexture with a size matching our screen's size.

```js
const storageTexture = new THREE.StorageTexture(
  window.innerWidth * window.devicePixelRatio,
  window.innerHeight * window.devicePixelRatio
);
```

We then calculate the x and y coordinates by converting our instanceIndex into 2D pixel coordinates using the dimensions of our texture.

```js
// Column: index modulo the texture width
const posX = instanceIndex.mod(
  int(window.innerWidth * window.devicePixelRatio)
);

// Row: index divided by the texture width
const posY = instanceIndex.div(window.innerWidth * window.devicePixelRatio);
```

From those, we can also derive our UV coordinates, which are necessary to compute the depth and normal magnitudes.

```js
const fragCoord = uvec2(posX, posY);
// UV in [0, 1); the variable name here is assumed
const uvCoord = vec2(
  float(fragCoord.x).div(float(window.innerWidth * window.devicePixelRatio)),
  float(fragCoord.y).div(float(window.innerHeight * window.devicePixelRatio))
);
```

Once we have calculated the overall magnitude, we can then write to the texture using the textureStore TSL function.

```js
const magnitude = GDepth.add(GNormal);
textureStore(storageTexture, fragCoord, vec4(magnitude, 0.0, 0.0, 1.0));
```

We then set up our compute shader to create one thread per pixel of our screen, i.e., window.innerWidth * window.devicePixelRatio * window.innerHeight * window.devicePixelRatio threads. We can then call it using gl.compute in our useFrame, ensuring it updates the texture containing our magnitude data on every frame to handle any camera angle change or mesh movement.
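The index-to-pixel mapping and the thread count can be sanity-checked on the CPU. This plain-JavaScript sketch mirrors posX = index mod width, posY = index div width, and the UV computation from the snippets above; the helper names are hypothetical. It assumes an integer texture size (for example Math.floor(window.innerWidth * window.devicePixelRatio)) and a 1D workgroup size of 64. It also shows that when width × height is not a multiple of 64, the last workgroup contains threads whose posY falls outside the texture, which a bounds check in the shader is a common way to guard against.

```js
// Flat thread index -> integer pixel coordinates, as in posX / posY.
function indexToPixel(index, width) {
  return { x: index % width, y: Math.floor(index / width) };
}

// Pixel coordinates -> UV, dividing by the texture size as in the TSL snippet.
function pixelToUv(x, y, width, height) {
  return { u: x / width, v: y / height };
}

// One thread per pixel, grouped into (assumed) 1D workgroups of 64 threads.
function pixelDispatch(width, height, workgroupSizeX = 64) {
  const threads = width * height;
  const workgroups = Math.ceil(threads / workgroupSizeX);
  return { threads, workgroups, extraThreads: workgroups * workgroupSizeX - threads };
}

console.log(indexToPixel(5, 4)); // { x: 1, y: 1 }
console.log(pixelToUv(2, 1, 4, 2)); // { u: 0.5, v: 0.5 }
console.log(pixelDispatch(1920, 1080)); // { threads: 2073600, workgroups: 32400, extraThreads: 0 }
```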

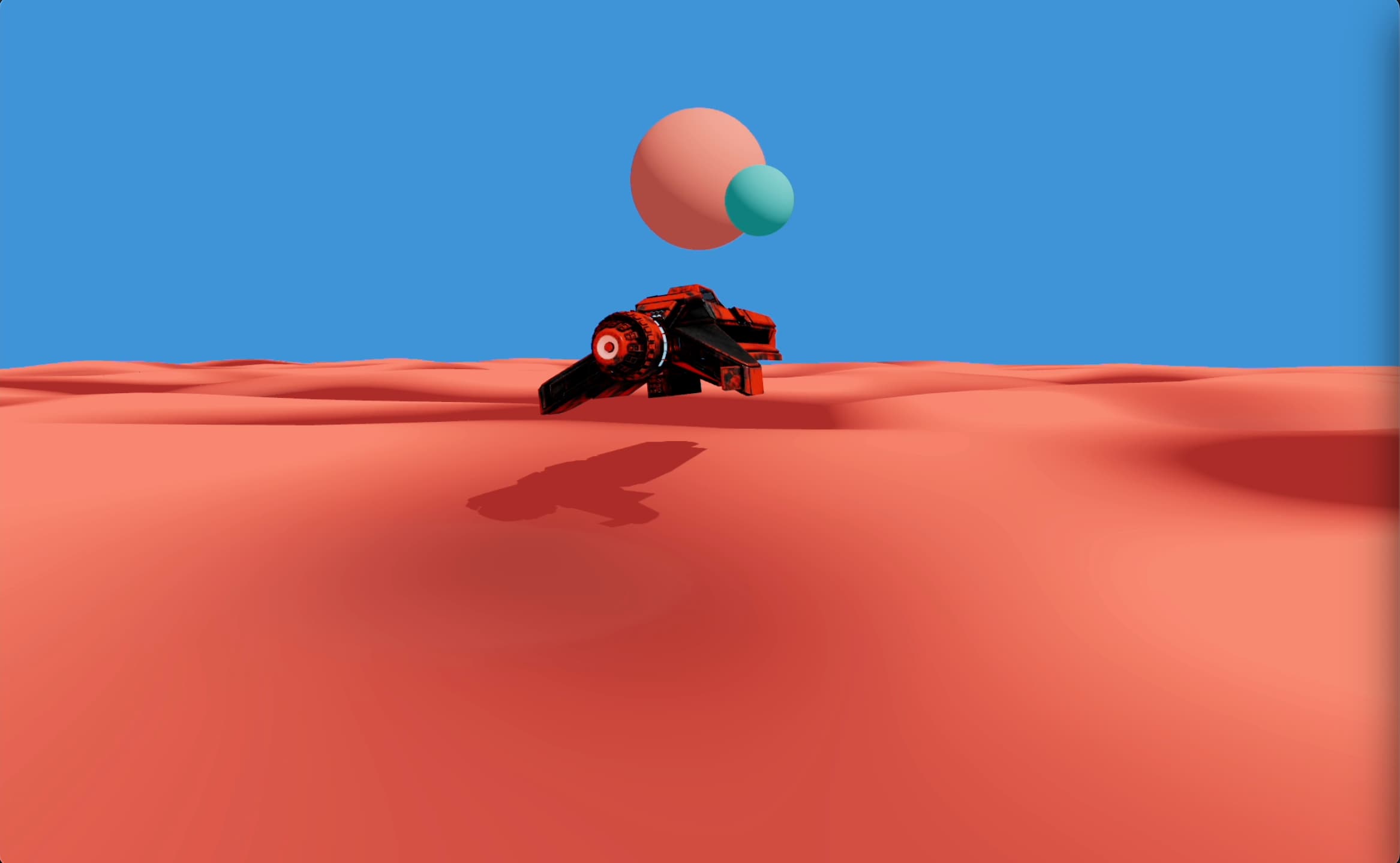

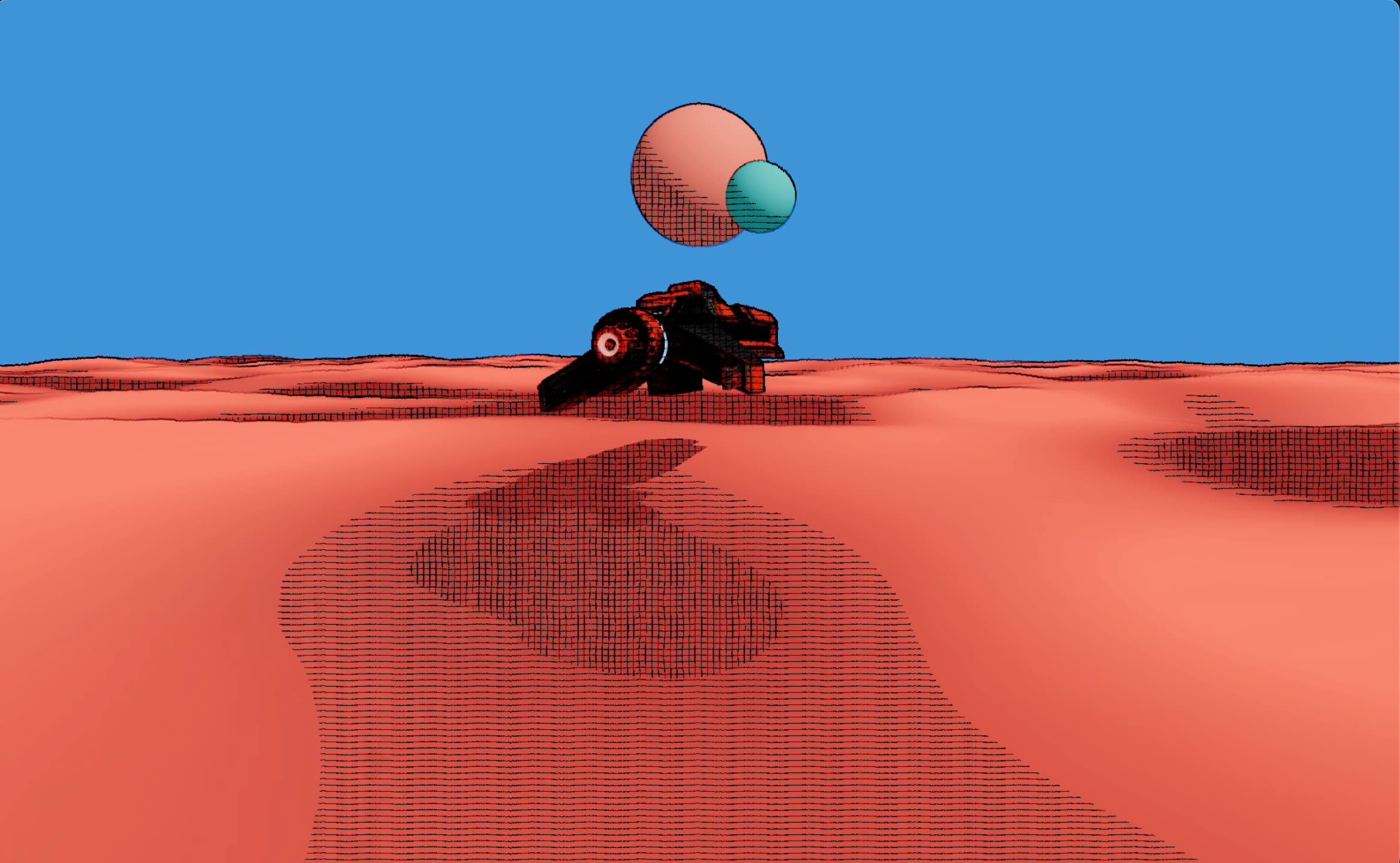

The demo below contains the combination of:

our compute pipeline, running our Sobel filter and storing the resulting magnitudes in a texture.

our render pipeline, running our scene, and applying our custom outline effect based on the magnitude data stored in the texture.

Final thoughts and what's next

I could have written a standalone article for each of the examples and themes presented in this post. However, it felt more natural to showcase them together so that it could act as a guide for anyone looking to transition from classic Three.js/React Three Fiber to this new way to build 3D for the web. I hope this answers some of the questions you may have had regarding WebGPU and TSL, and provides clarity on aspects that may not be immediately apparent from the available documentation and examples.

If I had to write down a couple of my own thoughts at this point in my learning journey, they would be the following:

The node system is life-changing. I really like it. I think many other developers will agree with me on this one.

TSL abstracts a lot of things away. This is good, as it is very friendly to beginners and lowers the barrier of entry for shader development, but it can also be a hassle at times, since we give up control in more advanced use cases.

TSL's level of abstraction, while great for working fast and focusing on building, may prompt me to start working with WebGPU outside of Three.js/React Three Fiber. There are projects like TypeGPU that make working with WebGPU more accessible without completely hiding its core features from developers.

TSL is still very much a work in progress: many of its features are loosely documented or not documented at all. This is where most of my frustration with it lies. I often found myself having to infer how a function is used or to dig up an example somewhere in the Three.js repository, which slowed me down in my work. I'd love for a full specification to be written at some point, to remove the guesswork from using it and document the best practices to follow.

In the meantime, as some of those details get ironed out, I will still be writing shaders in GLSL unless I have a specific use case where I'd need compute shaders, like in my recent procedural landscape experiences, which I'm very excited to tell you more about the day this project is ready. If you need more inspiration to apply your newly acquired TSL/WebGPU knowledge, here's the list of all the other scenes I built over the summer. You can try to reproduce these on your own to get even more comfortable with TSL and WebGPU; everything you need was introduced in this article:

A comparison of Performance on WebGPU and WebGL in the Godot game engine

It also depends on whether you use compute shaders or not, since they are a WebGPU-only feature.
