A deep dive into building an automated illustration pipeline for storytelling applications

Introduction

AI image generation has revolutionized creative workflows, but there’s a significant difference between generating a single stunning image and producing hundreds of consistent illustrations for a complete project. When building storylearner.app, we faced the challenge of generating book illustrations that maintained visual consistency while telling compelling stories through imagery.

We used Gemini's powerful multimodal models, mainly because they are fast and the experimental versions are available for free.

This article explores the technical and creative challenges of large-scale AI illustration generation, showcasing techniques for achieving visual consistency and building robust pipelines that can handle the complexity of full book illustration projects.

Below are images created for a chapter of a book adapted for the storylearner platform:

The article has a companion Colab notebook, so you can try the examples yourself.

The Consistency Challenge

Understanding Visual Consistency in AI

AI image generation models operate somewhat like a company of talented artists, each with amnesia. Every generation is essentially a fresh start unless you provide explicit context. This creates unique challenges when you need to maintain character consistency, style coherence, and narrative flow across hundreds of images.

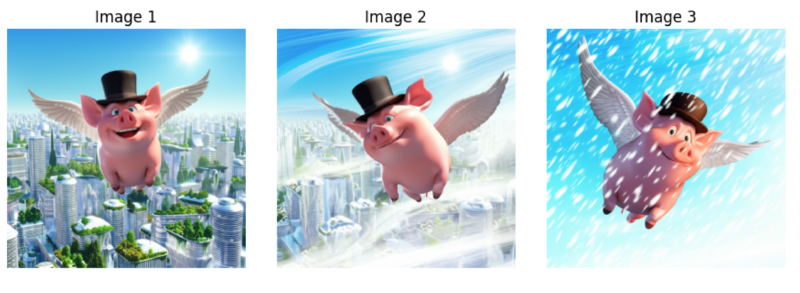

Consider this simple experiment: generating three images of “the same pig with wings and a top hat flying over a futuristic city” in different weather conditions. Even when only the weather description changes and the seed stays fixed, subtle inconsistencies emerge: eye colors change, proportions shift, and the overall character can feel different.

The Impact of Seeds and Prompts

Choosing a seed is like selecting a specific artist from your AI company. Using the same seed with an identical prompt yields consistent results, but even small prompt variations can dramatically alter the output. We found that:

- Same model + same prompt + same seed = identical results

- Same model + same prompt + different seed = completely different character

- Same model + slightly altered prompt + same seed = often produces a different character entirely

This sensitivity means that scaling up requires careful orchestration of all these variables.
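Building the experiment grid in code makes this kind of comparison repeatable. The sketch below is hypothetical: `ImageRequest` and `weather_experiment` are our names, not part of storylearner.app. It keeps one seed fixed and varies only the weather clause, which is the “slightly altered prompt + same seed” case above. The actual model call is left out. With the google-genai Python SDK, we assume the seed would be passed via `types.GenerateContentConfig(seed=...)`.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ImageRequest:
    prompt: str
    seed: Optional[int]  # None lets the service pick a random seed


def weather_experiment(
    subject: str, conditions: list[str], seed: Optional[int]
) -> list[ImageRequest]:
    """Build one request per weather condition, all sharing the same seed.

    Only the trailing weather clause changes, so any drift in the character
    comes from the prompt variation rather than from a different seed.
    """
    return [ImageRequest(prompt=f"{subject}, {condition}", seed=seed) for condition in conditions]


if __name__ == "__main__":
    subject = "the same pig with wings and a top hat flying over a futuristic city"
    conditions = ["on a sunny day", "in heavy rain", "in a snowstorm"]
    for request in weather_experiment(subject, conditions, seed=42):
        print(request)
```

Running the same grid once with a fixed seed and once with `seed=None` makes it easy to compare the cases side by side.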

Building Consistency Through Reference Images

The Reference Image Approach

The most reliable method we found for maintaining character consistency involves using reference images. Here’s how it works:

- Generate an initial character/scene using carefully crafted prompts

- Upload this image as a reference for subsequent generations

- Include explicit instructions like “Use the supplied image as a reference for how the pig should look like”
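Here is a minimal sketch of the second and third steps. The helper assembles a request body with the reference image placed before the text instructions. It assumes the JSON shape of the Gemini REST `generateContent` endpoint (`contents` → `parts`, with a base64 `inline_data` part for the image). The function name and the exact instruction wording are illustrative.

```python
import base64

# Illustrative wording, adapted from the instruction quoted above.
REFERENCE_INSTRUCTION = "Use the supplied image as a reference for how the pig should look."


def build_reference_request(reference_png: bytes, scene_prompt: str) -> dict:
    """Assemble a multimodal request body: reference image first, then instructions."""
    image_part = {
        "inline_data": {
            "mime_type": "image/png",
            # Inline image data travels as base64 text inside the JSON body.
            "data": base64.b64encode(reference_png).decode("ascii"),
        }
    }
    return {
        "contents": [
            {
                "role": "user",
                "parts": [image_part, {"text": REFERENCE_INSTRUCTION}, {"text": scene_prompt}],
            }
        ]
    }
```

Each call to the API is independent, so every request has to carry the reference image explicitly.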

This approach significantly improves consistency, although in a few specific cases it introduces problems of its own.

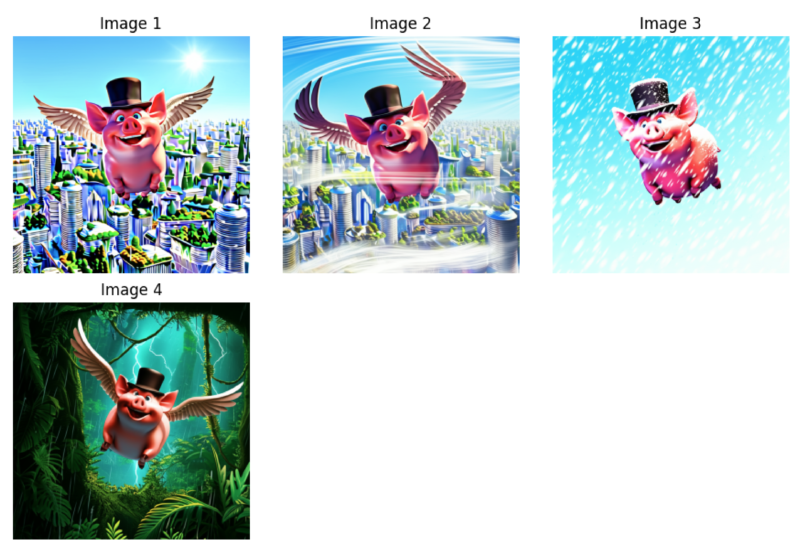

With the following reference image:

You can get this different, but consistent one!

Here is an example that triggers one of these cases:

This can happen when the prompt is very similar to the one that generated the reference image and the seed is identical. So when combining a reference image with a similar prompt, I recommend changing the seed or leaving it unset.

See the companion Colab notebook for details.
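That recommendation can be automated. Compare the new prompt with the one that produced the reference image, and drop the seed when the two are too similar. This is a hypothetical heuristic, not necessarily what the notebook does. `difflib.SequenceMatcher` gives a rough character-level similarity, and the 0.8 threshold is an arbitrary starting point.

```python
import difflib
from typing import Optional

SIMILARITY_THRESHOLD = 0.8  # hypothetical cut-off; tune it on your own prompts


def prompt_similarity(a: str, b: str) -> float:
    """Rough character-level similarity in [0, 1] between two prompts."""
    return difflib.SequenceMatcher(None, a.lower(), b.lower()).ratio()


def seed_for_reference_run(
    prompt: str, reference_prompt: str, reference_seed: Optional[int]
) -> Optional[int]:
    """Decide which seed to use for a generation that also gets a reference image.

    Returns None (let the service pick a seed) when the new prompt is very close
    to the prompt that produced the reference image; otherwise keeps the seed.
    """
    if reference_seed is None:
        return None
    if prompt_similarity(prompt, reference_prompt) >= SIMILARITY_THRESHOLD:
        return None
    return reference_seed
```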

Practical Implementation

The Storylearner.app Illustration Pipeline

High-Level Architecture

Our production pipeline consists of three main stages:

- Idea Generation: Story text + guidelines → 3 illustration concepts per scene

- Idea Selection: Multiple concepts → best ideas chosen for the complete set

- Image Generation: Selected ideas + style guidelines + reference images → final illustrations
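The three stages can be wired together as plain functions. In this sketch each stage is an injected callable; the names and signatures are ours. Any stage can then be swapped for a stub while debugging, or for a different model, without touching the others.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Scene:
    scene_id: str
    text: str


# Hypothetical stage signatures; each one would wrap one or more model calls.
IdeaGenerator = Callable[[Scene, str], list[str]]  # scene, guidelines -> ideas
IdeaSelector = Callable[[dict[str, list[str]]], dict[str, str]]  # all ideas -> chosen idea per scene
ImageGenerator = Callable[[str, str, bytes], bytes]  # idea, style guide, reference -> image


def run_pipeline(
    scenes: list[Scene],
    guidelines: str,
    style_guide: str,
    reference_image: bytes,
    generate_ideas: IdeaGenerator,
    select_ideas: IdeaSelector,
    generate_image: ImageGenerator,
) -> dict[str, bytes]:
    # Stage 1: brainstorm several concepts for every scene independently.
    ideas = {scene.scene_id: generate_ideas(scene, guidelines) for scene in scenes}
    # Stage 2: choose one idea per scene while looking at the whole set at once.
    chosen = select_ideas(ideas)
    # Stage 3: render each chosen idea with the shared style guide and reference image.
    return {
        scene_id: generate_image(idea, style_guide, reference_image)
        for scene_id, idea in chosen.items()
    }
```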

Stage 1: Brainstorming Illustration Ideas

Rather than feeding story text directly into image generation (which often produces poor results), we separate conceptualization from execution.

This approach generates rich, detailed scene descriptions that serve as blueprints for image generation.
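Here is a minimal sketch of the ideation step, assuming the model is asked to answer in JSON. One function builds the prompt from the scene text, story context, and guidelines; another validates the reply. The template wording and the `title`/`description` schema are illustrative assumptions, not the production prompt.

```python
import json

FENCE = "`" * 3  # models often wrap JSON replies in a Markdown code fence

IDEATION_TEMPLATE = """You are planning illustrations for a book chapter.

Guidelines:
{guidelines}

Story context:
{context}

Scene text:
{scene}

Propose exactly {n} distinct illustration ideas for this scene.
Reply with JSON only: a list of objects with "title" and "description" keys."""


def build_ideation_prompt(scene: str, context: str, guidelines: str, n: int = 3) -> str:
    """Fill the (hypothetical) ideation template for one scene."""
    return IDEATION_TEMPLATE.format(guidelines=guidelines, context=context, scene=scene, n=n)


def parse_ideas(raw: str, n: int = 3) -> list[dict]:
    """Parse and validate the model's JSON reply, tolerating a Markdown fence around it."""
    text = raw.strip()
    if text.startswith(FENCE):
        # Drop the opening fence line (which may carry a language tag) and the closing fence.
        text = text.split("\n", 1)[1] if "\n" in text else ""
        text = text.rsplit(FENCE, 1)[0]
    ideas = json.loads(text)
    if not isinstance(ideas, list) or len(ideas) != n:
        raise ValueError(f"expected a JSON list of {n} ideas")
    for idea in ideas:
        if not isinstance(idea, dict) or not {"title", "description"}.issubset(idea):
            raise ValueError("each idea needs 'title' and 'description'")
    return ideas
```

Asking for structured output also simplifies the next stage, because the selector can refer to ideas by position.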

Stage 2: Intelligent Selection

To avoid repetitive illustrations (like “a ship, a ship, a ship” in a sea voyage story), we use an AI selector to choose the best combination of ideas for the complete set.
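The selector can be sketched the same way. List every candidate idea with a scene id and an index, ask for one choice per scene, and validate the answer before any images are generated. The prompt text and the JSON mapping format are our assumptions.

```python
import json


def build_selection_prompt(ideas_by_scene: dict[str, list[str]]) -> str:
    """Show all candidates at once so the model can judge variety across the set."""
    lines = [
        "Below are candidate illustration ideas for every scene of a chapter.",
        "Pick one idea per scene so that the whole set is varied;",
        "avoid repeating the same subject or composition (e.g. a ship in every image).",
        'Reply with JSON only, mapping each scene id to the chosen index, e.g. {"s1": 0}.',
        "",
    ]
    for scene_id, ideas in ideas_by_scene.items():
        lines.append(f"Scene {scene_id}:")
        lines.extend(f"  [{i}] {idea}" for i, idea in enumerate(ideas))
    return "\n".join(lines)


def parse_selection(raw: str, ideas_by_scene: dict[str, list[str]]) -> dict[str, str]:
    """Map the model's {scene_id: index} reply back to idea texts, rejecting bad indices."""
    choice = json.loads(raw)
    if not isinstance(choice, dict):
        raise ValueError("expected a JSON object")
    selected = {}
    for scene_id, ideas in ideas_by_scene.items():
        index = choice.get(scene_id)
        if not isinstance(index, int) or not 0 <= index < len(ideas):
            raise ValueError(f"invalid or missing choice for scene {scene_id!r}")
        selected[scene_id] = ideas[index]
    return selected
```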

Stage 3: Consistent Generation

The final generation stage uses:

- Style guidelines (detailed visual specifications)

- Reference images for style consistency

- Persistent chat sessions for maintaining context

- Retry mechanisms for handling API limitations
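Of these ingredients, the retry mechanism is the most generic. The sketch below wraps any model call in exponential backoff with jitter. `TransientError` is a hypothetical exception type standing in for whatever your client raises on rate limits or timeouts. The `sleep` parameter exists only so the function can be tested without waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures (rate limits, timeouts, empty responses)."""


def with_retries(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run `call`, retrying transient failures with exponential backoff and jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except TransientError:
            if attempt == max_attempts:
                raise  # give up and surface the last error
            # Wait base_delay * 2^(attempt - 1) seconds plus up to 1 s of random jitter.
            sleep(base_delay * 2 ** (attempt - 1) + random.uniform(0, 1))
    raise RuntimeError("unreachable")
```

Usage would look like `with_retries(lambda: generate_image(idea, style_guide, reference))`, where `generate_image` is a hypothetical stand-in for the function that performs the actual model call.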

Visual Guidelines and Style Consistency

Crafting Effective Style Guidelines

We developed comprehensive style guidelines that go beyond simple style names:

- Style: watercolor

- Technique: Combine soft watercolor washes with fine ink line work for contrast and detail.

- Brushwork: Embrace visible brush strokes, blooming, and natural texture.

- Ink Lines: Use varied line weights for depth; apply cross-hatching or stippling for texture.

- Color Palette: Limit to a few harmonious hues with gentle gradations.

- Forms: Use simplified, geometric shapes; focus on essence over detail.

- White Space: Treat negative space as part of the composition.

- Texture: Highlight watercolor paper's natural texture and color variation.

- Atmosphere: Create light, airy scenes with openness and subtle contrast.

- Aesthetic: Preserve a hand-drawn look; embrace imperfections and human touch.

Chat-Based Generation for Context Continuity

Using persistent chat sessions helps maintain consistency within an illustration set, because earlier prompts and images remain in the model's context.
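The sketch below shows the idea with an explicit message history. Each new request is sent together with all earlier prompts and replies, and each session opens with the shared material (style guide, reference image). The `send` callable and the message format are assumptions; the google-genai SDK, for example, offers `client.chats.create(...)` to manage history for you. The `max_turns` cap, which starts a fresh session after a fixed number of images, is our own addition, because very long sessions tend to degrade.

```python
from dataclasses import dataclass, field
from typing import Callable

# Messages follow the Gemini REST "contents" shape: {"role": ..., "parts": [...]}.
Message = dict


@dataclass
class IllustrationChat:
    send: Callable[[list[Message]], Message]  # hypothetical transport: history -> model reply
    opening: list[dict]  # parts that start every session: style guide, reference image, ...
    max_turns: int = 8  # hypothetical cap on generated images per session
    history: list[Message] = field(default_factory=list)

    def ask(self, prompt: str) -> Message:
        model_turns = sum(1 for message in self.history if message["role"] == "model")
        if model_turns >= self.max_turns:
            self.history = []  # start over instead of letting the session drift
        parts = [{"text": prompt}]
        if not self.history:
            # A new session opens with the shared style guide and reference material.
            parts = list(self.opening) + parts
        self.history.append({"role": "user", "parts": parts})
        reply = self.send(list(self.history))  # the full history gives the model context
        self.history.append(reply)
        return reply
```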

Avoiding Common Pitfalls

Critical Design Decisions

Through extensive experimentation, we identified several key strategies:

Avoid Human Close-ups: Character face consistency is extremely challenging. Focus on environmental storytelling instead.

No Violence or Gore: Keep illustrations family-friendly and avoid content that might trigger safety filters.

Diversify Scene Types: The selection stage prevents repetitive imagery across the complete set.

Decouple Ideation from Generation: Separating concept creation from image generation improves both quality and debuggability.
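The first two rules can also be checked mechanically before any image is requested. The sketch below is a deliberately crude, hypothetical keyword filter over idea descriptions, and the patterns are illustrative only. A language model acting as a reviewer would likely catch many more cases.

```python
import re

# Hypothetical, deliberately small patterns for the "no human close-ups" and "no violence" rules.
RISK_PATTERNS = {
    "human close-up": re.compile(
        r"\b(?:portrait|close-?up of (?:an? |the |his |her )?"
        r"(?:face|man|woman|person|character|hero))\b"
    ),
    "violence": re.compile(r"\b(?:blood\w*|gore|gory|corpses?|stabb(?:ed|ing)|severed)\b"),
}


def flag_idea(description: str) -> list[str]:
    """Return the names of the rules an idea description appears to break."""
    text = description.lower()
    return [rule for rule, pattern in RISK_PATTERNS.items() if pattern.search(text)]
```

Flagged ideas can be regenerated or excluded before they reach the selection stage.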

Real-World Example: Illustrating The Three Musketeers

Let’s walk through illustrating a chapter from The Three Musketeers:

Context and Settings

First, we establish the story context:

- Setting: France, primarily Meung and Paris, early 17th century

- Historical Context: Political tensions between French monarchy and Cardinal Richelieu

- Main Characters: D'Artagnan, Athos, Porthos, Aramis, Cardinal Richelieu, Milady de Winter

Generated Ideas

For the chapter opening, our system generated these concepts:

- “The Jolly Miller Inn Chaos”: Exterior scene with a yellow pony, scattered debris, and dramatic lighting hinting at recent altercation

- “Broken Sword”: Close-up of shattered steel on cobblestones, symbolizing lost honor and broken dreams

- “Inn Kitchen Aftermath”: Dimly lit interior with earthenware, bandages, and flickering candlelight

Selection and Generation

The selector chose the most diverse and narratively appropriate ideas, which were then generated using our reference image and style guidelines, producing illustrations that maintain visual consistency while telling the story effectively.

Key Insights and Best Practices

- Consistency vs. Perfection: Perfect consistency isn’t always necessary; visual coherence in style and mood often matters more than exact character matching.

- The Artist Analogy: Think of AI models as artists with amnesia. You need to provide context, references, and clear instructions for each interaction.

- Pipeline Modularization: Breaking the process into idea generation, selection, and execution improves quality and maintainability.

- Style Guidelines Matter: Detailed, specific style descriptions work better than simple style names.

- Reference Images Are Crucial: Upload and reference style examples for the best consistency results.

- Long Sessions Are Fragile: A session often works well up to a point and then fails badly, for example by inserting objects from a previous illustration into the following ones.

Conclusion

There is still a huge gap between a carefully handcrafted demo on an AI company blog and practical use of the technology at scale. Things don’t work well straight out of the box, but you can make these amazing tools work for you with the help of systematic thinking about consistency, quality control, and robust engineering practices.

While challenges remain, I hope the techniques we’ve developed at storylearner.app help you power your own projects!
