You guys remember Spectre?

Spectre was a CPU vulnerability that had its own cute little logo:

If you look at a modern computer, you’ll find that while CPUs can perform hundreds of trillions of operations per second, memory speed is limited to a couple trillion operations per second. This means that most processors spend more time waiting for data to arrive than actually processing it.

CPUs use something called speculative execution to overcome this memory bottleneck. A bug in this optimization is what Spectre targeted, allowing attackers to steal any data processed by the CPU.

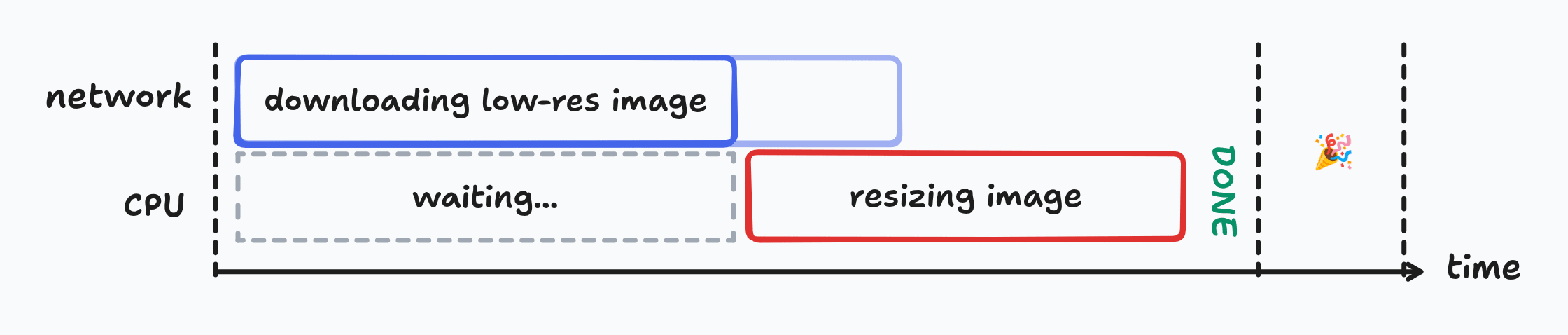

Let’s say you have two processes, where one depends on the output of the other. Say, downloading an image and resizing it to make it smaller.

While the image is downloading, the CPU is basically milling around, doing nothing, waiting for the image to become available for processing.

You can reduce the time wasted by the CPU by making the image available faster. How do you do that? Well, download a smaller image.

Whether you download a 1024x1024 image or a 2048x2048 image, the result of resizing it to a 256x256 image would be practically identical.

This is a form of speculative execution: feeding the output of a smaller/faster approximation of a long running task to another such task to speed up a process.

LLMs think of words in terms of tokens. A token may represent a single word, or a part of a word, or a punctuation mark. In the below image, each colored rectangle represents a single token:

When generating a word, LLMs look at all the tokens so far, do some complex calculations (internal reasoning, sentence & grammatical structure correction, etc.), and then generate the next token. This process is repeated multiple times to create sentences, paragraphs, summaries or fake research papers — tokens in, tokens out; one at a time.
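Here is a minimal sketch of that loop. The `toy_model` function is a made-up stand-in that just replays a canned reply; a real LLM would run a full forward pass over all the tokens at that point. The names `generate`, `toy_model`, `PROMPT` and `REPLY` are mine, purely for illustration.

```python
from typing import Callable, List

# A "model" here is anything that maps the tokens so far to the next token.
Model = Callable[[List[str]], str]

PROMPT = ["What", "is", "the", "capital", "of", "USA", "?"]
REPLY = ["The", "capital", "of", "USA", "is", "Washington", ".", "<eos>"]


def toy_model(tokens: List[str]) -> str:
    """Hypothetical stand-in for an LLM: replays a canned reply by position."""
    position = len(tokens) - len(PROMPT)
    return REPLY[position] if position < len(REPLY) else "<eos>"


def generate(model: Model, prompt: List[str], max_new_tokens: int) -> List[str]:
    """Tokens in, tokens out, one at a time."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        next_token = model(tokens)  # one full model pass per generated token
        if next_token == "<eos>":   # the model signals that it is done
            break
        tokens.append(next_token)
    return tokens


output = generate(toy_model, PROMPT, max_new_tokens=20)
print(" ".join(output))
```

Notice that every generated token costs one call to the model, whether the token is a hard one or an easy one.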

Let’s consider another sentence. Imagine asking ChatGPT…

What is the capital of USA?

A typical reply would look like:

What is the capital of USA? The capital of USA is Washington.

Have a look at the words in bold.

What is the capital of USA? The capital of USA is Washington.

Another example:

What is the sum of 2 and 4? The sum of 2 and 4 is 6.

To generate the tokens The capital of USA or The sum of 2 and 4 after the question mark, we don’t need to do any internal reasoning. We can guess these tokens by looking at the tokens before and copying them.

However, generating the tokens Washington or 6 was something we could not have guessed: it required us knowing that piece of information beforehand, or actually calculating the sum.

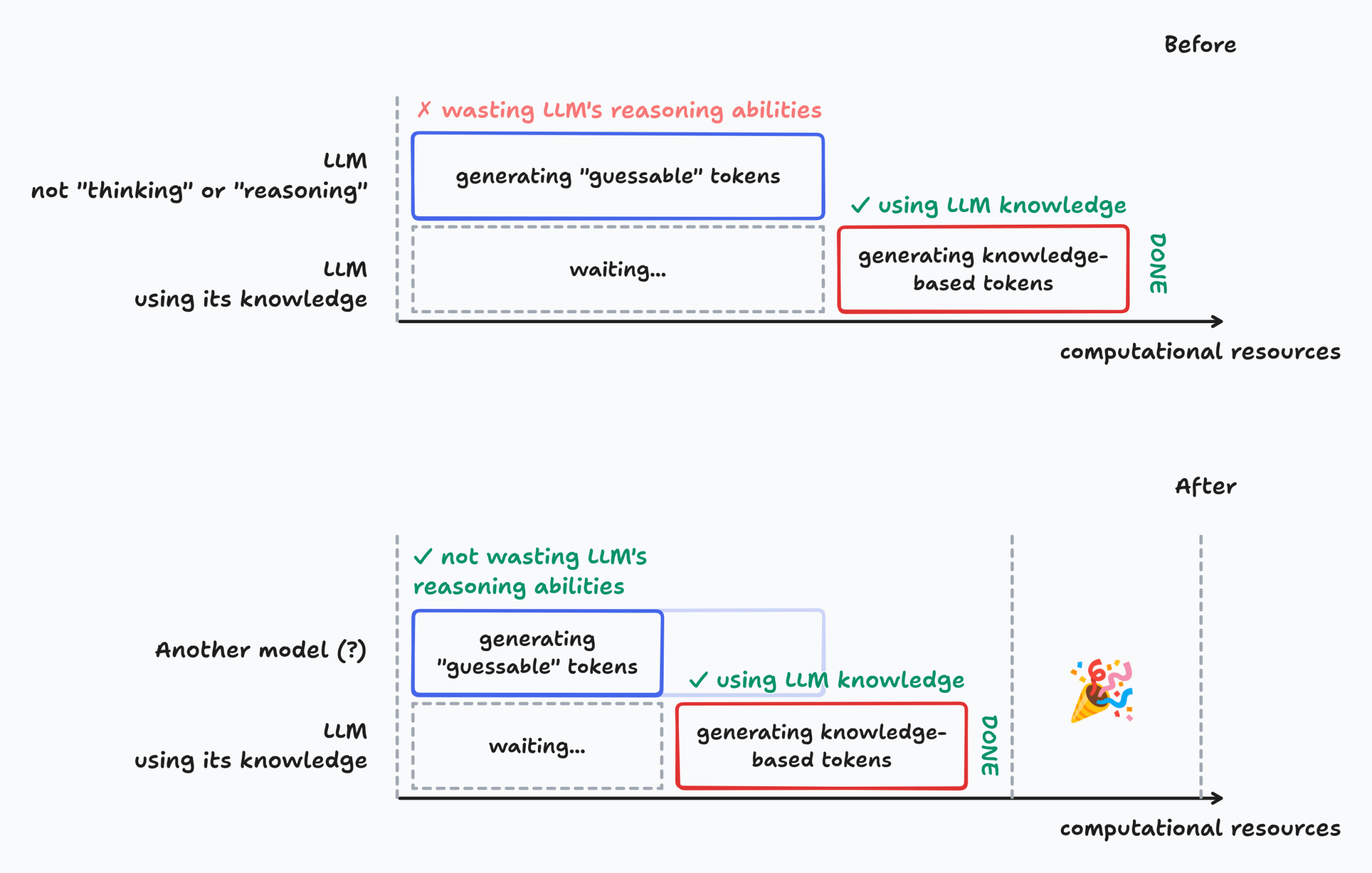

LLMs are good at generating new things: copying the same tokens seems like a waste of their ability. But, because of how they work (one token at a time), they have to spend a lot of time and computational resources generating easily-guessable tokens.

What if, like in our image resizing problem, we could find a way to generate these low-reasoning copied tokens faster?

The first ‘L’ in LLM stands for Large. LLMs are huge: up to hundreds of gigabytes of storage for a single model. For example, the DeepSeek R1 671B model has a disk size of about 400 GB. Let me draw your attention to an earlier paragraph in this article:

…while CPUs can perform hundreds of trillions of operations per second, memory speed is limited to a couple trillion operations per second.

Moving model weights around GPU VRAM is a time-consuming operation — more time-consuming than actually performing the calculations to generate the next token. To make a model faster, we would ideally want to reduce the number of such time-consuming operations. How do we do that?
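To put rough numbers on that claim, here is a back-of-the-envelope sketch. Everything in it is an assumption for illustration: the model size, the bytes per weight, the memory bandwidth and the compute rate are made-up round numbers, not measurements of any real GPU. It also uses the common rule of thumb of about 2 operations (one multiply, one add) per weight per generated token, and ignores everything else.

```python
from typing import Tuple


def per_token_times(
    n_params: float,
    bytes_per_param: float,
    mem_bandwidth: float,
    compute_rate: float,
) -> Tuple[float, float]:
    """Return (seconds spent reading weights, seconds spent on arithmetic) for one token."""
    bytes_read = n_params * bytes_per_param  # every weight is read once per token
    operations = 2.0 * n_params              # roughly one multiply + one add per weight
    return bytes_read / mem_bandwidth, operations / compute_rate


# Hypothetical hardware and model, chosen only to make the arithmetic easy.
N_PARAMS = 7e9         # 7B parameters
BYTES_PER_PARAM = 2    # 16-bit weights
MEM_BANDWIDTH = 1e12   # 1 TB/s of memory bandwidth
COMPUTE_RATE = 100e12  # 100 trillion operations per second

mem_s, compute_s = per_token_times(N_PARAMS, BYTES_PER_PARAM, MEM_BANDWIDTH, COMPUTE_RATE)
print(f"reading weights: {mem_s * 1e3:.2f} ms, arithmetic: {compute_s * 1e3:.2f} ms")
```

With these made-up numbers, reading the weights dominates by a wide margin, which is why the question above is about cutting memory traffic rather than cutting arithmetic.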

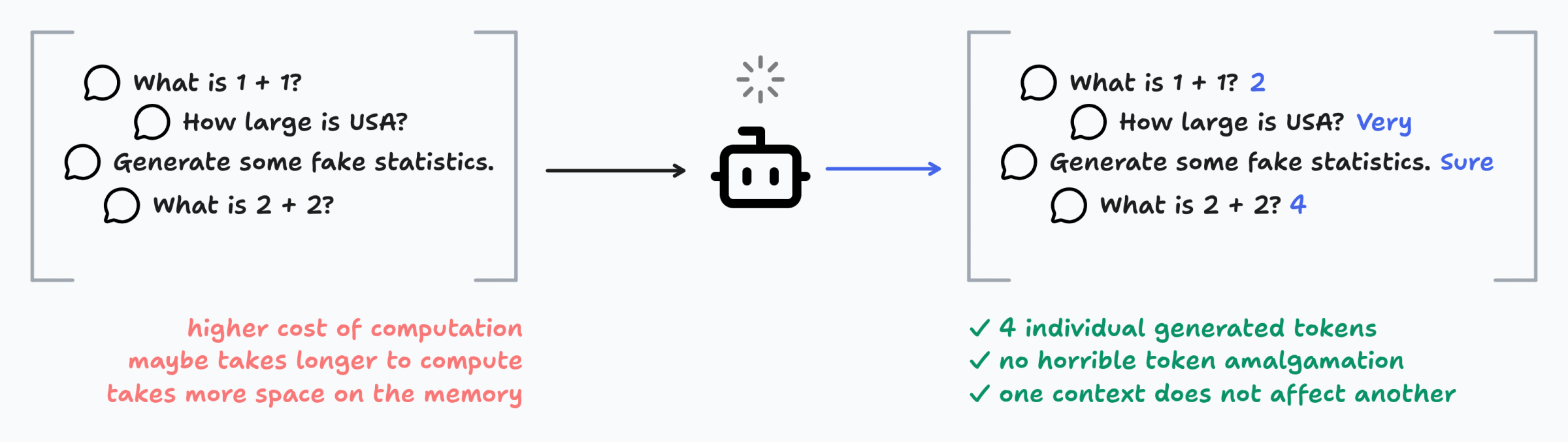

Well, one way is to process more data at once: say, generate tokens for multiple sequences at once. Not multiple tokens for a single sequence at once, but tokens for multiple sequences at once.

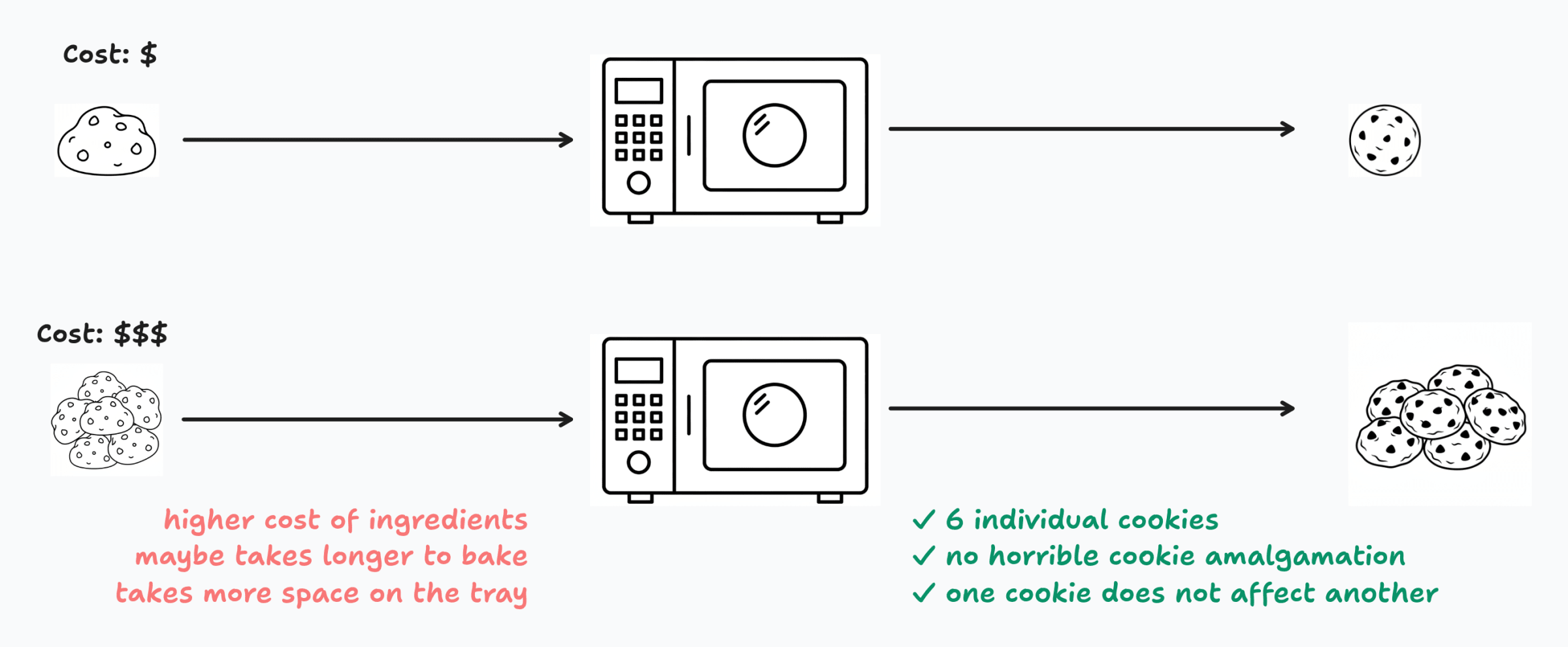

An analogy to this is baking cookies.

You could make cookie dough for a single cookie, put it on a tray and bake it. You’d get a single baked cookie.

Or, you could spend a little more time and money making dough for a batch of 6 cookies, put them on a tray and bake them. You’d get 6 cookies. The dough from one cookie doesn’t magically transfer to another cookie: each cookie maintains its individuality in the process.

Similarly, LLMs can process multiple, unrelated sequences at once and generate the next token for each sequence individually, without context from one sequence affecting another. The maths behind how LLMs work (matrices, attention, etc.) enables this.
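The cookie tray can be sketched with a single toy "layer" in plain Python. This is an illustration under heavy assumptions, not how a real inference engine is written: the weights are a tiny made-up matrix, each "sequence" is just one small vector, and the `reads` counter is my own stand-in for how often a row of weights gets fetched from memory.

```python
from typing import List

Vector = List[float]
Matrix = List[Vector]


def forward_one(weights: Matrix, x: Vector, reads: List[int]) -> Vector:
    """Run one sequence through the layer, fetching every weight row for it."""
    out = []
    for row in weights:
        reads[0] += 1  # this row is fetched for this one sequence only
        out.append(sum(w * v for w, v in zip(row, x)))
    return out


def forward_batch(weights: Matrix, batch: List[Vector], reads: List[int]) -> List[Vector]:
    """Run a whole batch through the layer, fetching every weight row once."""
    outs: List[Vector] = [[] for _ in batch]
    for row in weights:
        reads[0] += 1  # fetched once, then reused for every sequence in the batch
        for out, x in zip(outs, batch):
            out.append(sum(w * v for w, v in zip(row, x)))
    return outs


WEIGHTS = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
BATCH = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three unrelated "sequences"

reads_separate = [0]
separate = [forward_one(WEIGHTS, x, reads_separate) for x in BATCH]

reads_batched = [0]
batched = forward_batch(WEIGHTS, BATCH, reads_batched)

print("same results:", separate == batched)
print("weight row reads:", reads_separate[0], "separately vs", reads_batched[0], "batched")
```

Each sequence gets exactly the result it would have gotten alone, but the weights only make the trip from memory once per batch.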

In fact, this method of processing multiple prompts together is so beneficial that companies such as OpenAI cut their API charges by half if you let them batch your prompt with others and process it together at a later time.

However, batching only speeds things up when you have multiple parallel prompts to work on. It does nothing to speed up token generation where we sorely need it: generating easily guessable tokens.

Well, we solved the shunting-stuff-around-memory bottleneck, but one bottleneck still remains: the generation of guessable tokens.

To fix this, you must ask yourself one question. Sure, LLMs are pretty damn large. But why? Why do LLMs need to be large? Why can’t we build a small LLM?

As of the writing date of this post, GPT-5 is OpenAI’s latest model. It’s estimated to have about 1000B parameters. Here’s what GPT-5 generated (text in italics):

What is the capital of USA? The capital of the United States of America is Washington, D.C.

In comparison, here is what GPT-1, one of OpenAI’s first models with only about 0.1B parameters, generated:

What is the capital of USA? “ the capital of america ! the united states is the united states of america !

Do you see the difference? The smaller model doesn’t have nearly as much knowledge or language understanding as the larger model. It can’t reason with itself. It can’t solve math equations, argue in debates or generate compelling fake data. It might know enough English to use “the” in front of “united states”, but not enough to answer the question.

But the smaller model isn’t completely useless. In fact, this ability of GPT-1 to “copy” relevant tokens is actually the solution to our initial problem. GPT-1 is much faster than GPT-5, owing to the fact that it has about 10,000x fewer parameters. This very fact also makes it much worse at generating text.

It would be a real breakthrough if we could combine the speed of the smaller model with the power of the bigger model, without compromising on quality. How do we do that?

Alright.

The small model is fast, but bad at generating quality output. The large model is slow, but gives excellent quality. Also, as we’ve learnt from batching, we can generate multiple outputs simultaneously for both models, without much of a time-wise performance cost.

How do we combine these three facts together?

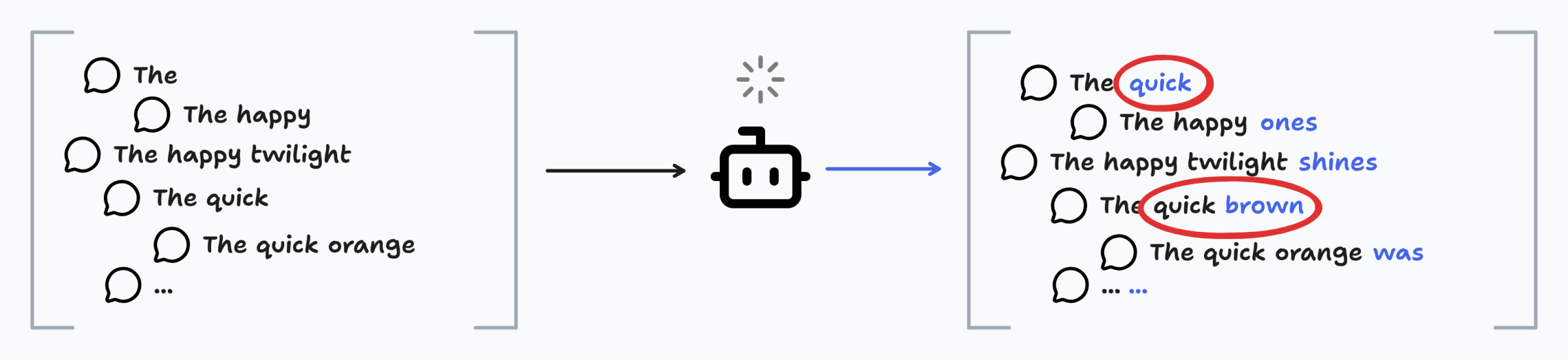

Let’s try running the faster model first. We let it generate a bunch of possible tokens for the next couple of words. Think of these as draft tokens.

Then, we feed a sequence of these draft tokens to the larger model, using batching:

The secret lies in what sequences you feed the larger model in a single batch. Have a look at the highlighted items in the above image.

First, the larger model generated the word “quick”. This means that the other guesses for the 2nd word by the smaller model were wrong, and we can discard those.

With “quick” as the 2nd word, the smaller model generated “orange” as the 3rd word. The sequence “The quick” was fed to the larger model, which generated “brown” as the 3rd word, telling us that the smaller model’s prediction of “orange” was incorrect.

In effect, the smaller model generated a bunch of suggestions, and the larger model evaluated them in parallel and chose the best one for us. Visualizing this as a tree:

If you take a step back and have a look, you’ll see why speculative decoding is so great.

It allowed us to generate 2 tokens with just 1 pass of the large model. That’s a 2x speedup for this toy example!

And yet, crucially, the output remains just the same. It’s as if the larger model said, “that’s exactly what I was thinking!” to the output of the smaller model.
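Here is a minimal sketch of the idea in code. It is a simplified variant, not a definitive implementation: the small model drafts a single chain of `k` tokens instead of several guesses per position, both models always pick their single most likely token, and both are made-up stand-ins that replay a canned sentence by position (`TARGET` for the large model, `DRAFT` for the small one). In a real system the `k + 1` large-model checks in step 2 would run as one batched pass; here they are a plain loop that I count as one pass.

```python
from typing import Callable, List, Tuple

Model = Callable[[List[str]], str]

TARGET = "The quick brown fox jumps over the lazy dog".split()
DRAFT = "The quick orange fox jumps over a lazy dog".split()  # wrong in two places


def large_model(tokens: List[str]) -> str:
    """Hypothetical slow-but-right model: replays TARGET by position."""
    return TARGET[len(tokens)] if len(tokens) < len(TARGET) else "<eos>"


def small_model(tokens: List[str]) -> str:
    """Hypothetical fast-but-sloppy model: replays DRAFT by position."""
    return DRAFT[len(tokens)] if len(tokens) < len(DRAFT) else "<eos>"


def speculative_step(large: Model, small: Model, tokens: List[str], k: int) -> List[str]:
    # 1. The small model drafts k tokens, one after another (cheap).
    draft: List[str] = []
    for _ in range(k):
        draft.append(small(tokens + draft))
    # 2. The large model says what it would generate after each draft prefix.
    verified = [large(tokens + draft[:i]) for i in range(k + 1)]
    # 3. Keep drafts for as long as the large model agrees with them.
    accepted: List[str] = []
    for i in range(k):
        if draft[i] != verified[i]:
            break
        accepted.append(draft[i])
    # The large model's own token follows: a correction, or a bonus if all drafts matched.
    accepted.append(verified[len(accepted)])
    return accepted


def generate(large: Model, small: Model, prompt: List[str], n_new: int, k: int = 3) -> Tuple[List[str], int]:
    tokens, passes = list(prompt), 0
    while len(tokens) - len(prompt) < n_new:
        tokens += speculative_step(large, small, tokens, k)
        passes += 1  # one (batched) pass of the large model per step
    return tokens[: len(prompt) + n_new], passes


output, passes = generate(large_model, small_model, ["The"], n_new=8)
print(" ".join(output), "| large-model passes:", passes)
```

In this toy run, the very first step accepts “quick”, rejects “orange” and gets “brown” from the large model, just like in the walkthrough above. The final text is exactly what the large model would have produced on its own, in fewer large-model passes than the 8 tokens generated.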

Here's a better animation from this Google Research blog post.

In essence, speculative decoding allows a smaller model to generate suggestions, and lets the larger model pick out ones that make sense. And in doing all this, it massively improves throughput of tokens without degrading quality!

Alright, let’s wrap things up for now.

First, we had a short look at why speculative execution is necessary: most processes are not compute-bound, but memory-bound.

Then, we looked at tokenization, and how LLMs generate text at a very high level. There’s a ton of concepts I’ve left out (the architecture of transformers, attention, etc.) because they were not relevant to speculative decoding. I’d highly recommend reading more about these.

We then moved on to batching, and how it solves a different (but not unrelated) problem.

This allowed us to understand speculative sampling: using a smaller model to generate suggestions.

Speculative sampling was the last step to climb before we could cover speculative decoding: using a larger model to accept suggestions, discard them or create new suggestions.

With speculative decoding and the right method of prompting the larger model, we discovered how it is possible to generate multiple tokens in a single pass, without sacrificing quality.

There’s a couple of things we haven’t explored yet: the impact of distillation on speculative decoding is one that I really want to cover in the next post. If you want to be notified when it releases, drop your email below!

This post was heavily inspired by a Relace.ai blog post and this Google Research blog post. Icons were mostly taken from Lucide.dev; some were AI-generated.
