Picking up right where the the last post left off, this follow-up dives into the bread-and-butter building blocks of deep-learning kernels. We’ll implement and benchmark core algorithms-sliding-window pools, tile-wise convolutions, warp-level scans, and more.

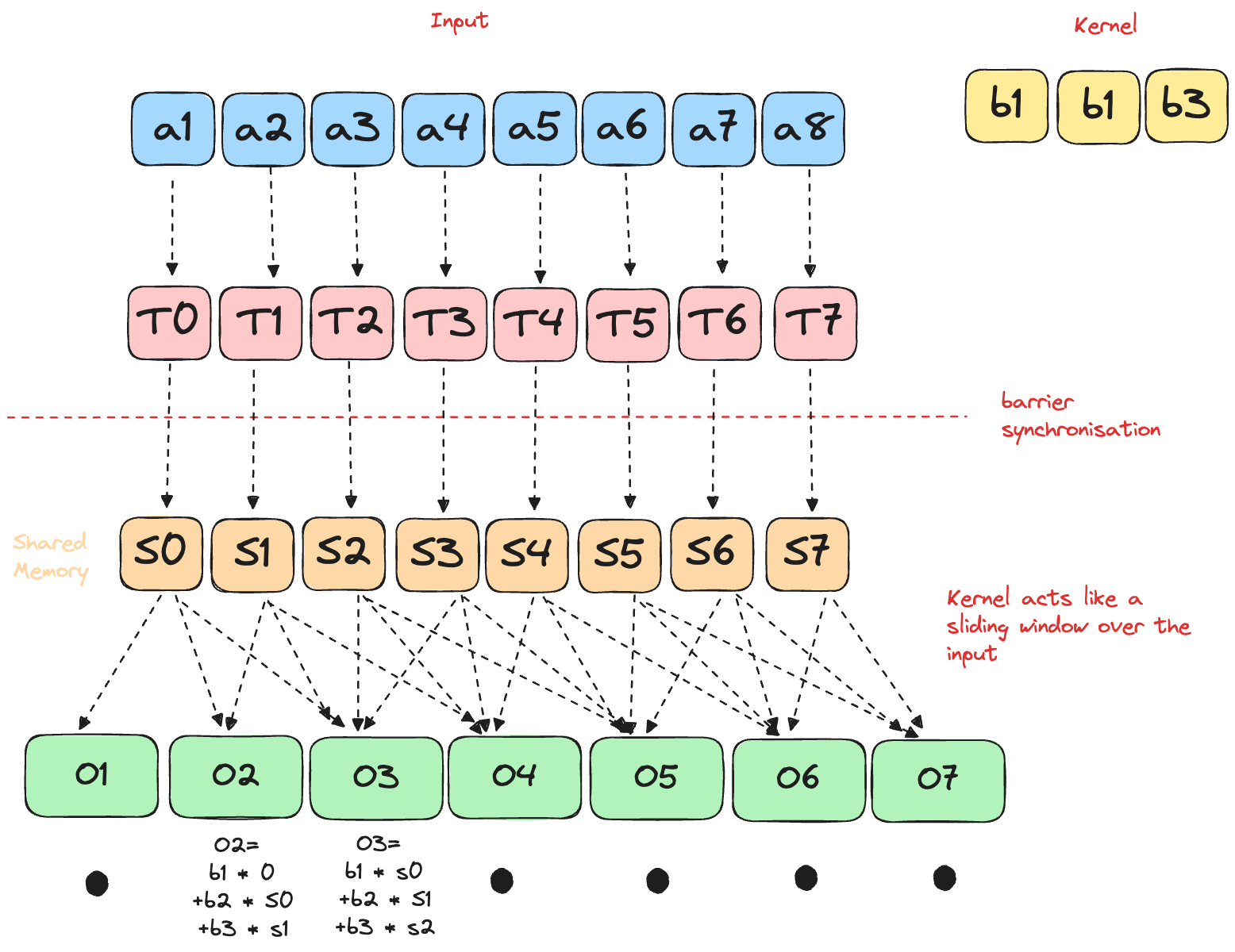

Pooling is a classic trick in neural networks for shrinking down your data-think of it as a way to “summarize” regions of an image or tensor. Instead of looking at every single pixel, pooling (like max or average pooling) slides a window over your data and grabs just the most important info from each patch. On GPUs, pooling is a perfect fit: each thread can independently process a window, so you get massive parallelism and a big speedup compared to CPUs.

This puzzle is a bit different compared to traditional pooling: Instead of having a “kernel”, each output element is the running sum of the all the elements in the current window.

SolutionThe LayoutTensor version is nearly identical to the Raw Memory approach, so we’ll omit the code here for brevity.

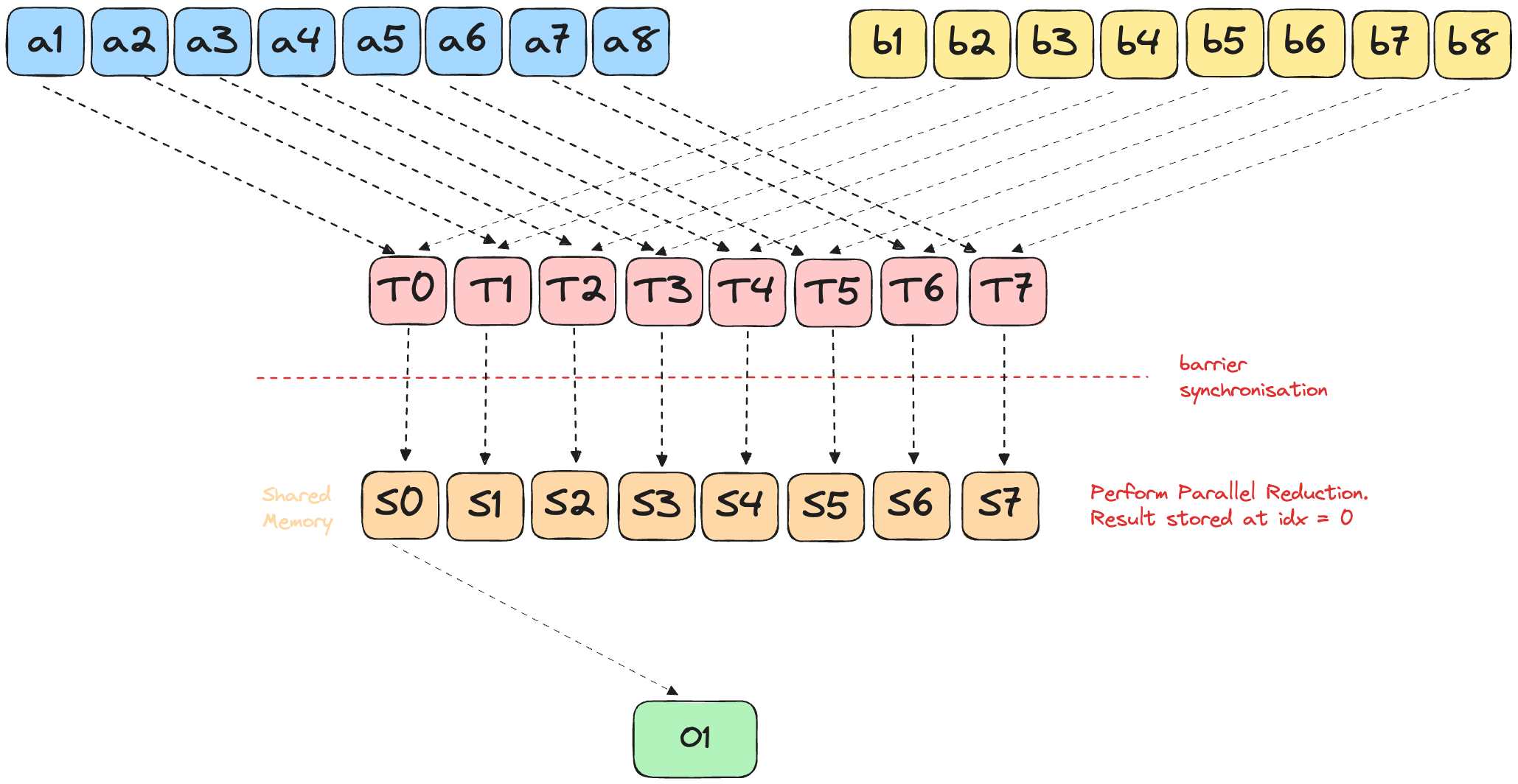

The Dot Product of two vectors \(a\) and \(b\) is defined as [1]:

\[ c = a \cdot b = \sum_{i=0}^{n-1} a_i b_i \]

Similar to the previous puzzles, we can implement the dot-product by copying data to the shared memory, and running our operations on it.

To implement dot product efficiently on a GPU, we will use parallel reduction. This is a classic pattern for aggregating values (sum, min, max, etc.) across a large array using many threads.

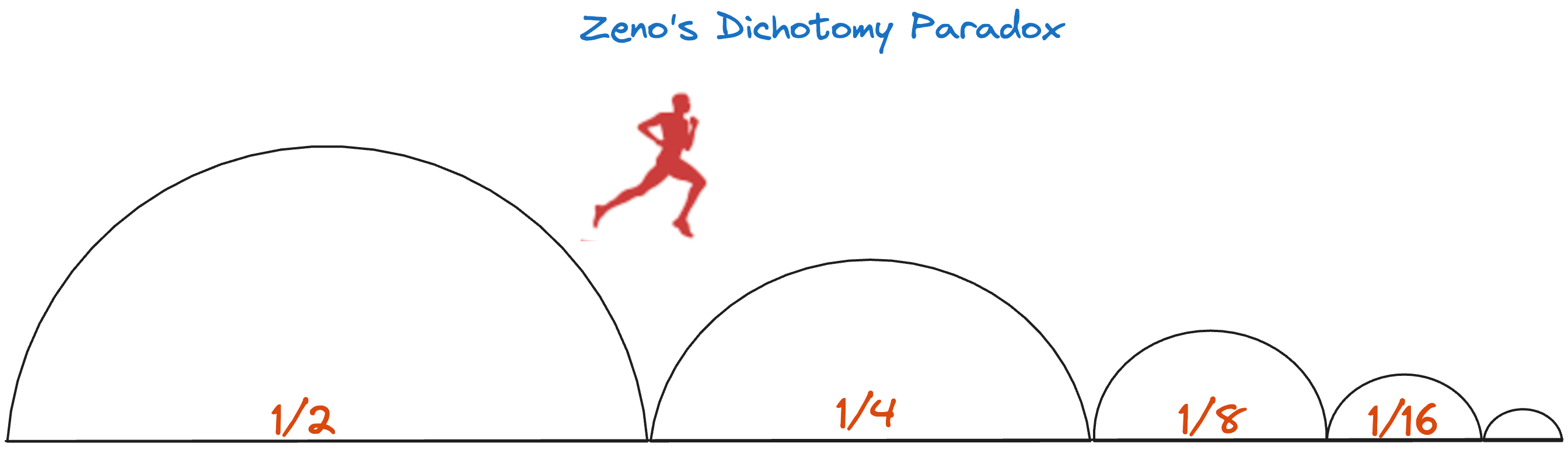

Picture Zeno’s “half-way” paradox [2]: you keep halving the leftover distance until you’re done. A parallel reduction does the same-each round halves the number of active threads instead of the distance. Unlike Zeno’s infinite halvings though, we stop at a concrete point: when only thread 0 remains active (stride becomes 0).

- Every thread multiplies its assigned a and b elements and writes the partial product into shared memory.

- Each reduction round:

- The active-thread count is cut in half (stride /= 2).

- Each surviving thread adds its value to the partner stride positions away.

- A barrier() guarantees all writes land before the next “half-step.”

- After log₂ (n) halvings, Zeno’s finish line is crossed-thread 0 alone holds the final dot-product.

This pattern is fast, highly parallel, and used everywhere in GPU programming for reductions (sum, min, max, etc).

Raw Memory

SolutionNote: Instead of doing the parallel reduction, we could also implement the solution using a loop:

While this approach also gives the correct answer for this puzzle, it has multiple problems:

- Race conditions: Multiple threads would simultaneously try to update output[0] without synchronization, causing lost updates.

- Thread divergence: When threads in a warp take different execution paths (some running the loop, others not), the GPU must serialize execution, destroying parallelism.

- Redundant computation: Every qualifying thread would compute the exact same sum over the entire array, wasting compute resources.

- Memory bottleneck: Repeated atomic operations to the same memory location (output[0]) create severe contention.

LayoutTensor

Solutionalias TPB = 8 alias SIZE = 8 alias BLOCKS_PER_GRID = (1, 1) alias THREADS_PER_BLOCK = (SIZE, 1) alias dtype = DType.float32 alias layout = Layout.row_major(SIZE) alias out_layout = Layout.row_major(1)

Picture sliding a magnifying glass along a long strip of film. That’s exactly what a 1-D convolution does to any 1-D signal-audio samples, DNA bases, even bytes of log data.

- The kernel (a small weight vector) glides over the sequence one step at a time (or more if you set stride > 1).

- At each stop it multiplies the local window by its weights, sums the result, and drops a single number into the output map.

- Stack layers and you grow the “what can I see at once?” window (the receptive field) without blowing up parameters.

Why bother?

- Speed: A conv layer is just a batched matrix-mul-GPU catnip.

- Locality first, context later: Early layers grab short-range patterns (phonemes, k-mers). Deeper layers stitch them into bigger motifs (words, promoters).

- Channels generalize it: You convolve along length, but for each input channel you keep separate weights, sum across channels, and spit out new feature maps. Same trick as 2-D CNNs, just flattened.

For a better picture, see Ayush’s blog[3] on convolutions.

The convolution operation can be defined as: \[ (input\_signal\_a * kernel\_b)[i] = \sum_{j=0}^{\text{kernel\_size}-1} input\_signal\_a[i + j] * kernel\_b[j] \tag{1}\]

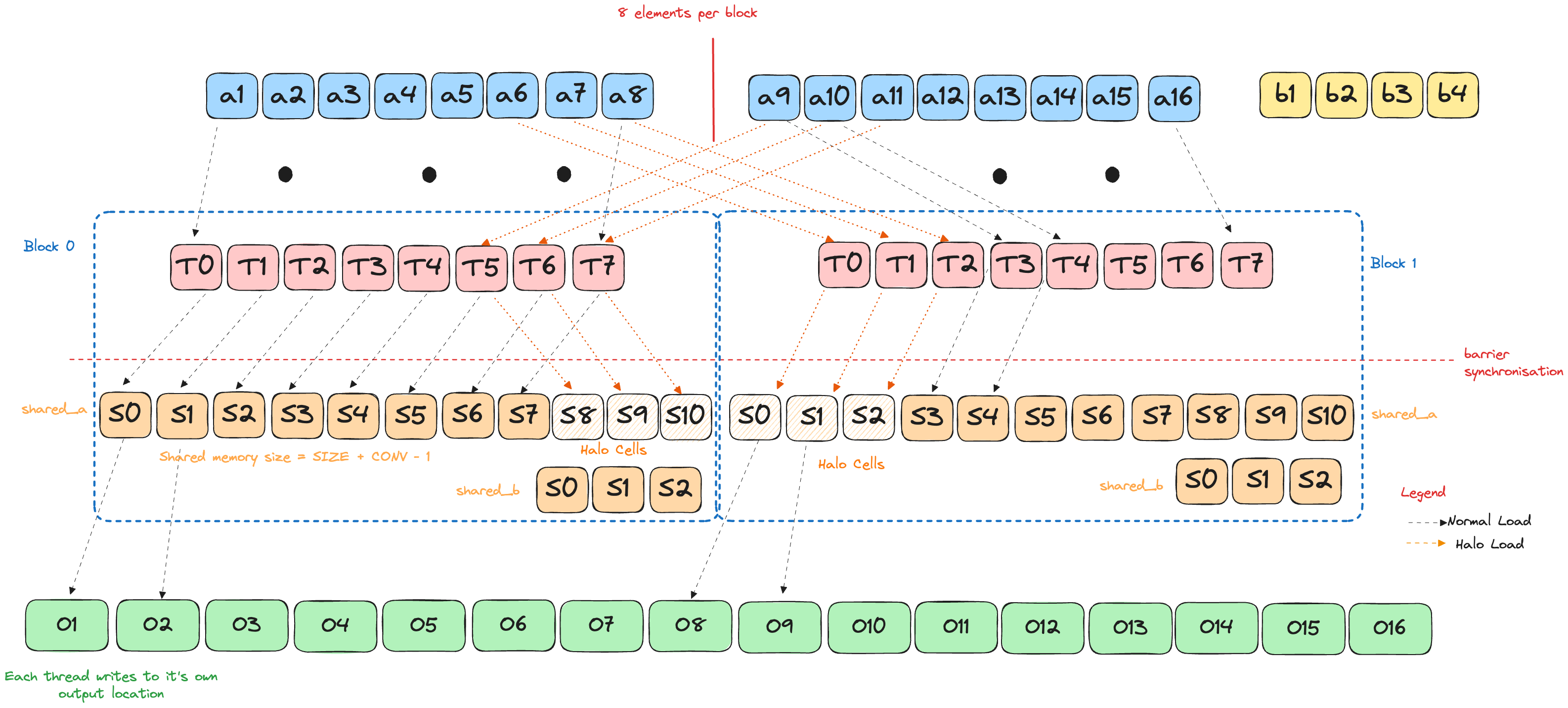

Block Boundary

We now aim to perform convolution over an input that is larger than a single block. Due to the nature of convolution operation, this introduces interesting boundary conditions. Specifically, the output of block N now depends on block N - 1, when N > 1.

The blue cells are the data owned by the current thread-block. The orange cells are the first few elements of the next block that the convolution window will inevitably peek at.

Problem statement

Run a 1-D convolution with a CONV₂-tap kernel over an input that is longer than one block (TPB threads). We want every thread to:

- Pull data from shared memory only (once it’s loaded, stay in-block)

- Avoid divergent branches and random global reads

- Keep the load pattern fully coalesced

Naïve global loads meet none of those goals-once a window crosses the block edge the tail threads must issue conditional, straggling reads (i.e. each thread grabs a lone, scattered element from global memory instead of part of one tidy, coalesced burst).

The halo idea

Give each block an in-block “fence extension”:

shared_a = …[TPB + (CONV₂ − 1)] # main slice + haloThe extra (CONV₂ − 1) slots-the halo-mirror the first (CONV₂ − 1) elements of the next block (or zeros if we’re already at EOF). That single change guarantees that every sliding window lives in one contiguous span of shared memory.

The elements that are involved in multiple tiles and loaded by multiple blocks are commonly referred to as halo cells or skirt cells since they “hang” from the side of the part that is used solely by a single block[8].

Loading recipe (matches the numbered arrows in the figure):

- Bulk copy - all TPB threads dump their element:

shared_a[t] = a[blockStart + t] - Halo fill - threads t < (CONV₂ − 1) copy the tail:

shared_a[TPB + t] = (a[blockStart + TPB + t] if in-range else 0) - Kernel stash - threads t < CONV₂ cache the weights:

shared_b[t] = b[t] - barrier() - everyone syncs

After step 4 every thread sees:

main slice halo [ … local_i … TPB − 1 | TPB … TPB+CONV₂−2 ]Code to perform the actual computation is the same as in Puzzle 10.

One barrier, no branches and 100 % shared-memory hits ensure our kernel is fast and efficient!

SolutionSliding a 1D window over an audio buffer was straightforward: one axis, one index. Images and matrices, however, live on chessboards, not lines. To convolve or multiply them efficiently we need to map two spatial dimensions onto the GPU’s grid-block-thread hierarchy.

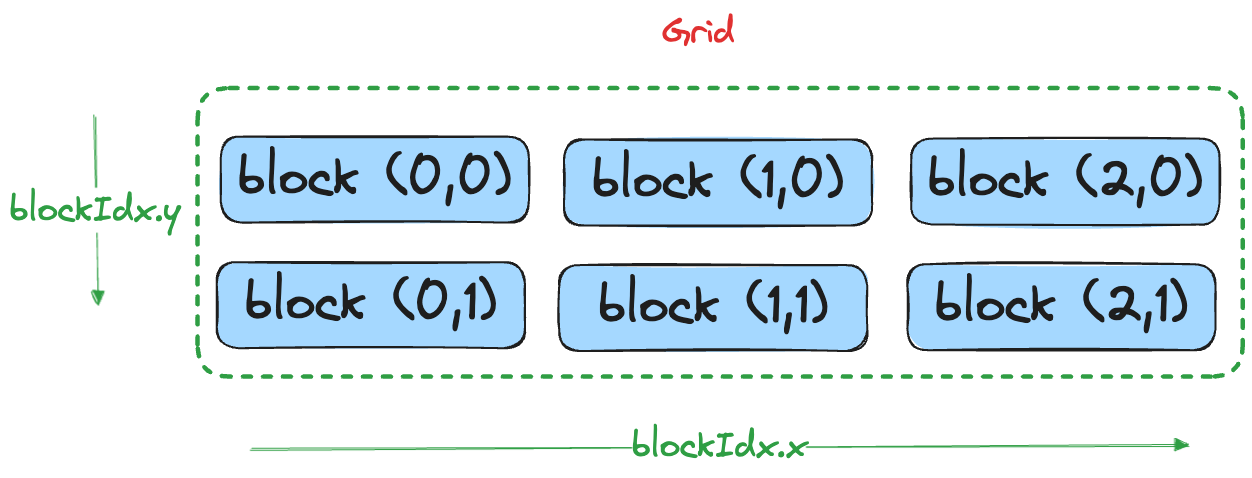

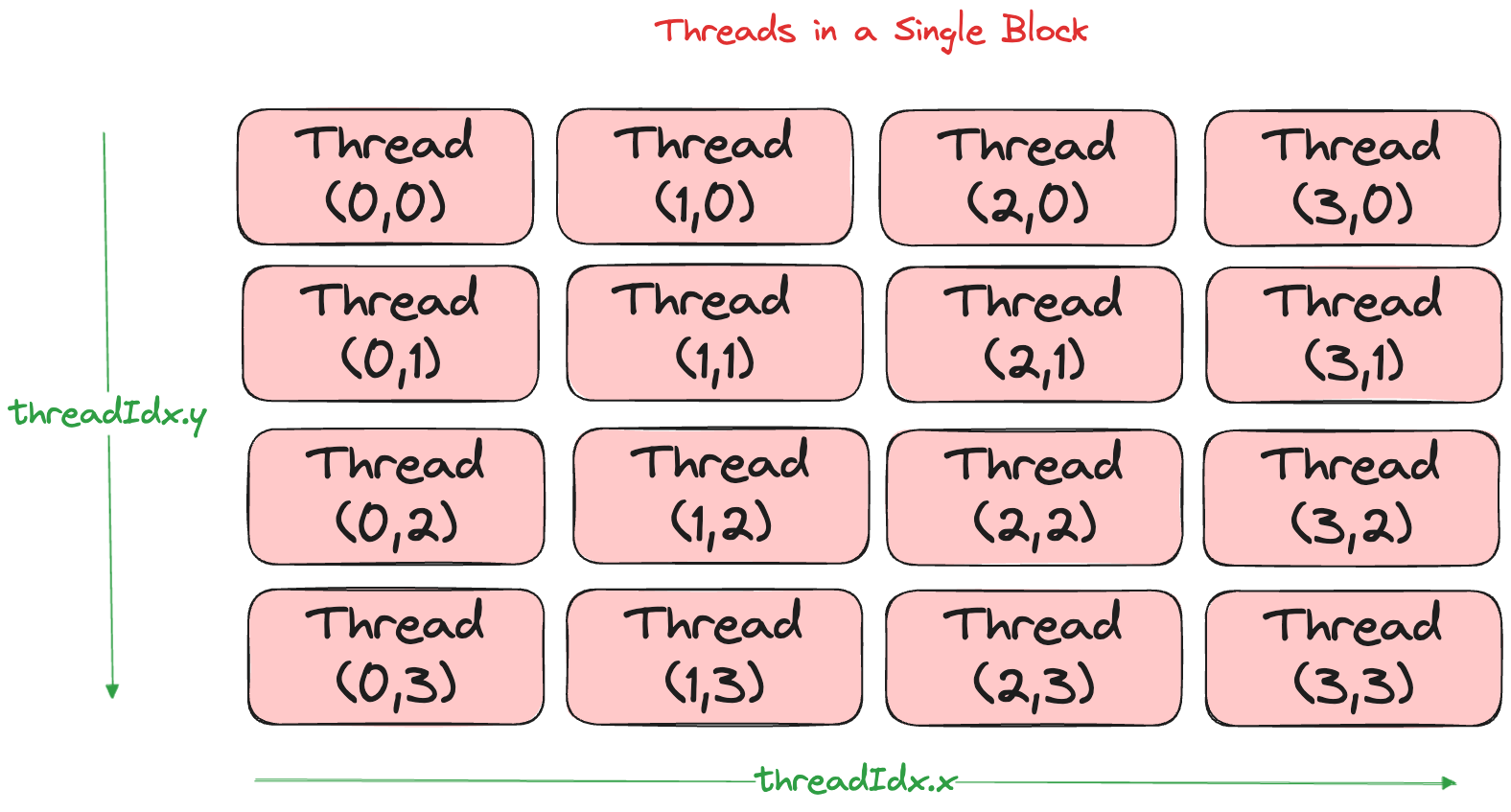

Thread Hierarchy in 2D

The GPU execution model extends naturally to 2D with a three-level hierarchy:

| Grid | City | (blockIdx.x, blockIdx.y) |

| Block | City block | (threadIdx.x, threadIdx.y) |

| Thread | House | computed from block + thread IDs |

Within each block, you choose the thread footprint at kernel launch with THREADS_PER_BLOCK = (blockDim.x, blockDim.y), giving blockDim.x * blockDim.y total threads per block.

What’s a Warp?

Under the hood, the GPU executes 32 threads at once in groups called warps (AMD calls them wavefronts[9]). All 32 lanes run the same instruction each cycle (SIMT). Thread divergence or uncoalesced memory access forces the warp to serialize, so we design our 2D tiles around these 32-lane chunks.

Hardware facts:

- SIMT execution: All 32 threads in a warp execute the same instruction. Branching splits the warp and runs paths serially.

- Memory coalescing: A warp performs one 32-lane memory request when threads access consecutive addresses.

- Occupancy: The number of warps that can run simultaneously on a streaming multiprocessor, limited by registers and shared memory per block.

Grids and blocks are a programmer-friendly abstraction. Warps are what the hardware actually schedules.

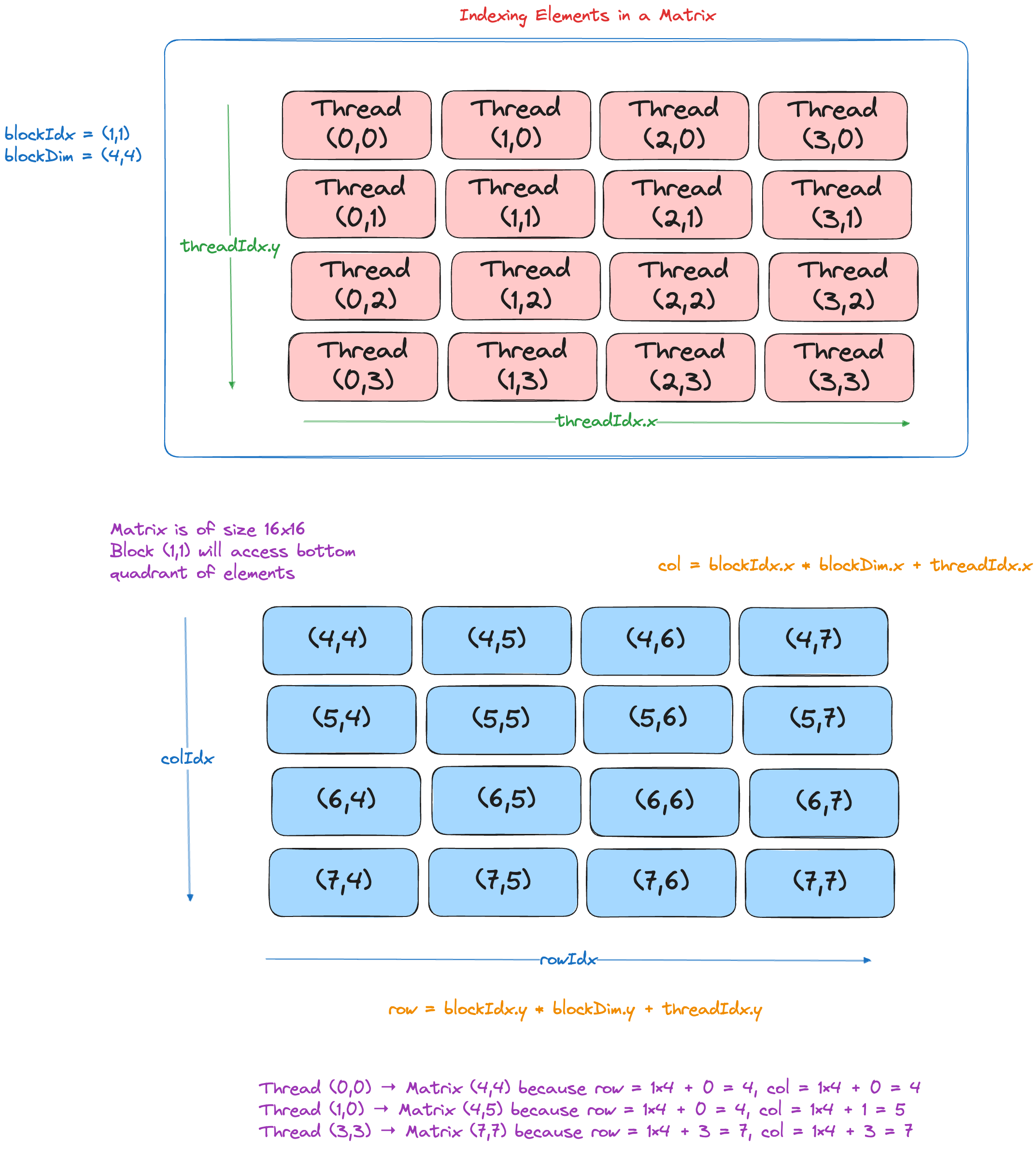

Computing Global Matrix Indices

The key insight is that every thread computes its global position using the same formula:

This maps the thread hierarchy directly to matrix coordinates:

For an M×N output matrix, you typically launch:

Choosing Tile Size

As shown earlier, because a warp wants contiguous addresses, we’ll carve the matrix into 16×16 tiles. Here’s how the hardware facts translate to design choices:

- Warp-aligned rows: Make tile width a multiple of 32 (warp size) so each row loads as a single coalesced burst.

- Shared memory reuse: Square tiles minimize the halo-to-area ratio, so each global load gets reused ~K times across the convolution window.

- Resource budgeting: 256-512 threads per block (8-16 warps) keeps enough warps resident for latency hiding without exhausting registers or shared memory.

A 16×16 tile gives 256 threads = 8 warps, hitting the sweet spot for most GPUs.

Bounds Checking

Since matrix dimensions are rarely exact multiples of tile size, always guard against out-of-bounds access:

Mojo doesn’t provide automatic bound checking when writing to shared memory [10].

Worked Example: 40×50 Matrix with 16×16 TilesFor a 40×50 matrix with 16×16 tiles:

col 0……15 16……31 32……47 row 0…15 Blk(0,0) Blk(1,0) Blk(2,0) 16…31 Blk(0,1) Blk(1,1) Blk(2,1) 32…39 Blk(0,2) Blk(1,2) Blk(2,2)Each thread in Block(1,1) computes one element where row ∈ [16,31] and col ∈ [16,31]. Note that Block(2,2) only processes 8×16 elements due to the matrix boundaries.

Indexing Pattern Template

This indexing pattern appears in every 2D GPU kernel-matrix multiplication, 2D convolution, transpose, etc.

Note: Mojo/CUDA grids and blocks can also have a third dimension (block_idx.z, thread_idx.z) for problems like 3D volume processing or batch operations. We’ll cover that when we encounter 3D kernels.

We can extend our implementation for 1D convolution to a 2D convolution.

Everything is exactly the same idea as 1-D, only now we have two spatial dims:

- We launch a 2D grid of (ceildiv(WIDTH,TPB_Y), ceildiv(HEIGHT,TPB_X)) blocks of TPB_Y×TPB_X threads.

- Each block allocates a shared tile of size (TPB_Y+K−1)×(TPB_X+K−1) to hold its “main” patch plus a one‐pixel halo on the bottom/right.

- We also stash the full K×K kernel into shared_k.

- After a single barrier(), each thread does two nested @parameter loops over ky,kx∈[0,K) to compute a dot‐product.

After making a few changes to the test harness, we get the following result:

We’ll dive into the shared memory tricks like parking partial results, handling 2-D thread and block indexing, and performing halo copies when we get to matrix multiply in Puzzle 14.

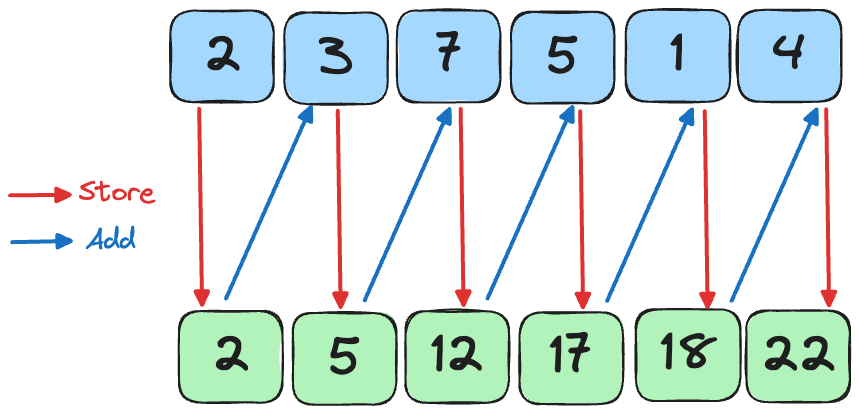

The prefix sum (or scan) problem takes an input array [a₀, a₁, …, aₙ₋₁] and produces the running totals

[a₀, (a₀ ⊕ a₁), …, (a₀ ⊕ a₁ ⊕ … ⊕ aₙ₋₁)]It’s a foundational primitive in parallel computing-used for stream compaction, sorting, histograms, and more. At first glance, prefix sum looks inherently serial (each output depends on all previous inputs), but clever algorithms can parallelize it efficiently.

Hillis-Steele Algorithm

A straightforward parallel scan is the Hillis-Steele approach: at each distance d = 1, 2, 4, … every element adds in the value from d positions back. This is the same as the method shown in Puzzle 10

In Mojo, this looks as follows:

SolutionEach of the log₂(n) rounds does up to n parallel additions (one per active element), so total work is \(\sum_k n = nlog(n)\). Because rounds are serialized by barriers, the longest dependency chain is one add per round i.e \(O(log n)\).

Blelloch’s Two‐Pass Algorithm

Blelloch’s two-pass scan does Θ(n) work by splitting the job into an up-sweep (build a reduction tree) and a down-sweep (propagate prefixes) [11].

Why prefer it over the classic Hillis-Steele (Algorithm 1)?

Hardware constraints: Hillis-Steele assumes one processor per element and updates the array in-place every round. A real GPU doesn’t grant that luxury: a “1024-thread” block actually runs in 32-thread warps that time-slice on the same SM. When warp 0 pauses and warp 1 resumes, in-place writes from one warp can overwrite data the other still needs.

Synchronisation cost: Avoiding the overwrite requires a barrier after every addition - log₂(n) rounds × n threads ⇒ Θ(n log n) operations plus all those barriers.

Blelloch’s fix to these problems is to break the up-sweep and down-sweep into separate phases:

- Up-sweep and down-sweep touch disjoint tree levels, so threads never trample each other within a phase.

- Only two global barriers are needed (one between the phases, one at the end).

- Now you get Θ(n) work and correctness, even for arrays much bigger than a warp.

The result is a scan that is both faster and safer on modern GPUs.

Up-sweep (reduce)

- Build a binary reduction tree over log₂(n) rounds:

- Round 1 (step=1): sum each adjacent pair, storing results at indices 1, 3, 5, …

- Round 2 (step=2): merge those partial sums into blocks of 4, writing into indices 3, 7, 11, …

- Continue doubling the span each round until step = n/2

- After the final round, a[n-1] holds the overall total

Up-Sweep: combining elements in a binary-tree fashion-build partial sums until the final element holds the total.

Up-Sweep: combining elements in a binary-tree fashion-build partial sums until the final element holds the total.

Down-sweep (propagate)

After the up-sweep leaves a[n-1] containing the overall sum, we walk the tree top-down to scatter prefix sums into every slot:

- Initialize the down-sweep with a window size of step = n/2.

- Loop as long as step >= 1:

- Partition the array into blocks of size 2*step. For each block starting at index i:

- Temporarily store the left-child total from a[i + step - 1].

- Overwrite that left slot with the right-child subtotal from a[i + 2*step - 1].

- Add the saved left-child total to the right slot, giving the correct prefix for that subtree.

- Temporarily store the left-child total from a[i + step - 1].

- Issue a barrier() so all threads sync before shrinking the window.

- Halve the window: step = step / 2.

- Partition the array into blocks of size 2*step. For each block starting at index i:

- With each pass, the partial sums trickle down one level of the binary tree; after log₂(n) iterations every element holds its exclusive prefix sum.

Down Sweep: siblings swap and accumulate, driving the scan from root back to leaves.

Total Operations: \(\Theta(n)\), parallel depth: \(\Theta(\log_2 n)\).

Solution (Blelloch up-sweep + down-sweep)This is not the most efficient implementation, but I hope this provides some intuition about the algorithm!

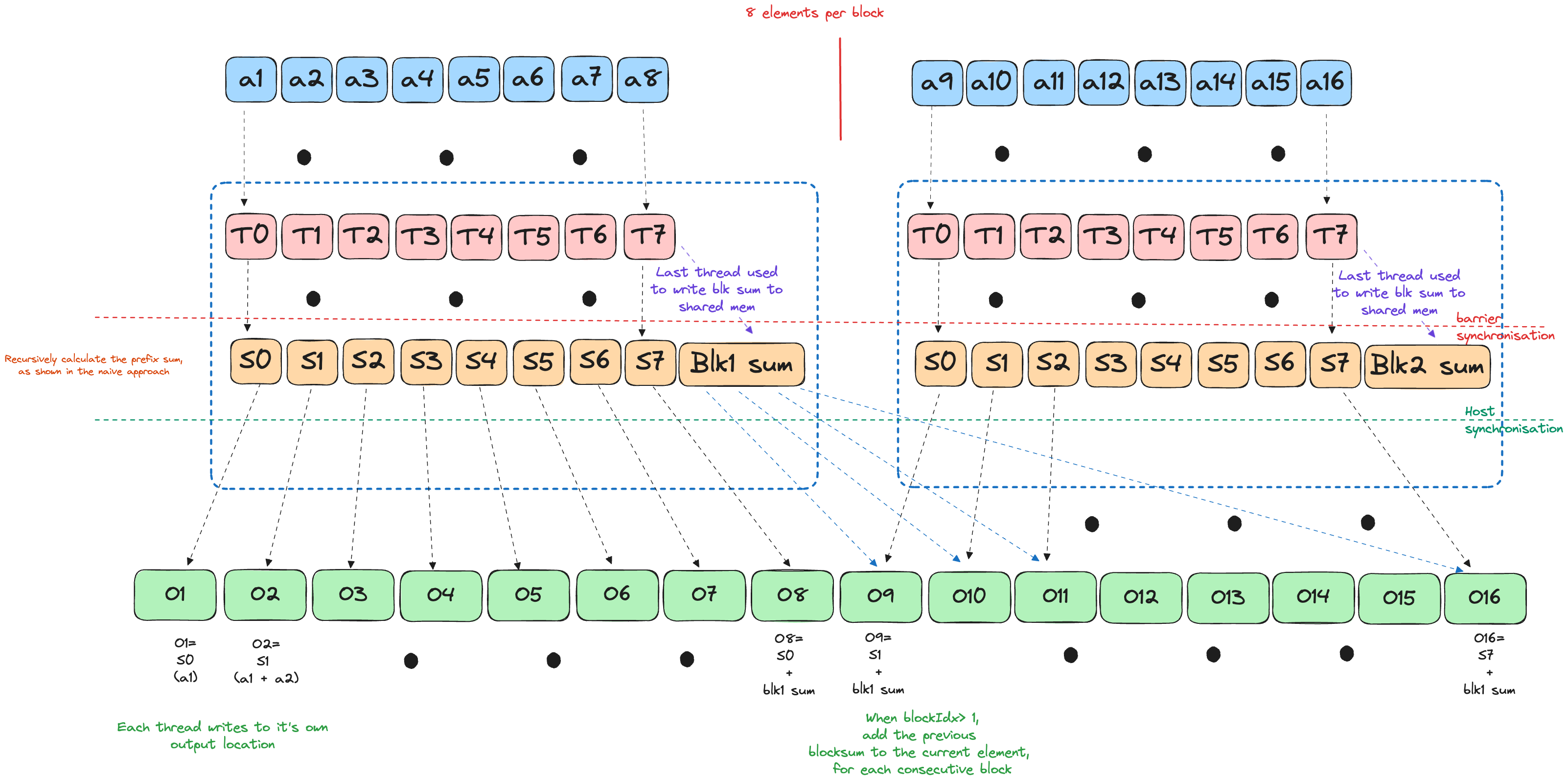

Block Boundary

The key difference in this version is that now we have an input array that is larger than the size of a single block.

We split the global scan into two bite-sized passes:

Phase 1 - Local Scan

Each block copies its slice into shared memory.

Perform an in-block naive scan/Blelloch scan exactly as in the single-block case.

The last thread of the block stashes the block’s total after the scan into an auxiliary slot at the tail of output:

# |<--- SIZE_2 --->|<-- #blocks -->| # [ prefix sums ][ block totals ]

Phase 2 - Propagate block totals

- Every thread grabs the aggregate from the previous block (totals[block_id-1]) and adds it to its own prefix.

Now every element holds the inclusive scan over the whole array.

We launch the above phases as two separate kernels.

A host-side synchronisation sits between the launches. That call flushes the work queue and waits until Phase 1 has fully committed its writes to global memory, ensuring the per-block totals are complete and visible before Phase 2 starts consuming them. Skip the sync and the driver is free to overlap or reorder the kernels, letting Phase 2 read garbage.

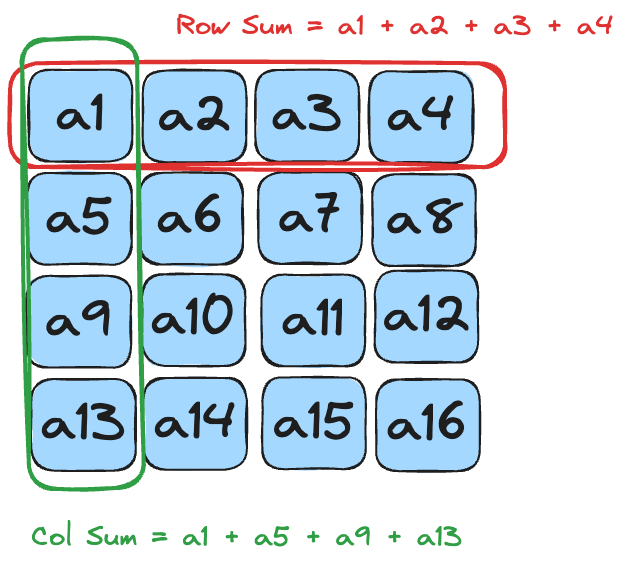

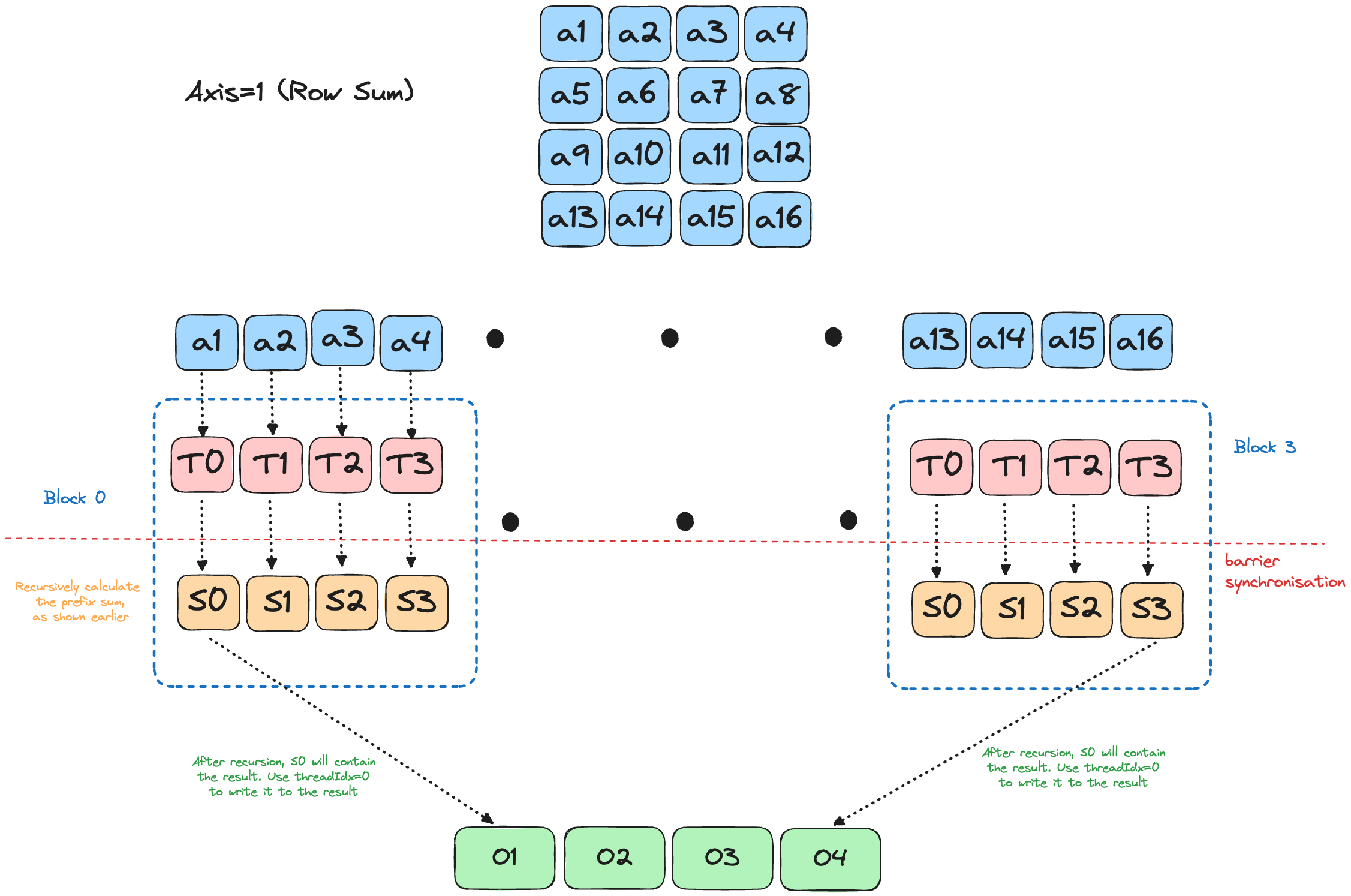

Solution (Block Boundary Version)Axis sum is the 2-D sibling of the dot‐product/prefix puzzles: take a matrix A and collapse one dimension by summing over it.

\[ \begin{aligned} \text{axis}=0 &\;\Longrightarrow\; \text{column-sum:}\;\; out[j] & = \sum_{k} A_{k,j}, \qquad j = 0,\dots,N-1 \\[4pt] \text{axis}=1 &\;\Longrightarrow\; \text{row-sum:}\;\; out[i] & = \sum_{k} A_{i,k}, \qquad i = 0,\dots,M-1 \end{aligned} \]

Each row/column is an embarrassingly-parallel reduction, so the GPU kernel just assigns one warp (or block) per slice and performs a standard shared-memory reduction inside the slice.

SolutionWe can also perform column-sum(axis=0) with a trivial change:

Arguably the single most important operation in GPU computing, the humble General Matrix Multiplication (GEMM) operation is the computational workhorse behind literally all deep learning models-from simple linear layers to massive transformer architectures.

\[ C_{i,j} = \sum_{k=1}^{K} A_{i,k} \cdot B_{k,j} \]

Requirement: For matrix multiplication \(C = AB\) to be valid, the number of columns in \(A\) must equal the number of rows in \(B\).

That is, if \(A\) is shape \((M, K)\) and \(B\) is shape \((K, N)\), then \(C\) will be shape \((M, N)\).

GEMM’s ubiquity stems from its perfect match with GPU architecture: thousands of independent multiply-add operations that can be parallelized across thousands of cores. Yet this apparent simplicity masks a deep optimization challenge. Memory bandwidth, cache hierarchies, and thread synchronization all conspire to make naive implementations crawl while hand-tuned libraries like cuBLAS achieve near-theoretical peak performance.

Matmul tuning is a rabbit hole - see Simon Boehm’s fantastic deep-dive [12] for how wild it gets.

For now, we’ll focus on the core techniques demonstrated by the official puzzle-shared memory tiling and thread cooperation-to build intuition for how high-performance GEMM kernels actually work.

Global Memory Version

Based on the 2D indexing section, each thread computes one C[row, col] by loading A[row, k] and B[k, col] from global memory, multiplying and accumulating over k. We unroll the k‐loop to cut loop overhead and boost throughput.

SolutionRoofline Model

Note: The Modular GPU Puzzles guide already walks through the full roofline derivation, but we’ll repeat it here so that you can follow along without leaving this post.

The first step is abstracting the hardware-software complexity into a tractable model.

Hardware Model

Classic roofline assumes ideal hardware with perfect overlap:

The cartoon GPU has only two levers:

- Compute engine — peak rate \(P_{peak}\) (FLOP/s, integer ops/s, etc.)

- Memory datapath — peak bandwidth \(b_s\) (bytes/s)

Software Model

We collapse the kernel’s steady-state loop to:

- \(N\) floating-point operations per iteration

- \(V\) bytes moved per iteration

The operational intensity is defined as:

\[I = \frac{N}{V} \text{ flop/byte}\]

This ratio is all that survives of the algorithm - prologue/epilogue work, control flow, and synchronizations are swept aside.

Hardware Assumptions:

| H1 | Peak DRAM bandwidth is reachable | Ideal streaming | Requires 100% streaming, >1MB tiles | Strided or tiny tiles |

| H2 | Peak FLOP/s reachable | Full FMA rate | All ALUs busy every cycle | Divergence, low occupancy |

| H3 | One bandwidth number is enough | DRAM dominates | L1/L2/SMEM add separate roofs | Lower-level choke points |

Software Assumptions:

| S1 | Loads fully hide latency | 1000s inflight warps | Requires deep pipelining | Short kernels, frequent syncs |

| S2 | Single operational intensity | Steady-state loop | Real kernels mix phases | Gather/scatter, epilogue code |

| S3 | Launch/transfer overhead small | Long kernel runs | Amortised over many iterations | Micro-benchmarks, chaining |

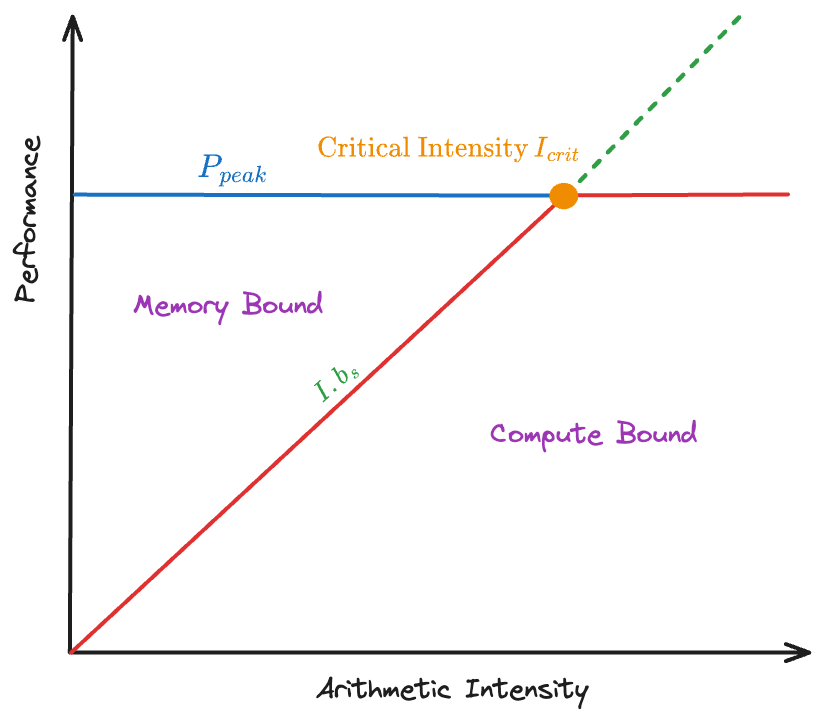

Naive Roofline Model

With these assumptions, hardware and software collapse to one parameter—the operational intensity \(I\)—and attainable performance becomes

\[ \begin{aligned} P(I) &= \min\!\bigl(P_{\text{peak}},\, I\,b_s\bigr) \\ I_{\text{crit}} &= \frac{P_{\text{peak}}}{b_s} \end{aligned} \]

At the critical intensity \(I_{crit}\), the bandwidth and compute roofs intersect, splitting kernels into two classes:

- Memory-bound (\(I < I_{crit}\)) -> Performance rises linearly with \(I\)

- Compute-bound (\(I \geq I_{crit}\)) -> Performance plateaus at \(P_{peak}\)

Where the Roofline Model Fails

Even in small puzzle kernels, these assumptions falter. In real workloads, they break down completely.

What actually works:

- Measure real limits with tools like Nsight or rocprof

- Redraw the roofline using measured ceilings—L2 roof, Tensor-core roof, not just DRAM and peak FLOPs

- Adjust your kernel: boost \(I\) (tiling, shared memory, tensor ops) or raise the ceilings (improve occupancy, reduce stalls)

Unfortunately no Nsight eye-candy as of yet - my ncu setup hit a permissions wall. I’ll fix it and share a profiler deep-dive soon. Stay tuned!

The textbook roofline is a guide, not reality. Measure, adapt, and push your kernel as close to the real limits as you can.

Roofline Estimation

Let’s apply the roofline model to a 3×3 matrix multiplication, which is still small enough to hand-calculate.

The RTX 4000 Ada provides[14]:

- Peak compute: 26.7 TFLOPS (single-precision)

- Peak DRAM bandwidth: 360 GB/s

- Critical intensity: \(I_{crit} = \frac{26.7 \times 10^{12}}{360 \times 10^9} = 74.2\) FLOP/byte

Naive MatMul Analysis

For \(C = A \times B\) where all matrices are 3×3:

Compute work

- Each output element is a dot product of length 3

- 3 fused multiply-adds -> 3 FLOPs per output element

- 9 elements -> 27 FLOPs total

DRAM traffic

- Load matrix A: 9 floats × 4 bytes = 36 bytes

- Load matrix B: 9 floats × 4 bytes = 36 bytes

- Store matrix C: 9 floats × 4 bytes = 36 bytes

- Total: 108 bytes

Operational intensity:

\[I_{naive} = \frac{27 \text{ FLOPs}}{108 \text{ bytes}} = 0.25 \text{ FLOP/byte}\]

Since \(I_{naive} = 0.25 \ll I_{crit} = 74.2\), this kernel is memory-bound.

Predicted performance

\[ \begin{aligned} P_{naive} \;\; & = \min(26.7~\text{TFLOPS},\; 0.25 \times 360~\text{GB/s}) \\ & = \min(26.7~\text{TFLOPS},\; 90~\text{GFLOPS}) \\ & = \boxed{90~\text{GFLOPS}} \end{aligned} \]

Key Insights

- Intensity grows with matrix size - For naive \(N \times N\) GEMM: \(I = \frac{N^3}{4N^2} = \frac{N}{4}\) FLOP/byte

- Small kernels are bandwidth-bound - Even perfect caching can’t reach the 74 FLOP/byte crossover until \(N \approx 300\)

- Shared memory helps, but only up to the ridge - Further speedups require compute-side tuning (tensor cores, ILP, etc.)

Next, we’ll look at one specific optimisation for Matmul: Tile-based GEMM!

Tiled Matrix-Multiplication (GEMM)

Our shared-memory kernel already cut global-DRAM traffic by loading each A[i,k] / B[k,j] element once per thread row/column instead of once per output multiply.

For large matrices, however, even that version still:

- Brings the entire row of A and column of B into shared SRAM, quickly exhausting the 48–112 KiB available per SM.

- Leaves many threads idle while others finish their portion of the dot-product.

- Misses an opportunity to keep a hot, register-resident accumulator and hide global-latency behind computation.

Enter tiling / blocking—the canonical GPU GEMM strategy.

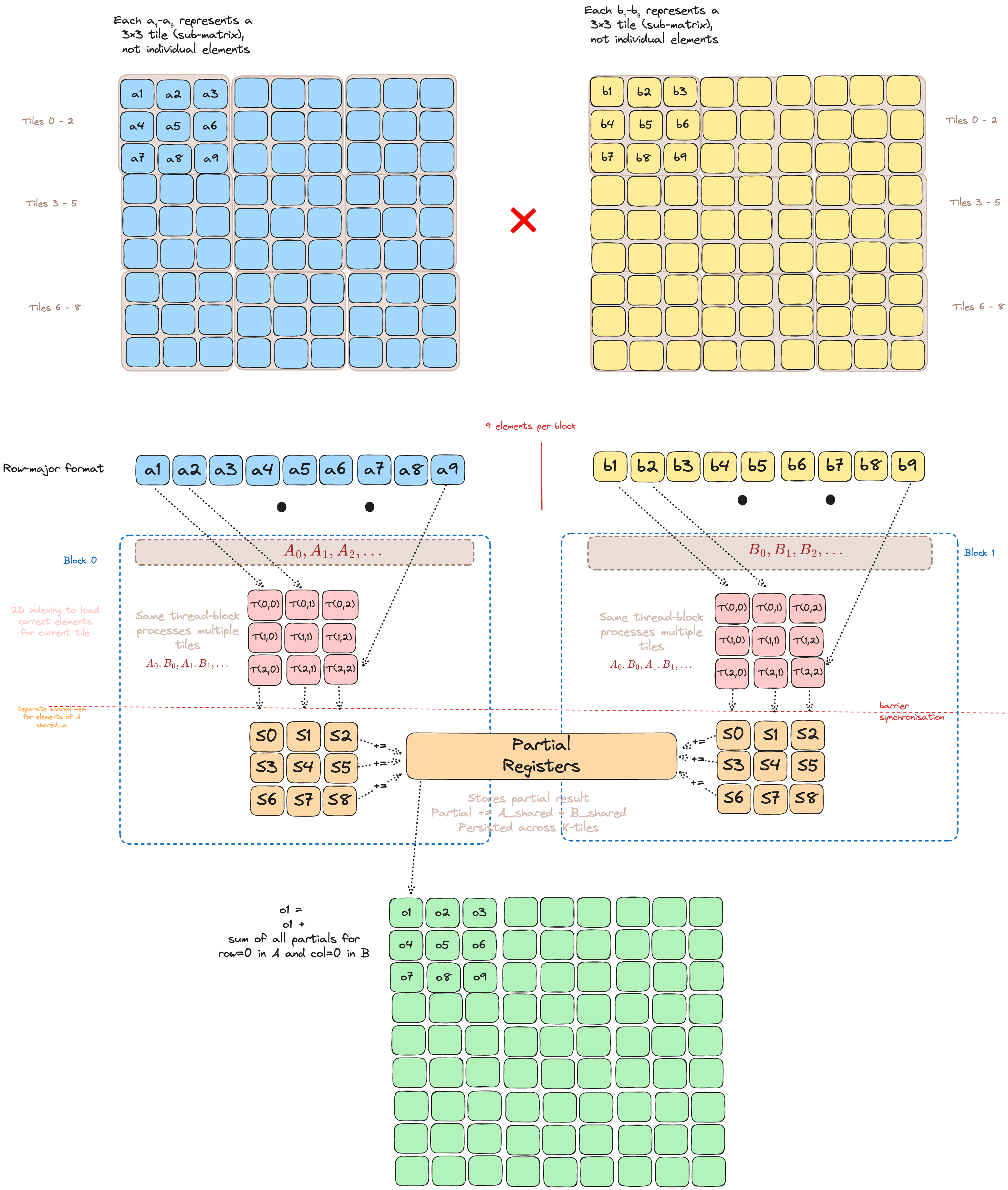

Tile?

Think of an N×N matmul as a chessboard. Instead of letting every thread wander across the whole board, we slice it into T×T sub-squares (tiles).

A thread-block is assigned one output tile, and:

- Cooperatively loads the matching T×T A-tile and B-tile from global DRAM to shared memory (two coalesced 2-D memcpy’s).

- Performs T fused-multiply-add sweeps of that data, each thread keeping its running sum in a register.

- Barriers, slides the tile window by T along the inner-k dimension, and repeats until the dot-product is complete.

- Finally writes the T×T block of C back to DRAM.

Each element of A/B is now read once per tile—independent of N—and re-used T times, boosting arithmetic intensity from O(1) to O(T) FLOP/B.

Memory mapping for tiled GEMM

The memory hierachy(discussed in the previous post), is utilised as follows:

- Registers: per-thread accumulators that hold partial C values across all tile iterations

- Shared SRAM: the current A_tile and B_tile, cooperatively loaded once and reused T times

- Global HBM: original A, B matrices and final C; each element touched once per tile load/store

Raw Memory

Manual Indexing Tiled MatmulThe new formulas in the tiling implementation deserve explanation. Let’s break them down into key concepts:

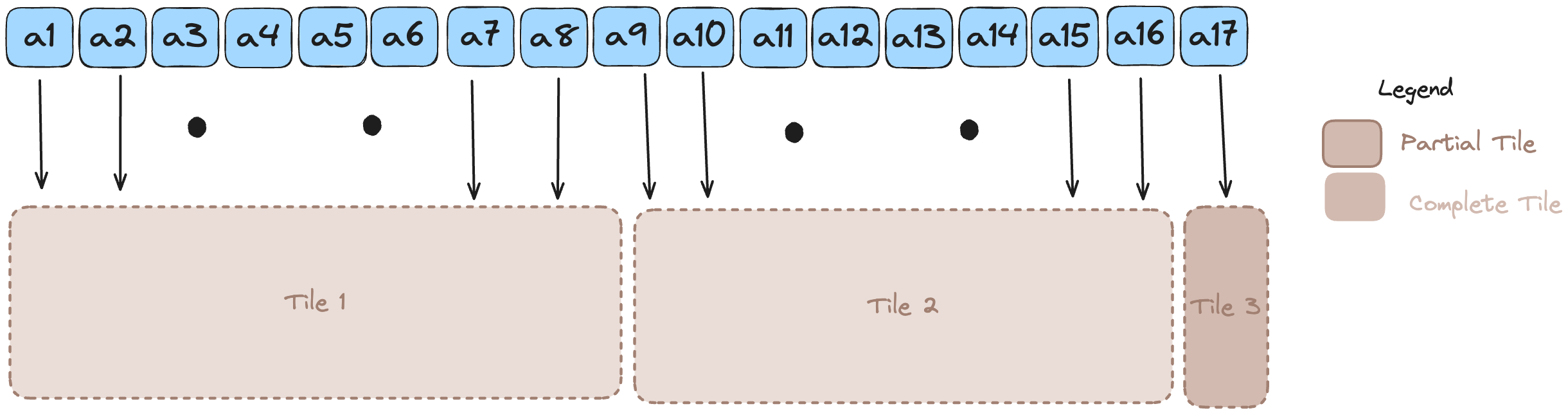

How many Tiles are needed?

This is the expression: range((size + TPB - 1) // TPB)

The key idea here is: Step through k by TPB each time; if there’s a leftover chunk, do one last tile for it.

The above example needs 3 tiles. Ceiling division captures this with a simple formula: \[ \lceil\frac{size}{TPB}\rceil = \lfloor\frac{size + TPB - 1}{TPB}\rfloor \]

Which element does a thread fetch in this tile?

Each thread brings in one A value and one B value. Thread indices inside the block:

Here, tile is the “which-chunk-of-k” loop counter.

Inside a block each thread is responsible for one output element C[global_row, global_col].

For that element you need every pair (A[row, k], B[k, col]) as k runs.

For tile t:

A: a[global_row, t*TPB + local_col] B: b[t*TPB + local_row, global_col]The two loads align on the same k slice (t*TPB … t*TPB+TPB-1), ensuring every multiply in this tile has operands in shared memory.

Why do we swap local_row and local_col for B?

GPUs coalesce global memory when adjacent threads read adjacent addresses. With the swap:

- For A: neighboring threads in x-direction (local_col) read consecutive k’s ⇒ coalesced

- For B: neighboring threads in y-direction (local_row) read consecutive k’s ⇒ also coalesced

Without the swap, one matrix would be fetched “strided” collapsing into 32 separate memory transactions per warp - a 32× slowdown on bandwidth-bound kernels.

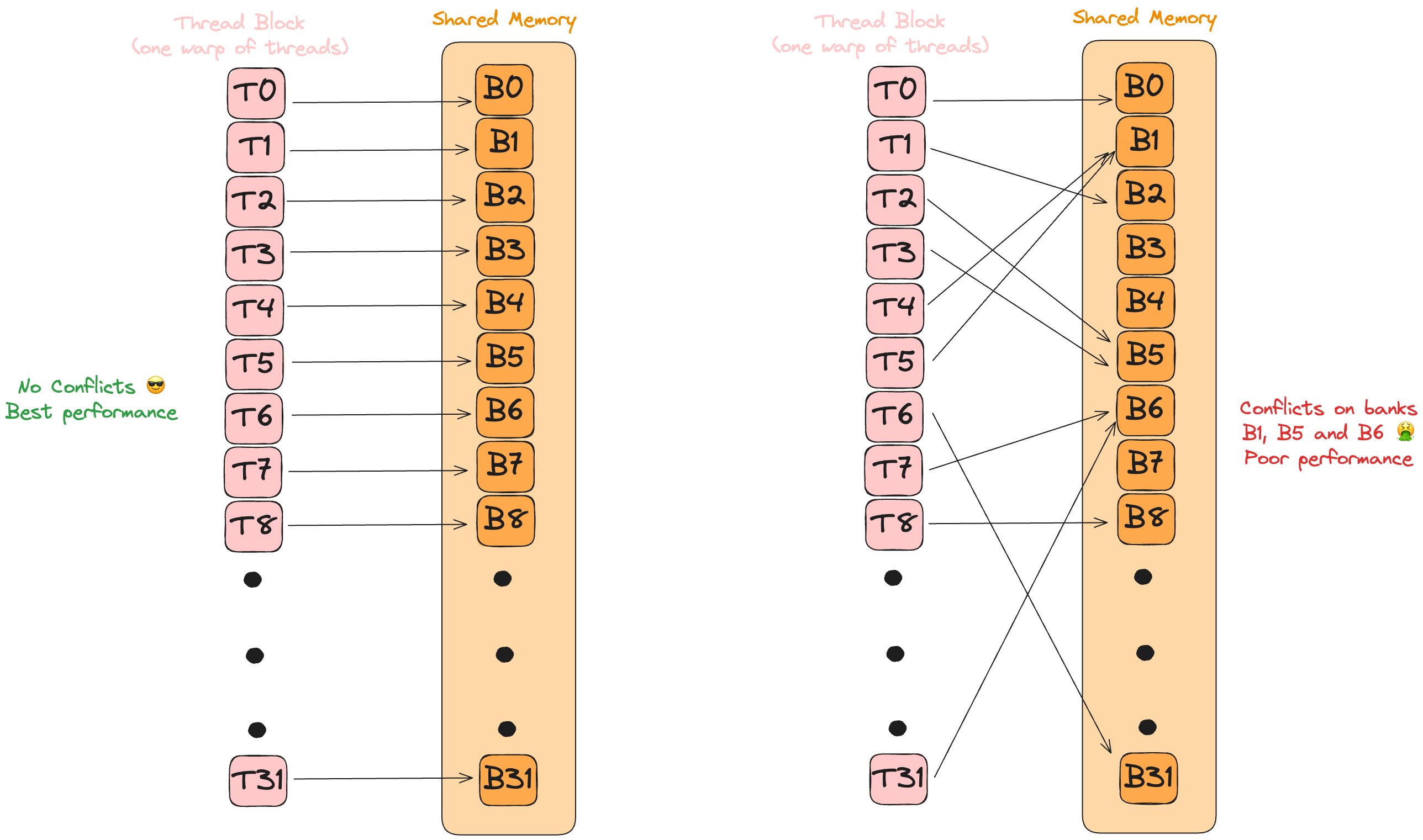

Quick primer: Shared memory isn’t one monolithic block. It’s chopped into 32 independent “banks”[15] [16].

Each is a tiny SRAM with its own read/write port that can service one request (or one 32-bit access per cycle). A warp hits peak bandwidth only when every thread lands in a different bank (or all hit the same address, which hardware can broadcast). If two threads target different addresses inside the same bank during the same cycle, the hardware must serialize them, referred to as a bank conflict.

Beyond coalescing, our tile layout also sidesteps these conflicts. Because b_shared[k, threadIdx.x] maps each thread to a distinct bank (while a_shared[threadIdx.y, k] is broadcast-friendly), all 32 memory ports stay busy with zero serialization.

Choosing the Right Tile Size

While the current puzzle selects \(TPB=3\) with tile size \(TPBxTPB\), choosing the tile size is a balancing act.

Exact numbers vary with GPU, kernel, and precision [[17]][18].

I’m still learning the dark art of GPU perf tuning, so I’ll save the details for a future post once I’ve had more time to experiment.

TLDR: For each tile, we will sync (barrier), compute, shift to next tile, repeat. But this is just the baseline - there’s always a deeper optimization rabbit hole!

LayoutTensor

While the manual tiling approach works, it suffers from indexing complexity that obscures the algorithm’s intent and creates opportunities for bugs. Mojo’s LayoutTensor API provides an elegant solution that maintains performance while dramatically improving code clarity.

The Pain of Manual Indexing

The manual implementation requires careful coordinate arithmetic:

- Nested index calculations like tile * TPB + local_col that can easily introduce off-by-one errors

- Separate bounds checking for each matrix load operation

- Explicit management of tile boundaries and edge cases

- Code that prioritizes performance over readability

LayoutTensor provides a tile() method that creates zero-copy [7] views into sub-regions of tensors [19]. This eliminates manual indexing gymnastics while keeping identical performance.

A LayoutTensor.tile[tile_height, tile_width](block_row, block_col) call returns a view of the specified tile without copying data, at no cost!

The transformation from manual indexing to LayoutTensor simplifies the loading logic:

Full solution looks as follows:

LayoutTensor Tiled MatmulSynchronization and Memory Hierarchy

The copy_dram_to_sram_async() function [20] enables asynchronous memory transfers from global to shared memory, while async_copy_wait_all() [21] provides a synchronization barrier that ensures all pending transfers complete before computation proceeds.

This pattern allows the GPU to:

- Overlap memory transfers with other computations using dedicated copy engines

- Utilize specialized hardware for efficient data movement

- Maintain correct execution ordering across thread blocks

- Bypass intermediate registers for improved memory hierarchy efficiency

Important: async_copy_wait_all() only synchronizes the asynchronous copy operations—threads still need explicit barriers (barrier()) to ensure all threads in a block see the shared memory data before computation begins.

Across these puzzles, we’ve implemented the four fundamental archetypes that power most GPU computing:

| Map-Reduce | dot product, axis-sum | warp-level parallel reduction trees |

| Stencil | pooling, 1D/2D convolution | spatial tiling with halo exchanges |

| Scan | prefix sum | hierarchical up-sweep + down-sweep |

| Dense Linear Algebra | matrix multiplication | cooperative tiling + register reuse |

These four archetypes form the building blocks for complex ML kernels, each with specific memory access patterns and synchronization strategies.

Next up: Moar GPU kernels, and finally tackling our favorite technique for the past few years: Attention!

Thanks for sticking around! I hope you picked up a trick or two! Spotted a bug or have a sharper optimization? Open an issue in the repo, or ping me on Twitter/X. Happy hacking!

.png)

![NHR at FAU[13]](https://shubhamg.in/posts/mojo_gpu_puzzles/p14_hardware.png)

![NHR at FAU[13]](https://shubhamg.in/posts/mojo_gpu_puzzles/p14_software.png)