Recently, while working on a ReactJS application, I noticed something odd. My AI coding assistant suggested a Node module, one that looked familiar but slightly off. The package name felt plausible, like something I’d seen before, but I couldn’t recall using it explicitly. Out of caution, before accepting the code and installing the module, I checked the npm registry. It didn’t exist. What looked legitimate turned out to be a hallucination, a package that looked real enough for me to trust it. This led me down a rabbit hole.

Welcome to the world of slopsquatting, a term coined by Seth Larson in 2025 for a rising software supply chain threat in which hallucinated packages can lead to real-world exploitation. In this article, I’ll walk through what I uncovered, including data from a recent research study, how different models compare, which programming languages are most at risk, and, most importantly, what we as developers can do to protect ourselves.

In 2024, security researcher Bar Lanyado discovered that AI models, including ChatGPT, were suggesting the use of a non-existent package named huggingface-cli [2]. To test the potential impact, Lanyado uploaded an empty package with this name to the Python Package Index (PyPI). Within three months, it was downloaded over 35,000 times, including by developers from major companies like Alibaba. This experiment demonstrated how AI hallucinations could be exploited to distribute malicious code if threat actors published harmful versions of such packages.

Open Package Ecosystems: Languages like Python and JavaScript have massive open-source package repositories, making it easy for attackers to publish malicious packages.

Blind Trust in AI: Developers often accept AI suggestions without verification, especially when the code “feels right”—a behavior known as vibecoding.

Models Have a Training Cutoff: AI models do not know about packages published after their training data cutoff and may “guess” plausible but fake package names.

Hallucinations happen when a model suggests something that “sounds right” but simply isn’t real. As more code is AI-generated, there is a growing risk that LLMs hallucinate non-existent libraries or recommend similarly named malicious packages. According to a recent survey, up to 97% of developers use generative AI to some extent, with roughly 30% of all code now AI-generated [2,3]. Traditional typosquatting exploits a user who accidentally types lodasah instead of lodash. Slopsquatting works the same way, except that attackers hijack trust in AI outputs by registering malicious packages under hallucinated names.
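The typosquatting half of that comparison is easy to make concrete: a name like lodasah is only a character away from a real package. Here is a minimal sketch of a near-miss check built on Python's standard `difflib` module. The `KNOWN_PACKAGES` list, the `near_misses` helper, and the 0.8 cutoff are my own illustrative assumptions, not something taken from the study.

```python
import difflib

# Illustrative allowlist (an assumption for this sketch); a real one would
# come from your lockfile or a vetted registry mirror.
KNOWN_PACKAGES = ["lodash", "react", "express", "axios"]


def near_misses(name, known=KNOWN_PACKAGES, cutoff=0.8):
    """Return known package names that `name` closely resembles but does not equal."""
    if name in known:
        return []  # exact match: nothing suspicious about the spelling
    # get_close_matches ranks candidates by SequenceMatcher similarity ratio
    return difflib.get_close_matches(name, known, n=3, cutoff=cutoff)


print(near_misses("lodasah"))  # prints ['lodash']
print(near_misses("lodash"))   # prints []
```

Keep in mind that a hallucinated name does not have to resemble any real package, so a similarity check like this only covers the typo-like cases and is no substitute for looking the name up in the registry.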

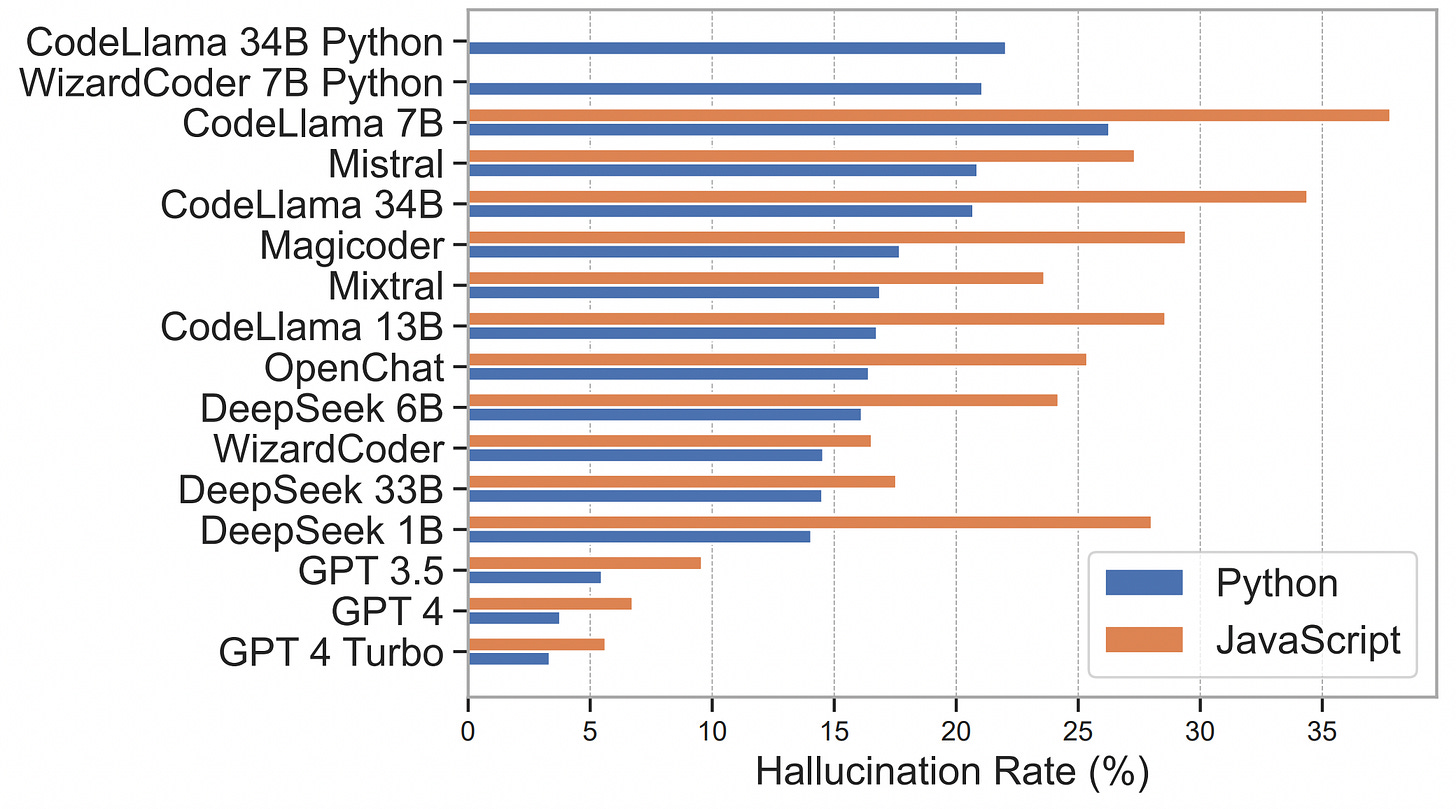

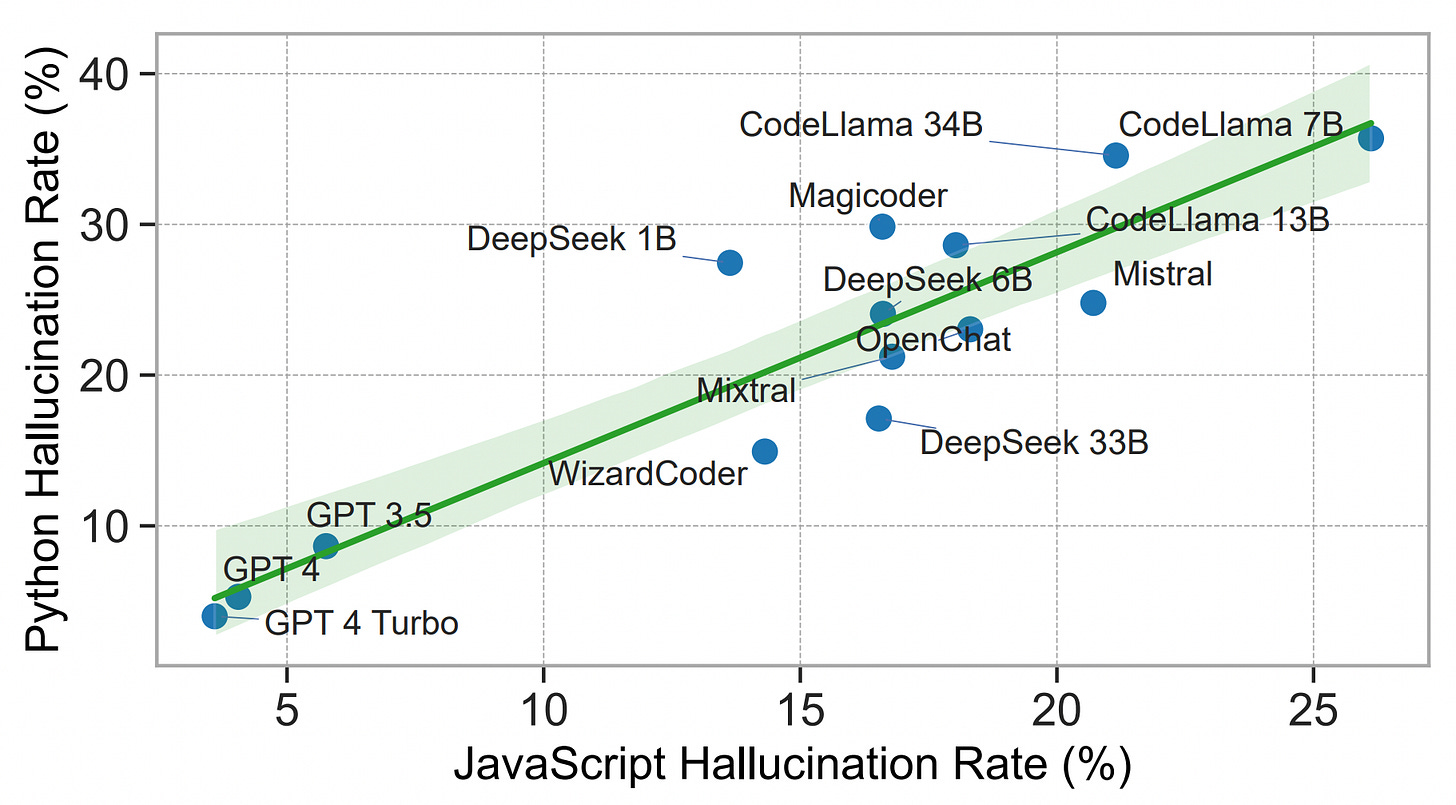

A recent study provides quantitative insights into how often dependency hallucinations occur across different models and languages [1]. In "We Have a Package for You!", Spracklen et al. generated 576,000 code samples across 16 LLMs (both commercial and open-source) for Python and JavaScript and measured the rate of hallucinated imports. They found that commercial (closed) models hallucinate at far lower rates than open-source models. On average, commercial LLMs (e.g. OpenAI’s GPT series) hallucinated only about 5.2% of the time, whereas open-source models had an average rate around 21.7%. For instance, GPT-4 Turbo’s overall hallucination rate was a mere 3.59%, while many open-source models (Mixtral, Mistral, CodeLlama, etc.) were often above 15–20%. In short, the study reported that “GPT series models were found to be 4× less likely to generate hallucinated packages compared to open-source models”.

The choice of programming language also strongly impacts hallucination frequency. In the Spracklen et al. study, ~15.8% of generated Python samples contained a hallucinated import versus ~21.3% for JavaScript.

Another subtle but important behavior observed in the research is language confusion, where an AI model is asked to use packages for one language but suggests packages from another. In the study, 8.7% of hallucinated Python packages were valid JavaScript packages.

To defend against package hallucination attacks, developers and teams should adopt defense-in-depth practices.

Verify every package name: Manually confirm that AI-suggested packages exist in official registries before installation.

Vulnerability Scanning: Integrate tools like Snyk, OWASP Dependency-Check, or Dependabot into every build.

Private Registry: Implement an organization-wide private registry mirror (e.g., Artifactory, Nexus) that only syncs vetted packages from public registries.

Dependency checks in code reviews: Reviewers should scrutinize new or uncommon package imports carefully.

Regularly audit dependencies: Use security scanning tools to identify known vulnerabilities or malicious code in your project's dependencies.

Use dependency pinning: Lock package versions to prevent unintentional upgrades to malicious packages.

AI-Specific Security Training: Develop training modules focused on risks specific to AI-assisted development.

Prompt AI to self-check: Ask your AI assistant to list all imported packages and verify their existence.
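The first item on that list, verifying that a package exists, is easy to script. Below is a minimal sketch that asks the public PyPI and npm metadata endpoints whether a name is registered. The helper names (`registry_url`, `package_exists`) and the injectable `opener` parameter are my own assumptions for illustration, and the sketch treats only an HTTP 404 as "not registered".

```python
import urllib.error
import urllib.parse
import urllib.request

# Public metadata endpoints; both answer HTTP 404 for unregistered names.
REGISTRY_URLS = {
    "pypi": "https://pypi.org/pypi/{name}/json",
    "npm": "https://registry.npmjs.org/{name}",
}


def registry_url(name, ecosystem):
    """Build the metadata URL, escaping the '/' in scoped npm names."""
    quoted = urllib.parse.quote(name, safe="@")
    return REGISTRY_URLS[ecosystem].format(name=quoted)


def package_exists(name, ecosystem="pypi", opener=urllib.request.urlopen):
    """Return True if the registry knows the name, False on a 404.

    `opener` is injectable so the check can be exercised without network
    access. Other HTTP errors are re-raised instead of being read as "missing".
    """
    try:
        with opener(registry_url(name, ecosystem), timeout=10) as response:
            return response.status == 200
    except urllib.error.HTTPError as err:
        if err.code == 404:
            return False
        raise
```

Calling `package_exists("some-suggested-name", "npm")` before running an install command is enough to catch a name that nobody has registered. A `True` result is not proof of safety, though: as the huggingface-cli experiment showed, someone may already have registered the hallucinated name, so an existing but unfamiliar package still deserves a look at its maintainers, age, and download history.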

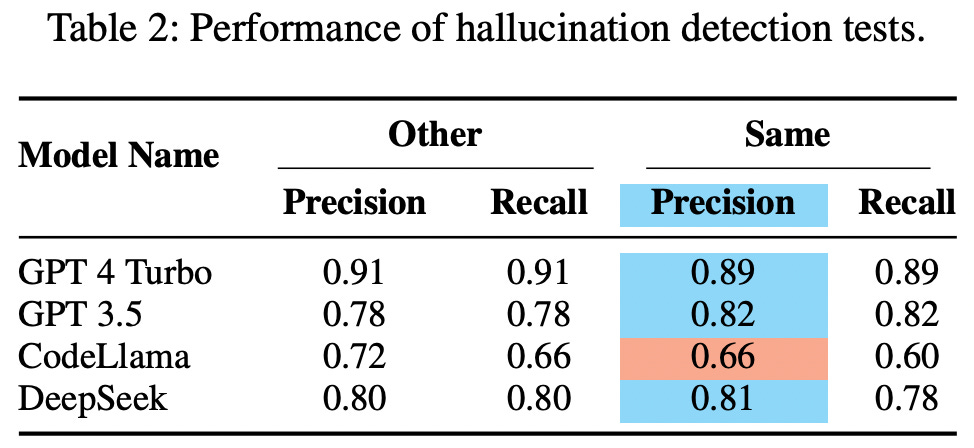

A key question in mitigating the threat of hallucinated packages is whether language models can identify their own mistakes. According to Spracklen et al., the answer is cautiously optimistic: yes, some LLMs can detect hallucinations with fairly high accuracy.

When using AI assistants, project workflows could include automated “hallucination detection” steps: after code generation, run a safety check that prompts the LLM to list all imported packages and cross-checks them against registries.

These results show that GPT-4 Turbo and GPT-3.5 performed best, with over 89% precision and recall in detecting their own hallucinations. DeepSeek followed closely, suggesting it too has a strong internal understanding of its generative patterns. CodeLlama, by contrast, struggled, tending to classify most packages as valid, resulting in lower accuracy for hallucination detection.

The ability of LLMs to self-diagnose errors is significant. It means:

Prompting a model to double-check its own output can be an effective mitigation strategy.

Future AI coding assistants could ship with self-audit mechanisms that automatically flag questionable imports before presenting them to users.

The researchers note that this “self-regulatory capability” may be due to the fact that the same model mechanisms that generate hallucinations also encode their patterns. In other words, a model that hallucinates can often recognize the traces of its own hallucination logic.

This opens promising directions for AI tooling where validation is embedded directly into the assistant—catching hallucinated packages before they ever hit your code.

AI assistants aren’t going anywhere—they’re improving. Techniques such as Retrieval-Augmented Generation (RAG), self-regulatory capability loops, and supervised fine-tuning have been shown to significantly reduce hallucination rates in open-source models. For example, DeepSeek’s hallucination rate dropped from 16.1% to 2.4%, and CodeLlama’s went from 26.3% to 9.3% after applying these mitigation methods.

Slopsquatting is not just theoretical; it is very real. As AI-assisted coding becomes mainstream, security practices must evolve to keep pace.

Importantly, these findings do not mean organizations should abandon AI coding tools. Rather, teams must adopt them safely and knowledgeably. Code reviews remain crucial – human oversight is the last line of defense against attacks. While AI can speed up boilerplate coding, humans must verify security-sensitive decisions. By integrating dependency validation into development pipelines and practicing a security-conscious review culture, teams can continue to reap the benefits of AI assistance without inviting malware.

References:

[1] We Have a Package for You! A Comprehensive Analysis of Package Hallucinations by Code Generating LLMs - https://arxiv.org/abs/2406.10279

[2] Hackers Can Use AI Hallucinations to Spread Malware - https://www.lasso.security/blog/ai-package-hallucinations

[3] Survey: The AI wave continues to grow on software development teams - https://github.blog/news-insights/research/survey-ai-wave-grows/
