I recently wrote about the types of data and analytics that are becoming available in AI coding assistants to help measure the impact these tools are having on software engineering productivity. But coding tools only see part of the software development process, so at best they give a partial picture of overall impact.

That doesn’t cut it.

The AI revolution is unquestionably the dominant topic and focus of the software industry at this moment, and leaders are understandably eager to learn the true impact AI is having on engineering productivity.

AI-enabled software engineering is a new enough concept that there simply hasn’t been sufficient time for best practices to become established. But a growing shared understanding of what measuring AI impact will look like has been emerging in what feels like real time.

In this post I share the emerging picture of how to measure AI impact, assembled from conversations with other engineering leaders and SEI vendors and from reading research in this area. We’ll also look at some practical steps you can take to put these ideas into action.

In order to measure software engineering productivity, we need a clear picture of how software delivery works. For the entire history of software, engineering has been about people: the engineers who make the magic. And the introduction of more standardized processes over time – from regular use of ticketing, following some form of agile backlog management, reviewing code with PRs, managing production deployments with standard DevOps practices – made the development of software an increasingly observable and thus measurable activity. But AI is changing everything.

The space of AI tools supporting software development is moving at an incredible pace, which can make it hard to tell what the future state of an AI-enabled software engineering operation will look like. Just last week we saw the release of Claude 4 from Anthropic, Codex from OpenAI, and Google Gemini Code Assist. And with agentic tools like Codex, Jules from Google, and the growing popularity of Devin, the promise of more autonomous AI-driven software development is becoming a reality.

Without knowing exactly what the end state of the ideal “AI enabled” software organization will look like, I think it’s safe to say that we will be operating in a hybrid model, along multiple dimensions. I’ve started referring to this in shorthand as “hybrid hybrid hybrid”:

Hybrid tools - Engineers, who will still be at the center of building software, have different styles and preferences, and different tools appeal to them. Although GitHub Copilot had the early lead in AI development tools, most organizations I see are using multiple tools. For example, some engineers prefer chat interaction, while others prefer to work in a more familiar IDE setting.

Hybrid use cases - Writing code is only part of the software development process, and AI tools are increasingly showing up for more types of work, likely including everything from design and UX to testing, code review, documentation, and more. While code generation was the earliest focus, I expect organizations to get serious about enlisting AI in these other areas quickly. Within the next year I expect most orgs will power two or more parts of the development workflow with AI assistance.

Hybrid modes of working with AI - AI can work as a co-pilot or assistant, working with a human user who is the primary driver of the work. Or it can act more autonomously as an agent, with its output then reviewed by humans. Again, I expect the ideal end state is not an either-or; instead, both ways of working will be present in most orgs. For more complex and critical parts of the code, and especially when there are subtle trade-offs that depend on understanding future technical and business requirements, humans are likely to keep an important role in the driver’s seat. More straightforward work, especially when it can be confidently and clearly specified, will be routed to AI agents.

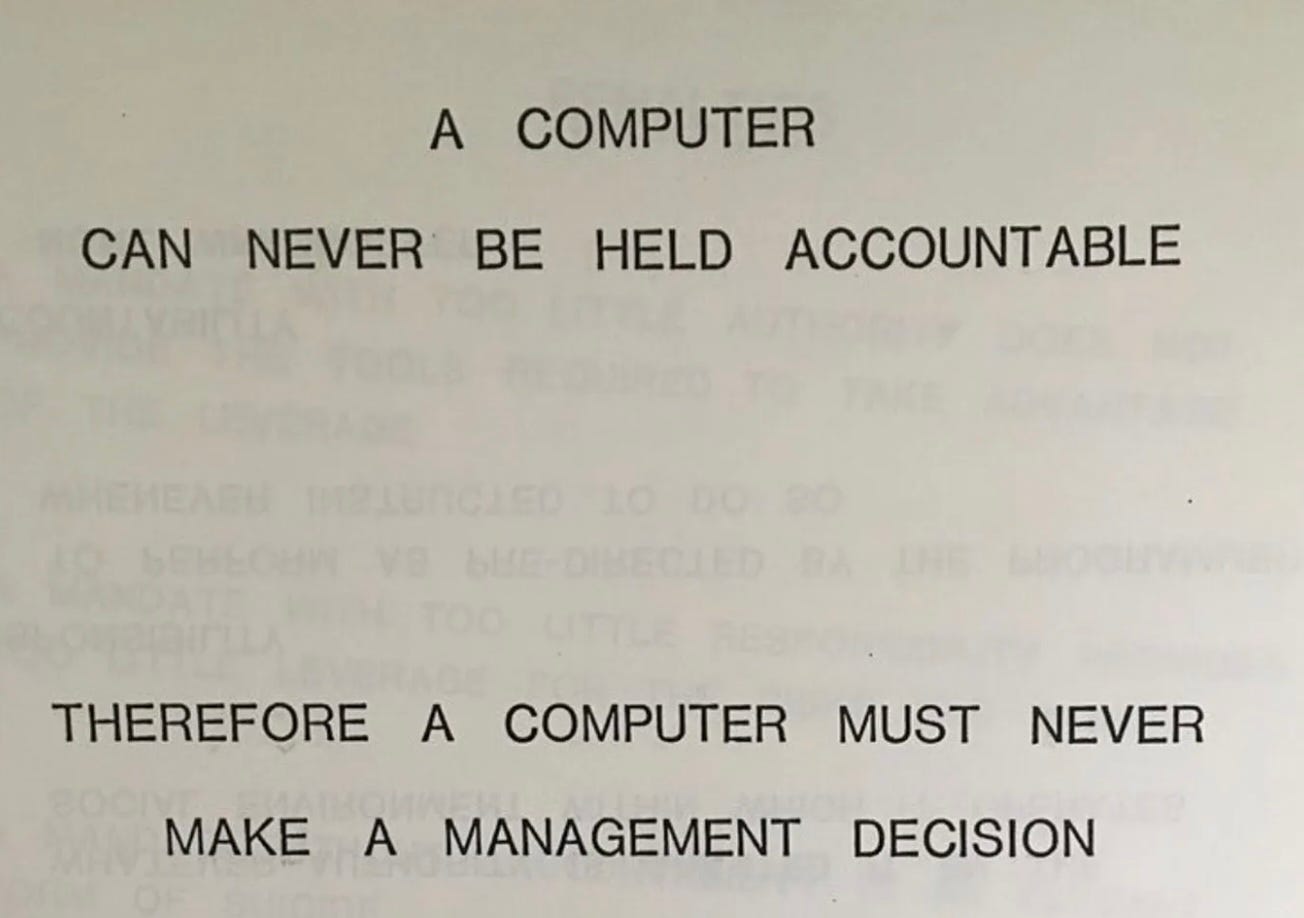

This last dimension, hybrid modes of working with AI, highlights something important: humans will continue to be essential in software organizations. This relates back to a famous quote from a 1979 IBM slide deck: “A computer can never be held accountable, therefore a computer must never make a management decision.”

This remains fundamentally true, and the domain of software engineering is no exception. We need people who understand the design and implementation of the system, even if AI is taking on an increasing share of the development work. If something goes wrong, it’s the humans that we will hold accountable for resolving the issue and making sure that remediation actions are taken to avoid the issue in the future. If our software architecture cannot accommodate future requirements and we have to do costly rework to serve the business, we won’t go back and blame an AI tool. Rather, we’ll look back at the human decisions that went wrong and figure out how we can avoid similar mistakes in the future.

The only way I see to accomplish all of this is for humans to be deeply involved in the software development work, including at least some of the coding. We certainly want humans reviewing all of the code, so even if we use automated PR agents, we should still expect humans to participate in reviews. And that means they will need to be actively engaged with the code. As I said above, I expect that humans will continue to be in the driver’s seat for the more complex, critical, and/or ill-defined coding tasks. I think of this as much like how more senior (Staff+) engineers already operate with more junior teams: staying engaged in the code, but handing off as much as possible to the team.

Humans will need to work on the code. And for similar reasons, humans will need to be engaged in the other parts of the software process. For every step – from design to coding, testing, writing docs, etc. – people will still need to do some of the work and review much or all of what AI agents produce.

This tells me that our basic ways of managing and tracking work, as well as the systems that support our work, like ticketing and SCM, will likely be the systems that we use today (Jira, Git, etc.), modulo natural evolution. And if our systems and processes look similar to today’s, even though AI agents are participating in them, then our methods of measurement will, to a large extent, remain valid and continue working.

In other words, I believe we can expect our tools and methods for measuring engineering productivity – including Software Engineering Intelligence platforms like Jellyfish, DX, and LinearB – to continue giving us a valid picture of productivity even as we adopt AI into our engineering practices.

Of course, AI is a fundamental change to how software engineering is done. Nothing about my argument above contradicts that. And as a major change to how we’re working, it’s no surprise that leaders want deep and specific visibility into the impact of AI.

While we can expect AI to support many aspects of the software development process, tools that help with the coding phase have already been available for a couple of years and are clearly already widely adopted and delivering significant value. Early adopters of these tools have already been grappling with the question of how to quantify impact, which gives us emerging insight into what measuring AI impact will look like.

So far we see teams measuring impact at three levels: Adoption, Efficacy, and Impact. These roughly follow a sequence of increasing maturity. First we measure whether tools are being adopted. Then we look at whether the tools are effective within their specific use case. Finally, we look at whether overall AI usage is having an impact on outcomes.

Adoption is the natural place to start, and actually represents a non-trivial goal. While some teams start out with a “grassroots” approach to adopting AI coding tools, larger and more mature teams have generally been taking intentional steps to get their engineers working with AI. This includes actions such as procuring enterprise licenses for selected tools, establishing usage guidelines, and implementing some amount of enablement and best practices capture.

When tools are put in place, it’s natural to want to know whether usage is increasing and, if not, what the blockers are.

There are two natural ways to look at these questions.

The first is by looking at usage data from the tools themselves. Most, if not all, AI coding tools provide dashboards and/or APIs with data about which users are active, and on which features. These tell us whether engineers are using the tools.
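As a rough illustration of turning raw usage data into an adoption number, the sketch below assumes a hypothetical export with one row per user, day, and feature (the `UsageRecord` fields are illustrative, not any vendor’s schema) plus a known set of licensed seats. Adoption rate here is simply the share of licensed engineers with any activity in the window.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class UsageRecord:
    """One row of a hypothetical AI-tool usage export (field names assumed)."""
    user: str
    day: date
    feature: str  # e.g. "completions", "chat", "agent"


def adoption_rate(records: list[UsageRecord], licensed: set[str],
                  start: date, end: date) -> float:
    """Share of licensed engineers with any AI activity between start and end (inclusive)."""
    if not licensed:
        return 0.0
    active = {r.user for r in records
              if start <= r.day <= end and r.user in licensed}
    return len(active) / len(licensed)


def active_users_by_feature(records: list[UsageRecord],
                            start: date, end: date) -> dict[str, int]:
    """Count of distinct active users per feature in the window."""
    users: dict[str, set[str]] = {}
    for r in records:
        if start <= r.day <= end:
            users.setdefault(r.feature, set()).add(r.user)
    return {feature: len(names) for feature, names in users.items()}


if __name__ == "__main__":
    records = [
        UsageRecord("ana", date(2025, 5, 5), "completions"),
        UsageRecord("ana", date(2025, 5, 6), "chat"),
        UsageRecord("ben", date(2025, 5, 7), "completions"),
    ]
    seats = {"ana", "ben", "cho", "dev"}
    week = (date(2025, 5, 5), date(2025, 5, 11))
    print(adoption_rate(records, seats, *week))     # 0.5
    print(active_users_by_feature(records, *week))  # {'completions': 2, 'chat': 1}
```

Tracked week over week, the overall rate shows whether adoption is growing, and the per-feature breakdown hints at which capabilities engineers actually find useful.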

Second, it’s important to include explicit questions about AI adoption in your Developer Experience surveys. Developer experience surveys have been a best practice for some time and are an essential part of most standard engineering metrics models, such as SPACE and GitHub ESSP. Because AI is the most important technology transformation of our time, it’s critical that we get firsthand feedback from engineers about whether we’re clearing a path for them to get value out of AI in their workflow. In fact, the ESSP recommends “Copilot satisfaction” as one of its core metrics.

Once we’re managing adoption, the next question is whether the tools are working, at least locally within the use case they are intended to support. For coding assistants like Copilot, this has largely come down to recommended completions when writing code in an IDE. For chat-based and now agentic tools, we start to look at larger code changes.

In either mode, two measures are in wide use and provide a good picture of efficacy: acceptance rate and code impact.

Acceptance rate is essentially “what percentage of the recommendations from the feature are accepted.” For IDE-based development, this is the acceptance rate on completions. For chat and agents, this is the acceptance rate on larger code changes.
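In code, acceptance rate is just accepted over shown. The sketch below assumes you already have counts of suggestions shown and accepted for each mode of use; the mode names and numbers are made up for illustration, and each tool reports these counts in its own terms.

```python
def acceptance_rate(shown: int, accepted: int) -> float:
    """Fraction of AI suggestions that were accepted; 0.0 if none were shown."""
    if shown < 0 or accepted < 0 or accepted > shown:
        raise ValueError("expected 0 <= accepted <= shown")
    return accepted / shown if shown else 0.0


# Hypothetical weekly counts: (suggestions shown, suggestions accepted)
weekly_counts = {
    "ide_completions": (1200, 330),  # individual inline completions
    "agent_changes": (40, 22),       # larger multi-line or multi-file changes
}

for mode, (shown, accepted) in weekly_counts.items():
    print(f"{mode}: {acceptance_rate(shown, accepted):.1%}")
# ide_completions: 27.5%
# agent_changes: 55.0%
```

Keeping the modes separate matters: one accepted agent change can carry far more code than one accepted completion, so the two rates aren’t directly comparable.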

Code impact is essentially the amount of code, typically measured in “lines of code” (despite all of the well-known limitations of LoC as a measure!), generated by AI. This measure has received a lot of attention in recent weeks, since Microsoft CEO Satya Nadella revealed that around 30% of code in new areas is being generated by AI!
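One subtlety when rolling code impact up across repositories or weeks: sum the AI and total line counts before dividing, rather than averaging per-period percentages, so that small periods don’t carry outsized weight. A minimal sketch, assuming your tooling gives you (AI lines added, total lines added) pairs; the example numbers are invented.

```python
def code_impact_share(periods: list[tuple[int, int]]) -> float:
    """Share of added lines attributed to AI across several periods or repos.

    Each tuple is (ai_lines_added, total_lines_added). Counts are summed
    before dividing, so each period is weighted by how much code it added.
    """
    if any(ai < 0 or ai > total for ai, total in periods):
        raise ValueError("expected 0 <= ai_lines <= total_lines")
    ai_total = sum(ai for ai, _ in periods)
    all_total = sum(total for _, total in periods)
    return ai_total / all_total if all_total else 0.0


# Hypothetical example: a busy repo and a quieter one
repos = [(300, 1000), (50, 500)]
print(f"{code_impact_share(repos):.1%}")  # 23.3%

naive = sum(ai / total for ai, total in repos) / len(repos)
print(f"{naive:.1%}")  # 20.0% (unweighted mean of per-repo ratios)
```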

Both of these measures are readily available from the AI coding tools themselves. Beyond the Microsoft example, anecdotally many teams seem to be seeing code impact in the 20-30% range once they’re fully productive with AI tools.

Of course, lines of code do not equal true impact. They need to be the right lines of code delivered in the right way as part of the overall development workflow.

To understand the true impact on engineering, we need to look at our core productivity measures and consider how AI adoption affects them. I’ve covered metrics models in past posts, but briefly, these core measures typically include flow metrics such as cycle time (PR and ticket), velocity measures such as PRs/team/week, quality measures such as change failure rate, work allocation measures such as percentage of effort on innovation, etc. In other words, we want to look at essentially all of the standard metrics in a DORA/SPACE/ESSP model and understand how AI changes them.
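Two of these core measures are easy to sketch. The example below assumes a hypothetical list of production deployments, each flagged by whether it caused a failure, and a list naming the owning team of each PR merged in a fixed window. SEI platforms derive these from Jira, Git, and deployment data using their own, more careful definitions.

```python
from collections import Counter


def change_failure_rate(deploy_failed: list[bool]) -> float:
    """Fraction of production deployments that led to a failure (e.g. incident or rollback)."""
    return sum(deploy_failed) / len(deploy_failed) if deploy_failed else 0.0


def prs_per_team_per_week(merged_pr_teams: list[str], weeks: int) -> dict[str, float]:
    """Merged PRs per team per week; one list entry per merged PR, naming its team."""
    if weeks <= 0:
        raise ValueError("weeks must be positive")
    return {team: count / weeks for team, count in Counter(merged_pr_teams).items()}


deploys = [False, False, True, False]          # one failure in four deploys
merged = ["payments"] * 12 + ["search"] * 8    # hypothetical 4-week window
print(change_failure_rate(deploys))            # 0.25
print(prs_per_team_per_week(merged, weeks=4))  # {'payments': 3.0, 'search': 2.0}
```

Computed consistently before and after an AI rollout, or per adoption cohort, figures like these form the baseline that any AI impact is measured against.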

The good news is that these standard metrics are already well understood and readily measurable using leading Software Engineering Intelligence (SEI) platforms. The key question is how to relate AI usage and adoption to productivity measures.

SEI platforms are adding functionality to do exactly this. Jellyfish has taken an early lead on this front with their AI Impact dashboard, which provides views of key metrics sliced by engineer cohorts with varying levels of adoption.
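To make the cohort idea concrete, here is a minimal sketch of slicing one metric by adoption level. It assumes that, for each engineer, you have the share of working days with AI activity (from the tool’s usage data) and a list of PR cycle times in hours (from SEI or Git data). The `cohort` thresholds are arbitrary placeholders, not Jellyfish’s definitions.

```python
from statistics import median


def cohort(ai_day_share: float) -> str:
    """Bucket an engineer by share of days with AI activity (placeholder thresholds)."""
    if ai_day_share >= 0.5:
        return "heavy"
    if ai_day_share >= 0.1:
        return "light"
    return "none"


def median_cycle_time_by_cohort(ai_day_share: dict[str, float],
                                cycle_hours: dict[str, list[float]]) -> dict[str, float]:
    """Median PR cycle time (hours) for each adoption cohort."""
    groups: dict[str, list[float]] = {}
    for engineer, hours in cycle_hours.items():
        label = cohort(ai_day_share.get(engineer, 0.0))  # no usage data -> "none"
        groups.setdefault(label, []).extend(hours)
    return {label: median(values) for label, values in groups.items() if values}


usage = {"ana": 0.8, "ben": 0.3, "cho": 0.0}
cycles = {"ana": [20.0, 30.0, 26.0], "ben": [40.0, 34.0], "cho": [50.0, 44.0, 60.0]}
print(median_cycle_time_by_cohort(usage, cycles))
# {'heavy': 26.0, 'light': 37.0, 'none': 50.0}
```

Medians are used because cycle times tend to be skewed by a few long-running PRs. Cohorts are also self-selected, so differences between them show correlation, not necessarily the effect of AI alone.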

In the meantime, if you don’t have access to a view like this, you can still start to get meaningful data. For example, if AI adoption varies by existing slices of the data – such as teams or code areas – then you can slice by these dimensions to see comparative AI impact. And there’s always the dimension of time. You can get AI usage for a time period from the AI coding tools themselves, and then look at productivity metrics alongside AI adoption trends by time. While these types of “hacks” aren’t perfect and won’t allow you to drill into the data in every way that you’d want, they start to give a picture of true AI impact.

If you’re already reporting on AI impact, even if it’s looking at the adoption and efficacy metrics that the AI coding tools provide, then congratulations! You’re more likely to be having pleasant boardroom conversations about riding the leading edge of AI-enabled software engineering. But if you’re not there yet, it’s easy to get going. So, what can you do to get started?

Probably the most basic recommendation is to make sure you have a good metrics strategy defined and are advancing its implementation and usage in your org. As we can see from the “end game” of measuring AI impact, it’s all about building upon established best practices for measuring engineering productivity in the current environment.

I’ve previously written about practical approaches to developing and implementing a metrics strategy. The rapid rise of AI in software engineering along with huge expectations about the resulting productivity gains we should see has only multiplied the importance of measuring and communicating engineering productivity.

In addition to ensuring that you’re moving towards a solid engineering metrics strategy, it’s worth quickly getting some basic reporting on AI usage in place. Stakeholders want clear signals that engineering leadership is fully engaged with the AI revolution. Formally reporting even the basics, such as adoption rates, developer experience around AI, and efficacy measures like acceptance rate and code impact, clearly shows that this is the case.

Again, these basic measures of AI coding are readily available, so the barrier to taking early action here is pretty low. Boards and CEOs are likely to be pointing to reports such as the now famous 30% code impact number from Satya Nadella and asking, “How are we doing on that front?” Be prepared to engage in these conversations, ideally with data, and with a picture of how that data relates to your overall AI adoption plan.

Of course, the overall impact of AI in software engineering is a fast-moving target. This makes interpreting any absolute data point hard. For example, a Jellyfish analysis of Copilot impact across 20,000 engineers at 300 different companies found some impressive results, including a 25% time reduction on coding tasks, a 12% increase in overall PR output, and a 17% increase in allocation to innovation versus maintenance. These results seem pretty amazing to me, especially since they’re based on hard data from Jira and GitHub, not on self-reported estimates. But is this approaching the optimal impact, or just a step along the way? The report is clear that these numbers are far from final, especially since the analysis was done last year, and AI usage by developers has moved significantly since then.

The incredibly rapid rate of new tools and better models becoming available quashes any real hope we have of estimating “ideal” or “target” impact metrics at this time. We can’t look at studies on existing usage and pinpoint what a good code impact number looks like, or what good lifts on our core productivity numbers should be. But we know that the trend should be positive. When characterizing the data for our stakeholders, I think we have to look at trends (we should still be seeing steady improvement), and, to the extent possible, relate our results to early industry reports. We may not have the 30% code impact of Microsoft, but if our adoption and impact trend is positive, and we’re taking proactive steps to drive it, then we’re probably in a decent place.

As a final note, I think it’s safe to assume that we’re in for some surprises in this area. For example, a recent post on the Research-Driven Engineering Leadership substack covered some research at Bilkent University that studied the impact of AI-powered code reviews, and found that while these had a positive measurable impact on quality, they also significantly slowed down the PR cycle time. The study was just in one environment, and with just the tools available last year when the study was conducted, so I don’t think that this necessarily represents durable insight. But it highlights the fact that an engineering team is a complex system, and overall productivity is a non-obvious function of many factors. We need to be commensurately sophisticated with our approaches to measurement if we don’t want an overly simplistic view of AI impact.
