Picture this: You’re a lead developer on a data-heavy project. 90% of your business logic lives in SQL. Your team of developers writes Snowflake queries all day long.

But here’s how we “tested” our SQL:

-- Copy this chunk from the codebaseWITH revenue_calculation AS (

SELECT c.name, SUM(p.price * ol.quantity) as revenue

FROM clients c

JOIN orders o ON c.id = o.client_id

-- ... 30 more lines of business logic

)

SELECT * FROM revenue_calculation WHERE name = 'TestClient';

- Copy-paste the SQL into Snowflake workbook

- Manually substitute some test values

- Run it and eyeball the results

- Hope it works in production

Sound familiar? We called this “testing.” It was actually just “hoping with extra steps.”

The real problems were obvious:

- No repeatability: Every “test” was different

- No automation: Manual copy-paste every time

- No confidence: “It works on my query” syndrome

- No coverage: Complex edge cases never tested

But here’s the thing about problems that nearly break you: they often lead to the most elegant solutions.

Frustrated with manual testing, my first “brilliant” idea was component testing. Create real Snowflake databases for each test, load real data, execute real business logic, assert real results.

def test_revenue_calculation():# Setup: Create dedicated test database

test_db = create_snowflake_test_db()

# Load test data

test_data = pd.DataFrame({...})

test_data.to_snowflake(test_db, table='orders')

# Execute business logic

result = execute_revenue_sql(test_db)

# Assert

assert result['total_revenue'] == expected_value

# Cleanup

drop_database(test_db)

It was clean. It was thorough. It was completely unsustainable.

The brutal math:

- 30 seconds to create a database

- 10 seconds per SQL test

- 5 minutes for a complete test suite

- €€€€€ month in compute costs

- Frustrated developers avoiding writing tests

That’s when I got the manager meeting with the dreaded question: “Can you explain this Snowflake bill?”

The breakthrough came during a particularly frustrating debugging session. I was waiting for a Snowflake test to complete when I stumbled across SQLGlot in a random GitHub discussion.

“SQLGlot is a no-dependency SQL parser, transpiler, optimizer, and engine. It can translate between 20 different dialects…”

Wait. Translate between dialects?

import sqlglot# SQL written in Snowflake dialect

snowflake_sql = """

SELECT

DATE_TRUNC('month', order_date) AS month,

IFNULL(revenue, 0) AS revenue,

LAG(revenue) OVER (ORDER BY order_date) AS prev_revenue

FROM monthly_sales

WHERE order_date >= DATEADD('month', -12, CURRENT_DATE())

"""

# Magic happens here

duckdb_sql = sqlglot.transpile(

snowflake_sql,

read="snowflake",

write="duckdb"

)[0]

print(duckdb_sql)

# SELECT

# DATE_TRUNC('month', order_date) AS month,

# COALESCE(revenue, 0) AS revenue,

# LAG(revenue) OVER (ORDER BY order_date) AS prev_revenue

# FROM monthly_sales

# WHERE order_date >= (CURRENT_DATE() + INTERVAL '-12' MONTH)

This changes everything.

The second piece of the puzzle was DuckDB. If you haven’t heard of DuckDB, imagine SQLite but designed for analytics workloads:

- OLAP in-memory: Perfect for analytical queries

- 99% SQL compatibility: Supports most functions you actually use

- Zero setup:

- pip install duckdb

- Millisecond performance: No network latency

The vision crystallized: Write once in Snowflake dialect, test everywhere.

The framework I built rests on three fundamental pillars:

How do you keep your local DuckDB schema in sync with production Snowflake? Meet sqlacodegen:

# Generate SQLAlchemy models directly from Snowflakesqlacodegen \

--generator declarative \

--outdir src/db \

--outfile models.py \

snowflake://user:pass@account/database/schema# Result: Clean, PEP8-compliant SQLAlchemy models automatically generated

# src/db/models.py

from sqlalchemy import Column, Integer, String, DECIMAL, DateTime, ForeignKey

from sqlalchemy.ext.declarative import declarative_base

Base = declarative_base()

class Client(Base):

__tablename__ = 'clients'

__table_args__ = {'schema': 'my_shop'}

id = Column(Integer, primary_key=True)

name = Column(String(255), nullable=False)

email = Column(String(255))

class Product(Base):

__tablename__ = 'products'

__table_args__ = {'schema': 'my_shop'}

id = Column(Integer, primary_key=True)

name = Column(String(255), nullable=False)

price = Column(DECIMAL(10, 2), nullable=False)

The magic: Schema changes in Snowflake automatically propagate to your local environment. No manual model maintenance. Ever.

SQLGlot handles most SQL dialect differences automatically, but the real world is messier. Some Snowflake patterns don’t transpile cleanly:

Parser Error: LISTAGG with DISTINCT not supportedSolution: Custom Rules Engine

def _apply_duckdb_custom_rules(self, sql: str) -> str:# Handle LISTAGG DISTINCT WITHIN GROUP

pattern = r'LISTAGG\s*\(\s*DISTINCT\s+([^,]+),\s*([^)]+)\s*\)\s*WITHIN\s+GROUP.*'

return re.sub(pattern, r'STRING_AGG(DISTINCT \\1, \\2)', sql)

Is it elegant? No. Does it work? Absolutely. Sometimes pragmatism beats purity.

The proper solution? Either contribute these transformations back to SQLGlot as AST-based rules, or build a custom code generator that extends sqlacodegen to handle these patterns natively. But when you’re trying to ship a solution that works today, regex rules get the job done.

The Querier pattern provides a single interface for multiple backends:

class Querier(ABC):def execute_sql(self, sql: str) -> pd.DataFrame:

# 1. Detect dialect mismatch

# 2. Apply custom rules if needed

# 3. SQLGlot transpilation

# 4. Execute on appropriate engine

# 5. Return standardized DataFrame

pass# Business services stay pure

class RevenueAnalytics:

def __init__(self, querier: Querier):

self.querier = querier # Dependency injection magic

def get_revenue_by_client(self):

sql = "SELECT ... LISTAGG(...) ..." # Snowflake SQL

return self.querier.execute_sql(sql) # Backend-agnostic

Instead of scattered SQL strings, I organized business logic into focused services:

class RevenueAnalytics:def __init__(self, querier: Querier):

self.querier = querier

def get_revenue_by_client(self) -> pd.DataFrame:

sql = """

WITH client_revenue AS (

SELECT

c.name AS client_name,

SUM(p.price * ol.quantity) AS total_revenue

FROM clients c

JOIN orders o ON o.client_id = c.id

JOIN order_lines ol ON ol.order_id = o.id

JOIN products p ON p.id = ol.product_id

GROUP BY c.name

)

SELECT * FROM client_revenue ORDER BY total_revenue DESC

"""

return self.querier.execute_sql(sql) # Returns DataFrame directly

The ctcontext fixture makes tests completely transparent:

@pytest.fixturedef ctcontext():

if os.getenv('USE_SNOWFLAKE'):

return SnowflakeTestContext()

else:

return DuckDBTestContext() # Default: fastdef test_revenue_calculation(ctcontext):

service = RevenueAnalytics(ctcontext.querier)

revenues = service.get_revenue_by_client()

# Same business logic validation

assert len(revenues) > 0

assert all(r.total_revenue > 0 for r in revenues)def test_revenue_calculation(ctcontext):

service = RevenueAnalytics(ctcontext.querier)

revenues = service.get_revenue_by_client()

# Same business logic validation

assert len(revenues) > 0

assert all(r.total_revenue > 0 for r in revenues)

Execution:

# Development: Local & lightning fast$ pytest test_revenue.py

Backend: DuckDB, Time: 0.1s ⚡# Validation: Production confidence

$ USE_SNOWFLAKE=1 pytest test_revenue.py

Backend: Snowflake, Time: 10.2s ✅

Some business logic requires advanced Snowflake features:

def get_customer_product_preferences(self) -> List[Dict[str, Any]]:# Uses LISTAGG - Snowflake specific

sql = """

SELECT

c.name AS customer,

LISTAGG(DISTINCT p.name, ', ') WITHIN GROUP (ORDER BY p.name) AS purchased_products,

COUNT(DISTINCT p.id) AS unique_products

FROM clients c

JOIN orders o ON c.id = o.client_id

JOIN order_lines ol ON o.id = ol.order_id

JOIN products p ON ol.product_id = p.id

GROUP BY c.name

ORDER BY unique_products DESC

"""

return self.querier.execute_sql(sql) # Transpiled automatically

The framework automatically converts LISTAGG(DISTINCT ...) to STRING_AGG(DISTINCT ...) for DuckDB while preserving the original SQL for Snowflake.

The complete workflow integrates seamlessly into CI/CD

The magic workflow:

- Schema changes in Snowflake → Models auto-regenerated

- Local tests → Instant feedback

- Production validation → Final confidence

Before the framework:

- Test execution: 5+ minutes per suite

- Cloud costs: €10,000/month for testing (well less, once we change the warehouse size ..)

- Developer flow: Constant interruptions

- Test coverage: Developers avoided writing tests

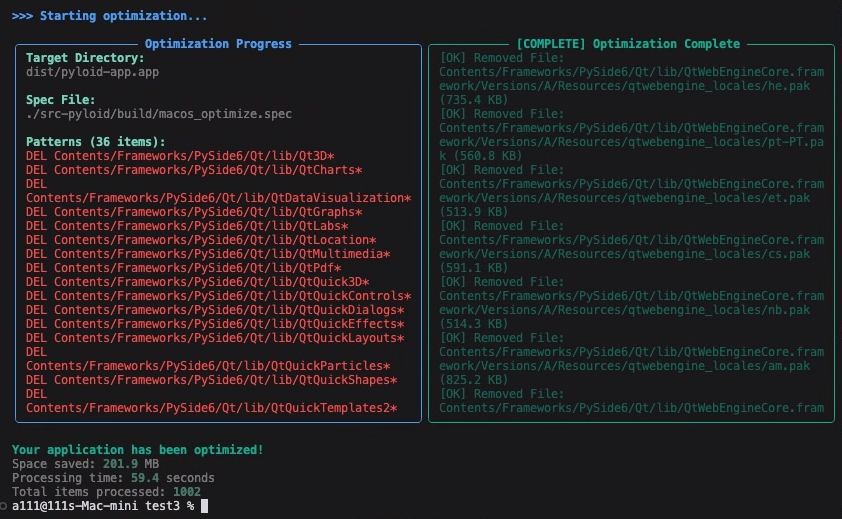

After the framework:

- Test execution: 3 seconds locally, validation in 45s

- Cloud costs: ~€0 for development testing

- Developer flow: Uninterrupted local development

- Test coverage: 3x more tests written (easier = more adoption)

The real impact goes beyond metrics:

Developer Experience:

# Before: The dreaded wait$ run_tests.sh

Connecting to Snowflake...

Creating test database...

Loading test data...

☕ ☕ ☕ (goes for coffee, loses context)# After: Instant feedback

$ pytest tests/

✅ 36 passed in 3.21s

Onboarding: New developers can start contributing on day one without complex Snowflake setup.

Innovation: Teams experiment more when testing is frictionless.

Let’s be honest about the limitations:

Those regex patterns for unsupported SQL features? They’re fragile and require maintenance:

# This works but isn't prettypattern = r'LISTAGG\s*\(\s*DISTINCT\s+([^,]+),\s*([^)]+)\s*\)\s*WITHIN\s+GROUP.*'

Better approach: Contributing proper AST transformations back to SQLGlot.

DuckDB performance ≠ Snowflake performance. The framework tests business logic correctness, not performance characteristics.

Solution: Separate performance test suite still runs against Snowflake.

About 90% of typical analytical SQL patterns work seamlessly. The remaining 10% requires custom handling or graceful fallback.

Sometimes regex rules beat waiting for perfect AST transformations. Ship solutions that work today.

The best testing framework is the one developers actually use. Remove friction at all costs.

Run exhaustive tests locally in seconds, then validate with a smaller production test suite.

Manual schema synchronization will eventually break. Automate everything that can drift.

What started as a desperate attempt to reduce cloud costs became a comprehensive platform for modern SQL testing. The framework now powers testing for multiple teams across different projects, proving that the best solutions often emerge from the most frustrating problems.

The core insight: Don’t test SQL by running SQL in production. Test business logic by running equivalent logic locally, then validate in production.

The key principle: Developer productivity multiplies when you remove friction from the testing workflow.

The ultimate goal: Make SQL testing so fast and easy that developers write more tests, not fewer.

Have you struggled with SQL testing in your organization? What approaches have you tried? Share your experiences and challenges in the comments — I read and respond to every one.

About the Author I’m Vincent Ysmal — a software engineer, architect, and R&D leader with over 30 years of experience across backend systems, data platforms, cloud infrastructure, and engineering teams of all shapes and sizes.

This is my first published post. I wanted to share something practical, rooted in real experience — because sometimes the best ideas come from frustration, curiosity, and a few thousand euros of wasted cloud compute.

📬 Feel free to reach out on LinkedIn — always happy to trade stories or ideas.

Tags: #SQL #Testing #DataEngineering #Snowflake #DuckDB #SQLGlot #DevOps #DataOps #TechnicalLeadership #SoftwareArchitecture

.png)