Large language models (LLMs) are in use by more and more developers. They use them to write code, but also to discover and leverage tools. In this post, you will learn how to make your docs more accessible to LLMs and to developers using them, based on our real world experience.

LLMs and LLM enabled tools such as Claude, ChatGPT and Cursor are used by developers in their daily workflow. In 2024, a survey from GitHub found over 97% of the 2000 developers surveyed “used AI coding tools at work at some point”.

Here at FusionAuth, we have also noticed a trend in the last few months. We started getting inbound sales calls from people who asked ChatGPT about us. We ask people who install our software where they heard about us from and many answer with a specific LLM name or “LLMs/GenAI”.

Helping developers be successful by using our documentation to get to what they are trying to accomplish is something we spend a lot of time on here at FusionAuth. In this post, we’ll talk about ways we have modified our documentation to make it work better with LLMs, and show you how to do so.

There are two ways LLMs can help your company:

- discovery, where an LLM surfaces your tool and helps users discover it—basically a replacement for Google

- building, where an LLM helps a developer build using your tool—basically a replacement for Stack Overflow

The boundaries between these are blurry, but definitely exist. Some doc improvements help with one more than the other.

Here’s a table of the options that will be examined below.

| Build great docs | Helps everyone, including LLMs, developers and search engines | Large, ongoing time investment | Discovery and building |

| LLM powered chatbot | Familiar user interface, aggregates across multiple sources of doc, easy buy decision | Costs money (or time to build), can be inaccurate | Building |

| Generate a llms.txt file | Helps LLMs discover and ingest content, can be auto-updated, emerging standard | Fuzzy effectiveness, updating may be tough depending on where content lives, no current support for multiple sections | Discovery, a bit of building |

| Copy to markdown button | Lets devs use the LLM of their choosing, leverage LLM for use cases you can’t imagine or document | Development time required, may not be used, UX issues | Building |

Now, let’s take a deeper look into each of the options above.

Foundation First: Building Documentation That Works for Both Humans and AI

The first and most important way to help developers and LLMs use your docs is to, well, write them. If they don’t exist, they won’t help anyone. In addition to writing them, you should:

- structure them well, both in how they are arranged and linked and on the page with headings

- include frequently asked questions and troubleshooting tips

- keep them up to date, including generating them from specs or code where applicable

- make them fast to download

- make them consistent

- offer public long tail content like a forum

If this seems like boring hard work, that’s because it is.

Docs are their own product. They are a key way for your community to learn your product. They also allow prospective developers to evaluate your product with lower risk than downloading or signing up. They’re a signal about product quality too.

The effort of good documentation pays off in multiple ways, because in addition to helping LLMs, it also helps SEO. Organic search is still a massive source of traffic. And, following those guidelines above, it helps developers who are reading the docs.

The good folks at Kapa.ai, who provide documentation based LLM chatbots for many companies, including FusionAuth, wrote a great page about how to structure your technical documentation for LLMs.

There is a lot of good advice in that post, but an important tip for content structure is: “When planning content structure, consider how users would find any given section without search. Ensure each section includes enough context to be understood independently”, including product family, name, versions, components and functional goals.

Based on how people read (or don’t) today, you might see the benefits of this approach toward content structure for humans as well.

The downside of building and maintaining good documentation is it takes a lot of effort to do so. But if you don’t get this right, the rest of the tips in this post are not going to help you much.

Solid, updated, accurate documentation is the foundation for anything else related to helping LLMs and developers using them.

Success Metrics

After building and maintaining docs, make sure they are working for your LLMs for both discovery and building.

For discovery, ask developers how they found you. We’ve seen a notable uptick in users mentioning finding FusionAuth via LLMs. In fact, since we started tracking it in our setup wizard, it has been one of our top three sources. You can also manually look for references or unique strings from your docs that are returned by an LLM of your choice. You can also examine the traffic to your website using your access logs, and see which of your content is being retrieved by the LLM user agents. This is something you’ll need access logs to ascertain, since JavaScript tools like Google Analytics don’t track direct requests to files at a certain location.

For building, you’d need to understand the questions people are asking in the LLM of their choice. The field of analytics around LLM served content is nascent. I couldn’t find a better solution than asking questions in a variety of LLMs, then checking response accuracy. Don’t forget to prompt the LLMs for links as well to check if the LLM is sending the user to the right location in your documentation.

Here’s a sample access log line showing ChatGPT retrieving API documentation.

52.255.111.85 - - [01/May/2025:00:17:59 +0000] "GET /docs/apis/two-factor HTTP/2.0" 200 661 "-" "Mozilla/5.0 AppleWebKit/537.36 (KHTML, like Gecko); compatible; ChatGPT-User/1.0; +https://openai.com/bot"While folks talk about LLM scrapers being a major source of traffic, I looked at our logs from January 1 to June 5, 2025 and didn’t see the impact of any LLM scrapers. Here are the percentages of scrapers that identified themselves and made any request resulting in a 200 status code during that timeframe.

| OpenAI | 0.99 |

| Anthropic | 0.55 |

| Perplexity | 0.18 |

| GoogleBot (for Gemini) | 0.54 |

| BingBog (for Copilot) | 0.30 |

All in all, LLM based traffic for these well known options was, at most, 2.6% of our total traffic over this timeframe.

This seemed weird, so I looked at all the user agents making successful requests. There were a significant number of requests from other types of bots, but not other high-profile LLMs.

Deploy an AI-Powered Documentation Assistant (Yes, a Chatbot)

Offering up an LLM based chatbot lets you leverage the docs with a convenient interface. It can be interactive on the docs site and also integrate with other support channels such as Slack, Discord or ticketing systems.

While you can build your own documentation focused chatbot, unless you are an AI focused company wanting to dogfood your solution, you’re better off buying this functionality. Doing so is an easy way to trade money for time.

FusionAuth installed Kapa.ai’s chatbot in 2023 and we have been using it heavily ever since. There are alternatives out there but I haven’t seen any as focused on technical documentation as Kapa.

We don’t just have our technical documentation ingested in Kapa, we also ingest our OpenAPI spec, our YouTube channel, our forum posts and a number of other sources. Kapa has answered tens of thousands of queries, both for external and internal users. It’s great for discovery of documentation or other resources.

Make sure whatever chatbot you use can say “I don’t know” and includes links to sources. We’ve had few reported hallucinations, but they do happen. Having the source means users can verify the answers.

Our recommendation for this option is to find an LLM chatbot vendor and follow their installation instructions.

Success Metrics

The measure of success here is twofold:

- Are people using this tool?

- Are they finding the answers they need?

Both of these metrics should be delivered by any chatbot you use. If you do end up building a solution, make sure you allow time to build such reporting.

Implement llms.txt: The Emerging Standard for AI Discoverability

Another way you can help developers using LLMs succeed with your docs is to build an llms.txt file and other associated files.

LLMs don’t have an understanding of text in the same way humans do. Instead, they decompose text into tokens, which are “a word, part of a word (subword), or even a character”. All LLMs have a context window, which limits the number of tokens they can process. Offering semantic density in your documentation is important for helping LLMs to process your docs better. And markdown text has more density than HTML because there are more tokens that have meaning.

llms.txt is a relatively new standard analogous to the sitemap.xml or robots.txt files, but for LLMs rather than search engine crawlers. There are actually two files:

- llms.txt is a list of URLs with useful content, organized by section. Here’s our docs llms.txt file.

- llms-full.txt is a similar list but includes the full text rather than just the URL of the pages. Here is our docs llms-full.txt file.

It’s unclear if you can have more than one set of llms.txt files for different sections of your site; the standard is still evolving. Here’s an open issue to discuss this.

These files are typically located at the root of your domain: https://example.com/llms.txt for example. This is so LLMs have one place to look.

If you put these files somewhere else or have more than one of them, add a link from your HTML pages to the files. Crawlers for LLMs hunt around, but not too much. We shipped an llms.txt file for our docs in December, but it wasn’t at the root location above. This file was downloaded by the OpenAI chatbot nearly 60 times more often after we linked to it from our documentation portal.

In addition, when you make these files available to developers browsing your site, they can retrieve one or both of them and upload them to an LLM of their choosing. This allows developers to interrogate the content or learn more about the product using their tool of choice.

Ensure these files are automatically updated in the same way your sitemap.xml file is. You want any new or updated documentation to be included with minimal effort.

Here’s a table showing the relative percentages of downloads of either our llms.txt file or our llms-full.txt file, January 1 to June 5, 2025. There may be other LLMs downloading this file as well.

| OpenAI | 71.59 |

| GoogleBot (used for Gemini) | 17.20 |

| Perplexity | 8.22 |

| BingBog (used for Copilot) | 2.80 |

| Anthropic | 0.19 |

Implementation Notes

We build these files for our docs section. Here’s the code for the llms.txt file:

import { getCollection } from "astro:content"; import type { APIRoute } from "astro"; const docs = await getCollection("docs"); export const GET: APIRoute = async ({}) => { return new Response( `## FusionAuth.io Documentation\n\n${docs .map((doc) => { return `- [${doc.data.title}](https://fusionauth.io/docs/${doc.slug}/)\n`; }) .join("")}`, { headers: { "Content-Type": "text/plain; charset=utf-8" } } ); };You could make this more sophisticated by giving documents rankings and sorting more important docs to the top. We haven’t done that yet. For other sections of our website, we handcrafted the llms.txt file.

For the llms-full.txt generated from our documentation, the code looks similar:

import { getCollection } from "astro:content"; import type { APIRoute } from "astro"; const docs = await getCollection("docs"); export const GET: APIRoute = async ({}) => { return new Response( `## FusionAuth.io Full Documentation\n\n${docs .map((doc) => { return `# ${doc.data.title}\n\n${doc.body}\n\n`; }) .join("")}`, { headers: { "Content-Type": "text/plain; charset=utf-8" } } ); };This pulls in the entire body (${doc.body}) rather than just the URL, but is otherwise the same.

We use a lot of includes in our docs. I did some work to inline the include file markdown, but that ended up making the llms-full.txt file too big for the popular LLMs’ context windows.

Success Metrics

Are these files downloaded? How often? By what user agents? Are they primarily downloaded by LLMs or users?

The answers to these questions lie in your access logs, since JavaScript tools like Google Analytics don’t track direct requests of files.

Enable Developer Self-Service with a Copy-to-Markdown Button

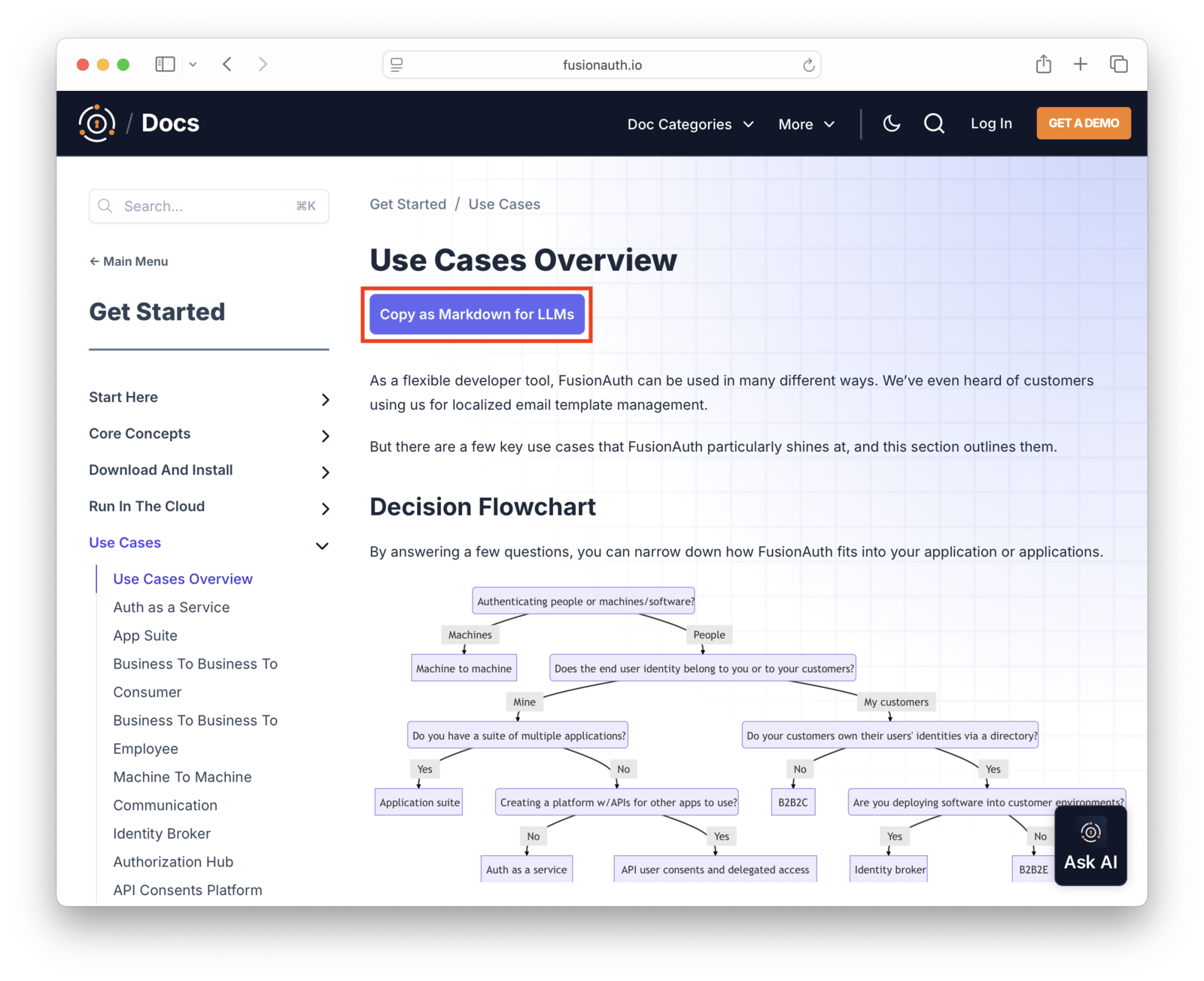

Adding a “copy to markdown” button like below helps developers leverage LLMs in a similar way to providing the llms-full.txt file mentioned above. Folks can copy the text and paste it into their favorite LLM, while using fewer tokens than the llms-full.txt file.

We’ve installed this on every page on our docs site; an example is below:

By offering this button, you let developers transform your documentation for their purpose. Here are some examples of prompts a developer might do for a technical doc like an API:

- summarize all the error statuses of this API

- explain the key concepts here to me “like I’m 5” or “like I’m an expert software architect” or “like I’m a front end developer”

- how does this API compare with a similar API from [competitor]

- show me how to use this API in Rust or C++ (or other possibly unsupported languages)

- provide me 10 sets of fake API requests for this API to use in my test harness

- translate this page to Spanish or Swedish

Basically, you don’t know exactly what is important to the developer at that moment in time, but they do. And they can ask the LLM to provide it.

Of course someone could copy the HTML and then do the transformation, but that uses more tokens and is more cumbersome.

Implementation Notes

We used JavaScript to perform the copy operation when a button was clicked:

document.querySelector('#copy-docs-markdown-llm-button').addEventListener('click', async event => { const button = event.currentTarget; const href = button.dataset.href; const resetTextMS = 2000; const btnText = 'Copy as Markdown for LLMs'; try { // Create the clipboard item immediately within the user gesture const clipboardItem = new ClipboardItem({ 'text/plain': fetch(href).then(async response => { if (!response.ok) throw new Error('Failed to fetch file'); return response.text(); }) }); await navigator.clipboard.write([clipboardItem]); button.textContent = 'Copied'; setTimeout(() => { button.textContent = btnText; }, resetTextMS); } catch (err) { console.error('Error copying file contents:', err); button.textContent = 'Failed'; setTimeout(() => { button.textContent = btnText; }, resetTextMS); } });We also used an astro post:build plugin to generate the markdown file at a sibling location to the HTML, so the JavaScript above could retrieve it.

// src/plugins/markdown-extract.ts import fs from 'node:fs'; import path from 'node:path'; function extractFrontmatter(markdown) { const frontmatterMatch = markdown.match(/^---([\s\S]*?)---/); if (!frontmatterMatch) return { title: null, description: null, content: markdown }; const fmContent = frontmatterMatch[1]; const contentWithoutFM = markdown.slice(frontmatterMatch[0].length).trimStart(); // Simple frontmatter parse for title and description (YAML-ish) const lines = fmContent.split(/\r?\n/); let title = null; let description = null; for (const line of lines) { const [key, ...rest] = line.split(':'); if (!key) continue; const value = rest.join(':').trim(); if (key.trim() === 'title') title = value; if (key.trim() === 'description') description = value; } return { title, description, content: contentWithoutFM }; } function walk(dir, extFilter = ['.md', '.mdx']) { const results = []; for (const entry of fs.readdirSync(dir, { withFileTypes: true })) { const fullPath = path.join(dir, entry.name); // console.log('processing: '+entry.name); if (entry.isDirectory()) { results.push(...walk(fullPath, extFilter)); } else if (!entry.name.startsWith('_') && extFilter.includes(path.extname(entry.name))) { results.push(fullPath); } } return results; } const importRegex = /^\s*import\s+.*?['"](.+?)['"]\s*;?\s*$/gm; function inlineMarkdownImports(filePath, seen = new Set()) { if (seen.has(filePath)) { console.warn(`Skipping already inlined file: ${filePath}`); return ''; } seen.add(filePath); let content = fs.readFileSync(filePath, 'utf-8'); // Map of import name => file path (only for inlining) const importMap = new Map(); // Strip ALL import statements, but collect paths we care about content = content.replace(importRegex, (match, importPath) => { const importNameMatch = match.match(/import\s+(\w+)\s+from/); if (!importNameMatch) return ''; // remove malformed import const importName = importNameMatch[1]; const resolvedPath = path.resolve('./', importPath); if (!fs.existsSync(resolvedPath)) { console.warn(`Import not found: ${resolvedPath}`); return `<!-- Missing import: ${importPath} -->`; } // Only inline if it's in src/diagrams or src/content if ( resolvedPath.includes(path.normalize('/src/diagrams/')) || resolvedPath.includes(path.normalize('/src/content/')) ) { importMap.set(importName, resolvedPath); } // Regardless of whether we inline, we remove the import line return ''; }); // Replace usages of inlined components with their content for (const [name, resolvedPath] of importMap.entries()) { const componentTagRegex = new RegExp(`<${name}(\\s*[^>]*)?\\s*/>`, 'g'); const inlinedContent = inlineMarkdownImports(resolvedPath, seen); content = content.replace( componentTagRegex, inlinedContent ); } const { title, description, content: bodyContent } = extractFrontmatter(content); let header = ''; if (title) header += `# ${title}\n\n`; if (description) header += `${description}\n\n`; return header + bodyContent; } function rewritePath(relPath) { if (relPath.endsWith('/index.mdx')) { return relPath.replace(/\/index\.mdx$/, '.mdx'); } return relPath; } export default function markdownExtractIntegration() { return { name: 'markdown-extract', hooks: { 'astro:build:done': async ({ dir }) => { console.log('Copying content collection entries...'); const contentDir = path.resolve('./src/content/docs'); const contentFiles = walk(contentDir); for (const file of contentFiles) { // console.log(file); const relPath = rewritePath(path.relative(contentDir, file)); const outPath = path.join(dir.pathname || dir,'docs', relPath); const inlinedContent = inlineMarkdownImports(file); fs.mkdirSync(path.dirname(outPath), { recursive: true }); fs.writeFileSync(outPath, inlinedContent, 'utf-8'); // fs.copyFileSync(file, outPath); console.log(`Wrote content: ${relPath}`); } } } } }This astro plugin code:

- finds all the files in our src/content/docs directory

- inlines any content in the file that is a component in either the diagrams or content directory so the markdown file is more useful

- removes the frontmatter

- writes a markdown file in a specific location, peer to the HTML file generated by the astro build

There are still component tags in the output markdown because components provide functionality and HTML. We may use alternative solutions that take HTML and turn it into pure markdown in the future.

Success Metrics

The success metric for this is how many times the copy to markdown button is pressed.

Since this is a user driven event, tracking it via a tool like Google Analytics is great.

Unfortunately, you can’t know what transformation is done on your documentation without asking your users.

Where Is MCP In This?

The Model Context Protocol, or MCP, allows you to let an LLM directly interface with your developer focused product.

We’ve explored but not implemented an MCP for FusionAuth. MCP is great for learning a tool, building prototypes, or debugging. But I’m not sure folks will be using it to replace configuration management in production systems.

MCP is not covered here because it is doc-adjacent but not part of documentation. If you have an opinion about the usefulness of a FusionAuth MCP server, well, there’s an open issue for sharing your feedback. We’d love to hear from you.

Summing It Up

LLMs are here.

Developers are using them to interact with your documentation.

Use one, two, or all of the options presented above to make it easier for them to do so.

.png)