Powerful, centralized tools for monitoring, controlling and understanding your LLM API requests.

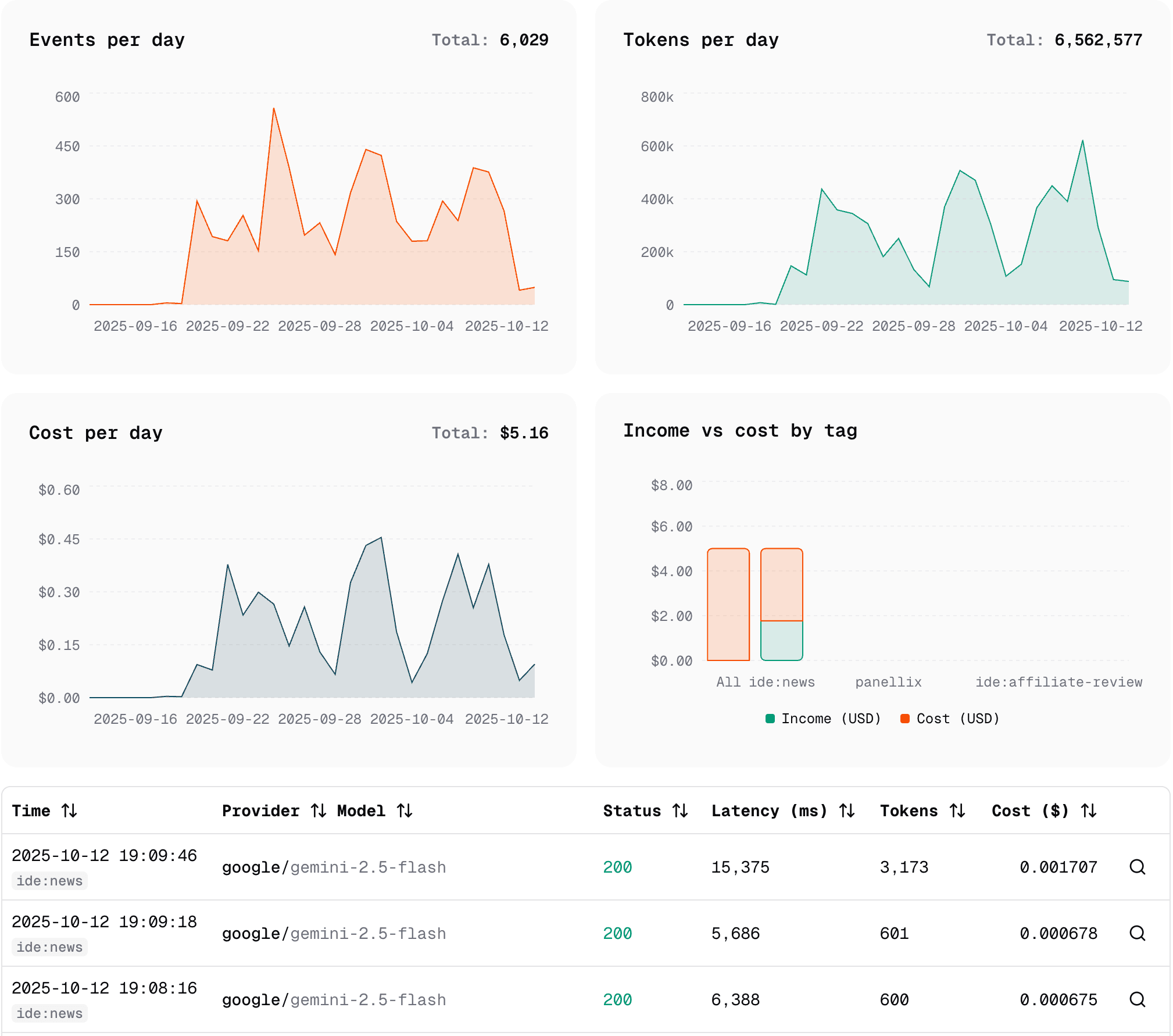

Understand your LLM usage

Monitor your LLM API requests with powerful logging and insights to understand what is really happening in your app.

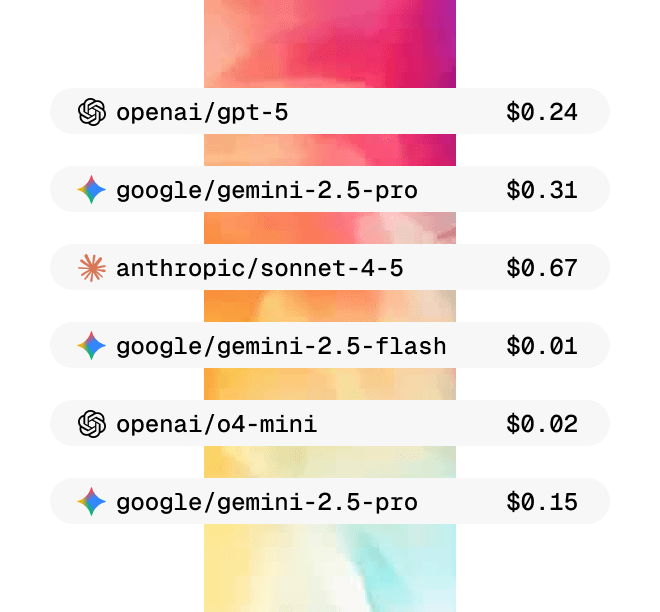

Monitor your LLM costs

All providers, all models, and all costs aggregated and visualized in one place, so no spend goes untracked.

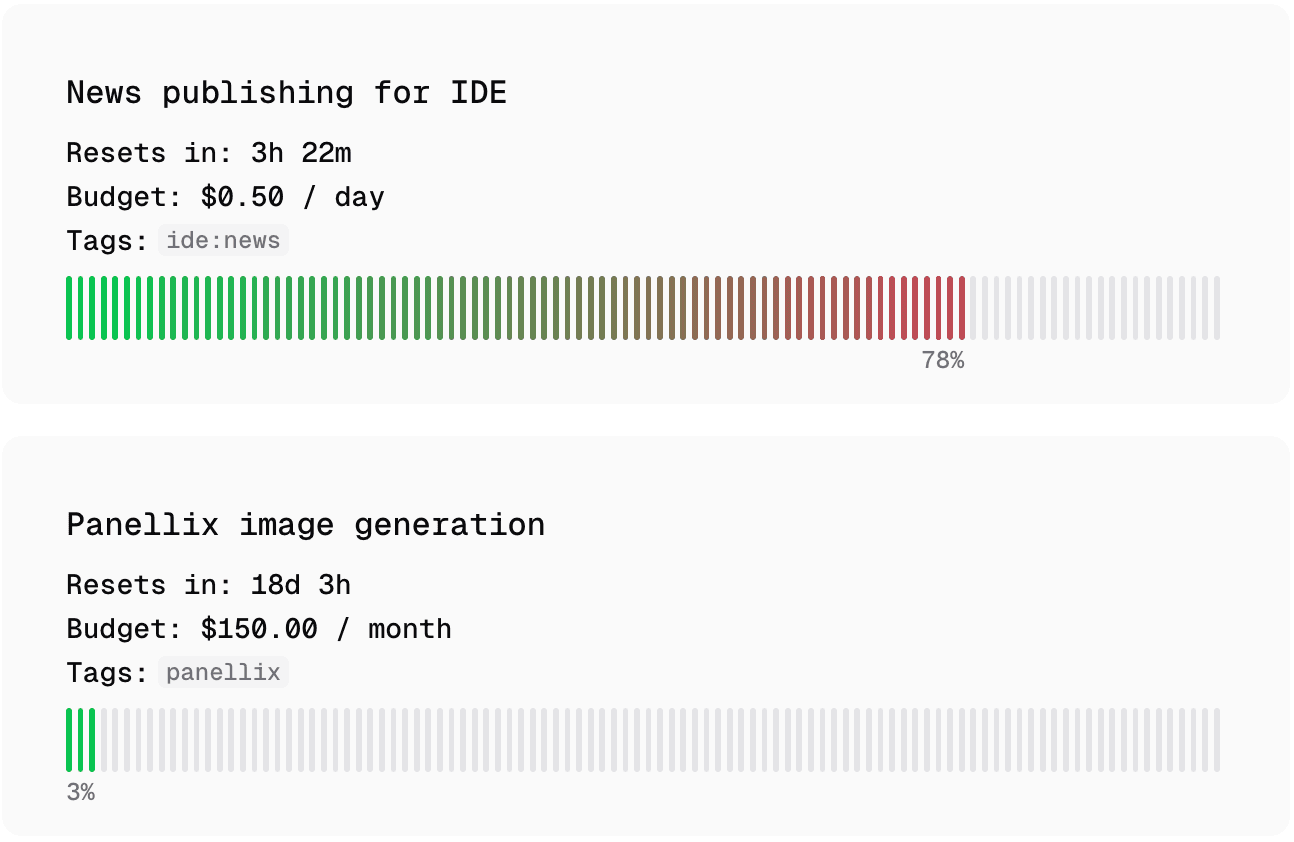

Control your LLM costs

Limit the cost of your LLM requests with versatile rules. De-risk your business by deploying fine-grained, user-specific rate limits.

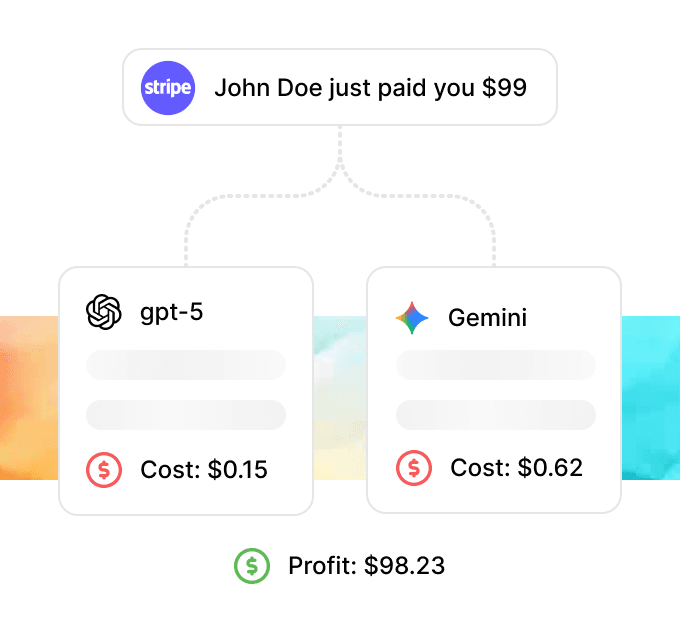

Understand your profitability

Every LLM request you make is ultimately meant to earn revenue. AI Gateway helps you attribute each request to a specific sale or customer.

How do you use AI Gateway?

Integrating AI Gateway couldn't be easier. All you have to do is prepend our domain to the URL of your existing LLM requests. Everything else stays exactly as the original provider's API specifies.
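As a rough illustration of what prepending the domain could look like in code, here is a minimal Python sketch. The gateway domain `ai-gateway.example` is a hypothetical placeholder, and the URL layout (provider host moved into the first path segment) is an assumption made for this example, not the documented format. Check your AI Gateway account for the real domain and scheme. The helper name `via_gateway` is also made up for illustration.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical placeholder: replace with the real AI Gateway domain.
GATEWAY_DOMAIN = "ai-gateway.example"


def via_gateway(provider_url: str, gateway_domain: str = GATEWAY_DOMAIN) -> str:
    """Rewrite a provider URL so the request is routed through the gateway.

    ASSUMPTION: the gateway expects the original provider host as the first
    path segment. The actual layout may differ.
    """
    parts = urlsplit(provider_url)
    if not parts.netloc:
        raise ValueError(f"expected an absolute URL, got: {provider_url!r}")
    # Move the provider host into the path; keep path, query and fragment as-is.
    new_path = f"/{parts.netloc}{parts.path}"
    return urlunsplit(
        (parts.scheme, gateway_domain, new_path, parts.query, parts.fragment)
    )


if __name__ == "__main__":
    original = "https://api.openai.com/v1/chat/completions"
    print(via_gateway(original))
    # prints: https://ai-gateway.example/api.openai.com/v1/chat/completions
```

Only the URL changes. Headers, API keys, and the request body would be sent exactly as the provider's API expects, which is why the rewrite can live in a single helper next to your existing client code.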