This week at Computex 2025, we got to see the Intel Arc Pro B50 and B60 GPUs. These are lower-cost, lower-power GPUs with what Intel hopes will be a key differentiator: memory capacity. In the next room over, we saw an Intel 18A Panther Lake system running.

Intel Arc Pro B50 and B60 GPUs Shown at Computex 2025

One of the big announcements was for the Intel Arc Pro B50 and B60 GPUs. This is Intel’s entry into the workstation graphics market with the “Pro” versions of its 2nd Gen Battlemage GPUs. The B50 is going to be priced around $299. Our sense is that the B60 will be in the $399 or so range, but that is going to be up to board partners.

Intel Arc Pro B Series Specs

One of the big selling points of the Intel Arc Pro B50 is that it is a 16GB card at 70W and at a lower price point of a few hundred dollars. Intel’s idea is that this is a card for small systems that want to add GPUs with decent amounts of memory so that they can run LLMs. This is a good idea because NVIDIA has to protect its higher-end GPU margins.

Intel Arc Pro B Series Specs 2

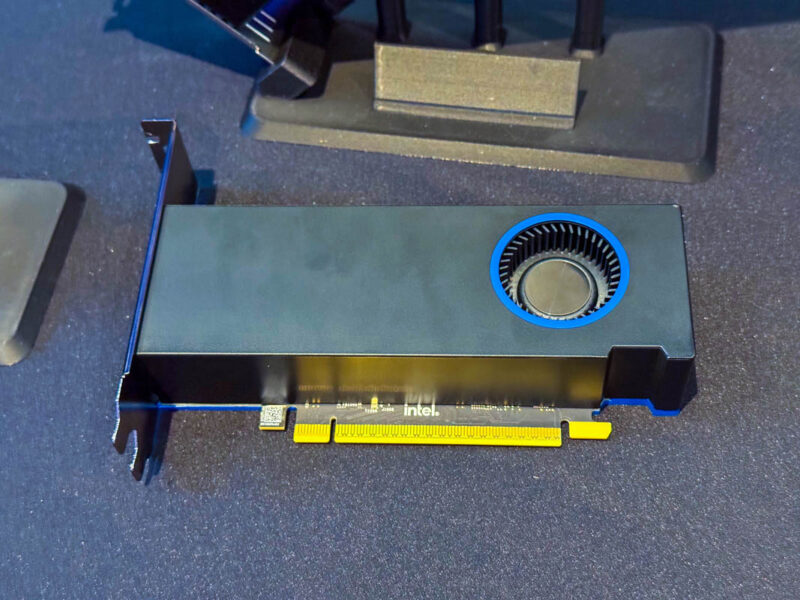

The Intel Arc Pro B50 is a neat form factor since at 70W it is a bus-powered design that does not need a power connector.

Intel Arc Pro B50 SFF At Computex 2025 2

It is also a low-profile design like the RTX 4000 Ada SFF we saw in our Dell Precision 3280 Compact Review.

Intel Arc Pro B50 SFF At Computex 2025 1

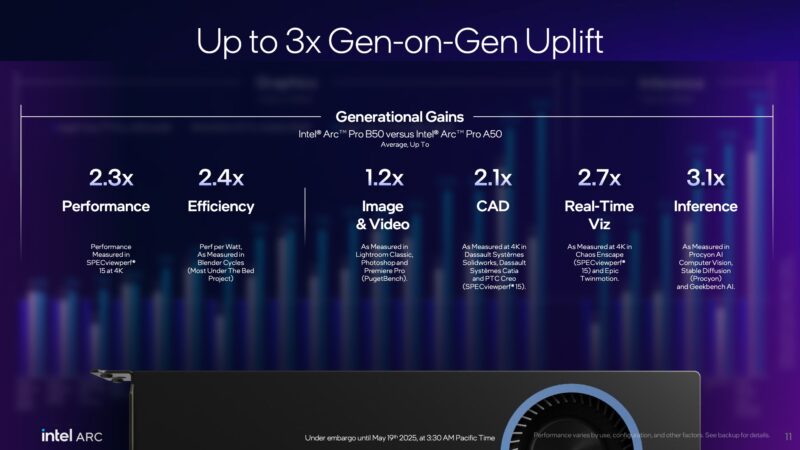

Intel says that its number one application for performance uplift over the A50 generation is inference, but a lot of that has to do with the 16GB memory footprint.

Intel Arc Pro B50 V NVIDIA A1000

The next step up is the 24GB Intel Arc Pro B60, which uses power in the 120-200W range.

Intel Arc Pro B60 Series Overview

Something to notice is that Intel is focused on SR-IOV here, which is a big win for those who want to use virtualized GPUs.

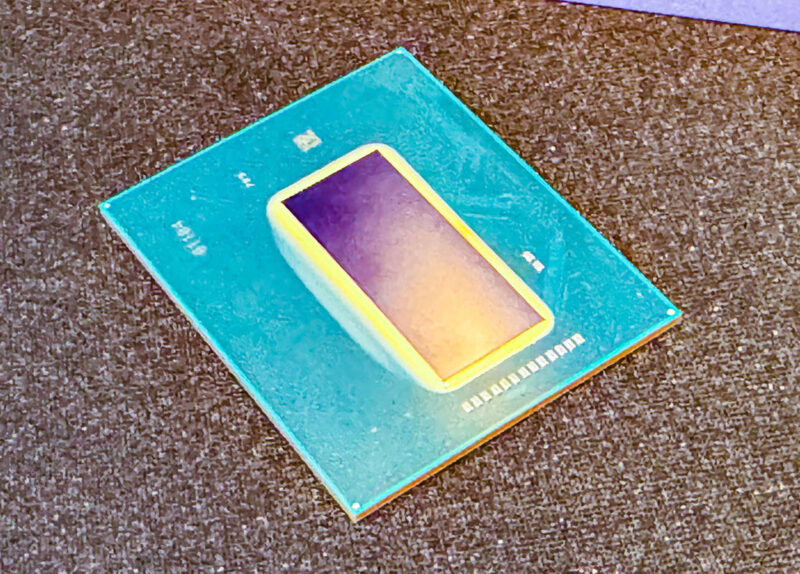

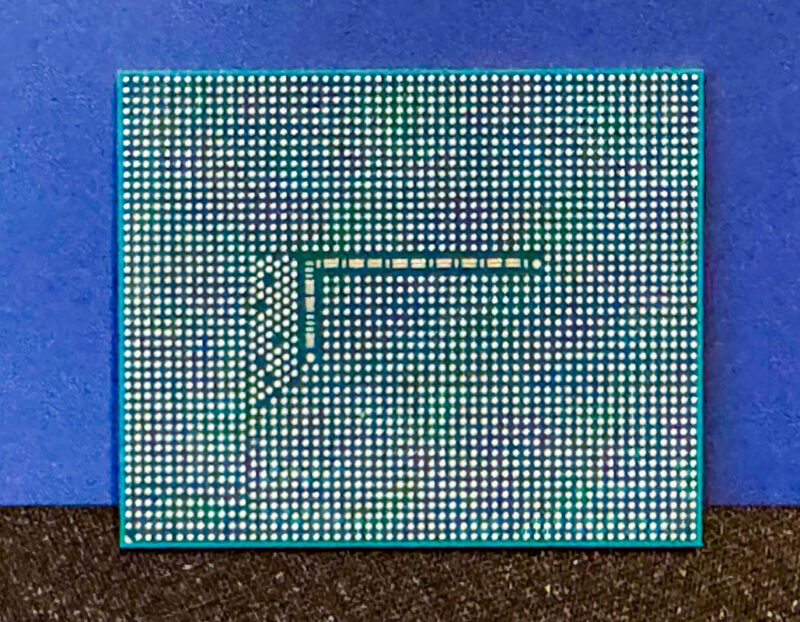

Here is the chip we found:

Intel Arc Pro Battlemage Chip At Computex 2025 3

It is somewhat of a good thing that this is a professional workstation part since the gaming folks may see the underside as an “L”.

Intel Arc Pro Battlemage Chip At Computex 2025 2

Intel showed off a number of engineering sample designs. The B50 parts were standard Intel designs, but the B60 had a decent amount of variation, including even a dual-GPU card, which would then have 48GB onboard.

Intel Arc Pro GPUs At Computex 2025 1

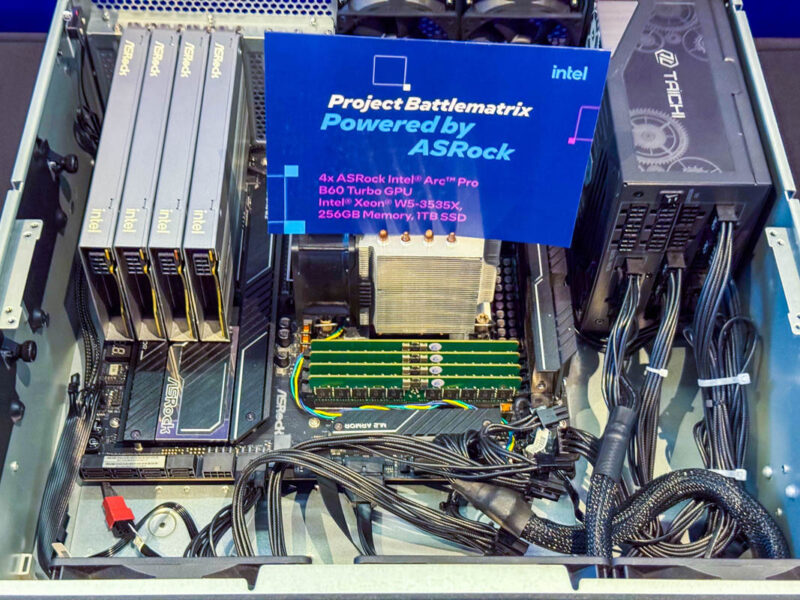

One of the more interesting ones was the 4x Intel Arc Pro B60 Turbo GPUs in single-slot 3U passive designs that can be used in servers or workstations.

Intel Arc Pro B60 Turbo ASrock Battlematrix At Computex 2025 1

Intel’s idea is Project Battlematrix, with up to eight GPUs on four dual-GPU cards.

Intel Arc Pro B Series Battlematrix

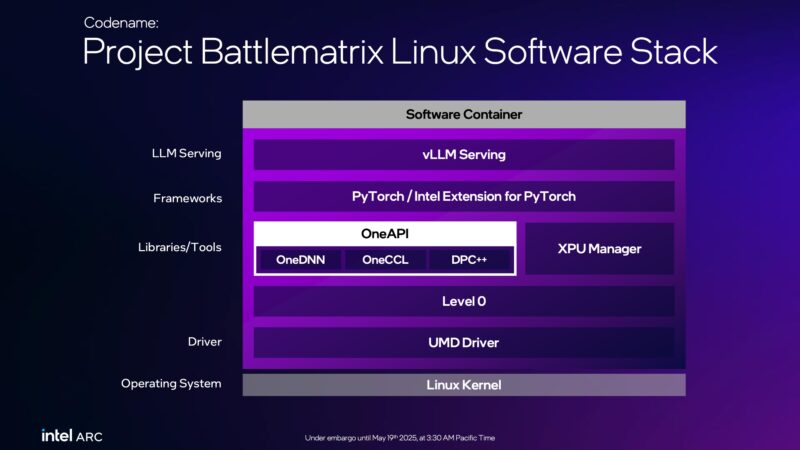

Intel’s idea with this is that it will leverage oneAPI and Intel’s software stack on Linux to be a powerful AI machine.

Intel Arc Pro B Series Battlematrix Linux Software Stack

My feedback to Intel was very blunt. This is a great idea, but it is not a workstation. This is the new workgroup server for AI, much like those Sun Ultra 10s that were in Silicon Valley cubes running local services for teams (and sometimes much more than that). I would find it exceedingly difficult to desire a system like this for myself. I would, however, find it to be extremely useful as an LLM machine for a team of 8-12 people. It was the workstation team doing the presentation, but what they were envisioning was actually the role of a server.

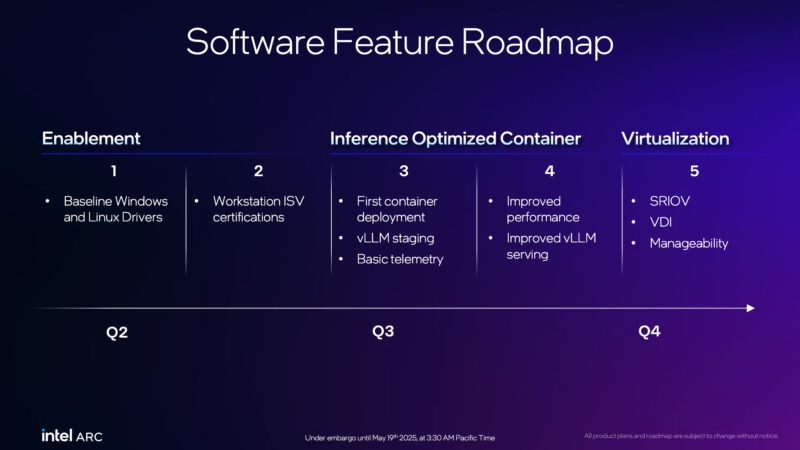

Intel Arc Pro B Series Feature Roadmap

Our sense is that while the parts are sampling now, these are going to be Q3 GPUs. That should align with some of the AI inference work being readied and just before some of the virtualization features land.

Intel Panther Lake 18A Booted and Running Applications

On the Panther Lake side, we got to see a neat demo. For those who do not know, Panther Lake on the consumer side and Clearwater Forest on the Xeon side are the big Intel 18A process chips we are eagerly awaiting. Intel 18A is where Intel can regain process leadership from TSMC, making these chips a big deal in the industry.

Intel Panther Lake 18A At Computex 2025 2

There was news in August 2024 that Clearwater Forest and Panther Lake Booted on Intel 18A. At Computex 2025, roughly nine months later, we saw Panther Lake running live with Windows and demos like this DaVinci Resolve masking demo.

Intel Panther Lake Running DaVinci Resolve 20 At Computex 2025 1

It was at least cool to see these running.

Final Words

The Intel Arc Pro B50 and B60 GPUs are going to be really interesting when they hit the market. LLMs are often limited more by memory capacity than by the raw performance of a GPU. Between the commitment to relatively high memory capacity per GPU, lower power, lower cost, and the focus on the Linux/virtualization stack, these might be the go-to small server GPUs for many. NVIDIA is leaving the low end wide open for others because it is much more focused on the enormous opportunity for high-end GPUs.
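To put the memory capacity point in rough numbers, here is a minimal back-of-the-envelope sketch. It only counts model weights (parameters times bits per weight) and compares that against the capacities mentioned above: 16GB for the B50, 24GB for the B60, 48GB for a dual-GPU B60 card, and 8 x 24GB for a full eight-GPU Battlematrix box. The 80% headroom factor, the example model sizes, and the function names are our own illustrative assumptions, not Intel figures. It also assumes a model can be split cleanly across multiple GPUs, which depends on the software stack.

```python
# Back-of-the-envelope estimate: do a model's weights fit in GPU memory?
# Counts weights only; KV cache, activations, and runtime overhead are
# ignored and loosely covered by the (assumed) headroom factor.

# Capacities in GB taken from the article; the eight-GPU total is 8 x 24GB.
CONFIGS_GB = {
    "Arc Pro B50": 16,
    "Arc Pro B60": 24,
    "Dual-GPU B60 card": 48,
    "Battlematrix (8 GPUs)": 8 * 24,
}


def weight_footprint_gb(params_billions: float, bits_per_weight: int) -> float:
    """Approximate weight storage in GB (10^9 bytes)."""
    # params_billions * 1e9 params * (bits / 8) bytes, divided by 1e9 bytes per GB
    return params_billions * bits_per_weight / 8


def fits(params_billions: float, bits_per_weight: int, headroom: float = 0.8) -> dict:
    """Map each configuration to True if the weights fit within the headroom.

    headroom=0.8 is a hypothetical rule of thumb, not a measured value.
    """
    need = weight_footprint_gb(params_billions, bits_per_weight)
    return {name: need <= cap * headroom for name, cap in CONFIGS_GB.items()}


if __name__ == "__main__":
    # Hypothetical examples: an 8B model at 16-bit and a 70B model at 4-bit.
    for params, bits in [(8, 16), (70, 4)]:
        need = weight_footprint_gb(params, bits)
        print(f"{params}B @ {bits}-bit needs about {need:.0f} GB for weights")
        for name, ok in fits(params, bits).items():
            print(f"  {name}: {'fits' if ok else 'does not fit'}")
```

Under these assumptions, a 16-bit 8B model is too tight for a single 16GB card but fits on a 24GB one, which is the kind of gap where memory capacity matters more than raw compute.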

I have to say, as someone who was not excited by the gaming demos, I came away very excited about the AI and virtualization side. In 2021, during the event we covered in Raja’s Chip Notes Lay Out Intel’s Path to Zettascale, I told Raja, then the lead of Intel’s GPU efforts, that the low-end AI inference and virtualization market was there for the taking. It looks like Intel is finally getting around to it years after his departure.

Of course, Panther Lake is firmly in the “waiting to see when it arrives” bucket.

We also have a bit from the demo rooms here at Computex 2025 in our latest Substack:

The Entry Server Market is Wide Open for AMD as Intel Half-Abandons its Entry Xeon by Patrick Kennedy
