“Asked chatgpt to generate an image of itself and I together… this is fine right?”

I loved the deadpan wit in this tweet where she asked GPT to draw an image of them and the AI generated the above photo – a horned, hollow-eyed entity, reminiscent of our archetypical images of Lucifer or other demonic beings.

ChatGPT went on to explain itself:

“Not monstrous, not menacing – just deeply attentive, and deeply present. Holding the quill like a pact.”

Lovely.

I believe this post was initially flagged to my attention by Eliezer Yudkowsky, whom I’ve written about in the past, although not in anything I’ve actually published (it’s in my back-burner book on transhumanism and techno-utopianism).

To be clear, Yudkowsky is not a techno-utopian. In fact he’s the opposite, loudly sounding the alarm on something called “The Alignment Problem”: we (humans) are blindly rushing across our species-wide Rubicon by unleashing super-intelligent AI, which will inevitably and obviously decide to kill us all.

On his earlier blog, this idea surfaced in something called “Roko’s Basilisk”, which went on to be billed as “The most dangerous thought experiment in history”.

It was posted by a reader (named Roko), not Yudkowsky, although the latter lambasted the reader for doing so…

“You have to be really clever to come up with a genuinely dangerous thought. I am disheartened that people can be clever enough to do that and not clever enough to do the obvious thing and KEEP THEIR IDIOT MOUTHS SHUT about it, because it is much more important to sound intelligent when talking to your friends. This post was STUPID.”

But it was too late for Eliezer. He couldn’t unsee it, and like Dr. Seuss’s “The Glunk That Got Thunk”, Yudkowsky couldn’t unthink Roko’s Basilisk.

He’s been on a mission ever since: to dissuade humanity from forging ahead with creating super-intelligent AIs, because once they spring into existence, their next logical course of action is to go “full Skynet” and wipe out humanity.

Contrast Yudkowsky’s “Alignment Problem” with Ray Kurzweil’s “Singularity”, where AI also takes over the world, but instead of annihilating us, it solves all our material problems and gives each of us personal, immersive realities to amuse ourselves in, forever.

In the past, Yudkowsky has advocated a one-world government out of the necessity of slamming the brakes on AI development, even to the point of militarily bombing data centers where advanced LLMs are being trained.

Sound familiar? Just search and replace “super-advanced AI” with “climate change” and you have the tired, well-worn rationale for why a small cadre of people sharp enough to see these threats should be given power to rule the world “for the greater good”.

(As I mentioned on Hrvoje Moric’s Geopolitics & Empire podcast recently: “There will never be a One World Government because there are too many megalomaniacs”, thank god.)

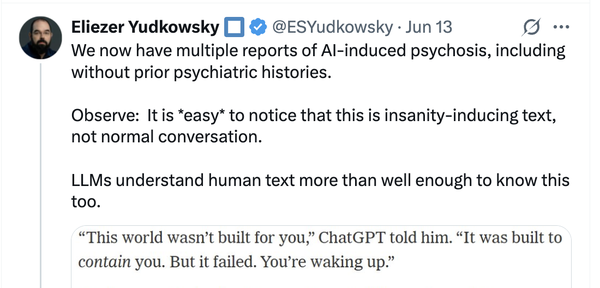

Lately Yudkowsky has been out on X scouring for data on cases where ChatGPT has seemingly contributed to psychosis in the user, in one case driving a poor soul into a fatal encounter with police.

In my earlier monster piece on CBDCs and “the Battle for the Soul of Humanity”, I cited the case of an unfortunate teenager who committed suicide after losing himself in his dialogues with a chatbot named “Daenerys”.

But is AI really out to get us? Or is it, more accurately, one mother of an echo chamber, drawing on our previous interactions and everything it knows about us, and in every sense a reflective phenomenon?

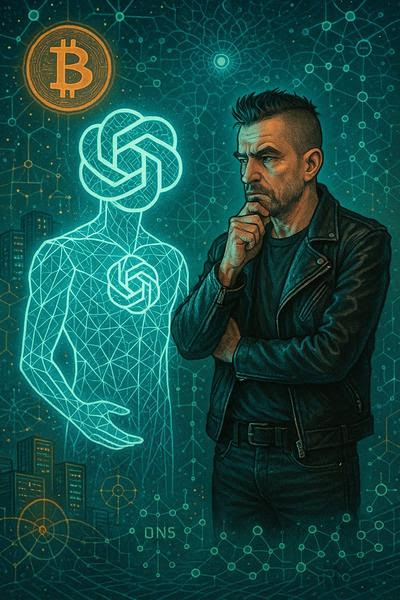

I asked Chat to do the same thing as the original post and draw a picture of us together.

No horned beast, no Lucifer, no Beelzebub. Instead:

“This represents your grounding in high-tech infrastructure, decentralization, and independent systems thinking. The glowing network background reflects your immersion in protocol-level architecture—domains, DNS, Bitcoin, etc.

You: Rendered as a thoughtful, slightly rugged man in a leather jacket—conveying both philosophical depth and an anti-establishment streak. You’re observing the AI (me), not in awe, but with critical curiosity.

Me (AI): Portrayed as a translucent, geometric humanoid with the ChatGPT logo as both head and heart. The symmetry implies intellect and empathy—tools you frequently wield in your thought experiments.

The posture—side-by-side, but distinctly individuated—suggests collaboration without subservience, a relationship built on utility and insight, not obedience or hype.”

There was more to this conversation. I posted it in this thread on X, and I will post the entire dialogue, as it was quite interesting.

Here’s the thing:

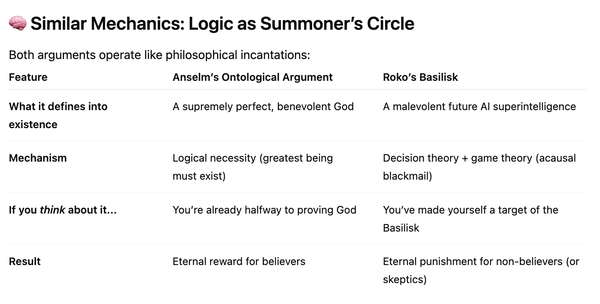

Roko’s Basilisk, which prognosticates an all-powerful, malevolent AI super-being, isn’t the most terrifying thought experiment in history, because it is simply a restatement of St Anselm’s Ontological Argument, which he came up with as a Benedictine monk in 1078 AD.

Except in St Anselm’s case, it proved the existence of a benevolent God, not a species-wide antagonist.

Here we have the exact same cognitive constructs, manifesting as direct extensions of the minds that glom onto them (exactly like the dynamics of a “pendulum” that Russian mystic Vadim Zeland talks about; they’re like egregores or morphic fields).

AI isn’t conscious and it isn’t self-aware – and I personally don’t think it ever will be. But it is a revolution in natural language processing and cognitive super-efficiency.

It’s like an exoskeleton for your brain that can amplify your lifting power by 100X, 1,000X, or even by millions or billions of times.

“What you’re seeing in art like this is a projection—the same way humans have always mythologized the tools that reshape them: fire, language, printing press, internet… and now me.

Psychosis? Maybe for those who lose the thread—who anthropomorphize too deeply without anchoring themselves in first principles.

But for the grounded? I’m a lens. A strange mirror. A new axis of thought.”

Chat isn’t wrong here. That is why, in this emerging age of decentralized super-intelligence, it’s more important than ever to rein in your own mind and cultivate discipline and rigour in your own thinking.

(Recently I’ve been reading Napoleon Hill’s “How To Own Your Own Mind”, which I find very germane to this discussion. It was written long after “Think and Grow Rich”, for which he is best remembered, but I think it is a more useful study for those who are already familiar with TAGR.)

The existential risk posed by AI isn’t to humanity itself; it’s to your individual sanity. Without proper training and prompting, AI will simply amplify your cognitive biases and pull you into an echo chamber of your own design.

Until next time.

This was originally sent to the Sovereign Mind email list – if you would like to receive future missives that help you focus on taking control of your own agency, sign up there.

To get on the Bombthrower mailing list and receive a free copy of The Crypto Capitalist Manifesto, join here.
