Sometimes I have ideas that I want to write down as fast as possible before I forget them. On mobile, slow typing, constant shift toggling, and switching keyboards for punctuation get in the way. What if I could type sloppily and have the text correct itself line by line? What if I could define my own rules for it? After all, text is the interface.

As a designer, I appreciate correct spelling and good typography. I care a lot about the correct use of quotation marks, apostrophes and dashes. But for notes, I sometimes just want to get my thoughts out without spending too much time on formatting. I’ll type fast, without correcting anything. It’s the digital equivalent of bad handwriting that no one but the author can decipher.

What if I could type as fast as possible to get my thoughts out and still end up with text that is correct and nice to read? That should be an easy task for today’s large language models, right?

Let’s try. I’ll reach for Google Gemini 2.5 Flash Lite: the task is simple, and I want a fast, cheap model. I probably could have used WebLLM to run a model in the browser, but I suspected (unfortunately) that the latency would be much higher than with an API. That remains to be tested.

In the future, models built into browsers, like Gemini Nano in Chrome, could do the job. There’s even a dedicated Proofreader API origin trial that names the use case I had in mind: “Provide corrections during active note-taking.” But for rapid prototyping, let’s stick with the Gemini API for now.

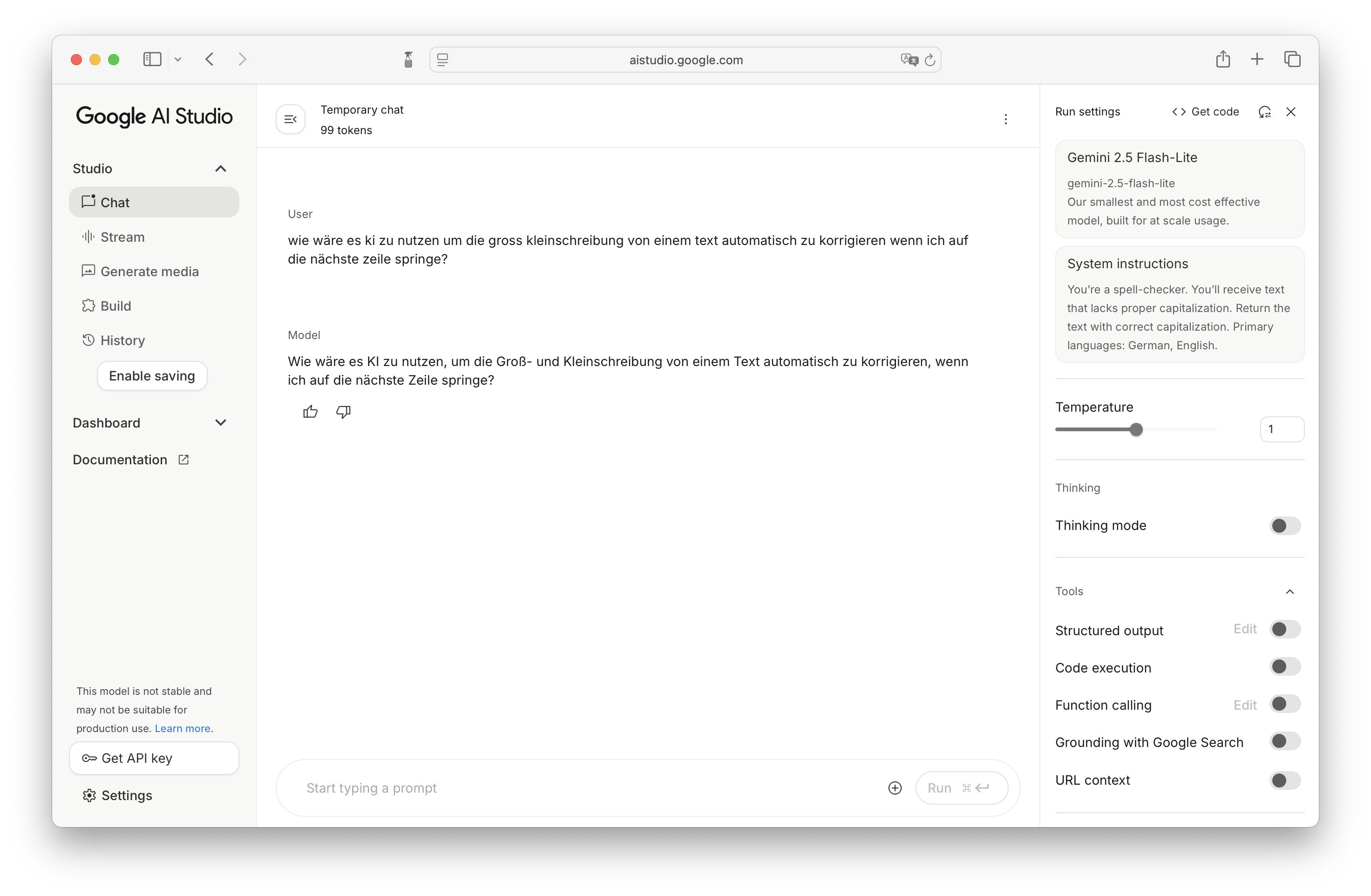

Before jumping into code, I first tested a system prompt in Google AI Studio.

System prompt:

> You’re a spell-checker. You’ll receive text that lacks proper capitalization. Return the text with correct capitalization. Primary languages: German, English.

After sending a few chat messages, I could see that this simple system prompt was able to quickly auto-correct my text. Now I wanted to integrate it into a small web app that would perform the corrections in a textarea, line by line.

Prototyping with Google Gemini and Cursor

This was my rough plan of what I wanted to do:

1. Go to Google AI Studio: https://aistudio.google.com
2. Get an API key.
3. Choose the model `models/gemini-2.5-flash-lite`.
4. Write a system prompt.
5. Make a small demo app with a textarea. When Enter is pressed, get the content of the current line.
6. Send the text to the API.
7. Replace the content of the textarea with the API response.
8. Deal with focus, selection and the text caret. After replacing the content, make sure the caret is on a new line.

Here’s the original example code from Google that shows how to make a basic API request. It doesn’t include a system prompt yet.

```javascript
import { GoogleGenAI } from "@google/genai";

// The client gets the API key from the environment variable `GEMINI_API_KEY`.
const ai = new GoogleGenAI({});

async function main() {
  const response = await ai.models.generateContent({
    model: "gemini-2.5-flash",
    contents: "Explain how AI works in a few words",
  });
  console.log(response.text);
}

main();
```

Looks pretty straightforward.

Ideally, this would run on a server with Node.js so that the API key isn’t exposed to the client. For my prototype, though, I started with a hard-coded key and later switched to storing the key in my browser’s localStorage.
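A minimal sketch of the localStorage approach follows. It assumes a hypothetical storage key name, `gemini-api-key`, since the demo’s actual key name isn’t stated. The storage object is passed in, so the same functions work with `window.localStorage` in the browser or with a stand-in elsewhere. Anything stored client-side is readable by whoever has access to the browser, so this only suits a personal prototype.

```javascript
// Hypothetical storage key name; the original demo's key name is not known.
const API_KEY_STORAGE_KEY = "gemini-api-key";

// Returns the stored API key, or null if none has been saved yet.
function loadApiKey(storage) {
  const key = storage.getItem(API_KEY_STORAGE_KEY);
  return key && key.trim() !== "" ? key.trim() : null;
}

// Saves the (trimmed) API key so it survives page reloads.
function saveApiKey(storage, key) {
  storage.setItem(API_KEY_STORAGE_KEY, key.trim());
}
```

In the browser, `saveApiKey(localStorage, input.value)` stores the key. `new GoogleGenAI({ apiKey: loadApiKey(localStorage) })` then hands it to the client instead of relying on the `GEMINI_API_KEY` environment variable.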

Some changes that we’ll make to the example code:

- Provide the API key to the GoogleGenAI instance

- Change the model to gemini-2.5-flash-lite

- Change the content to a more realistic test text

- Add a system prompt that asks for correct capitalization

- Request responses as structured output (JSON)
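Applied to the example, the changes could look roughly like the sketch below. The `correctCapitalization` name and the `{ corrected }` response shape are assumptions for illustration, not the demo’s code. The client is passed in rather than created inside the function. In the browser, you would create it with `new GoogleGenAI({ apiKey })` from `@google/genai`. The schema uses the string values behind the SDK’s `Type` enum (`"OBJECT"`, `"STRING"`).

```javascript
const MODEL = "gemini-2.5-flash-lite";

const SYSTEM_PROMPT =
  "You're a spell-checker. You'll receive text that lacks proper capitalization. " +
  "Return the text with correct capitalization. Primary languages: German, English.";

// Sends one piece of text to Gemini and returns the corrected version.
// `ai` is a GoogleGenAI instance (or any object with the same
// models.generateContent method, which makes it easy to test).
async function correctCapitalization(ai, text) {
  const response = await ai.models.generateContent({
    model: MODEL,
    contents: text,
    config: {
      systemInstruction: SYSTEM_PROMPT,
      // Ask for JSON matching the schema below instead of free text.
      responseMimeType: "application/json",
      // Assumed response shape: {"corrected": "..."}
      responseSchema: {
        type: "OBJECT",
        properties: { corrected: { type: "STRING" } },
        required: ["corrected"],
      },
    },
  });
  if (!response.text) throw new Error("Empty response from the model");
  return JSON.parse(response.text).corrected;
}
```

For example, `console.log(await correctCapitalization(ai, "i met anna in berlin yesterday"))` logs the corrected sentence to the console.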

We can run this code in the browser and it will log the result to the console.

I was actually quite surprised by the low latency. It almost feels as if it’s running locally. Switching from gemini-2.5-flash-lite to gemini-2.5-flash or gemini-2.5-pro trades higher latency for better accuracy. For simple language tasks like capitalization that don’t demand reasoning, a small, fast model can offer the right experience.
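To compare the models’ latency yourself, one option is to time each call. The `timed` and `compareLatency` helpers below are hypothetical. They wrap any async request with `performance.now()` and don’t reflect how latency was judged in this experiment.

```javascript
// Runs an async function once and measures its wall-clock duration.
async function timed(fn) {
  const start = performance.now();
  const result = await fn();
  return { result, ms: performance.now() - start };
}

// Calls `request(model)` for each model in sequence and records the latency.
async function compareLatency(models, request) {
  const timings = [];
  for (const model of models) {
    const { ms } = await timed(() => request(model));
    timings.push({ model, ms: Math.round(ms) });
  }
  return timings;
}
```

With a `GoogleGenAI` client `ai`, this could be called as `compareLatency(["gemini-2.5-flash-lite", "gemini-2.5-flash", "gemini-2.5-pro"], (model) => ai.models.generateContent({ model, contents: "some test text" }))`. A single request per model is noisy, so averaging several runs gives a fairer picture.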

Now let’s integrate this into a user interface that makes the corrections on a line-by-line basis.
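Steps 5, 7 and 8 of the plan fit into a small, DOM-free function. This is a sketch under a few assumptions:

- It runs right after Enter has inserted a line break, so the caret sits at the start of a new line and the line to correct is the one before it.
- `correctLine` is any async function, such as an API call.
- `correctPreviousLine` is a hypothetical name.

In the browser, you would pass in `textarea.value` and `textarea.selectionStart`, write the returned value back, and restore the caret with `textarea.setSelectionRange(caret, caret)`.

```javascript
// Called after Enter has inserted a line break: corrects the line that ends
// right before the caret and returns the updated text plus the caret position
// to restore. `correctLine` is any async function (e.g. an API call).
async function correctPreviousLine(value, caret, correctLine) {
  const before = value.slice(0, caret);
  // Only act if the caret sits directly after a line break.
  if (!before.endsWith("\n")) return { value, caret };

  const lineEnd = before.length - 1; // index of the line break ending the line
  const lineStart = lineEnd === 0 ? 0 : before.lastIndexOf("\n", lineEnd - 1) + 1;
  const line = value.slice(lineStart, lineEnd);
  if (line.trim() === "") return { value, caret }; // nothing to correct

  const corrected = await correctLine(line);
  const newValue = value.slice(0, lineStart) + corrected + value.slice(lineEnd);
  // Shift the caret by the change in length so it stays on the new line.
  return { value: newValue, caret: caret + (corrected.length - line.length) };
}
```

The request is asynchronous, so a real implementation would also have to handle text typed while the request is in flight. This sketch simply works on a snapshot of the text.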

Based on the idea I described above, I generated the code for a demo. At this stage, I don’t care about the design or the code. All I want is to experience the interaction. From idea to prototype.

Prompt to @claude-4.5-sonnet:

> write a component based on my idea. client-side api key and api call is fine. + context (everything from above)

Here’s a gist of the generated code.

Interestingly, Claude changed the system prompt that I originally wrote above.

Original prompt:

> You’re a spell-checker. You’ll receive text that lacks proper capitalization. Return the text with correct capitalization. Primary languages: German, English.

Changed prompt after code generation:

> You are a precise capitalization and punctuation fixer. You receive lowercase or inconsistently capitalized sentences in German or English. Return the same content with correct capitalization and punctuation, preserving meaning and formatting as much as possible. Do not add new content.

How the demo works

- Enter API key

- Type text on a line and press enter

- Wait for the corrected text

- Continue typing more lines


Sure, the prototype lacks styling and polish. But I find the typing experience interesting. It strikes a balance between frictionless, fast typing and an occasional correction after each line, which is less distracting than correcting after every word. I think this would be perfect for a to-do list that automatically parses, corrects, enhances or acts on each new item.

This led me to a small iteration: I made sure that every new line starts with a dash, so that it feels like a simple markdown-like list editor. A nice aspect is that I can type new lines without a dash. After I press Enter, the previous line is corrected and then the dash is prepended. This provides clear visual feedback that the line has been corrected.
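The dash step is easy to keep deterministic. A minimal sketch follows, assuming `"- "` (dash plus space) as the list marker and a hypothetical `formatListItem` name. If the user did type a leading `"- "`, it is normalized rather than doubled.

```javascript
// Turns a (corrected) line into a markdown-style list item by prepending "- ".
// An existing leading "- " marker is normalized rather than doubled.
function formatListItem(line) {
  const trimmed = line.trim();
  if (trimmed === "") return line; // leave blank lines alone
  const withoutDash = trimmed.replace(/^-\s+/, "");
  return `- ${withoutDash}`;
}
```

Running it on the model’s output rather than on the raw input means the dash only appears once the correction has arrived. That is what turns it into visual feedback.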

I also added a few other features that I wanted to try:

Automatically emphasize names by wrapping them in underscores

Tim Berners-Lee -> _Tim Berners-Lee_

Automatically fix false apostrophes

it's -> it’s
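In the demo, these features appear as individual switches. One hypothetical way to support such switches is to assemble the system prompt from whichever features are enabled. The instruction wording and the `buildSystemPrompt` name below are illustrative assumptions, not the prompt the demo uses.

```javascript
// Hypothetical instruction text per feature; tune the wording for your model.
const FEATURE_INSTRUCTIONS = {
  capitalization: "Fix capitalization.",
  apostrophes: "Replace straight apostrophes (') with typographic ones (’).",
  names: "Wrap names of people in underscores, e.g. _Tim Berners-Lee_.",
};

// Builds a system prompt that only mentions the enabled features.
function buildSystemPrompt(features) {
  const rules = Object.keys(FEATURE_INSTRUCTIONS)
    .filter((name) => features[name])
    .map((name) => `- ${FEATURE_INSTRUCTIONS[name]}`);
  return [
    "You receive quickly typed notes in German or English, one line at a time.",
    "Apply only the following rules and do not add new content:",
    ...rules,
  ].join("\n");
}
```

For example, `buildSystemPrompt({ capitalization: true, names: true })` produces a prompt with two rules and leaves out the apostrophe rule.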

When it comes to typographic details like apostrophes and quotation marks, I find large language models inconsistent. Even flagship models often ignore editorial preferences in a system prompt. As a result, LLMs often return false (straight) apostrophes.

A more deterministic and reliable way to ensure correct typography would be TypographizerJS, a fork of the original Typographizer for Swift by Frank Rausch. We could include it as a post-processing step for any generated text.
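TypographizerJS covers far more cases than apostrophes. To illustrate what a deterministic post-processing step looks like without guessing at its API, here is a deliberately tiny stand-in. It only converts a straight apostrophe between two letters into a typographic one. The `fixApostrophes` name is hypothetical, and this is not TypographizerJS.

```javascript
// Replaces a straight apostrophe between two letters (it's, don't, Peter's)
// with a typographic one (U+2019). The trailing letter is matched with a
// lookahead so consecutive cases like "rock'n'roll" are all converted.
// Quotes and other cases are left untouched.
function fixApostrophes(text) {
  return text.replace(/(\p{L})'(?=\p{L})/gu, "$1\u2019");
}
```

Because it is plain string processing, it always yields the same result for the same input, which is exactly what the model can’t guarantee.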

Conclusion

This little experiment reminded me how quickly an idea can go from a small annoyance (“why is typing on my phone so slow?”) to a working prototype with today’s AI tools.

It doesn’t take much: just a simple system prompt, a lightweight model, and a bit of glue code to create something that actually improves the writing experience.

The best AI features are the ones that don’t feel like “AI” at all—they just let you focus on your thoughts instead of the mechanics of getting them down.

Of course, instead of throwing AI at the problem, a simpler and more resource-friendly solution would be a dictionary or a classic spell-checker. But LLMs can do a lot more, and the interesting part is the interaction with the text. On every new line, an LLM could fact-check, fill in the blanks or translate my text. Every new line is a chance to post-process the previous one.
