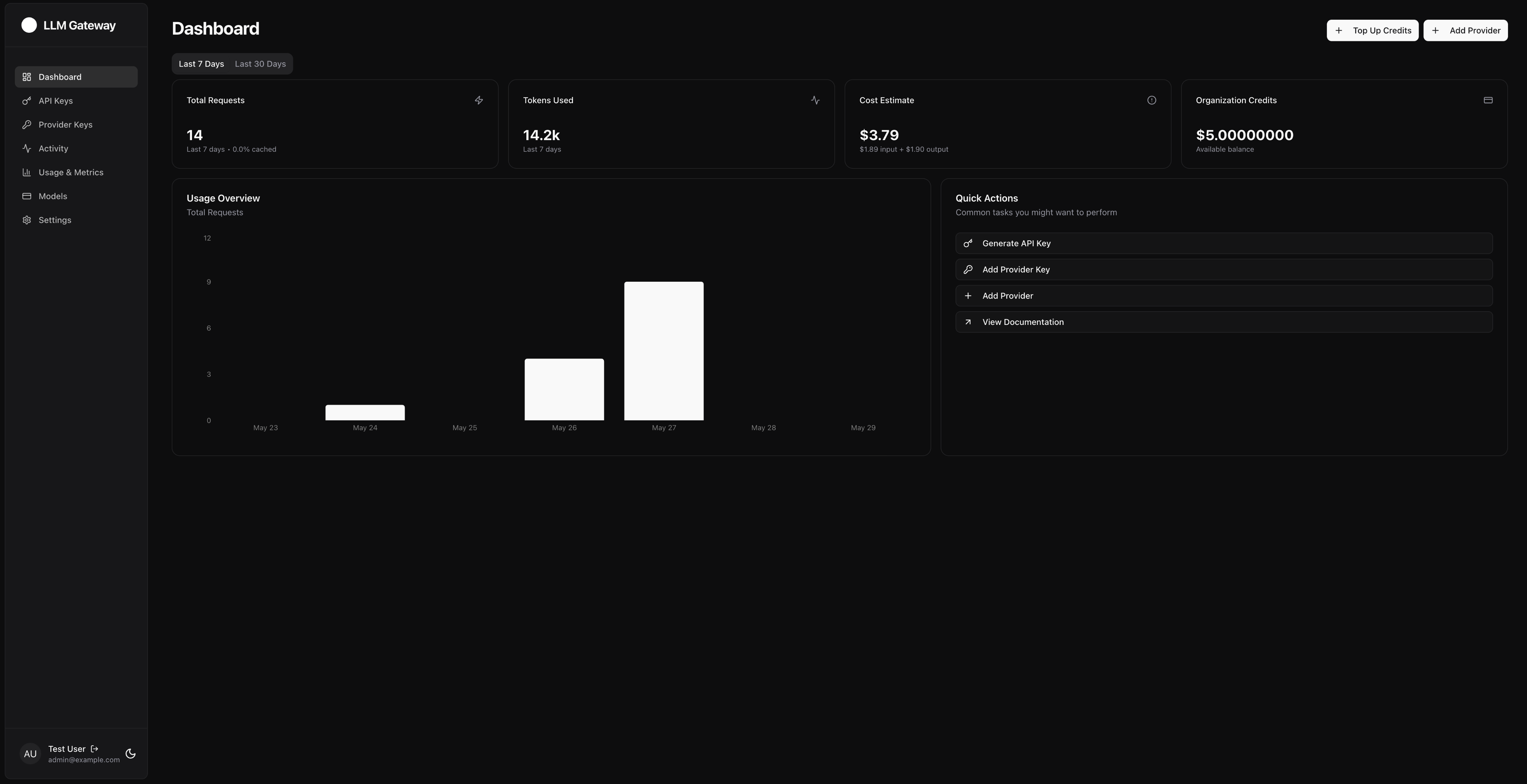

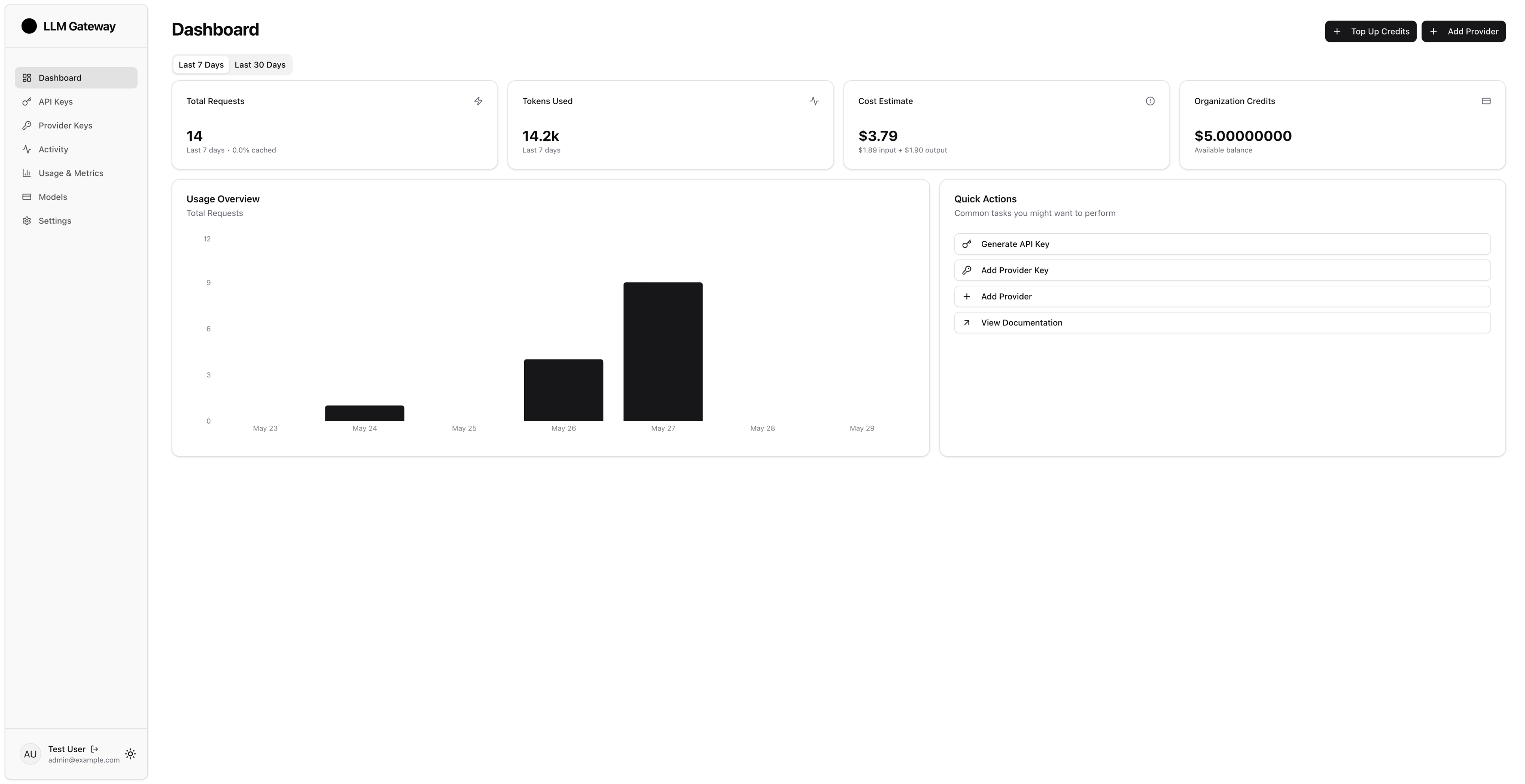

Route, manage, and analyze your LLM requests across multiple providers with a unified API interface.

Model Orchestration

We dynamically route requests from devices to the optimal AI model from providers such as OpenAI, Anthropic, Google, and more.

Simple Integration

Just change your API endpoint and keep your existing code. Works with any language or framework.

LLM Gateway routes your request to the appropriate provider while tracking usage and performance across all languages and frameworks.
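
As a rough illustration of "just change your API endpoint", the sketch below builds a chat request whose only gateway-specific part is the base URL. It is a minimal sketch under assumptions, not the documented API: the base URL `https://gateway.example.com/v1` is a placeholder, and the `/chat/completions` path, the Bearer-token header, and the payload shape assume an OpenAI-style interface, which this page does not confirm. The model name and API key are likewise hypothetical.

```python
import json
import urllib.request

# Placeholder, NOT a real LLM Gateway URL: substitute the endpoint of your
# hosted account or self-hosted deployment.
GATEWAY_BASE_URL = "https://gateway.example.com/v1"


def build_chat_request(base_url, api_key, model, prompt):
    """Build (but do not send) a chat-completion style POST request.

    Assumes an OpenAI-style payload and path; adjust to the real API.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Only the base URL differs from a direct provider call; the rest of the
# calling code stays the same.
request = build_chat_request(
    GATEWAY_BASE_URL, "YOUR_API_KEY", "example-model", "Hello!"
)
print(request.full_url)
```

Passing the resulting object to `urllib.request.urlopen` would send it. Keeping the base URL in one constant is what makes the switch a single-line change.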

Pricing

Start for free, scale with no fees

Choose the plan that works best for your needs, with no hidden fees or surprises.

- 100% free forever

- Full control over your data

- Host on your infrastructure

- No usage limits

- Community support

- Regular updates

- Access to ALL models

- Pay with credits

- 5% LLM Gateway fee on credit usage

- 3-day data retention

- Standard support

Pro (Most Popular)

- Access to ALL models

- Use your own API keys without surcharges

- NO fees on credit usage

- 90-day data retention

- Priority support

- Everything in Pro

- Advanced security features

- Custom integrations

- Onboarding assistance

- Unlimited data retention

- 24/7 premium support

All plans include access to our API, documentation, and community support.

Need a custom solution? Contact our sales team.
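
To make the fee difference between the tiers concrete, here is a small arithmetic sketch. It uses only two figures from this page: the 5% fee on credit usage and the $50 price of the Pro plan, which charges no fee on credit usage. The billing period behind the $50 is not stated here, so the break-even figure applies per whatever period that price covers. The function names are illustrative, and the comparison ignores every other difference between plans.

```python
FEE_PERCENT = 5          # 5% fee on credit usage (from the plan list)
PRO_PLAN_PRICE = 50.0    # "$50 Pro plan"; billing period not stated on this page


def credit_fee(credit_usage):
    """Fee charged on a given amount of credit usage at the 5% rate."""
    return credit_usage * FEE_PERCENT / 100


def break_even_usage():
    """Credit usage at which the 5% fee equals the Pro plan price."""
    return PRO_PLAN_PRICE * 100 / FEE_PERCENT


print(credit_fee(200.0))      # fee on $200 of credit usage
print(break_even_usage())     # usage above this makes Pro cheaper on fees alone
```

Under these assumptions, the fee on $200 of usage is $10, and the fee alone matches the Pro price at $1,000 of credit usage.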

Frequently Asked Questions

Find answers to common questions about our services and offerings.

Unlike OpenRouter, we offer:

- Full self‑hosting under an MIT license – run the gateway entirely on your own infrastructure, free forever

- Deeper, real‑time cost & latency analytics for every request

- Zero gateway fee on the $50 Pro plan when you bring your own provider keys

- Flexible enterprise add‑ons (dedicated shard, custom SLAs)

Ready to Simplify Your LLM Integration?

Start using LLM Gateway today and take control of your AI infrastructure.
