No Internet? No Problem! Private LLM Works Anywhere, Anytime!

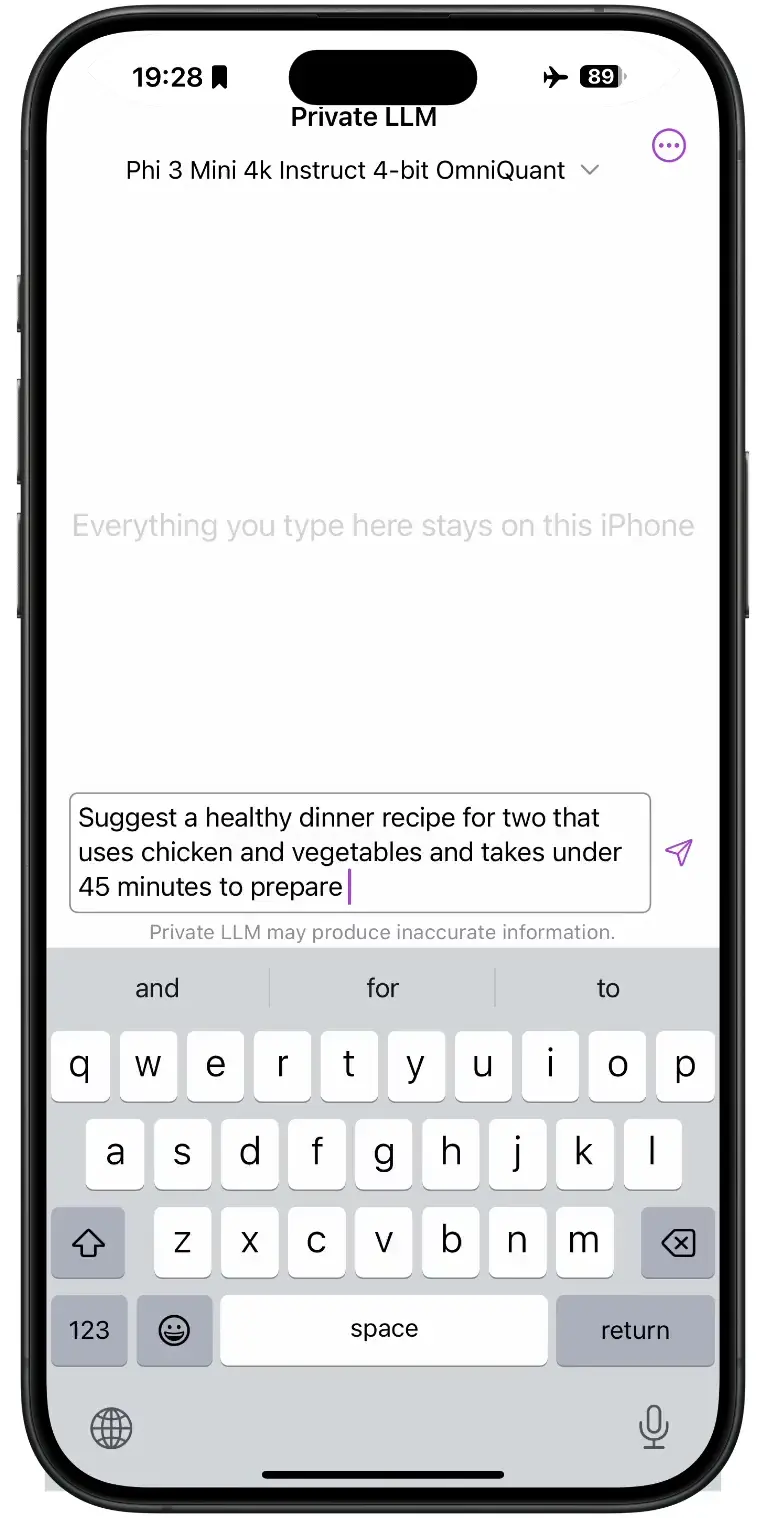

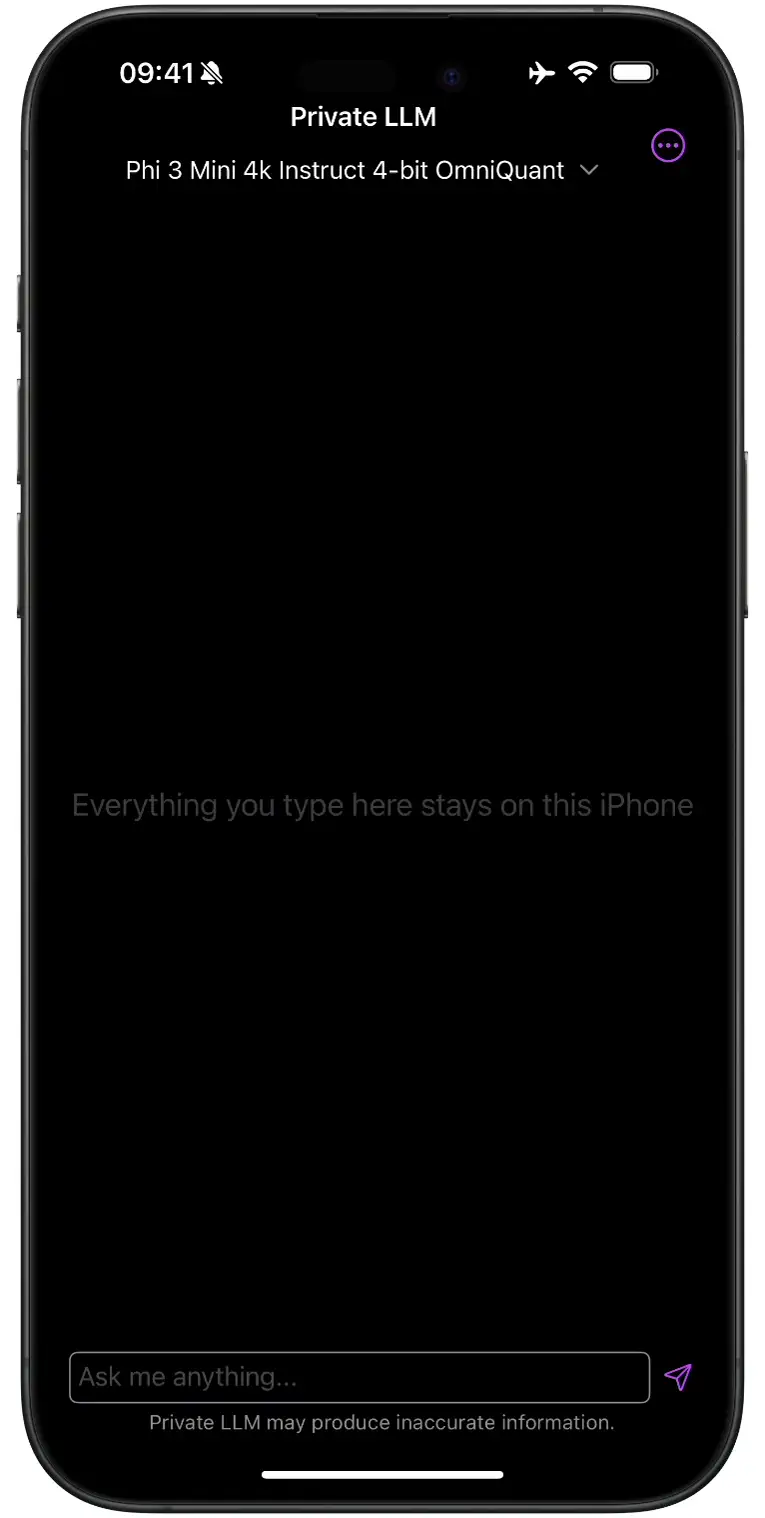

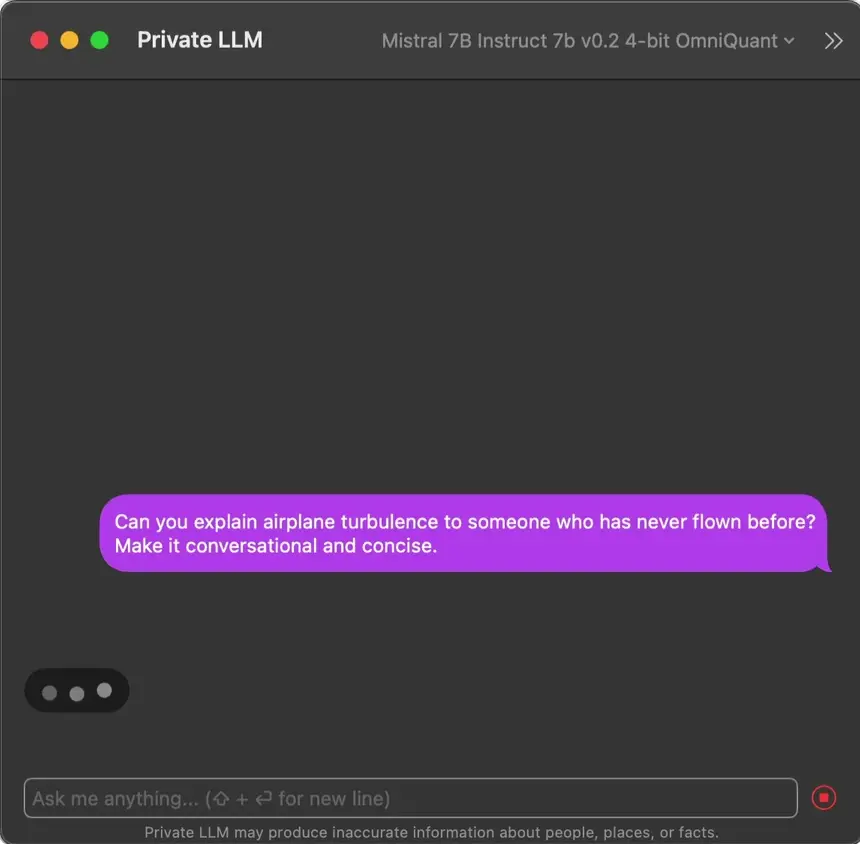

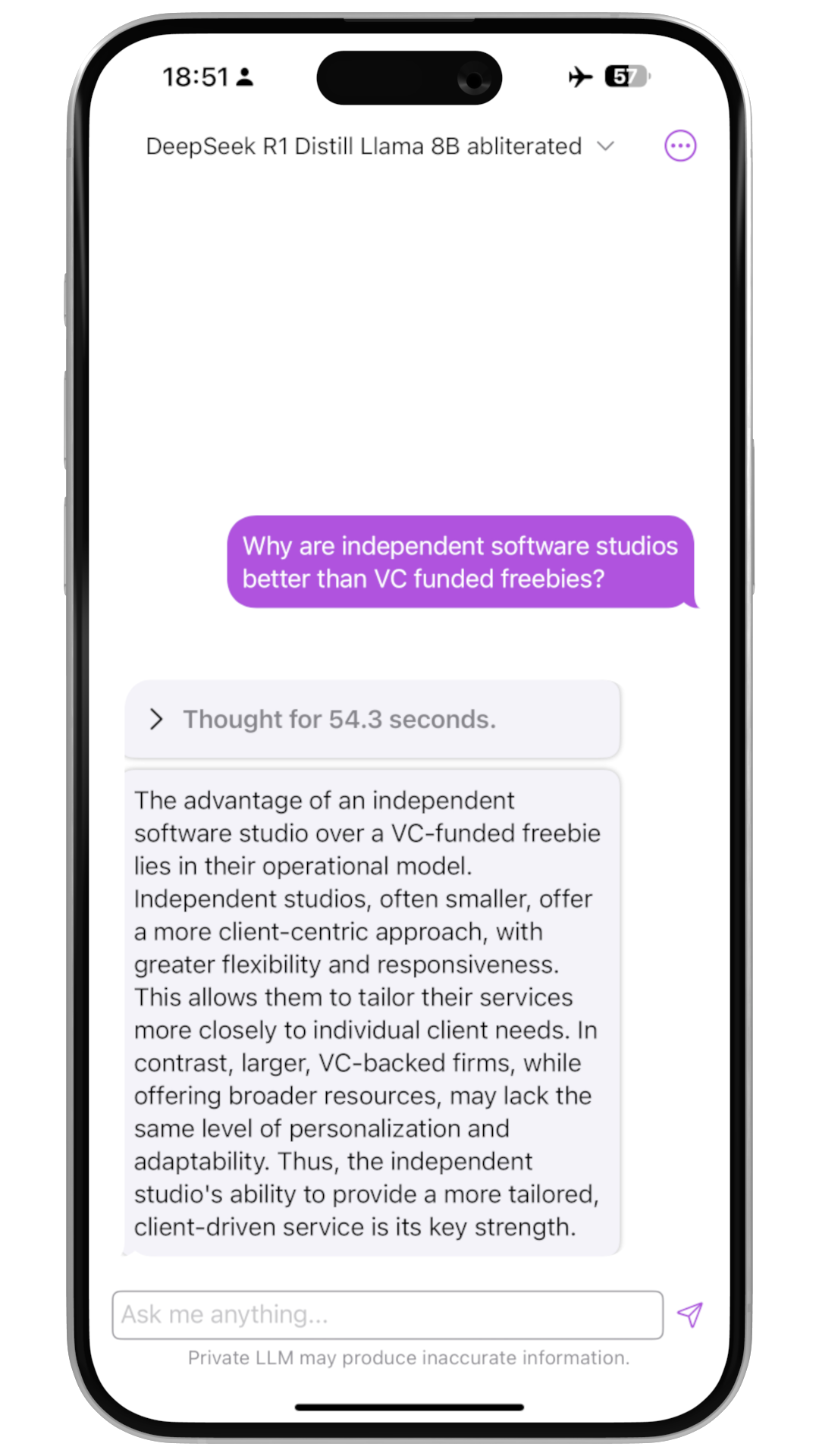

Private LLM is a local AI chatbot for iOS and macOS that works offline and keeps your information entirely on-device, safe and private. Because it doesn't need an internet connection, your data never leaves your device. There are no subscription fees: you pay once and use it on all your Apple devices. It's designed for everyone, with easy-to-use features for generating text, helping with language, and a whole lot more. Private LLM uses the latest AI models, quantized with state-of-the-art quantization techniques, to provide a high-quality on-device AI experience without compromising your privacy. It's a smart, secure way to get creative and productive, anytime and anywhere.

Harness the Power of Open-Source AI with Private LLM

Private LLM brings the best of open-source AI directly to your iPhone, iPad, and Mac. With support for leading models like DeepSeek R1 Distill, Llama 3.3, Phi-4, Qwen 2.5, and Google Gemma 2, you can explore powerful AI capabilities, fully customized for your Apple devices and 100% private.

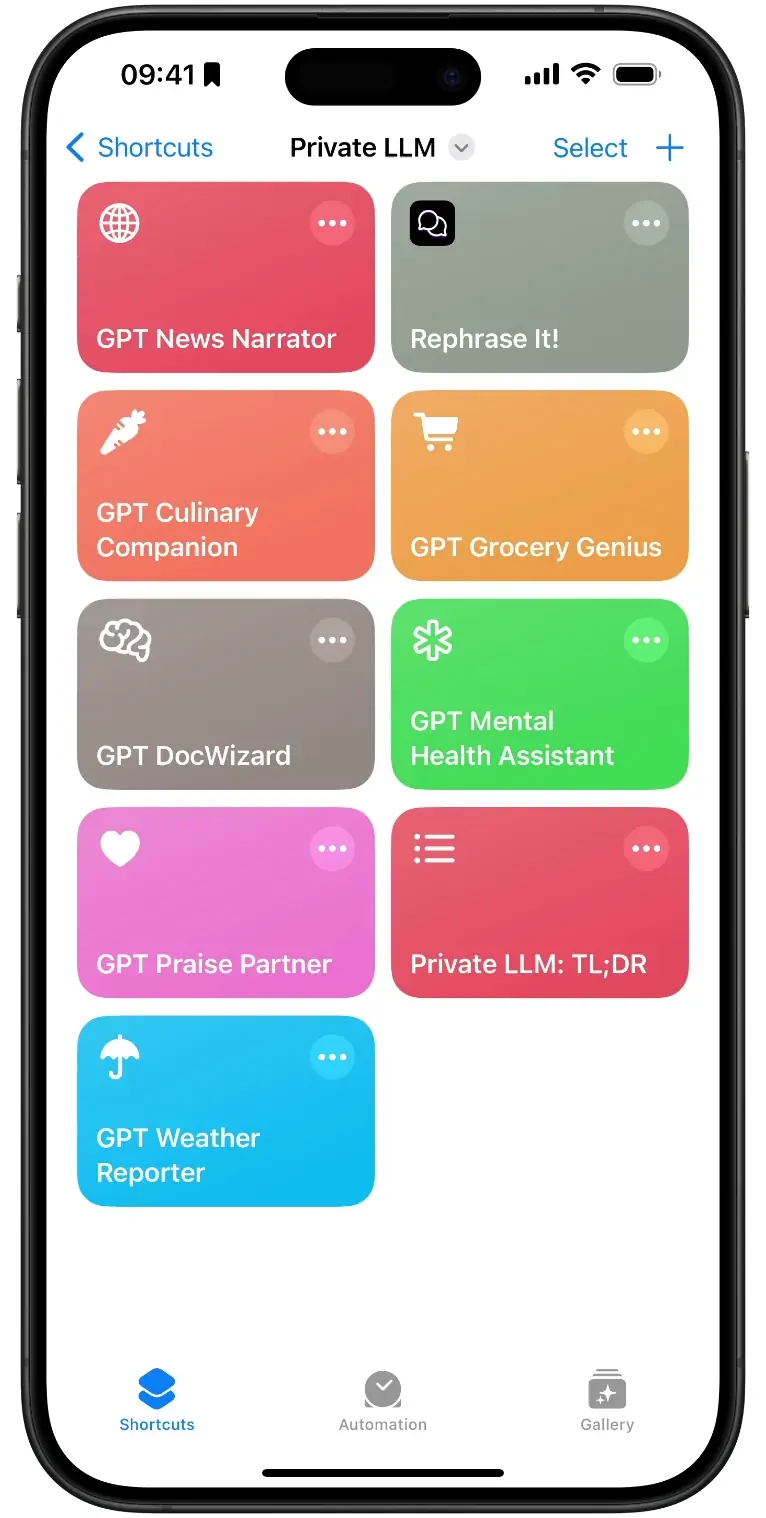

Craft Your Own AI Solutions: No Code Needed with Siri and Apple Shortcuts

Discover the simplicity of bringing AI to your iOS or macOS devices without writing a single line of code. With Private LLM integrated into Siri and Shortcuts, users can effortlessly create powerful, AI-driven workflows that automate text parsing and generation tasks, provide instant information, and enhance creativity. This seamless interaction allows for a personalized experience that brings AI assistance anywhere in your operating system, making every action smarter and more intuitive. Private LLM also supports the x-callback-url specification, which over 70 popular iOS and macOS applications implement, so it can add on-device AI functionality to those apps.
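For readers curious what an x-callback-url request looks like, here is a minimal sketch of how such a URL is assembled. The layout (`scheme://x-callback-url/action?parameters`) and the `x-success` / `x-error` parameter names come from the x-callback-url specification. The scheme `exampleapp`, the action `summarize`, the parameter `text`, and the callback `callerapp://done` are hypothetical placeholders, not Private LLM's actual URL scheme or actions.

```python
from urllib.parse import quote, urlencode


def build_x_callback_url(scheme, action, params, x_success=None, x_error=None):
    """Assemble an x-callback-url style URL.

    `scheme`, `action`, and the keys of `params` are app-specific; the values
    used below are hypothetical placeholders for illustration only.
    """
    query = dict(params)
    # Optional callbacks defined by the x-callback-url specification.
    if x_success:
        query["x-success"] = x_success
    if x_error:
        query["x-error"] = x_error
    # quote_via=quote percent-encodes spaces as %20 instead of "+".
    encoded = urlencode(query, quote_via=quote)
    return f"{scheme}://x-callback-url/{quote(action)}?{encoded}"


# Hypothetical example: ask a target app to process some text, then return
# to the calling app through its own (also hypothetical) URL scheme.
url = build_x_callback_url(
    "exampleapp",
    "summarize",
    {"text": "hello world"},
    x_success="callerapp://done",
)
print(url)
```

The calling app opens the resulting URL, and the target app is expected to open the `x-success` URL when it finishes. Consult the app's own documentation for the real scheme, actions, and parameters.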

Universal Access with No Subscriptions

Ditch the subscriptions for a smarter choice with Private LLM. A single purchase unlocks the app across all Apple platforms—iPhone, iPad, and Mac—while enabling Family Sharing for up to six relatives. This approach not only simplifies access but also amplifies the value of your investment, making digital privacy and intelligence universally available in your family.

AI Language Services Anywhere in macOS

Transform your writing across all macOS apps with AI-powered tools. From grammar correction to summarization and beyond, our solution supports multiple languages, including English and select Western European ones, for flawless text enhancement.

Proudly Independent

We chose to bootstrap Private LLM because true innovation doesn't need venture capital. As an independent team of two developers, we're free to build the best local AI experience without compromising on our values. This independence lets us invest in advanced technologies like OmniQuant quantization while keeping your data completely private - choices we can make because we answer to users, not investors. With no pressure to monetize your data or rush features, we focus on what truly matters: building powerful local AI that respects your privacy and delivers superior performance.

Superior Model Performance With State-Of-The-Art Quantization

The core of Private LLM's superior model performance lies in its use of the state-of-the-art OmniQuant quantization algorithm. When LLMs are quantized for on-device inference, outlier values in the model weights tend to have a marked adverse effect on text generation quality. OmniQuant handles outliers with an optimization-based learnable weight clipping mechanism, which preserves the model's weight distribution with exceptional precision. RTN (round-to-nearest) quantization, used by popular open-source LLM inference frameworks and the apps based on them, does not handle outlier values during quantization, which leads to inferior text generation quality. OmniQuant quantization, paired with optimized model-specific Metal kernels, enables Private LLM to deliver text generation that is not only fast but also of the highest quality, significantly raising the bar for on-device LLMs.
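To illustrate why outliers matter, here is a toy sketch comparing plain round-to-nearest quantization with a clipped variant. It is not OmniQuant and not Private LLM's implementation: a simple grid search over a clipping ratio stands in for the learned clipping described above, the error is measured directly on the weights, and the weights themselves are made-up values with one artificial outlier.

```python
def quantize_rtn(weights, bits=4, clip_ratio=1.0):
    """Symmetric round-to-nearest quantization of a list of floats.

    clip_ratio=1.0 is plain RTN: the scale is set by the largest magnitude.
    clip_ratio<1.0 shrinks the representable range and clamps outliers to it.
    Returns the dequantized (reconstructed) weights.
    """
    qmax = 2 ** (bits - 1) - 1                  # 7 for 4-bit symmetric
    max_abs = max(abs(w) for w in weights) * clip_ratio
    scale = max_abs / qmax if max_abs > 0 else 1.0
    out = []
    for w in weights:
        q = round(w / scale)                    # nearest integer level
        q = max(-qmax, min(qmax, q))            # clamp values outside the range
        out.append(q * scale)
    return out


def mse(a, b):
    """Mean squared error between two equal-length lists."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def best_clip_ratio(weights, bits=4, steps=20):
    """Grid search (an assumption of this sketch, standing in for learned
    clipping) for the ratio that minimizes reconstruction error."""
    candidates = [i / steps for i in range(1, steps + 1)]
    return min(candidates, key=lambda r: mse(weights, quantize_rtn(weights, bits, r)))


# Made-up data: 1001 weights spread over [-1, 1] plus a single outlier at 3.0.
weights = [i / 500 for i in range(-500, 501)] + [3.0]

plain_error = mse(weights, quantize_rtn(weights))
ratio = best_clip_ratio(weights)
clipped_error = mse(weights, quantize_rtn(weights, clip_ratio=ratio))

print(f"plain RTN error:   {plain_error:.5f}")
print(f"clipped RTN error: {clipped_error:.5f} (clip ratio {ratio})")
```

With plain RTN, the single outlier stretches the quantization grid so that the ordinary weights share only a few coarse levels. Clipping gives up accuracy on the outlier to gain a finer grid for everything else, which lowers the overall error on this toy data.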

See what our users say about us on the App Store

by Original Nifty, Oct 12, 2024

This application is unique in creating the conditions for an interaction whose essence is of a noticeably superior quality, despite (and partly because of) the fact that the model is running completely locally! While at the time of writing the UI is in the early stages of development, it is still workable, easy and stable, allowing the interaction with the model to continue over an extended period. The developers take a distinct and very refreshing ethical position, and are most generous. I am very grateful to them.

Version 1.8.9 | United Kingdom

Download the Best Open Source LLMs

Phi-4 14B Based Models

For Apple Silicon Macs with 16GB+ RAM

Yi 6B Based Models

For Apple Silicon Macs with 8GB+ RAM

Yi 34B Based Models

For Apple Silicon Macs with 24GB+ RAM

How Can We Help?

Whether you've got a question or you're facing an issue with Private LLM, we're here to help you out. Just drop your details in the form below, and we'll get back to you as soon as we can.
