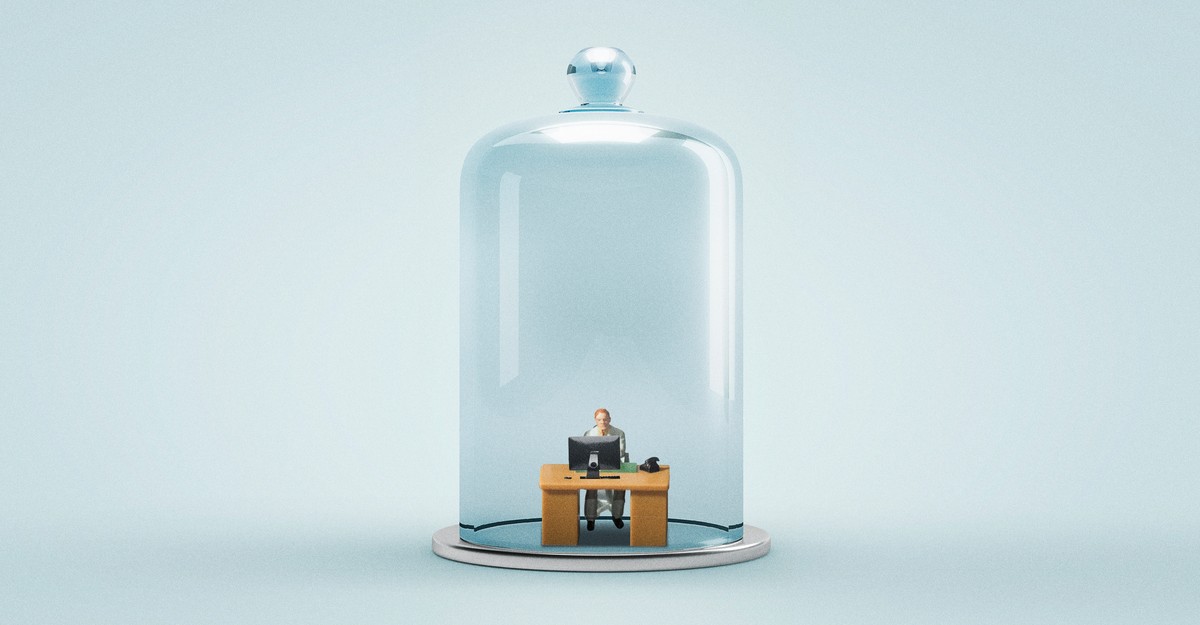

A single AI tool can count tigers in a zoo surveillance camera feed. An intelligent agent can monitor an entire safari park. By coordinating multiple camera feeds, analyzing different animal behaviors, and synthesizing a comprehensive status report, an agent achieves what no individual tool could produce independently.

This shift from single-purpose tools to intelligent orchestration opens up new opportunities for organizations of all kinds. Instead of building isolated automation scripts, we can create agents that reason about complex tasks, break them into specialized subtasks, and coordinate the right tools at the right time.

Consider a zookeeper monitoring a safari park. Their expertise isn't operating individual cameras. It's knowing when to check each feed, how to interpret combined data, and what the complete picture means for park operations. The agent's job is strategic thinking and coordination, not just task execution.

Mastra.ai provides this reasoning layer to developers of AI agents. In our example it is the "zookeeper's brain": it understands objectives, decomposes tasks, and orchestrates tools intelligently. Anchor Browser, meanwhile, provides the specialized web automation capabilities (the eyes and hands) that navigate websites and analyze visual content with human-like understanding.

Together, Mastra and Anchor Browser create agents that don't just automate web tasks, but solve complex, multi-faceted problems through intelligent tool coordination.

What is Mastra.ai?

Mastra.ai is a TypeScript agent framework that lets AI agents reason, remember, and act on workflows. Unlike traditional automation platforms that execute predetermined scripts, Mastra agents make decisions about which tools to use based on natural language instructions and contextual understanding.

The framework provides three core primitives that work together: agents that reason about tasks and coordinate tool usage, workflows that define structured sequences of operations with parallel execution and error handling, and tools that perform specialized tasks with type-safe input/output schemas.

What makes Mastra powerful is its declarative approach. Instead of writing imperative code that says, "do this, then do that," you define what the agent should accomplish and let it figure out how. The agent receives high-level instructions like "monitor the safari park" and autonomously decides whether to use individual counting tools, coordinate multiple tools in parallel, or execute complex workflows based on the situation.

Key capabilities of Mastra.ai include persistent memory for conversation history, semantic recall for retrieving relevant context from past interactions, streaming responses for real-time feedback, and observability for monitoring agent behavior. The framework is TypeScript-first, so the data passed between agents and tools is type-checked.

The Mastra + Anchor Pipeline: Intelligent Web Workflows

By combining Mastra's reasoning capabilities with Anchor's computer vision and browser tools, we get a pipeline that transforms high-level objectives into coordinated web automation. For our safari monitoring example, we deploy an agent in Mastra, named "zookeeperAgent", which we ask to analyze live camera feeds. This agent coordinates tools that use Anchor Browser's computer vision capabilities to fetch camera feeds, analyze which animals they see, and extract meaningful information. From start to finish, the Mastra agent is in command, handling the strategic decisions about which tools to use and how to interpret their results.

When you tell the zookeeper agent to "monitor the safari park," Mastra doesn’t just execute a script. It reasons about the task. The agent understands that monitoring requires checking multiple camera feeds, that different animals need specialized analysis for identification, and that the results should be synthesized into a comprehensive report with confidence levels.

Anchor Browser provides the specialized tool capabilities. Each tool that Mastra coordinates uses Anchor’s computer vision to navigate to camera feeds and analyze what it sees. The tiger counting tool opens the tiger camera URL, takes a screenshot, and uses AI vision to identify and count tigers. The giraffe counting tool does the same for giraffes. Neither tool needs to parse pre-set camera masks. They see the rendered web pages and understand them visually.

Workflows coordinate complex operations. Mastra's workflow engine can execute the tiger and giraffe counting in parallel, handle errors gracefully if a camera feed is down, and aggregate the results into a structured format. The workflow defines the sequence and error handling declaratively, while the agent decides whether to use individual tools or the complete workflow based on the specific request.
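To make the parallel-with-error-handling idea concrete, here is a minimal sketch in plain TypeScript. It does not use Mastra's workflow API; `runInParallel`, `totalCount`, and the `CountResult` shape are hypothetical names chosen for illustration, and the counters are stand-ins for real tool calls.

```typescript
// Hypothetical result shape for one counting step (not Mastra's API).
type CountResult = { animal: string; count: number | null; error?: string };

// Stand-in for a tool call; a real step would drive a browser and a vision model.
type Counter = () => Promise<number>;

// Start every counter at once and convert failures into data instead of exceptions,
// so one offline camera feed does not abort the other counts.
async function runInParallel(counters: Record<string, Counter>): Promise<CountResult[]> {
  const names = Object.keys(counters);
  return Promise.all(
    names.map(async (name): Promise<CountResult> => {
      try {
        return { animal: name, count: await counters[name]() };
      } catch (err) {
        const message = err instanceof Error ? err.message : String(err);
        return { animal: name, count: null, error: message };
      }
    })
  );
}

// Aggregate only the counts that succeeded.
function totalCount(results: CountResult[]): number {
  return results.reduce((sum, r) => sum + (r.count === null ? 0 : r.count), 0);
}
```

Because each counter catches its own error, the aggregate step always receives one entry per feed and can decide how to report the gaps.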

Type-safe data flow ensures reliability. Every tool defines its input and output schemas, so when the tiger counter returns tigerCount: 3, the workflow knows exactly how to handle that result and pass it to the report generation step.
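As a rough illustration of what a schema buys you, the sketch below validates a tool's output by hand before it is passed on. A schema library would normally do this work; the hand-written guard here is only a stand-in, and the `confidence` field and the `parseTigerCount` name are assumptions, not part of the original project.

```typescript
// Allowed confidence labels (an assumption for this sketch).
const LEVELS = ["high", "medium", "low"] as const;
type Confidence = (typeof LEVELS)[number];

// Hypothetical output shape for the tiger counter.
interface TigerCountOutput {
  tigerCount: number;
  confidence: Confidence;
}

function isConfidence(value: unknown): value is Confidence {
  return typeof value === "string" && (LEVELS as readonly string[]).indexOf(value) !== -1;
}

// Check an untrusted value (for example, parsed model output) against the declared shape.
function parseTigerCount(value: unknown): TigerCountOutput {
  if (typeof value !== "object" || value === null) {
    throw new Error("tool output must be an object");
  }
  const { tigerCount, confidence } = value as Record<string, unknown>;
  if (
    typeof tigerCount !== "number" ||
    !isFinite(tigerCount) ||
    Math.floor(tigerCount) !== tigerCount ||
    tigerCount < 0
  ) {
    throw new Error("tigerCount must be a non-negative integer");
  }
  if (!isConfidence(confidence)) {
    throw new Error("confidence must be high, medium, or low");
  }
  return { tigerCount, confidence };
}
```

Downstream steps can then accept `TigerCountOutput` and rely on the compiler, rather than re-checking fields at every hop.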

The end product is a system where you can ask, "How many animals are in the safari park?" and get back not just numbers but a detailed analysis: "Giraffes: 2, Tigers: 3, Total: 5 animals. High confidence in both counts." This level of contextual understanding and coordination is much harder to achieve with traditional automation scripts.

Project Setup: Building a Safari Monitoring Agent

Prerequisites

Before building our intelligent safari monitoring system, you'll need:

Node.js (version 18 or higher)

Anchor Browser API key - get your API key from the Anchor Browser dashboard

Groq API key - sign up at console.groq.com for access to vision-capable models

Mastra.ai account - for deploying agents in Mastra Cloud

Dependencies Installation

Initialize a new Node.js project and install the required packages:

Environment Configuration

Create a .env file in your project root:

Add the .env file to your .gitignore to avoid accidentally committing your API keys:

The Groq API key gives your Mastra agent access to Groq's cloud-hosted open-source models, which the agent needs to understand and reason about the responses from our custom tools. The Anchor Browser API key provides the underlying remote browser sessions used to request the camera feeds.
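Since both keys are required, it can help to fail fast at startup when one is missing. The following is a small sketch of such a check; the variable names `GROQ_API_KEY` and `ANCHOR_API_KEY` are assumptions for illustration, so substitute whatever names your .env file actually defines.

```typescript
// Assumed variable names; replace with the names used in your own .env file.
const REQUIRED_KEYS = ["GROQ_API_KEY", "ANCHOR_API_KEY"];

type Env = Record<string, string | undefined>;

// Return the names of required variables that are unset or blank.
function missingKeys(env: Env, required: string[] = REQUIRED_KEYS): string[] {
  return required.filter((name) => {
    const value = env[name];
    return value === undefined || value.trim() === "";
  });
}

// Throw a single descriptive error listing everything that is missing.
function assertEnv(env: Env, required: string[] = REQUIRED_KEYS): void {
  const missing = missingKeys(env, required);
  if (missing.length > 0) {
    throw new Error("Missing environment variables: " + missing.join(", "));
  }
}
```

In a Node.js entry point you might call `assertEnv(process.env)` once the .env file has been loaded, before any agent or tool is constructed.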

Safari Camera Monitoring System

Let's start by creating specialized tools that use computer vision to analyze live camera feeds. Each tool focuses on a specific subtask, counting one type of animal, which the agent can coordinate intelligently.

Create src/mastra/tools/index.ts:

The tools tigerCountTool and giraffeCountTool use computer vision to view and analyze the most recent image displayed from the camera feed. Their response is structured with the model's confidence levels, enabling intelligent decision-making. Error handling ensures graceful degradation when camera feeds fail.
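The shape of such a tool can be sketched without either SDK. In the example below, `makeCountTool`, `VisionCall`, and the result fields are hypothetical names, and the `askVision` parameter stands in for the remote browser and vision call, whose real API is not shown here.

```typescript
type Confidence = "high" | "medium" | "low";

// Hypothetical structured result; field names are illustrative.
interface AnimalCount {
  animal: string;
  count: number | null; // null when the feed could not be analyzed
  confidence: Confidence | "none";
  error?: string;
}

// Stand-in for the browser + vision request (an assumption, not a real SDK signature).
type VisionCall = (
  feedUrl: string,
  question: string
) => Promise<{ count: number; confidence: Confidence }>;

// Build a counting tool for one animal and one camera feed.
function makeCountTool(animal: string, feedUrl: string, askVision: VisionCall) {
  return async function countAnimal(): Promise<AnimalCount> {
    try {
      const answer = await askVision(feedUrl, "How many " + animal + "s are visible?");
      return { animal, count: answer.count, confidence: answer.confidence };
    } catch (err) {
      // Graceful degradation: report the failure as data so the agent can still reason about it.
      const message = err instanceof Error ? err.message : String(err);
      return { animal, count: null, confidence: "none", error: message };
    }
  };
}
```

Injecting the vision call as a parameter keeps the tool easy to test with a fake feed, and keeps the error handling in one place.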

Orchestrating Intelligence: The Safari Monitoring Workflow

Now let's create the workflow that coordinates multiple counting tools and synthesizes their results. Create src/mastra/workflows/index.ts:

The power of declarative workflows

This workflow demonstrates Mastra's intelligent coordination capabilities. The countTigers and countGiraffes steps run in parallel: Mastra's workflow engine recognizes they don't depend on each other and executes them concurrently for efficiency.

The generateReport step shows how workflows can include both tool calls and custom functions. This step takes the structured output from both counting tools and synthesizes them into a comprehensive report with timestamps, confidence levels, and operational status.
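A custom report function of this kind might look like the sketch below. The `StepResult` and `SafariReport` shapes, and the rule that any failed feed marks the status as "degraded", are assumptions made for illustration rather than the original step's code.

```typescript
// Hypothetical output of one counting step.
interface StepResult {
  animal: string;
  count: number | null; // null when the camera feed failed
  confidence: "high" | "medium" | "low" | "none";
}

// Hypothetical report shape.
interface SafariReport {
  timestamp: string;
  counts: Record<string, number | null>;
  confidence: Record<string, string>;
  totalAnimals: number;
  status: "operational" | "degraded";
}

// Combine per-animal results into one report. `now` is injectable so tests are deterministic.
function generateReport(results: StepResult[], now: Date = new Date()): SafariReport {
  const counts: Record<string, number | null> = {};
  const confidence: Record<string, string> = {};
  let totalAnimals = 0;
  let failedFeeds = 0;
  for (const result of results) {
    counts[result.animal] = result.count;
    confidence[result.animal] = result.confidence;
    if (result.count === null) {
      failedFeeds += 1;
    } else {
      totalAnimals += result.count;
    }
  }
  return {
    timestamp: now.toISOString(),
    counts,
    confidence,
    totalAnimals,
    status: failedFeeds === 0 ? "operational" : "degraded",
  };
}
```

Keeping the synthesis in a pure function means it can be unit-tested without any camera feed or model call.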

Creating an Intelligent Agent with Mastra

Now we create the agent that brings everything together. The agent uses natural language instructions to guide its behavior and leverages Groq's Meta-Llama model to understand and reason about the task at hand.

Create src/mastra/agents/index.ts:

The agent as a reasoning engine

The zookeeperAgent demonstrates strategic tool coordination rather than predetermined execution. When asked, "How many animals are in the safari park?", the agent reasons about the request and chooses appropriate tools: for example, individual counters for giraffes versus tigers, the comprehensive animalWatchTool, or coordinated workflows.

Note that the computer vision work in this case (analyzing camera feed images) happens on Anchor Browser's cloud platform, while the Mastra agent handles the reasoning about which Mastra-defined tools to use and how to interpret their results.

View full Mastra agent source code.

Deploy to production

Register your agent with Mastra Cloud for scalable execution and monitoring.

Special thank you to the San Diego Zoo Wildlife Alliance for providing the live camera feeds used in this example project.

Real-World Applications Beyond Safari Monitoring

The intelligent agent orchestration pattern we've demonstrated with safari monitoring extends far beyond animal counting. Here are a few additional practical applications that use the same Mastra and Anchor Browser architecture.

E-commerce Price Intelligence

Deploy agents that monitor competitor pricing across multiple platforms. Individual tools track specific retailers (Amazon, eBay, direct competitors), while the orchestrating agent identifies pricing trends, calculates optimal pricing strategies, and generates alerts when competitors make significant moves.

Social Media Sentiment Analysis

Create specialized agents that analyze brand mentions across Twitter, LinkedIn, Instagram, and Reddit. Each tool focuses on platform-specific content extraction, while the coordinating agent synthesizes sentiment patterns, identifies emerging issues, and generates comprehensive brand health reports.

Financial Data Aggregation

Build agents that monitor trading dashboards, financial news sites, and market data feeds. Tools extract specific metrics (stock prices, volume, news sentiment), while the agent correlates information across sources to identify trading opportunities or risk patterns.

Quality Assurance Testing

Create agents that perform visual regression testing across different browsers and devices. Tools capture screenshots and compare visual elements, while the coordinating agent identifies patterns in failures, prioritizes issues by impact, and generates detailed bug reports with visual evidence.

Conclusion

Intelligent agent orchestration changes web automation from brittle scripts into adaptive reasoning systems. By combining Mastra's strategic coordination with Anchor Browser’s vision-capable browser tooling, we’ve built agents that understand objectives, decompose complex problems, and coordinate specialized tools to achieve outcomes no single tool could accomplish.

This approach scales from safari monitoring to comprehensive automation across a variety of business applications. Unlike traditional scrapers that break with every website update, these agents evolve with the systems they monitor.

The pattern is clear: break complex objectives into specialized subtasks, deploy the right tools for each subtask, and synthesize results into actionable intelligence. As these technologies mature, we’ll see agents handling increasingly sophisticated workflows that transform how organizations approach complex data collection and analysis challenges.

If you'd like a hand getting started with Mastra and Anchor Browser, get in touch with your use case.
