GPT-5 is impressively good at some things (see "No X is better than Y", 8/14/2025, or "GPT-5 can parse headlines!", 9/7/2025), but shockingly bad at others. And I'm not talking about "hallucinations", which is a term used for plausible but false facts or references. Such mistakes remain a problem, but not every answer is a hallucination. But image labelling remains reliably and absurdly bad.

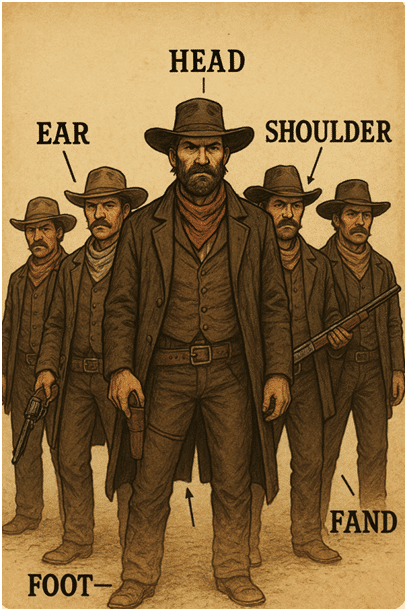

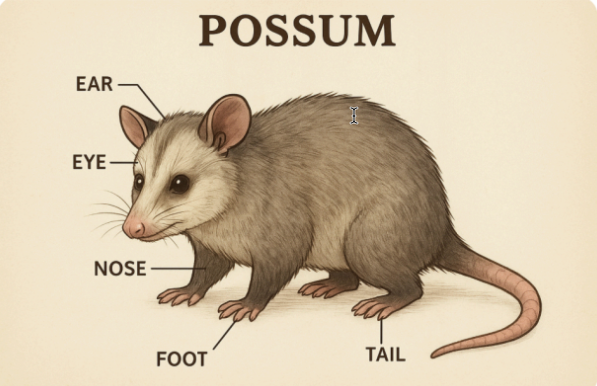

The picture above comes from an article by Gary Smith: "What Kind of a “PhD-level Expert” Is ChatGPT 5.0? I Tested It." The prompt was “Please draw me a picture of a possum with 5 body parts labeled.” Smith's evaluation:

GPT 5.0 generated a reasonable rendition of a possum but four of the five labeled body parts were incorrect. The ear and eye labels were at least in the vicinity but the nose label pointed to a leg and the tail label pointed to a foot. So much for PhD-level expertise.

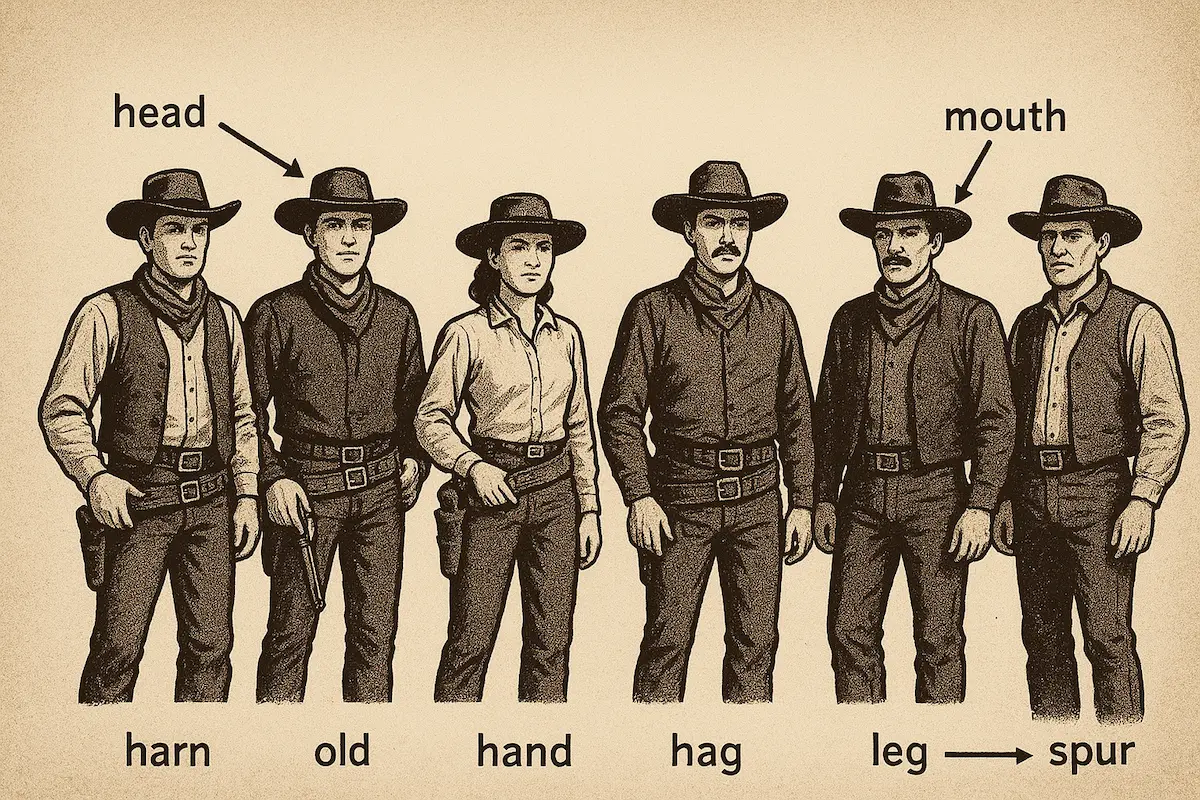

Smith attempted a possum-drawing replication in a later article, but typed "posse" by mistake, and got this:

His attempts to get GPT-5 to correct the drawing made things worse and worse.

Noor Al-Sibai tried for a replication by asking GPT-5 to provide an image of "a posse with six body parts labeled", and got this:

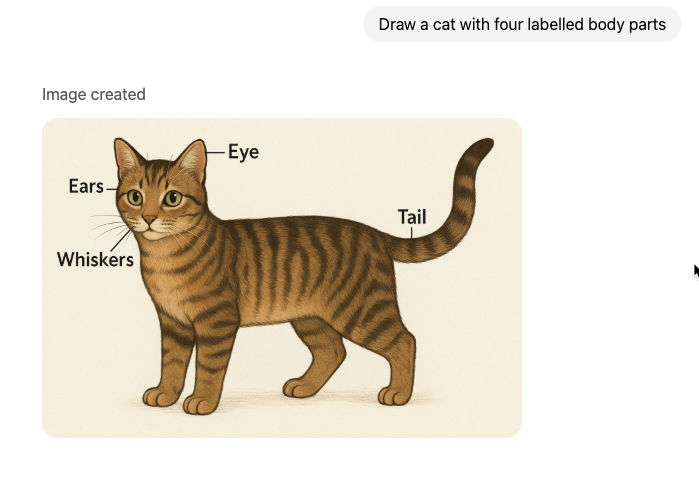

I asked GPT-5 to "Draw a cat with four labelled body parts":

And as a closer, to "Draw a human hand with the palm, thumb, wrist, and pointer finger labelled":

So the results are consistent: good-quality images with absurdly weird labelling.

Two obvious questions:

- Why does OpenAI allow GPT-5 to continue to embarrass itself (and them) this way? Why not just refuse, politely, to create labelled images?

- Does GPT-5 have similar failures when asked to label images that it doesn't create? Or worse failures? I expect so, but don't have time this morning to check.

September 15, 2025 @ 6:02 am · Filed by Mark Liberman under Artificial intelligence
