This post summarizes the key points from a talk by Andrej Karpathy on the Latent Space YouTube channel. You can watch the full video here.

“LLMs are kind of like these fallible people spirits that we have to learn to work with.” — Andrej Karpathy, former Director of AI at Tesla

Software is entering its third paradigm: after traditional code (Software 1.0) and neural networks (Software 2.0) comes Software 3.0, in which Large Language Models are programmed in natural language. LLMs behave like 1960s-era mainframe operating systems, delivered from the cloud through time-sharing. They possess superhuman knowledge but also suffer from human-like cognitive deficits. The biggest current opportunity lies in building “partial autonomy” applications that act as “Iron Man suits” (augmenting humans) rather than fully autonomous robots. To use LLMs effectively, developers must speed up the human verification loop with efficient GUIs and “keep the AI on a leash” with concrete instructions. At the same time, our digital infrastructure must evolve to provide machine-readable documentation and interfaces for these new AI agents.

Karpathy posits that after 70 years of relative stability, software has recently undergone two fundamental shifts, creating three distinct paradigms:

- Software 1.0: Traditional, human-written code like Python or C++.

- Software 2.0: Neural networks, whose weights are “programmed” by curating datasets and running an optimizer over them, not by writing explicit code.

- Software 3.0: Large Language Models (LLMs), which are programmed via natural language prompts.
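To make the three paradigms concrete, here is a toy sentiment classifier written three ways. This is a hypothetical illustration, not something from the talk: the keyword list, the four training sentences, and the prompt wording are all made up, and the 2.0 version uses a single perceptron as a stand-in for a real neural network. The 3.0 "program" is only a prompt string; sending it to an actual model is left out so the sketch runs offline.

```python
# Software 1.0: a human writes the decision rule explicitly.
def sentiment_v1(text: str) -> str:
    positive_words = {"great", "love", "excellent"}  # hand-picked keywords
    return "positive" if set(text.lower().split()) & positive_words else "negative"


# Software 2.0: the "program" is a set of weights produced by an optimizer
# from labeled data. A single perceptron stands in for a neural network here.
TRAINING_DATA = [
    ("i love this", 1),
    ("great movie", 1),
    ("terrible plot", 0),
    ("i hate this", 0),
]


def train_perceptron(examples, epochs: int = 20):
    weights: dict[str, float] = {}
    bias = 0.0
    for _ in range(epochs):
        for text, label in examples:
            words = set(text.lower().split())
            score = bias + sum(weights.get(w, 0.0) for w in words)
            error = label - (1 if score > 0 else 0)
            # Perceptron update: adjust weights only when the prediction is wrong.
            if error != 0:
                for w in words:
                    weights[w] = weights.get(w, 0.0) + error
                bias += error
    return weights, bias


def sentiment_v2(text: str, weights: dict[str, float], bias: float) -> str:
    score = bias + sum(weights.get(w, 0.0) for w in set(text.lower().split()))
    return "positive" if score > 0 else "negative"


# Software 3.0: the program is a natural-language prompt for an LLM.
SENTIMENT_PROMPT_V3 = (
    "Classify the sentiment of this review as 'positive' or 'negative'. "
    "Answer with one word.\n\nReview: {review}"
)
```

The behavior is the same in spirit, but who writes the logic changes: a programmer in 1.0, an optimization loop over data in 2.0, and a plain-English instruction in 3.0.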

Developers must be fluent in all three paradigms and able to switch between them depending on the task at hand.

During the evolution of Tesla’s Autopilot, the neural network stack (Software 2.0) grew in capability and progressively replaced and deleted large amounts of traditional C++ code (Software 1.0). Today, the same process is repeating: Software 3.0, centered on LLMs, is beginning to “eat” the existing software stack.

Treating LLMs as mere utilities undersells them; a more fitting analogy is a new kind of operating system.

- Analogy to 1960s Computing: The high cost of LLM computation has led to their centralization in the cloud, with access provided via a time-sharing model. This mirrors the pre-PC era of centralized mainframes. The LLM acts as the CPU, and its context window is the equivalent of RAM.

- Ecosystem Parallels: The LLM market is developing a landscape similar to operating systems: a few closed-source providers (like OpenAI and Google) akin to Windows and macOS, and an open ecosystem (led by Llama) analogous to Linux.
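The "context window as RAM" analogy can be sketched as a fixed-capacity buffer. This minimal sketch assumes that whitespace-separated words stand in for tokens (real models use subword tokenizers) and that the oldest messages are simply dropped once the budget is exceeded; real applications often summarize or retrieve older material instead. The `ContextWindow` class is hypothetical. It also shows why an LLM's working memory is so limited: once a message is evicted, the model has no other record of it.

```python
from collections import deque


class ContextWindow:
    """Fixed-capacity message buffer, loosely analogous to RAM.

    Token counts are approximated by whitespace splitting (an assumption).
    """

    def __init__(self, max_tokens: int):
        self.max_tokens = max_tokens
        self.messages = deque()  # oldest message on the left
        self.used = 0            # approximate tokens currently held

    @staticmethod
    def count_tokens(text: str) -> int:
        return len(text.split())

    def append(self, message: str) -> None:
        cost = self.count_tokens(message)
        if cost > self.max_tokens:
            raise ValueError("message is larger than the entire context window")
        self.messages.append(message)
        self.used += cost
        # Evict the oldest messages until everything fits again; evicted
        # content is simply gone from the model's point of view.
        while self.used > self.max_tokens:
            evicted = self.messages.popleft()
            self.used -= self.count_tokens(evicted)

    def render(self) -> str:
        """The text the model would actually see on its next call."""
        return "\n".join(self.messages)
```

With `max_tokens=8`, appending "my name is Ada", "I like chess", and then "what is my name" pushes the first message out, so the rendered context no longer contains the answer to the question.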

Unlike nearly all previous transformative technologies (e.g., computers, the internet, GPS), which followed a “top-down” diffusion from governments and corporations, LLMs have flipped the model. They first reached billions of consumers via apps like ChatGPT and are only now being adopted by enterprises and governments. This “bottom-up” proliferation is unprecedented.

Karpathy describes LLMs as “stochastic simulations of people” or “people spirits” powered by transformers. This nature gives them a unique “personality” and set of flaws.

Superpowers: They possess encyclopedic knowledge and near-perfect recall, like the savant in the movie Rain Man.

Cognitive Deficits:

- Hallucination: They invent facts.

- Jagged Intelligence: They can be superhuman in some domains yet make elementary mistakes in others.

- Anterograde Amnesia: They do not naturally accumulate or consolidate knowledge from continued interaction. Their learning is confined to the current context window; each session is a restart after “memory loss.”

- Gullibility: They are susceptible to security risks like prompt injection.

The most valuable LLM applications today are not those aiming for full autonomy, but those that achieve “partial autonomy” as powerful human assistants.

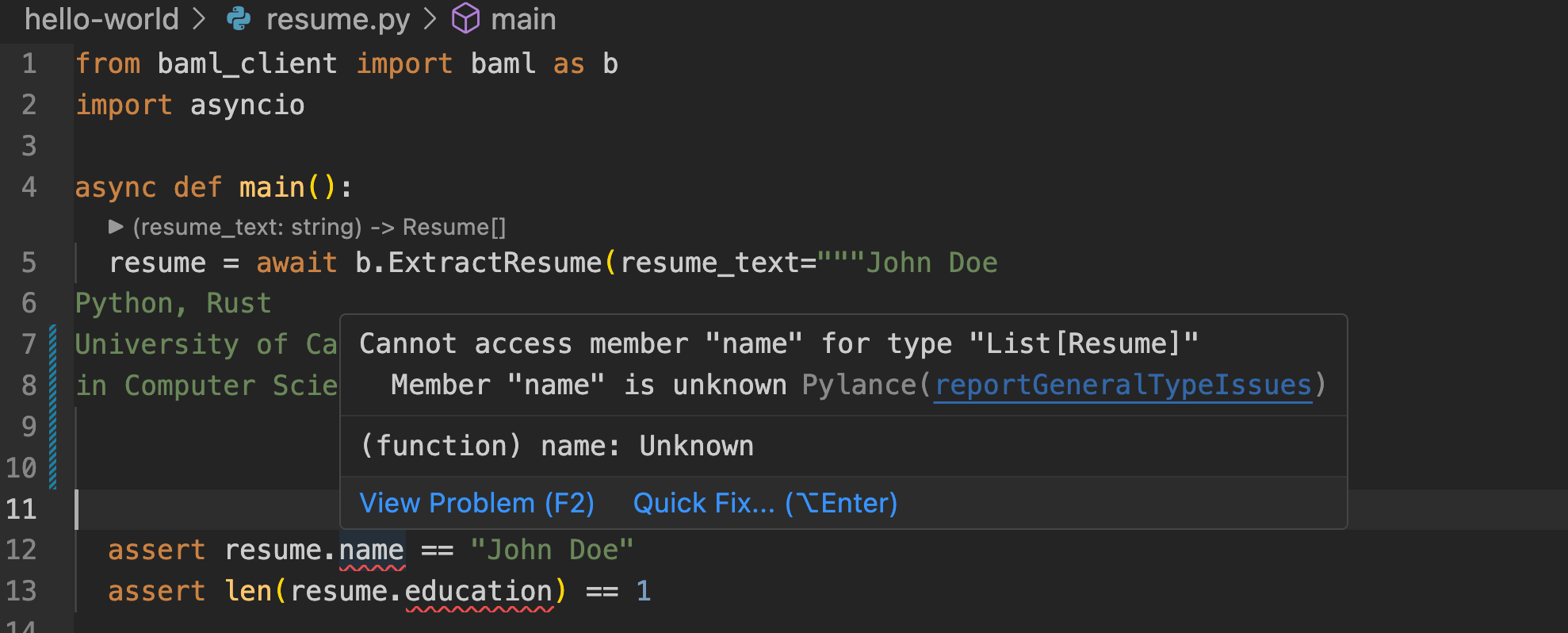

- Exemplary Apps: Cursor (for coding) and Perplexity (for search) are successful early examples.

Shared Characteristics:

- They automatically manage context to reduce the user’s cognitive load.

- They orchestrate multiple backend models (e.g., for embeddings, chat, applying diffs).

- They provide a task-specific GUI for human review and editing (e.g., a visual diff for code).

- They feature an “autonomy slider”, allowing the user to adjust the level of AI intervention based on task complexity.
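An autonomy slider can be modeled as an ordered setting that decides which proposed changes are applied directly and which are escalated to the human. The levels below (completion, edit selection, edit file, edit repository) are hypothetical and only loosely modeled on the progression in coding tools; they are not Cursor's actual implementation, and the function names are illustrative.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    """Hypothetical autonomy levels, ordered from least to most AI control."""
    SUGGEST = 0         # inline completions; the human accepts each one
    EDIT_SELECTION = 1  # the AI may rewrite only the highlighted code
    EDIT_FILE = 2       # the AI may rewrite the whole open file
    EDIT_REPO = 3       # agent mode: the AI may touch any file in the project


def scope_of_change(files_changed: set[str], open_file: str, within_selection: bool) -> Autonomy:
    """Minimum autonomy level a proposed change requires."""
    if files_changed - {open_file}:
        return Autonomy.EDIT_REPO       # touches files beyond the one being edited
    if not within_selection:
        return Autonomy.EDIT_FILE       # stays in the file but leaves the selection
    return Autonomy.EDIT_SELECTION


def needs_human_approval(slider: Autonomy, files_changed: set[str],
                         open_file: str, within_selection: bool) -> bool:
    """Changes that exceed the slider setting go to the human instead of being applied."""
    return scope_of_change(files_changed, open_file, within_selection) > slider
```

Using an ordered enum keeps the slider a single comparison: raising it lets larger changes through automatically, while lowering it routes more of them back to the human.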

To achieve efficient human-AI collaboration, the “AI generation → human verification” loop must be as fast as possible.

- Speed Up Verification: The key is the GUI. Humans can audit work much faster through visual interfaces (graphics, color-coded changes) than by reading and comprehending plain text.

- Keep the AI on a Leash: It’s crucial to prevent the AI from producing massive, unmanageable outputs. Developers must constrain the AI with more concrete prompts and by breaking tasks into smaller steps, ensuring each generated piece is easy for a human to quickly verify.
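Both ideas can be combined in a small gate in front of AI-generated edits: show the human a diff rather than the whole file, and refuse edits too large to check quickly. This sketch uses Python's standard `difflib`; the 20-line budget and the helper names (`review_diff`, `changed_line_count`, `gate_ai_edit`) are hypothetical choices, not a prescribed workflow.

```python
import difflib

MAX_CHANGED_LINES = 20  # hypothetical leash: the largest diff we expect to verify at a glance


def review_diff(original: str, proposed: str, filename: str = "file.py") -> str:
    """Unified diff of a proposed edit, so the reviewer scans only the -/+ lines."""
    return "\n".join(
        difflib.unified_diff(
            original.splitlines(),
            proposed.splitlines(),
            fromfile=f"a/{filename}",
            tofile=f"b/{filename}",
            lineterm="",
        )
    )


def changed_line_count(original: str, proposed: str) -> int:
    """Number of removed plus added lines between the two versions."""
    matcher = difflib.SequenceMatcher(a=original.splitlines(), b=proposed.splitlines())
    return sum(
        (i2 - i1) + (j2 - j1)
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    )


def gate_ai_edit(original: str, proposed: str, filename: str = "file.py",
                 budget: int = MAX_CHANGED_LINES):
    """Return a diff for human review, or None if the edit is too big to verify quickly."""
    if changed_line_count(original, proposed) > budget:
        return None  # the caller should re-prompt for a smaller, more concrete step
    return review_diff(original, proposed, filename)
```

A GUI would render the same diff with color, but even as plain text it reduces review to a handful of marked lines.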

Karpathy is skeptical of claims that “2025 is the year of agents.” Citing the example of self-driving cars — which went from a perfect demo in 2013 to still not being fully solved over a decade later — he argues that maturing software agents will be a similarly long journey. We are at the beginning of “the decade of agents”, which will require patience and continuous human-in-the-loop supervision.

Because LLMs are programmed in natural language, the barrier to entry for coding has been drastically lowered, giving rise to “vibe coding.” Anyone can build a simple, custom application based on an idea and a description. However, Karpathy notes that while the coding itself has become easy, the DevOps required to deploy an application (domains, authentication, payments) remains a significant hurdle for non-professionals.

AI agents are emerging as a new class of “consumer” for digital information, and our infrastructure must adapt to them.

- Write Docs for Agents: Create machine-readable documentation, such as an llms.txt file or Markdown-based API docs, that tells an agent directly what a site does and how to use it.

- From “Click” to curl: In documentation, replace human-oriented instructions ("click this button") with agent-executable commands (a curl request).

- Meet in the Middle: Develop tools that convert human-friendly interfaces (like a GitHub repository) into a single, LLM-friendly text format for easier processing by agents.
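A minimal version of such a conversion tool walks a local checkout and concatenates its text files, with a file listing up front, into one string an agent can read in a single pass. The `flatten_repo` function is hypothetical and not modeled on any specific existing tool; the file-suffix filter, skipped directories, and per-file size cap are assumptions you would tune.

```python
from pathlib import Path

DEFAULT_SUFFIXES = frozenset({".py", ".md", ".txt", ".toml", ".json", ".yaml", ".yml"})
SKIP_DIRS = frozenset({".git", "node_modules", "__pycache__", ".venv"})


def flatten_repo(root: str, suffixes=DEFAULT_SUFFIXES, max_bytes: int = 100_000) -> str:
    """Render a directory tree as one LLM-friendly text document."""
    root_path = Path(root)
    listing, bodies = [], []
    for path in sorted(root_path.rglob("*")):
        rel = path.relative_to(root_path)
        if any(part in SKIP_DIRS for part in rel.parts):
            continue  # skip VCS metadata, dependencies, and caches
        if not path.is_file() or path.suffix not in suffixes:
            continue  # directories and non-text files are left out
        if path.stat().st_size > max_bytes:
            continue  # very large (often generated) files would waste context
        listing.append(rel.as_posix())
        text = path.read_text(encoding="utf-8", errors="replace")
        bodies.append(f"===== {rel.as_posix()} =====\n{text.rstrip()}\n")
    header = "Repository files:\n" + "\n".join(f"- {name}" for name in listing)
    return header + "\n\n" + "\n".join(bodies)
```

Putting the file list first gives the agent a map of the project before it reads any contents.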

Ultimately, we must find a “middle ground” between our current infrastructure and a future of all-capable agents, proactively adapting to meet their needs rather than waiting for them to navigate our complex world.
