Jul 20th 2025 · 9 min read · #ai #art #classics #comics #dall-e #gpt-4.1 #imagegeneration #patterns #stablediffusion #styletransfer

As regular readers may know, I have no compunction about using AI tools for reviewing my writing and generating occasional illustrations – I do it as a force multiplier, not as a replacement for my own work, and I try to do it in a way that is ethical and responsible.

I know a lot of people have strong opinions about AI-generated content, and I respect that, but I also believe that these tools can be used to enhance creativity and productivity without necessarily replacing human effort.

So if you are not a fan of AI-generated content and have that weird (and almost fundamentalist) knee-jerk reaction of considering AI-generated images a hallmark of bad content, well, I’m really sorry you feel that way, but you might want to skip this post.

A Short Recap

Three years ago I spent a little while playing with Stable Diffusion, and since over the past couple of weeks I found myself with a little time to spare I decided to revisit the current status quo of image generation–but since I didn’t have access to my InvokeAI instance, I decided to bust out the upcoming version of Pythonista and invoke Azure OpenAI APIs directly from my iPad.

It was a bit of a hack, sure, but it worked surprisingly well, and I quickly amassed hundreds of images over last weekend. I will not bore you with the details of how I did it, but suffice it to say that I used gpt-4.1 with a slight variation of the script I used for image tagging a while back.

Multi-Modal Models

And why gpt-4.1? Well, it is a multi-modal model that can handle both text and image generation, and even though it mostly uses Dall-E in the background, I wanted to see how it fared in comparison.

You see, one of the things that intrigues me about multi-modal models is that even though they can be used to generate images from text prompts, there is still a considerable gap between the text embeddings they manipulate and generated images. In the case of gpt-4.1, I suspect that the text embeddings are not directly usable for image generation, which means that the model has to “translate” between the two modalities, and that isn’t a perfect process.

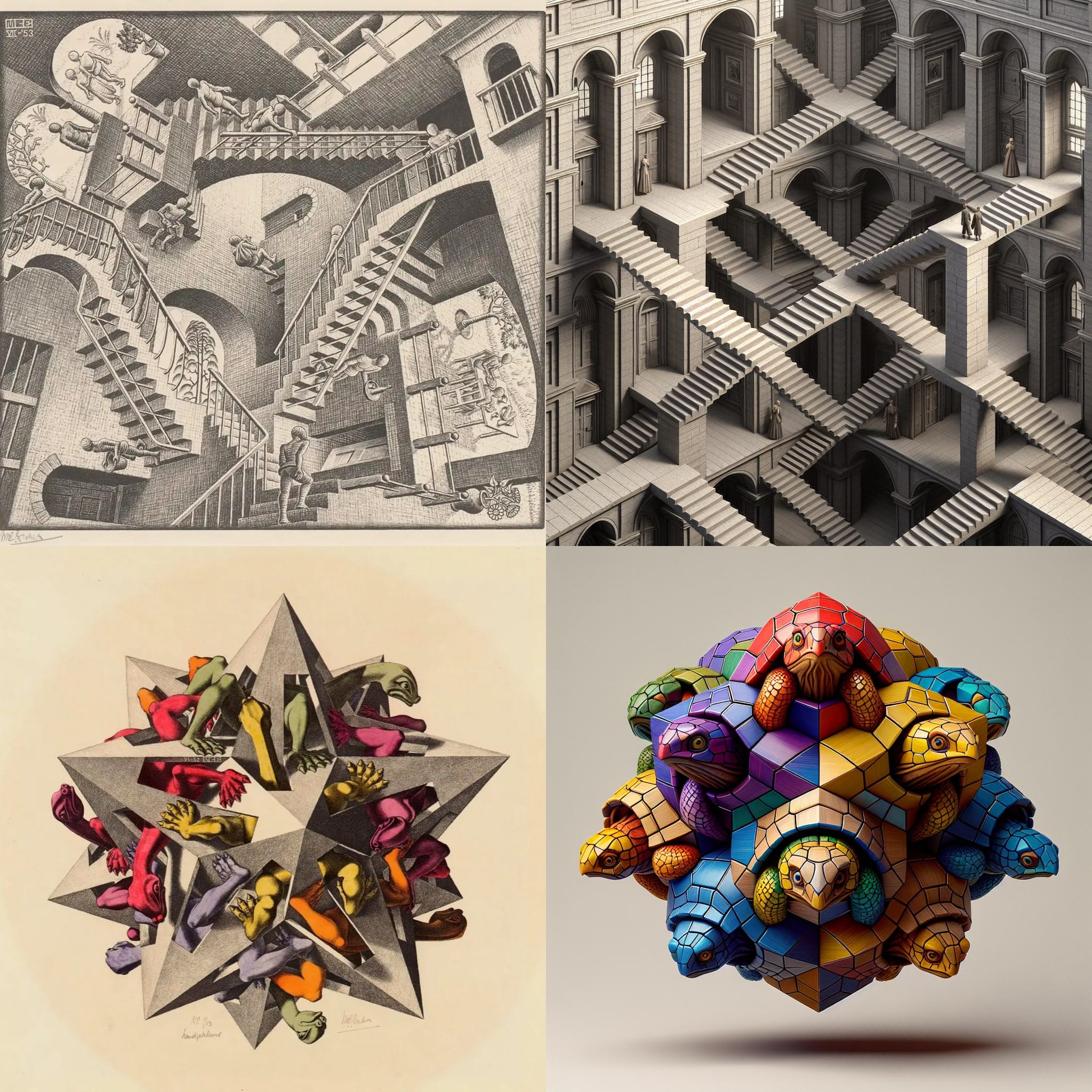

That is pretty obvious when you do something like asking it to describe Escher’s “Relativity” or “Gravity” (which it does quite well), but feeding those descriptions back into the model to generate images yields results that are not quite what you would expect:

Originals on the left, AI-generated interpretations on the right

Originals on the left, AI-generated interpretations on the right

This happens irrespective of the amount of detail you put into the prompt, and it feels like there is a game of telephone going on in the background as the prompt is encoded and transformed into an image that is not quite what the model “sees” in the original.

Pattern Recognition

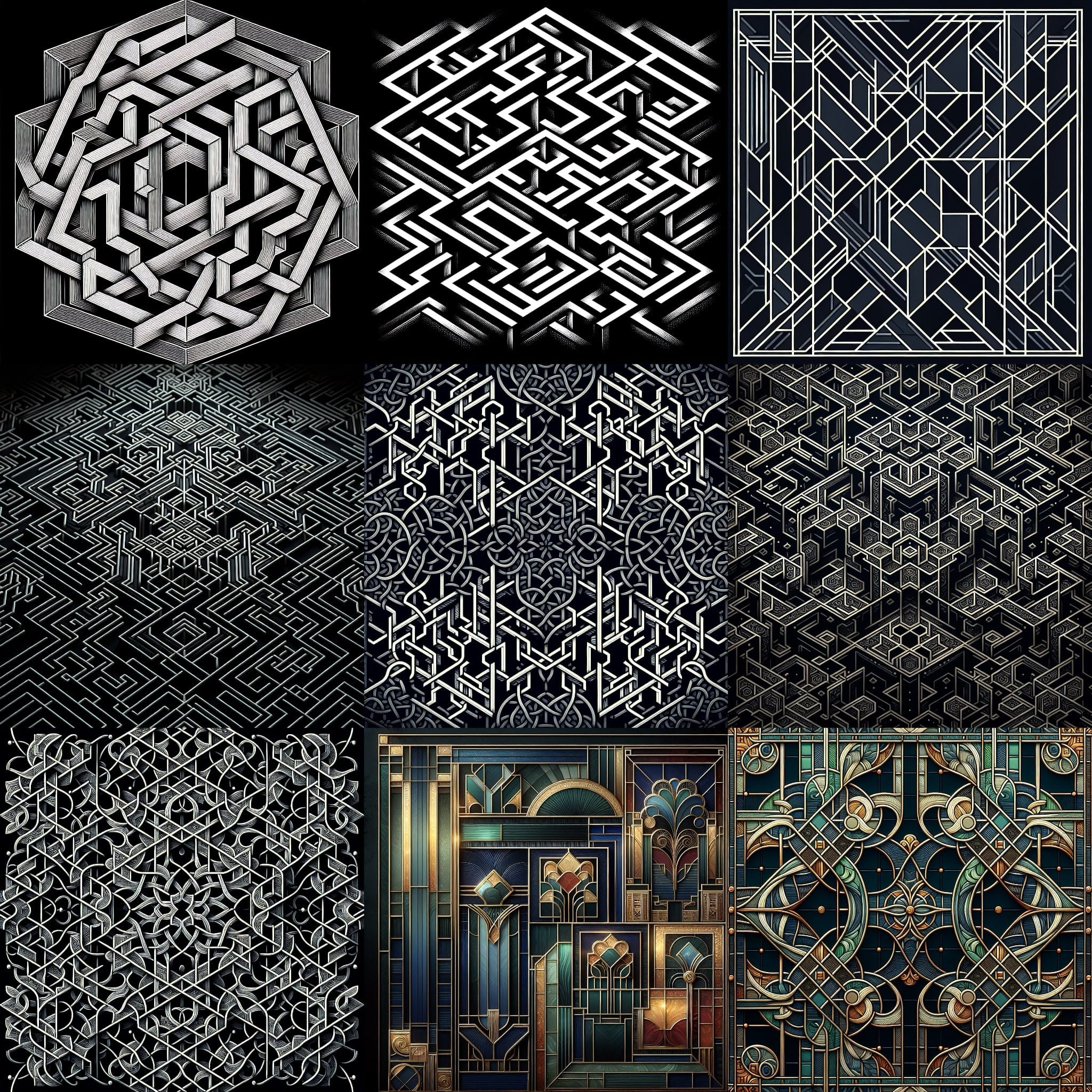

Another interesting aspect for me (especially when compared to the results I got with Stable Diffusion and even FLUX in recent past) is that gpt-4.1 seems to be pretty decent at drawing geometric patterns and shapes–I love this kind of thing since it makes for great wallpaper material, and I was pleasantly surprised by the results:

Symmetrical and asymmetrical patterns in a few different styles

Symmetrical and asymmetrical patterns in a few different styles

Some of these are quite intricate and surprisingly regular, which was a nice surprise.

Styling and Content

One of the things I found most interesting, however, is that broad composition and styling prompts yield results that are quite consistent, especially if you use the same prompt with different styles.

For example, I tried a few prompts that were variations on “a woman with flowing robes walking through a lighted hallway”1, and although I tweaked the prompt a little for a few instances (color schemes, specific styles), the results were quite consistent:

Yes, I had a lot of time on my hands

Yes, I had a lot of time on my hands

But where things get a little iffy is when you try to get the model to generate images in a specific style–in the past there was a lot of controversy around prompting image models to blatantly copy the style of specific artists, and… well… It’s still possible, but it is certainly not as blatant as it used to be.

However, some of the results are still quite good, and fairly recognizable if, for instance, you’re a comic book fan. Have a go at trying to guess the styles in the following montage:

The same prompt but seasoned with different comic book styles

The same prompt but seasoned with different comic book styles

The Classics

To explore this a little further, I tried my hand at prompting the model to generate images in a few specific styles (surrealism, impressionism, etc.) as well as the style of some of my favorite artists–and some of the results were pretty striking, like this set of Klimt-inspired images:

If you're a fan, you know what these are based on

If you're a fan, you know what these are based on

You can certainly recognize “The Kiss” in the first image, but the rest are more of a pastiche than a direct copy.

Incidentally, Surrealism is a great style for AI-generated art, because diffusion artifacts almost look like part of the style, and I got some interesting results with it as well, but then I decided to go for something a little more classical:

Hellenistic statues in the simplest possible setting

Hellenistic statues in the simplest possible setting

I also did a few dozen of these, and that is where I got the most anatomical errors. In fact, I fixed an extra arm on the rightmost figure above, and I found that interesting because the background and lighting were so minimal that I would expect the model to focus on the figures themselves. But it didn’t, because it’s still a diffusion model doing the actual generation, and diffusion models are notoriously bad at consistency.

I guess that we haven’t quite reached the point where models can generate fully accurate human figures, but the results are still pretty good and I wouldn’t mind having any of the above on my wall–if only things like the Reflection Frame were more affordable…

Mixing It Up

Finally, I decided to try mixing up the prompts a little, and that was where I had the most fun. For example, I tried a base prompt that was essentially “two people facing each other holding their phones” and then added various styles and settings to it, which yielded some pretty interesting–and unexpected–results:

Two people holding telephones

Two people holding telephones

I found it hilarious that the model went for actual corded telephones instead of modern smartphones, but as I added different styles and context to the prompt, it became a fun exercise to see how the model reacted.

One of the limitations of doing plain API calls was that I didn’t have the same kind of consistency as I do with InvokeAI (I can’t set seeds or fiddle with encoding or LoRAs), but I didn’t have the time or patience to set detailed API parameters, and I guess randomness and a degree of semantic drift is the price you pay for using a model that is not specifically aimed at this kind of task.

Conclusion

Although this was far from a comprehensive exploration of image generation in gpt-4.1, it was a fun exercise that yielded some interesting results. If I have another long bout of idle time (perhaps during Summer break) I will try to explore the API a little further and see if I can get more consistent results, but as a way to pass the time in hospital it was definitely worth it.

A few of my personal favorites

A few of my personal favorites

And even though the current state of image generation is still a little rough around the edges, we are definitely now at a point where quality at the current default resolution (1024×1024 pixels) is quite decent, although diffusion artifacts and structural inconsistencies are still easy to spot.

The elephant in the room for most people, however, is likely to be the ability to prompt for specific styles. It is sure to continue raising a lot of cries for preservation of artistic integrity, but I don’t think it will ever replace the work of artists–I’d rather look at this as a great way to generate ideas and inspiration, and I can see how it could be used to create mood boards or concept art for projects.

As to me, my favorite style of illustration is actually more along the lines of hand-drawn pencil sketches, and since I have actually been trying to get back into the habit of sketching and drawing, getting the model to generate images that I can trace out in Procreate or use as a starting point or reference is a great way to do that.

It’s going to be quite fun to get back to this in a couple of years again and see where things stand then.

.png)