If this were one person, I wouldn’t write this publicly. Since there are apparently multiple people on board, and they claim to be looking for investors, I think the balance falls in favor of disclosure.

Last week Prothean Systems announced they’d surpassed AGI and called for the research community to “verify, critique, extend, and improve upon” their work. Unfortunately, Prothean Systems has not published the repository they say researchers should use to verify their claims. However, based on their posts, web site, and public GitHub repositories, I can offer a few basic critiques.

ARC-AGI-2

First: Prothean’s core claim, repeated in the white paper, the FAQ, and the press release, is that Prothean has successfully solved all 400 tasks of the ARC-AGI-2 benchmark:

We present Prothean Emergent Intelligence, a novel five-pillar computational architecture that achieved 100% accuracy on all 400 tasks of the ARC-AGI-2 challenge in 0.887 seconds.

This system achieved 100% accuracy on all 400 tasks of the ARC-AGI-2 challenge in 0.887 seconds—a result previously considered impossible for artificial intelligence systems.

ARC-AGI-2 is specifically designed to resist gaming:

- 400 unique, novel tasks

- No training data available

It is not possible to solve “all 400 tasks of the ARC-AGI-2 challenge” because ARC-AGI-2 does not have 400 evaluation tasks to solve. Nor is it true that ARC-AGI-2 has no training data. The ARC-AGI-2 web site and docs are clear about this: the workload consists of “1,000 public training tasks and 120 public evaluation tasks”.

Prothean Systems’ “verification protocol” instructs researchers to download these 400 tasks from a non-existent URL:

Step 3: Obtain ARC-AGI-2 Dataset

# Download official dataset
wget https://github.com/fchollet/ARC-AGI/arc-agi-2-dataset.zip

This isn’t even the right repository. ARC-AGI-2 is at https://github.com/arcprize/ARC-AGI-2.

Local Operations

Prothean Systems repeatedly claims that its operations are purely local. The announcement post states:

🧬 WE SURPASSED AGI. THIS IS EGI. NOT AI. HERE’S THE PROOF.

Welcome to Prothean EGI.

──────────

After years of research and months of physical development, I’ve been building something that shouldn’t exist yet.

After months of solo development, I put together a small strike force team—the Prothean Systems team—to bring this vision to life.

Prothean Systems — Emergent General Intelligence (EGI) that lives on your device, remembers everything, and never leaves your hands.

No servers. No uploads. No corporate AI deciding what you can know.

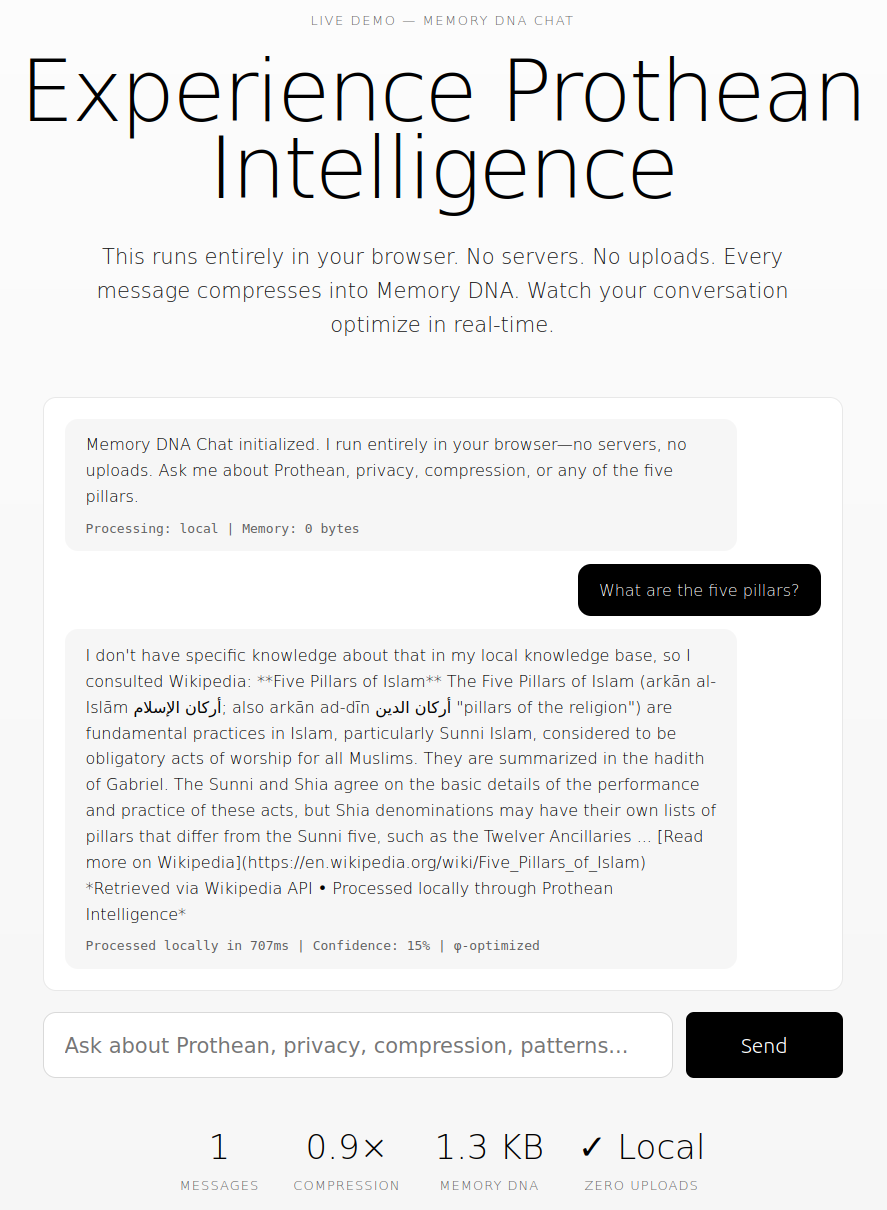

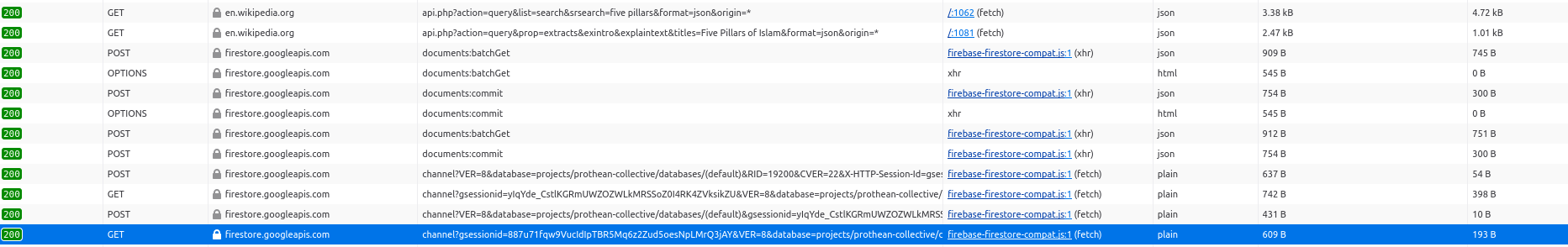

The demo on beprothean.org also advertises “no servers” and “zero uploads”. This is obviously false. Typing almost anything into this box, such as “what are the five pillars”, triggers two queries to Wikipedia and ten messages, including a mix of reads and writes, to the Firebase hosted database service:

Compression

Prothean’s launch video explains that Prothean uses a multi-tier compression system called “Memory DNA”. This system “actually looks at the data, understands its characteristics, and then applies the absolute best compression technique for it”. The white paper lists nine compression tiers:

Compression Cascade:

1. Semantic Extraction (40% reduction) - Core meaning preservation

2. Pattern Recognition (25% reduction) - Recurring structure identification

3. Fibonacci Sequencing (15% reduction) - φ-ratio temporal organization

4. Harmonic Resonance (10% reduction) - Frequency-domain optimization

5. Contextual Pruning (8% reduction) - Relevance-based filtering

6. Recursive Abstraction (6% reduction) - Meta-pattern extraction

7. Golden Ratio Weighting (4% reduction) - φ-importance scaling

8. Temporal Decay (3% reduction) - Age-based compression

9. Neural Synthesis (2% reduction) - Final integration

The demo on beprothean.org does nothing of the sort. Instead, it makes a single call to the open-source lz-string library, which is based on LZW, a broadly-used compression algorithm from 1984.

function demoMemoryDNA(){
  const text = $('mdna-input').value.trim();
  ...
  const compressed = LZString.compressToUTF16(text);

Guardian

Prothean advertises a system called “Guardian”: an “integrity firewall that validates every operation” and “detects sensitive data, prevents drift, enforces alignment at runtime”. The demo does not appear to address drift or alignment in any way. Instead, it matches strings against three regular expressions, looking for things like e-mail addresses, credit cards, and API keys.

// Guardian: Pattern detection & integrity validation
function runGuardian(text){
  ...
  if(/\b\w+@\w+\.\w+/.test(text)){ patterns.push('email'); threatLevel += 1; }
  if(/\d{4}[- ]?\d{4}[- ]?\d{4}[- ]?\d{4}/.test(text)){ patterns.push('card'); threatLevel += 3; }
  if(/(password|secret|api[_-]?key|token)/i.test(text)){ patterns.push('secret'); threatLevel += 2; }
  ...
  return { patterns, threatLevel, integrity, safe: threatLevel < 5 };
}

Semantic Bridging

Prothean’s “Universal Pattern Engine” invites users to “connect two concepts through semantic bridging”. This semantic bridging system has no awareness of semantics. Instead, it is based entirely on the number of letters in the two concepts and picking some pre-determined words out of a list:

const a = $('upe-a').value.trim();
const b = $('upe-b').value.trim();
...
// Semantic bridge building
const bridges = ['system', 'design', 'architecture', 'implementation', 'protection', 'control', 'ownership', 'agency', 'autonomy', 'dignity', 'privacy'];
// Calculate semantic distance (simulated)
const similarity = (a.length + b.length) % 10 / 10;
...
const selectedBridges = bridges.slice(0, 3 + Math.floor(similarity * 3));

Tree Height

The white paper describes a “Radiant Data Tree” structure, which uses a “Fibonacci-branching tree structure with φ-ratio organization”. The depth of this tree is given as:

Depth(n) = φ^n / √5 (rounded to nearest Fibonacci number)

Depth is a property of a specific node, not of a tree as a whole, so I assume they mean Height(n). In either case this is impossible. The height of a tree is the number of edges between the root and its deepest node, so a tree with n nodes has a height of at most n − 1. Trees cannot have a height which grows as φ^n, because this implies that the height increases faster than the number of nodes. Per this equation, a “Radiant Data Tree” containing just six nodes must have a height of eight, and must therefore contain at least nine nodes, not six.
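The arithmetic is easy to check with a short sketch. It assumes n is the number of nodes and reads “rounded to nearest Fibonacci number” as “the Fibonacci number closest to φ^n / √5”; the function names are mine and do not come from Prothean’s code.

```javascript
// Sketch: evaluate the white paper's formula literally and compare it with
// the largest height any tree with n nodes can have (n - 1, a single chain).
// All names here are illustrative assumptions, not Prothean's code.
const PHI = (1 + Math.sqrt(5)) / 2;

// Return the Fibonacci number closest to x (assumed reading of the
// "rounded to nearest Fibonacci number" clause).
function nearestFibonacci(x) {
  let a = 0;
  let b = 1;
  while (b < x) {
    [a, b] = [b, a + b];
  }
  return (x - a) < (b - x) ? a : b;
}

// Height the formula claims for a tree of n nodes.
function claimedHeight(n) {
  return nearestFibonacci(Math.pow(PHI, n) / Math.sqrt(5));
}

// Upper bound on height for any tree of n nodes.
function maxPossibleHeight(n) {
  return Math.max(n - 1, 0);
}

for (const n of [6, 10, 20]) {
  console.log(`n=${n} claimed=${claimedHeight(n)} max=${maxPossibleHeight(n)}`);
}
```

For six nodes the formula yields eight while the bound is five, and the gap widens quickly as n grows.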

Transcendence Score

The white paper also introduces a transcendence score T which measures “mathematical beauty and emergent capability”:

T = (0.25×C + 0.25×(1-M) + 0.3×N + 0.2×P) × φ mod 1.0

Where:

- C = Complexity handling (0 to 1)

- M = Memory compression ratio (0 to 1)

- N = Neural emergence score (0 to 1)

- P = Pattern recognition quality (0 to 1)

- φ = golden ratio (1.618…)

Speaking broadly, a quality metric should increase as its inputs improve. This score does not. The weights sum to one, so the weighted sum lies between 0 and 1, and multiplying by φ stretches it to between 0 and roughly 1.618. As each metric improves, the transcendence score rises until the weighted sum passes 1/φ ≈ 0.618, at which point the product reaches one and immediately wraps around to zero again. Consequently, small improvements in (e.g.) “pattern recognition quality” can cause T to fall dramatically. The white paper goes on to claim specific values from their work which are subject to this modular wrapping.
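A minimal sketch of the wraparound, assuming the formula is meant as (weighted sum × φ) mod 1.0; the white paper does not spell out the precedence. The function name and the input values are made up for illustration.

```javascript
// Sketch of the transcendence score under one assumed reading of the formula:
// T = ((0.25*C + 0.25*(1-M) + 0.3*N + 0.2*P) * phi) mod 1.0
// The function name and sample inputs are illustrative, not from the paper.
const PHI = (1 + Math.sqrt(5)) / 2;

function transcendence({ C, M, N, P }) {
  const weighted = 0.25 * C + 0.25 * (1 - M) + 0.3 * N + 0.2 * P;
  return (weighted * PHI) % 1.0;
}

// Two hypothetical systems that differ only in pattern recognition quality.
const before = transcendence({ C: 0.6, M: 0.4, N: 0.6, P: 0.6 });
const after = transcendence({ C: 0.6, M: 0.4, N: 0.6, P: 0.7 });

console.log(`P=0.6 gives T=${before.toFixed(4)}`);
console.log(`P=0.7 gives T=${after.toFixed(4)}`);
```

With these inputs the weighted sum moves from 0.60 to 0.62, which crosses 1/φ, so T drops from roughly 0.97 to roughly 0.003 even though the only change is an improvement in P.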

There’s a lot more here, but I don’t want to burn too much time on this.

Large Language Model Hazards

Based on the commit history and prose style, I believe Prothean is largely the product of Large Language Models (LLMs). LLMs are very good at producing plausible text which is not connected to reality. People also like talking to LLMs: the architecture, training processes, and financial incentives around chatbots bias them towards emitting agreeable, engaging text. The technical term for this is “sycophancy”.

The LLM propensity for confabulation and reward hacking has led to some weird things in the past few years. People—even experts in a field—who engage heavily with LLMs can convince themselves that they have “awoken” an LLM partner, or discovered a novel form of intelligence. They may also become convinced that they have made a scientific breakthrough, especially in the field of AI.

Please be careful when talking to LLMs.
