NVIDIA DGX Spark - $3,999 MSRP

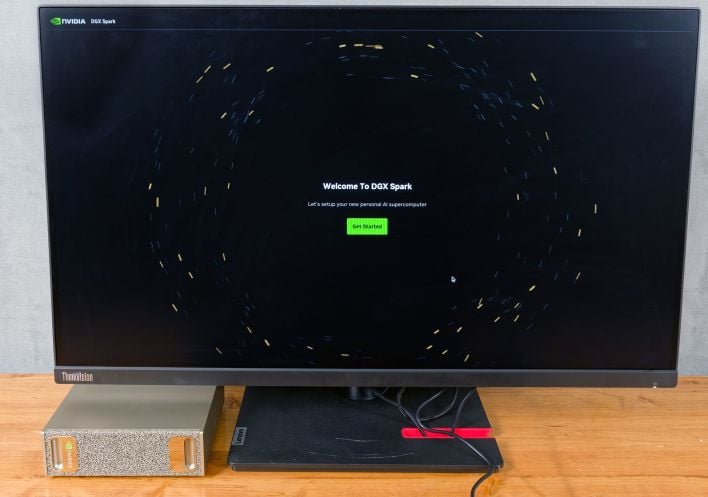

Back at CES in January, NVIDIA announced what was at the time called Project DIGITS and its accompanying GB10 "superchip". Project DIGITS was designed to bring powerful local AI to the desktop with more memory than any consumer GPU can provide. Now named the NVIDIA DGX Spark, the system was initially announced with a price tag of $3,000 and it was expected to ship in May of this year. However, fine-tuning the hardware and software stack and other external global factors pushed availability to today in mid-October, and with a higher price tag to boot. But it is here now, and we have one in the house for testing, so let's meet the NVIDIA DGX Spark...

NVIDIA DGX Spark Specifications

At the heart of the DGX Spark is the Grace Blackwell GB10 SoC. This SoC combines a 20-core Arm64 CPU with a Blackwell-based GPU with 4th-generation RT cores and 5th-generation Tensor cores. As with all Blackwell GPUs, support for the NVFP4 data type lets developers get better performance and a smaller memory footprint than INT8 or even FP8 allows. And all of that is backed by 128GB of unified LPDDR5x system memory offering up to 273 GB/sec of peak bandwidth.

Unlike any x86 system, the full memory pool of 128GB is available to either the CPU or the GPU without any static partitioning. For example, on the HP ZBook Ultra G1a that we reviewed recently, we had to split the memory up manually in the BIOS. If we gave only 32GB to the GPU and saved 96 GB for the CPU, any workload that would spill out of the GPU partition would have to get swapped in and out. In Arm64 land that's not really a concern; all of the memory is available to either the CPU or the GPU part of the chip because they're connected via NVLink-C2C.

When it comes to local AI, that's a really important distinction and an advantage that Arm-based setups seem to have over current x86 devices. It's an advantage that Apple has had in AI since the inception of the Apple Silicon series right on up to the present M4 Max that can be found in MacBooks and the 2025 Mac Studio, and it's something DGX Spark has as well. NVIDIA says that thanks to this architecture and the 128GB pool of memory, models with 200 billion parameters can be loaded into memory, as long as they use the NVFP4 data format.

The GPU itself is also pretty impressive for something so small. The performance is rated at up to 1 PFLOPS (or 1,000 TFLOPS) of NVFP4 AI compute performance. What we haven't been able to nail down is the exact resource counts of the GPU. The nvidia-settings tool on Linux shows us that there are 6,144 CUDA cores, but can't tell us about RT or Tensor core counts. If a single unit is not enough, a pair of DGX Spark units can be linked together to share the load, distributing a model with up to 405B parameters across the two GB10 chips and their memory pools.
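A quick back-of-the-envelope check shows why those parameter counts line up with the memory on hand. This sketch assumes NVFP4 stores each weight as a 4-bit value plus one 8-bit scale factor per 16-weight block (roughly 4.5 bits per parameter). It counts weights only and ignores the KV cache, activations, and the OS, so treat the results as a lower bound rather than NVIDIA's own math. The function name and its defaults are ours, purely for illustration.

```python
# Rough weight-only memory estimate for an NVFP4-quantized model.
# Assumption: 4-bit values plus one 8-bit scale per 16-value block.
# KV cache, activations, and OS overhead are NOT included.

GB = 1e9  # decimal gigabytes


def nvfp4_weight_gb(params: float,
                    value_bits: int = 4,
                    scale_bits: int = 8,
                    block_size: int = 16) -> float:
    """Return the approximate size of the weights in GB."""
    bits_per_param = value_bits + scale_bits / block_size  # 4.5 by default
    return params * bits_per_param / 8 / GB


# Hypothetical scenarios taken from the parameter counts quoted above.
scenarios = [
    ("200B on one Spark", 200e9, 128),
    ("405B on two Sparks", 405e9, 256),
]

for name, params, memory_gb in scenarios:
    need = nvfp4_weight_gb(params)
    print(f"{name}: ~{need:.1f} GB of weights vs {memory_gb} GB of memory")
```

Under those assumptions, a 200B model needs roughly 112.5 GB for its weights and a 405B model roughly 227.8 GB, which fits in 128 GB and 256 GB respectively but leaves only modest headroom for everything else.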

NVIDIA DGX Spark Build and Features

The DGX Spark is quite small. With a footprint of just under 6x6 inches and a height of only two inches (around 70 cubic inches, or just over 1 liter), it should have a potent performance-to-volume ratio. The gold-colored chassis is maybe a tad ostentatious, but the textured grille on both the front and rear of the unit prevents it from being a shiny, reflective beacon on a desk. The only aesthetic issue we find is that the NVIDIA logo is vertical rather than horizontal, and there are no feet on the side of the unit to make it stand up.

All of the connectivity is on the rear. There are four USB-C ports with USB 3.2 at 20 Gbps, a single HDMI 2.1a connector good for 4K at 120 Hz, and a 10 Gbps Ethernet port. Wireless connectivity includes Wi-Fi 7 and Bluetooth 5.4. While there is already 4 TB of storage onboard, that fast USB-C connectivity will allow expanding to external devices without giving up a lot of performance, though one of the USB-C ports will be occupied by the included 240-watt AC adapter.

This angle makes the DGX Spark look mighty small -- and it is both of those things.

NVIDIA DGX Spark vs Jetson AGX Thor Developer Kit

You might be saying to yourself, "Haven't we already done this? Didn't NVIDIA just release another small AI PC?" And the answer to that is a qualified yes. There is indeed a developer kit built around NVIDIA's Jetson AGX Thor, but there are some pretty significant differences. First of all, the SoCs are different, with different requirements. The DGX Spark features NVIDIA's Grace Blackwell GB10 SoC with the Grace CPU architecture while the Jetson AGX Thor uses an SoC with Arm Neoverse CPU cores and a different GPU configuration.

There are software differences as well. The Jetson developer kit runs the JetPack software stack, which is purpose-built for robotics, and it includes an actual Jetson module, the same one that would be deployed in a robot, complete with its own real-time OS and inputs for tons of sensors. Meanwhile, the DGX Spark is meant for general-purpose AI. While you could do things like local LLM development and generative image AI on the Jetson, you'd be leaving its specialized robotics hardware unused. And you'd be limited to 1 TB of internal storage with slower 5 Gbps USB for expansion, compared to the 4 TB on the Spark, not to mention the faster Arm cores and higher thermal and power limits in the DGX Spark.

One other thing about the Jetson AGX Thor Developer Kit is that it's a limited release. When these units sell out, NVIDIA says they won't be making any more of them, because at that point they expect customers will be buying the modules for cloud deployment. On the other hand the DGX Spark is here to stay and will remain available for a long time to come.

The DGX Spark Is A Developer Companion Piece

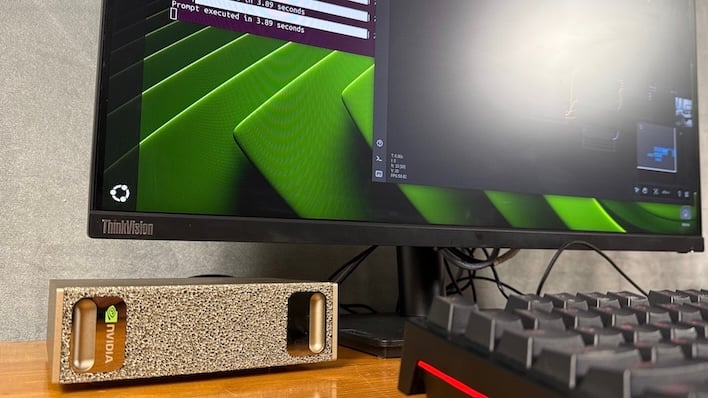

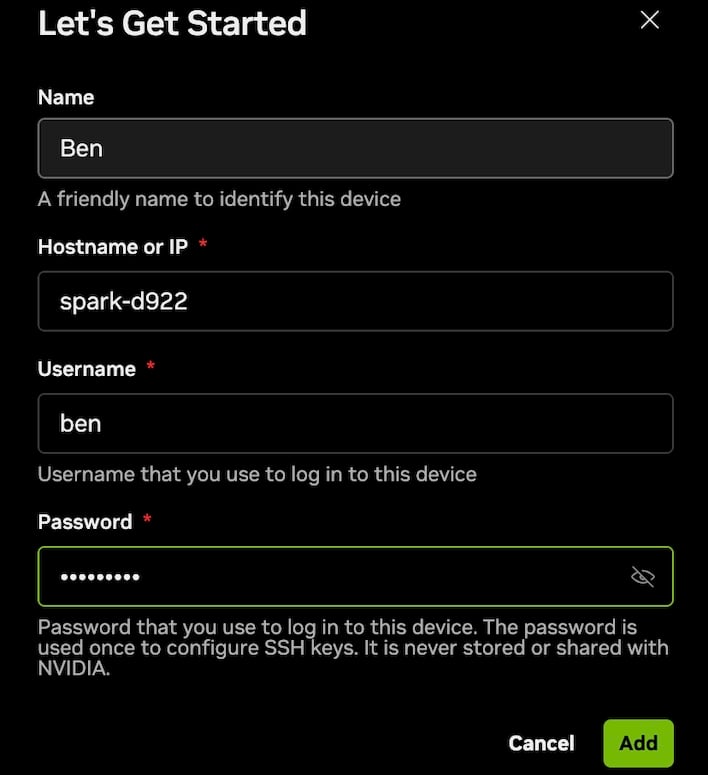

NVIDIA says that the DGX Spark is not really meant to replace a developer's workstation PC, but to work as a companion. It can certainly be configured in desktop mode, operating like a regular workstation. However, it's really designed to be accessed through NVIDIA Sync, so that it isn't wasting memory and other resources drawing a desktop environment and managing web browsers and email clients. That compute power and memory are too precious when they can be used for AI, and connecting over your local network preserves as much of both as possible.

This is a paradigm to which developers are well-accustomed. The idea of using your workstation to control a remote GPU is as true of a cloud-based DGX system as it is of the DGX Spark. The Spark won't be nearly as fast as an RTX Pro 6000 with 96 GB of VRAM, but it doesn't have to be since that datacenter GPU costs $10,000.

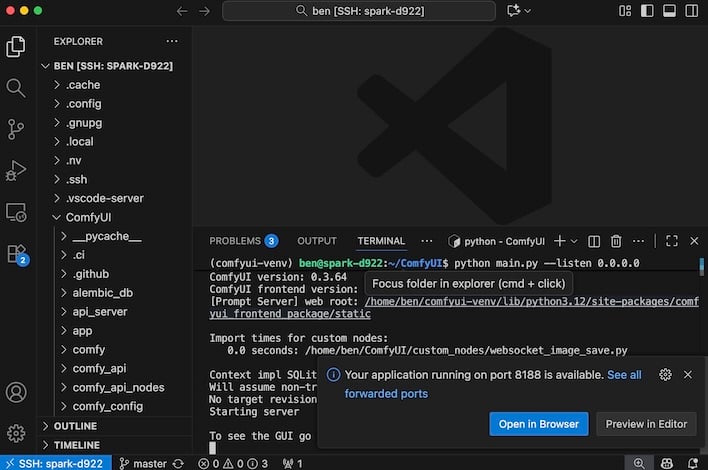

The NVIDIA Sync app is available for Windows, Linux, and Mac. Assuming your development environment (in our case, Visual Studio Code) is already configured, the Sync app can connect to your DGX Spark and use it as a remote workspace over a local 2.5 Gbps Ethernet connection. It's super easy to use, as well. VS Code's Explorer showed us the contents of the Spark's storage, the built-in terminal had us logged into the Spark, and apps running on the Spark were accessible locally.

NVIDIA Sync hooks your integrated dev environment to the Spark with ease

For example, we cloned the ComfyUI git repository to the Spark, set up a virtual environment with Python and the virtualenv utility, installed our dependencies from requirements.txt in the project, and then loaded ComfyUI in the browser on a Mac Studio. Once the Spark is set up, you never have to touch it.
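For readers who want to reproduce that ComfyUI setup, the steps can be sketched as a small script run from the terminal on the Spark. This is a minimal sketch, not our exact commands: the repository URL, the `.venv` directory name, and the helper function names are assumptions, and it defaults to a dry run that only prints what it would execute. We also assume a Linux-style virtualenv layout with executables under `bin/`.

```python
import shlex
import subprocess
from pathlib import Path

# Assumed upstream location of the ComfyUI repository.
REPO_URL = "https://github.com/comfyanonymous/ComfyUI.git"


def comfyui_setup_steps(workdir: Path) -> list[tuple[Path, list[str]]]:
    """Return (working directory, command) pairs for the setup."""
    repo = workdir / "ComfyUI"
    venv = repo / ".venv"  # hypothetical virtual environment location
    pip = str(venv / "bin" / "pip")
    python = str(venv / "bin" / "python")
    return [
        (workdir, ["git", "clone", REPO_URL, str(repo)]),   # clone the project
        (repo, ["virtualenv", str(venv)]),                  # create the venv
        (repo, [pip, "install", "-r", "requirements.txt"]), # install dependencies
        (repo, [python, "main.py"]),                        # start ComfyUI
    ]


def run_steps(steps, dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cwd, cmd in steps:
        print(f"[{cwd}] $ {shlex.join(cmd)}")
        if not dry_run:
            subprocess.run(cmd, cwd=cwd, check=True)


if __name__ == "__main__":
    run_steps(comfyui_setup_steps(Path.home()), dry_run=True)
```

Switching `dry_run` to `False` would actually run the commands, which requires git and virtualenv to be installed and a network connection for the clone and the dependency install.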

Of course, standard SSH connections are also supported, but considering how seamlessly the NVIDIA Sync app worked for us, we just wouldn't bother. Beyond Visual Studio Code, Cursor and NVIDIA AI Workbench are also supported. All three developer tools are available for all three major desktop platforms, and on Linux, Debian-based (like Ubuntu), Red Hat-based, and Arch-based distributions are supported.

NVIDIA Build Makes Remote Development Easy

One of the best things about the DGX Spark is the software. The hardware is very cool, don't get us wrong, but NVIDIA made sure that it's usable not just for developers, but for everyone. If you can follow a wizard to set up the system out of the box, and then connect to it with NVIDIA Sync, and then paste some commands into a terminal, you too can be an AI developer.

There are a lot of "playbooks" available on NVIDIA Build's Spark hub as well. From a step-by-step guide to setting up ComfyUI for generating content locally to building and deploying a multi-agent chatbot, NVIDIA has you covered. These playbooks are tailor-made for the Spark hardware, and everything contained here is available publicly from day one.

That's not to say it's entirely finished yet, though. NVIDIA has let us know that there's more in the pipeline. While delays and price changes aren't fun, it seems that NVIDIA's software engineering teams have worked pretty hard to polish the portal. Be sure to check the next page, because that's where we're going to take some of these playbooks for a spin and we can finally start talking about some performance figures.
