After kicking off my swimming journey with an AI coach powered by OpenAI's o3-pro, I've now logged three swims: two at 1000m each and the latest at 1200m. This post dives into how this collaboration is evolving, how we've adapted our workflow, and how the robot and I are thinking about building an iPhone app together.

Initially, my AI coach generated structured workouts—carefully choreographed swim drills based on Terry Laughlin’s "Total Immersion." But I quickly realized that I prefer the flexibility of choosing what to focus on in the pool, such as “swimming tall,” “pressing the buoy,” and “side skating,” rather than following prescribed sets. It’s not like I’m a complete beginner swimmer, it’s just that I’m not a good one, and I’m stubborn and want to feel like I can build some basic endurance before I spend all my time on drills… not going to lie, this probably has something to do with swimming next to masters or competitive-teen athletes in the local pool. While the robot may not be cursed with an ego… I can’t yet say the same for me.

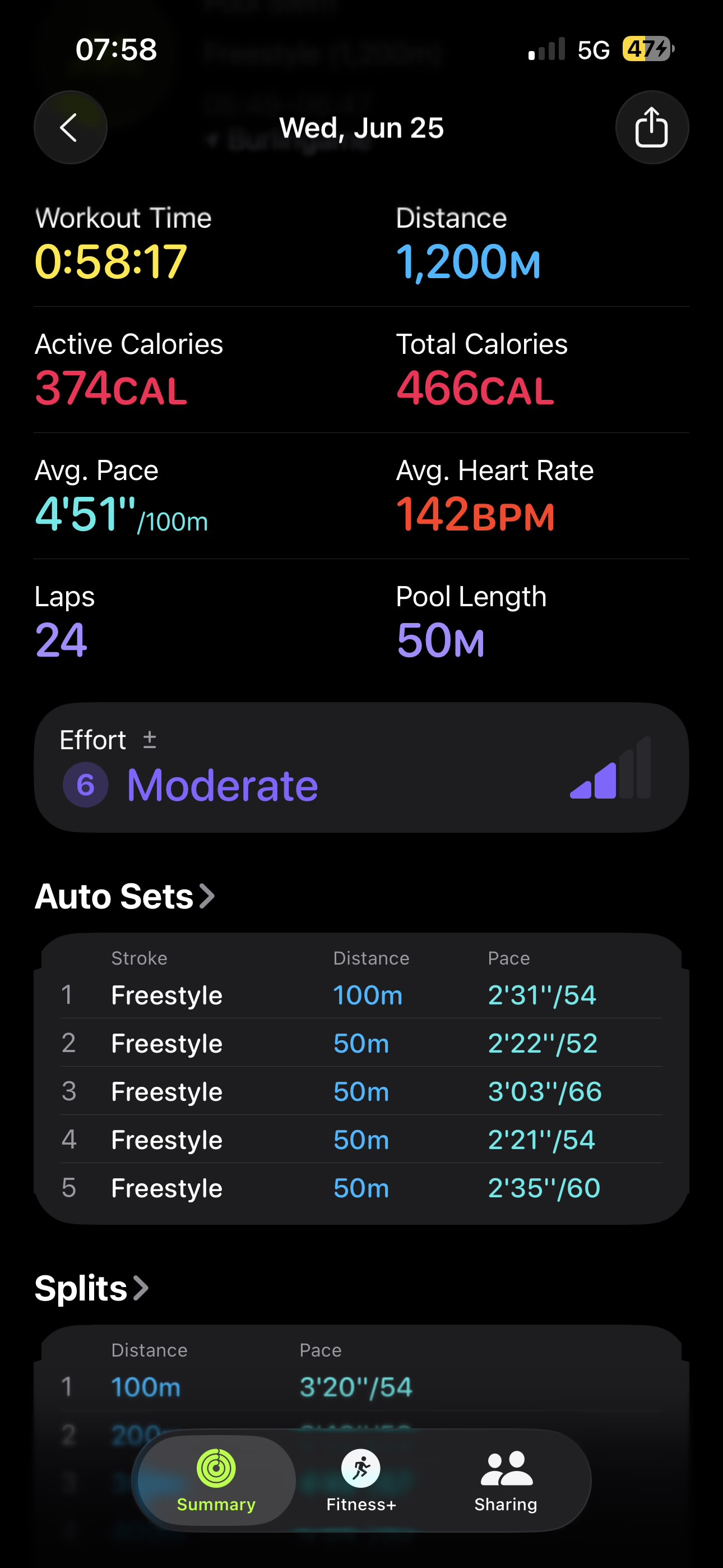

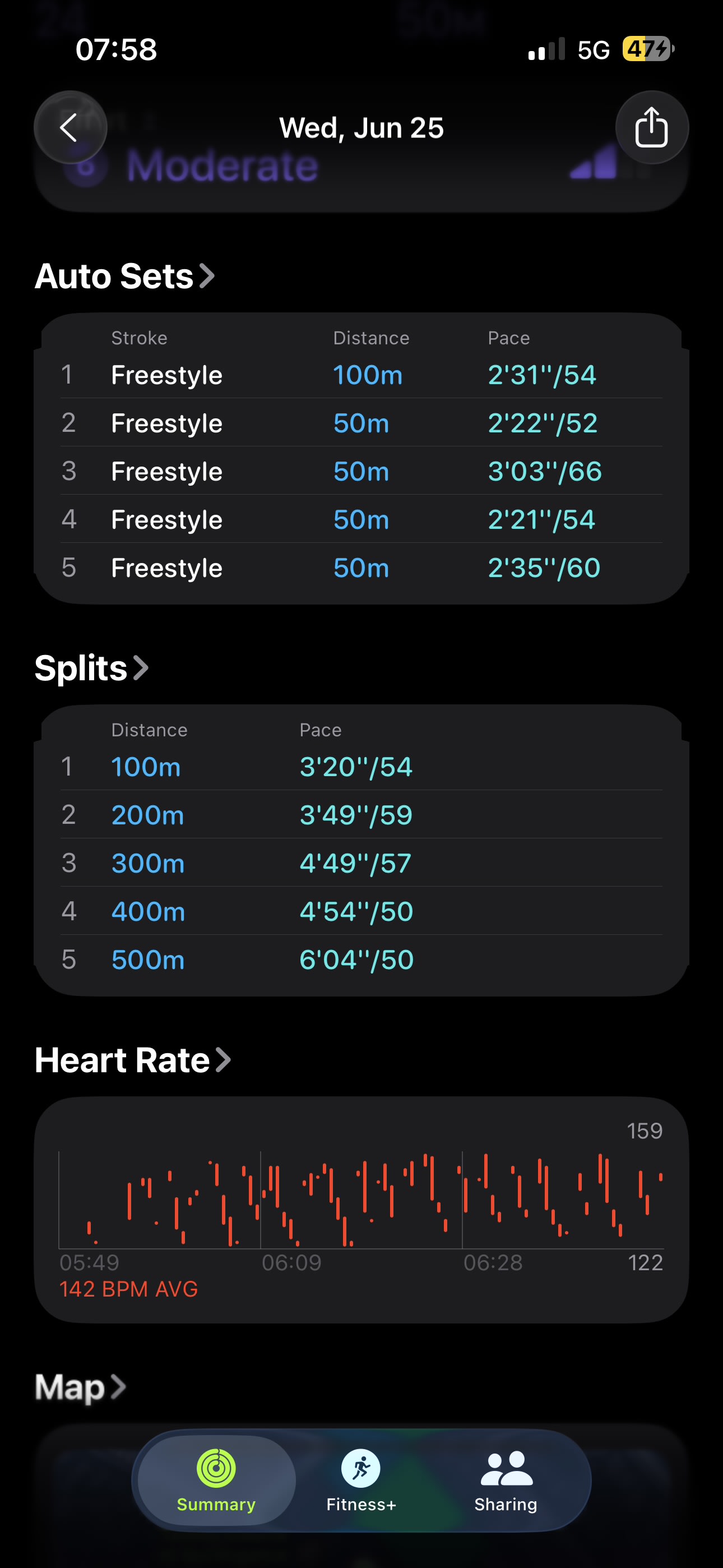

Rather than balking, the robot adapted beautifully. Instead of delivering rigid (or annoyed) instructions, it functions like an insightful observer, providing sharp, detailed feedback based solely on screenshots of my Apple Watch swim data. It analyzes my strokes per lap, splits, and heart rate, offering actionable advice that syncs effortlessly with my intuitive swimming style, and what I’ve told it I care about on any particular day.

This new workflow is surprisingly smooth. After each session, I upload a few screenshots of my swim metrics, and the robot responds with supportive, nuanced, hyper-personalized feedback:

Stroke Efficiency: It quickly identified improvements in my stroke count (reduced from about 64 strokes per 50 meters to 52-59). This validated that my intentional focus—pressing my buoy and swimming tall—was paying dividends.

Heart Rate Management: My AI coach confirmed that an average heart rate around 142 bpm was solidly aerobic, which is what I want to see in early-stage endurance building without excessive strain.

Pacing Strategy: However, it gently highlighted my pacing inconsistency—an initial 3’20”/100m ballooning to a 6’04” crawl (I’m not outpacing any sea turtles just yet). Spotting my wall hang-time issue mid-swim, it cleverly suggested introducing “mini negative-split games” to smooth things out.
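To put a number on that pacing drift, here is a minimal Python sketch of the arithmetic. It is my own illustration, not what the robot ran: the function names (`pace_to_seconds`, `pace_drift`, `is_negative_split`) are hypothetical, and it assumes paces are written as minutes'seconds" per 100m, the way they appear above.

```python
import re

def pace_to_seconds(pace: str) -> int:
    """Convert a pace such as 3'20" (minutes'seconds") into total seconds."""
    # Accept straight or curly marks, since screenshots and notes mix them.
    match = re.fullmatch(r"\s*(\d+)['’](\d{1,2})[\"”]?\s*", pace)
    if match is None:
        raise ValueError(f"unrecognized pace: {pace!r}")
    return int(match.group(1)) * 60 + int(match.group(2))

def pace_drift(first: str, last: str) -> float:
    """Fractional slowdown from the first pace to the last (0.5 = 50% slower)."""
    start, end = pace_to_seconds(first), pace_to_seconds(last)
    return (end - start) / start

def is_negative_split(splits: list[int]) -> bool:
    """True if the second half of the swim took less time than the first half.

    `splits` holds per-interval times in seconds; with an odd number of
    intervals the middle one is ignored.
    """
    half = len(splits) // 2
    if half == 0:
        return False
    return sum(splits[-half:]) < sum(splits[:half])

drift = pace_drift("3'20\"", "6'04\"")
print(f"{drift:.0%} slower")  # 82% slower
```

Going from 200 seconds to 364 seconds per 100m is an 82% slowdown, which is the gap the negative-split games are meant to close. A session would count as a negative split once `is_negative_split` returns `True` for its per-interval times.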

Receiving insights this precise from an AI coach feels genuinely supportive: more partner than instructor, a nuanced analyst rather than a prescriptive drill sergeant. (Though, I’m a little wary of these “games” the robot has in mind for me. At least it knows “not drowning” is a primary goal.)

This interactive collaboration sparked an idea: is there a better way than just sharing screenshots? All the raw data exists in Apple Health. Could an app automatically fetch swim sessions, run them through my AI model, and deliver personalized coaching insights, right on my iPhone?

Here’s the prompt I gave o3-pro Deep Research:

Im trying to use an LLM (or multiple) as a coach / trainer / physician’s assistant.

On the one hand, I have documents that guide my training (goals, plans, details, etc. all crafted for me). I want my health details (sleep, HRV, training load, individual workouts) pulled out of HealthKit, and stored in a data store locally on my phone so that they can influence my training. For example, the app should be able to pass to an LLM (local or cloud, though let’s say cloud for now and stick to openAI APIs) the documents that express my training plan and goals, as well as recent context (HRV, how I slept last night), as well as recent workouts (all the details), so it can customize my plan for my next workout. It should also be able to keep all of its data for visualization, etc.

I want it to keep its own copy of everything that’s in HealthKit and just sync changes, because at some point I may want to keep a synchronized copy in the cloud as well.

This sounds like something that ought to be done in Swift vs pythonista, though storing that local copy of HealthKit in a way that’s accessible to other apps (or, again, can easily sync a copy elsewhere) so that I can use python or something else to do analysis would also be helpful.

I’ve never written anything in swift or built anything for iOS. If you design the app for me, break it down into nice small modules, and assume that I’m going to use Cursor as my AI-guided IDE to take your starting point and build it into a real app. Also, since I’ve never done this, a guide that explains how to use cursor and Xcode together while deploying to an iPhone, etc would be handy.

But before we get too complicated, let’s say the MVP includes:

- providing a single document that details my training plan

- importing my health context (sleep, training load, HRV, rhr, overnight temperature, etc.)

- importing my last several workouts

- providing all of these to a single LLM (eg o3 pro)

- showing me the output from that LLM (I.e. what should I do today based on the info)

Nice to haves:

- being able to add text comments to any workout, which are stored persistently in our HealthKit clone database, such that these comments are also provided to the LLM whenever the rest of the workout is uploaded to an LLM.

This project taps into several powerful tools:

SwiftUI and Alamofire: SwiftUI for the app’s user interface, Alamofire for the HTTP networking behind the data integration.

Cursor IDE: To streamline coding with direct AI assistance.

OpenAI API: Powering the analysis engine with deep, contextual intelligence.

With my AI coach guiding the way, we began scaffolding this concept, laying the foundational SwiftUI code structure. The idea is simple: minimal friction between finishing a swim session and getting insightful feedback.

We’re crafting a minimum viable product (MVP) that allows:

Automatic synchronization with Apple Health swim data.

Local JSON storage (backed up via iCloud) of swim sessions.

AI-powered feedback generation, accessible directly on-device.

Markdown support for flexible note-taking and plan edits directly in-app.
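As a rough illustration of the storage and feedback pieces, here is a small Python sketch (the app itself would be Swift; Python is just quicker for showing the data flow). Everything named here is an assumption for illustration: the `upsert_session` and `build_prompt` helpers, the `swims.json` file, and the session fields (`id`, `date`, `distance_m`, `avg_hr_bpm`) are not HealthKit's schema or the app's real design, and the dates are made up. The sketch stops at building the prompt text and does not call any API.

```python
import json
import tempfile
from pathlib import Path

def upsert_session(store: Path, session: dict) -> None:
    """Insert or replace one swim session in a JSON file, keyed by its id.

    Re-syncing a session that was already stored overwrites it, so the
    local copy can be updated by syncing only the changes.
    """
    sessions = json.loads(store.read_text()) if store.exists() else {}
    sessions[session["id"]] = session
    store.write_text(json.dumps(sessions, indent=2))

def build_prompt(plan_markdown: str, store: Path, last_n: int = 3) -> str:
    """Combine the training plan with the most recent sessions into one prompt."""
    sessions = json.loads(store.read_text()) if store.exists() else {}
    # ISO dates (YYYY-MM-DD) sort correctly as plain strings.
    recent = sorted(sessions.values(), key=lambda s: s["date"], reverse=True)[:last_n]
    return "\n\n".join([
        "## Training plan", plan_markdown,
        "## Recent swims (JSON)", json.dumps(recent, indent=2),
        "## Request",
        "Review these swims against the plan and suggest a focus for my next session.",
    ])

with tempfile.TemporaryDirectory() as tmp:
    store = Path(tmp) / "swims.json"
    # Hypothetical sessions; the dates are placeholders.
    upsert_session(store, {"id": "swim-1", "date": "2025-01-01", "distance_m": 1000})
    upsert_session(store, {"id": "swim-1", "date": "2025-01-01", "distance_m": 1000,
                           "avg_hr_bpm": 142})  # same id, so this replaces the first copy
    upsert_session(store, {"id": "swim-2", "date": "2025-01-03", "distance_m": 1200})
    stored_count = len(json.loads(store.read_text()))
    prompt = build_prompt("Focus: swim tall, press the buoy.", store)

print(stored_count)
print(prompt)
```

Keying sessions by id is what makes "just sync changes" cheap in this sketch: a re-imported workout replaces its earlier copy instead of piling up duplicates. The resulting prompt string is what would be sent to the model along with any notes.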

Though still early, the potential is clear: an app that blends human intuition and AI precision, crafting genuinely individualized swim coaching accessible to anyone with an iPhone and Apple Watch.

You can see the entire conversation with GPT here.

It's exhilarating to watch my swimming improve through a collaboration with an AI that's genuinely responsive to my preferences. While traditional training plans might work for many, having a coach that pivots gracefully as my needs change—offering personalized strategies like negative splits or reinforcing specific form cues—feels a lot better for me.

This evolving interplay between my choices and the robot’s adaptive insights isn’t just about swimming smarter; it’s about training with deeper understanding and a personalized touch that conventional methods rarely match. The best swim coaches in the world might be smarter than the robot, but those folks are coaching Olympians, not me.

As we push forward with app development, future posts will detail how this integration shapes my swimming journey. Will seamless access to AI-driven analytics accelerate my progress? How will the continuous feedback loop evolve as the AI becomes more attuned to my swimming style? Will this turn into a vibe coding disaster? Will I just stick with screenshots? (Honestly, that works pretty well.)

Stay tuned as we explore these questions, refining the “system,” maybe even an app, and my technique, stroke by stroke.

I’d love your comments, suggestions, questions or feedback, so please drop them below!
