I don't care much for peer review, and for a lot of reasons. Peer review is done for free, for for-profit companies with insane profit margins that seem to operate mostly as web hosting for static websites and PDFs. For example, here is a paper I co-wrote with colleagues about a statistical model that predicts COVID-19 transmission between neighborhoods in England. This paper will never change. The content will never change, and the graphs and plots, which are only images and not some kind of data dashboard like a streamlit.io app, will never change. Yet it currently costs 2390 euros to publish an article in this journal. This is a service that other places such as GitHub, arXiv, or JOSS (see below) offer for free. But this Substack isn’t about how journals may be organizing some kind of price-fixing monopoly. This post is about how peer review enables internet troll-like behavior and what we can do about it.

When you submit a scientific paper, it is typically because you have labored rather intensely for months to arrive at a result you think is relevant for others to read about. You have put so much work into the paper that you might have overlooked something that would be obvious to an outside observer. This is because you develop a kind of tunnel vision by being so close to the project. Peer review is great in this situation because it can act as a check against any kind of overfitting your brain may have done on the problem space you are exploring. Or perhaps you overlooked some relevant body of literature that you are simply unaware of. So you send your paper off hoping to receive constructive feedback. You wait weeks, maybe months, to get feedback from others, and when it finally arrives you get comments like:

Machine learning always produces trash. [source]

This perspective is very old and boring. [source]

You [addressing the authors] shouldn’t be allowed to submit work like this. [source: anonymous reviewer from when I was guest editor for Physical Review Physics Education Research]

This young lady is lucky to have been mentored by the leading men in the field [source]

What exactly are you supposed to do with comments like that? How is that constructive feedback? How is that supposed to help you treat your self-imposed tunnel vision? And how does it make you feel to have your work labeled “trash”? Should we refer to quantum mechanics as an “old and boring” perspective? And what are you supposed to do when someone wishes to gatekeep you from their community? This isn’t just me noticing this: AAAS has reported on it, Ars Technica has reported on it, and people extend these arguments to the peer review process for grants as well. I believe that this is happening at least in part because people are hiding behind the anonymity of the review process, or because they simply believe that it is okay to treat others poorly as long as it is on the internet. That is, like some internet forum commentators, some peer reviewers are internet trolls.

In any case, I don’t want to dwell too much on the terrible things people say to each other. Instead, I want to highlight a great peer review experience I had when writing a paper for the Journal of Open Source Software.

JOSS was started to address a different issue in science altogether: computational scientists often build complex software that typically doesn’t count as a metric in any job evaluation. On their website they say:

In a perfect world we'd rather papers about software weren't necessary but we recognize that for most researchers, papers and not software are the currency of academic research and that citations are required for a good career.

JOSS says they are “Committed to publishing quality research software with zero article processing charges or subscription fees.” So you can probably see already why I am a fan of this journal. In addition to all of their commitments to a computational world where journal articles are free to submit and published open access, they also have developed a new way of evaluating papers through peer review.

If you are unfamiliar with the traditional peer review process, it works basically like this:

I do some research, write up a draft, and agree with all my coauthors that it’s a good draft.

I find a journal, hopefully a “high impact” one, that is willing to review my paper draft and I submit it through their web portal.

Typically this portal will ask you to recommend some reviewers and also to name people you don’t want to review your paper, for whatever reason.

Maybe you will need to sign a “no conflict of interest” document.

Then you click a big “submit” button and go about your day.

Assuming that you have submitted a paper following the format criteria, this paper is sent out to reviewers selected by the associate editor in charge of your paper, who is expected to follow up to make sure the reviews are done and then sent back to you. Typically, you then wait 3 weeks to 3 months to receive these reviews. Meanwhile, when a reviewer receives a paper review request:

They are first asked if they are willing to review, and if they say yes, they are usually given a tight deadline (10 days to 2 weeks, maybe less) to give a review back.

The reviewers are expected to write all their thoughts about the paper, look for inconsistencies, and generally follow the guidelines for reviewers that are made available (for example).

They submit these reviews back to the journal, typically through some web portal.

And this process is repeated until the editor and sometimes the reviewers are satisfied that the paper is publishable. Sometimes you go through successive iterations with new reviewers being added to the mix. Sometimes you only do it once and the paper gets published.

If you are thinking at this point, “this process sounds like it could be done via snail mail,” then you are correct. This process evolved long before computers were available to everyone. And I think JOSS has enabled a giant step forward in peer review that is somewhat ignored currently by the rest of science.

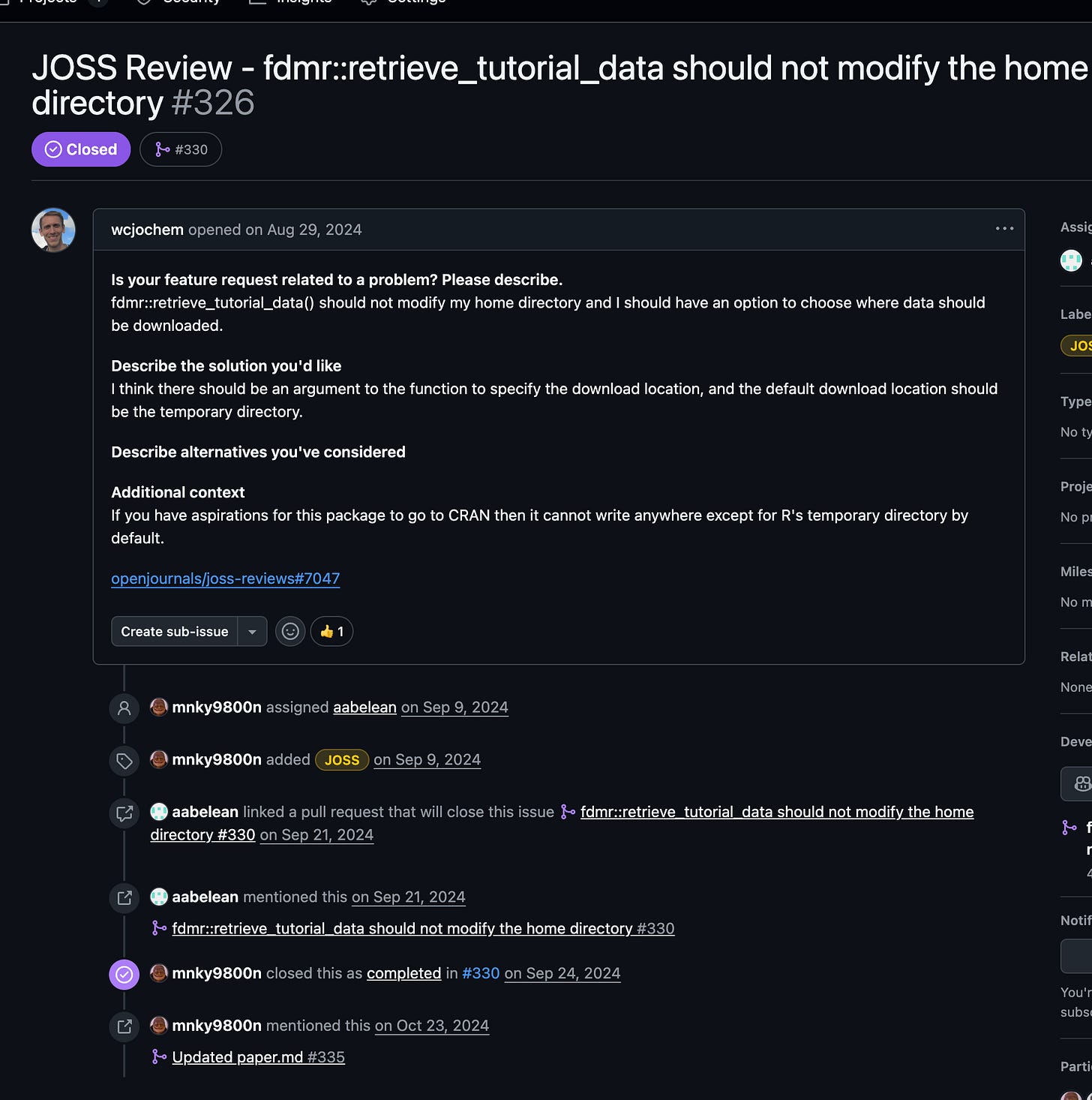

JOSS uses the GitHub issue tracker and pull request system to write all reviews for the submitted paper describing the software that was developed. A reviewer will open an issue in the repo like this one here, where the reviewer suggested that the library shouldn’t be changing the home directory whenever it feels like. Then one of the coauthors can address the reviewer’s comment either by explaining why they won’t make the suggested change, or by simply making the changes and then submitting a pull request. If you click the link you can see the screenshot below:

Here you can see that the reviewer comment is logged, and you can see me, mnky9800n, assign the issue to another team member, aabelean. He then writes some code to fix this issue and creates a pull request mentioning this issue. Ultimately it is closed and fixed in the library. This system allows for the full exposure of the review process, including all of the changes that were made, and it is public: anyone can go and look at these issues and see how the JOSS peer review system made the 4D-Modeller package better. With this fine-grained approach, everyone can see everything that is happening within the paper, and it also focuses the reviewers on the review. I don’t think that wcjochem would ever reply to this work by telling us that “Bayesian Hierarchical Models always produce trash”. A big reason is that the system wcjochem is using to provide feedback is designed to target his feedback at the specific solution being presented.

Will this system work for all types of papers? Maybe. I think that these kinds of systems can be explored in publishing. For example, Copernicus journals like The Cryosphere also have a public commenting and reviewing system. You can see here a paper by a colleague of mine where you can view the full chain of reviews, including each iteration.

I am not sure that we currently have an optimum way of organizing peer review and scientific publication. I think that this kind of interaction could even be studied and optimized somewhat. But what I do know is that if you are writing stuff like “Machine learning always produces trash” and patting yourself on the back for engaging in “intellectual discussion” you should feel bad about yourself. And if that makes you angry, good.

In any case here is a list of ways to improve the current status quo:

Engage with journals that have public review processes

Review papers non-anonymously

Engage society publishers like Copernicus

Question why you are insecure instead of trashing some poor PhD student’s hard work

Be good to other people. We don’t get that long to live on planet Earth.
