AI-powered chatbots and documentation assistants have transformed how users interact with technical content. But here's the thing: an AI assistant is only as good as the documentation it draws on.

While AI tools like Biel.ai leverage sophisticated retrieval-augmented generation (RAG) to understand and serve your content, the foundation of excellent AI assistance lies in well-structured, purposefully written documentation.

This guide provides actionable strategies to optimize your technical documentation for AI agents while maintaining exceptional human readability. Remember: you're not writing for robots—you're writing for humans, with AI as a powerful intermediary.

Why documentation structure matters for AI

Large Language Models (LLMs) excel at pattern recognition and context understanding, but they struggle with ambiguous, poorly organized, or inconsistent content. When documentation follows clear structural principles, LLM-powered assistants can:

- Retrieve relevant information faster: Well-organized content reduces search time and improves accuracy

- Provide more precise answers: Clear sections and focused content help LLMs understand context boundaries

- Maintain consistency: Structured patterns help LLMs learn your documentation's style and approach

Think of LLMs as extremely capable but literal research assistants. The clearer your instructions (documentation), the better the outcomes.

1. Essential information must exist: AI can't invent what's missing

Being minimalist with documentation is valuable, but essential user tasks must be documented. AI assistants can only retrieve and present information that exists. While they might attempt to fill gaps by generating plausible-sounding information, this will often be incorrect for your specific product.

Why this matters

When users ask "How do I cancel my subscription?" or "How do I delete my account?", the AI assistant needs actual documentation to reference. If this information doesn't exist, the AI assistant might either:

- Admit it can't find the information (frustrating for users)

- Attempt to provide a generic answer based on training data (potentially incorrect for your specific product)

Neither outcome serves your users well.

Implementation approach

Basic user journeys are often overlooked: Common tasks like account cancellation, subscription changes, data export, and password reset frequently get missed in documentation. These fundamental user paths are essential but often considered "obvious" by product teams.

Think of user paths specific to your product: Beyond the basics, consider the unique workflows that matter to your users—whether that's API key rotation, webhook configuration, or custom integrations.
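One way to audit this is to compare a checklist of essential tasks against your page titles. The sketch below is a minimal illustration: the task list, the sample titles, and the keyword matching are all assumptions made for the example, not part of any tool.

```python
# Hypothetical checklist: essential task -> keywords expected in a page title.
ESSENTIAL_TASKS = {
    "cancel subscription": ["cancel", "subscription"],
    "delete account": ["delete", "account"],
    "export data": ["export"],
    "reset password": ["reset", "password"],
}


def find_undocumented_tasks(page_titles, tasks=ESSENTIAL_TASKS):
    """Return tasks for which no page title contains all expected keywords."""
    lowered = [title.lower() for title in page_titles]
    missing = []
    for task, keywords in tasks.items():
        covered = any(all(k in title for k in keywords) for title in lowered)
        if not covered:
            missing.append(task)
    return missing


# Assumed page titles, for illustration only.
titles = [
    "Reset your password",
    "Export your data as CSV",
    "Cancel or pause a subscription",
]
print(find_undocumented_tasks(titles))  # ['delete account']
```

Keyword matching on titles is crude. It is meant as a prompt for a human review, not a replacement for one.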

2. Every page is page one: Provide complete context

Unlike human readers who browse through multiple pages, LLM-powered assistants often process individual pages or sections without the broader navigation context, depending on the implementation's context window and chunking strategy. Each page must stand on its own.

Why this matters

When a user asks "How do I configure authentication?", the AI assistant might retrieve a page deep in your security section. If that page assumes knowledge from previous pages, the answer becomes incomplete or confusing.

Implementation strategies

Include essential context at the top of each page:

Reference related concepts explicitly:

Avoid orphaned references:
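Phrases such as "as described earlier" point at content that a retrieved chunk may not include. The following sketch scans a page for such phrases. The phrase list is an assumption and will need tuning for your own writing style.

```python
import re

# Assumed phrases that often point at content the reader (or a retrieved
# chunk) cannot see. Extend the list for your own docs.
ORPHAN_PATTERNS = [
    r"\bas (?:mentioned|described|explained|shown) (?:above|earlier|previously)\b",
    r"\bthe previous (?:section|page|step)\b",
    r"\bas before\b",
]
ORPHAN_RE = re.compile("|".join(ORPHAN_PATTERNS), re.IGNORECASE)


def find_orphaned_references(text):
    """Return (line_number, matched_phrase) pairs for context-dependent phrases."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        for match in ORPHAN_RE.finditer(line):
            hits.append((number, match.group(0)))
    return hits


page = """Configure the client as described earlier.
Set the AUTH_URL environment variable to your identity provider.
Then repeat the token step from the previous page."""
print(find_orphaned_references(page))
# [(1, 'as described earlier'), (3, 'the previous page')]
```

Each hit is a candidate for replacing the vague pointer with an explicit link or a one-sentence restatement of the missing context.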

3. One clear purpose per section

AI works best with focused, single-purpose content. Each section should answer one specific question or solve one particular problem. Mixing multiple objectives in the same section confuses AI retrieval and leads to unclear responses.

Why this matters for AI

When users ask "How do I set up authentication?", LLM-based systems need to find content that directly addresses that question. If your content mixes unrelated topics—like authentication setup, billing procedures, and database configuration—in the same section, LLMs struggle to provide focused answers and may include irrelevant information.

Implementation approach

- One question per section: Each section should answer a single user question

- Separate concerns: Keep procedures and reference material in separate sections or pages; this also makes content easier to reuse

- Clear section purposes: Make it obvious what each section accomplishes

Example: Before and after

Before (mixed unrelated topics):

After (focused sections):
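As a rough illustration of the difference, the sketch below models a page as a mapping from heading to body text and flags sections that touch more than one topic. The topic keywords and both sample pages are invented for the example.

```python
# Assumed topic keywords; a real audit would use your own product vocabulary.
TOPICS = {
    "authentication": ["authentication", "login", "token"],
    "billing": ["billing", "invoice", "payment"],
    "database": ["database", "schema", "migration"],
}


def topics_in(text):
    """Return the sorted topics whose keywords appear in the text."""
    lowered = text.lower()
    return sorted(t for t, words in TOPICS.items() if any(w in lowered for w in words))


def mixed_sections(sections):
    """Return headings of sections that touch more than one topic."""
    return [heading for heading, body in sections.items() if len(topics_in(body)) > 1]


# Before: one section covering three unrelated topics.
before = {
    "Setup": "Enable token authentication, add a payment method, "
             "then run the database migration.",
}
# After: one focused section per user question.
after = {
    "Set up authentication": "Enable token authentication for your workspace.",
    "Add a payment method": "Open billing settings and add a payment method.",
    "Run the database migration": "Apply the latest schema migration.",
}
print(mixed_sections(before))  # ['Setup']
print(mixed_sections(after))   # []
```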

4. Less is more: Audit content strategically

Quality trumps quantity. AI performs better with focused, essential content than with comprehensive but diluted documentation.

Why this matters for AI

Too much content creates noise that confuses LLM-based retrieval systems. When documentation includes outdated information, duplicate content, or irrelevant details, LLMs struggle to identify the most accurate and current answers. Clean, focused documentation improves LLM precision and reduces conflicting information.

Implementation approach

Review what's being crawled:

- Remove outdated tutorials and deprecated feature guides

- Consolidate duplicate information across different sections

- Archive internal-only documentation that confuses external users

- Eliminate placeholder pages with minimal content

- Consider exclusion patterns: See Exclude URLs
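To preview which pages an exclusion list would drop, you can test URLs against glob-style patterns locally. This is a sketch only: the patterns and URLs are hypothetical, and the pattern syntax your crawler accepts may differ from the shell-style globs used here.

```python
from fnmatch import fnmatchcase

# Hypothetical exclusion patterns; the syntax your crawler accepts may differ.
EXCLUDE_PATTERNS = [
    "*/internal/*",
    "*/deprecated/*",
    "*/v1/*",
]


def should_index(url, patterns=EXCLUDE_PATTERNS):
    """Return True when the URL matches none of the exclusion patterns."""
    return not any(fnmatchcase(url, pattern) for pattern in patterns)


urls = [
    "https://docs.example.com/guides/quickstart/",
    "https://docs.example.com/internal/runbook/",
    "https://docs.example.com/v1/webhooks/",
]
print([u for u in urls if should_index(u)])
# ['https://docs.example.com/guides/quickstart/']
```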

5. Learn from user questions to improve content

User questions—whether asked to AI assistants or support teams—reveal what's missing or unclear in your documentation. Instead of focusing on AI metrics, use these insights to continuously improve your content.

Why this matters for AI

User questions provide direct feedback on where AI assistant responses fall short. When users repeatedly ask about the same topics, it indicates either missing content or unclear organization that LLMs cannot navigate effectively. This feedback loop helps you identify and fix gaps that improve both AI assistant performance and user satisfaction.

Implementation approach

Common patterns to watch for:

- Repeated questions about the same topic suggest missing or hard-to-find information

- Questions spanning multiple sections indicate content organization issues

- Requests for examples point to gaps in practical guidance

- Questions about "how to get started" suggest unclear onboarding paths

Simple improvement cycle:

- Collect questions: From support tickets, user feedback, or AI interaction logs

- Identify patterns: What topics come up repeatedly?

- Improve content: Add missing information, clarify confusing sections, or reorganize content

- Validate changes: Check if question volume decreases on those topics

This approach improves documentation for all users, regardless of how they access it—through AI assistants, search, or direct browsing.
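The "identify patterns" step can start as a simple keyword tally over collected questions. The sketch below assumes a hand-written topic-to-keyword map and invented sample questions. A real log would need better matching, but the shape of the loop is the same.

```python
from collections import Counter

# Assumed topic keywords; tune these to your product's vocabulary.
TOPIC_KEYWORDS = {
    "authentication": ["login", "api key", "token"],
    "billing": ["invoice", "refund", "subscription"],
    "onboarding": ["get started", "getting started", "first steps"],
}


def count_topics(questions):
    """Count how many questions mention each topic at least once."""
    counts = Counter()
    for question in questions:
        lowered = question.lower()
        for topic, keywords in TOPIC_KEYWORDS.items():
            if any(keyword in lowered for keyword in keywords):
                counts[topic] += 1
    return counts


# Sample questions invented for illustration.
questions = [
    "How do I rotate my API key?",
    "Where can I download an invoice?",
    "My token expired, what now?",
    "How do I get started with webhooks?",
]
for topic, count in count_topics(questions).most_common():
    print(topic, count)
```

Running the same tally before and after a documentation change gives a rough signal for the "validate changes" step.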

6. Complete code examples with full context

LLMs excel at understanding complete, runnable code examples. Partial snippets without imports, configuration, or file paths create confusion.

Why this matters for AI

Incomplete code examples force LLMs to make assumptions or provide generic responses instead of specific, actionable guidance. When LLMs can't see the full context—like which packages you're using or how files are structured—they resort to generic advice that might not work for your specific implementation.

Implementation approach

Include all necessary context:

Provide complete file structure context:
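As an illustration of the principle, the snippet below names the file it belongs to, includes its imports, and shows where configuration comes from. The file path, environment variable, and URL are placeholders, not a real API.

```python
# File: scripts/list_projects.py  (hypothetical path)
import os
import urllib.request

# Placeholder base URL; the environment variable name below is also an assumption.
API_BASE_URL = "https://api.example.com/v1"


def build_request(api_key, path="/projects"):
    """Build (but do not send) an authenticated GET request."""
    return urllib.request.Request(
        API_BASE_URL + path,
        headers={"Authorization": f"Bearer {api_key}"},
        method="GET",
    )


if __name__ == "__main__":
    request = build_request(os.environ.get("EXAMPLE_API_KEY", "demo-key"))
    print(request.get_method(), request.full_url)
```

A reader, or an assistant quoting the snippet, can see which module provides each name and where the key is expected to come from, without consulting another page.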

7. Maintain consistent terminology with a glossary

Consistent terminology helps LLMs understand relationships between concepts and provide accurate cross-references.

Why this matters for AI

When the same concept is called "API key," "access token," and "credentials" across different pages, LLMs cannot effectively link related information or provide comprehensive answers. This leads to fragmented responses where users get partial information instead of complete guidance that connects related concepts.

Implementation approach

Spell out acronyms on first use: Use the full form first, followed by the acronym in parentheses. For example: "Application Programming Interface (API)" or "Retrieval-Augmented Generation (RAG)." This provides context for both LLMs and human readers.

Define terms clearly and consistently:

Use terms consistently throughout documentation:
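A glossary can double as a lint rule. The sketch below flags discouraged variants of a preferred term. The glossary content is hypothetical, and it assumes the variants really are synonyms in your product; if "access token" and "API key" are distinct concepts for you, they should not share an entry.

```python
import re

# Hypothetical glossary: preferred term -> variants to avoid.
GLOSSARY = {
    "API key": ["access token", "credentials"],
}


def find_term_variants(text, glossary=GLOSSARY):
    """Return (variant, preferred_term) pairs for discouraged variants in the text."""
    findings = []
    for preferred, variants in glossary.items():
        for variant in variants:
            if re.search(rf"\b{re.escape(variant)}\b", text, re.IGNORECASE):
                findings.append((variant, preferred))
    return findings


page = "Paste your access token into the settings page, then save your API key."
print(find_term_variants(page))  # [('access token', 'API key')]
```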

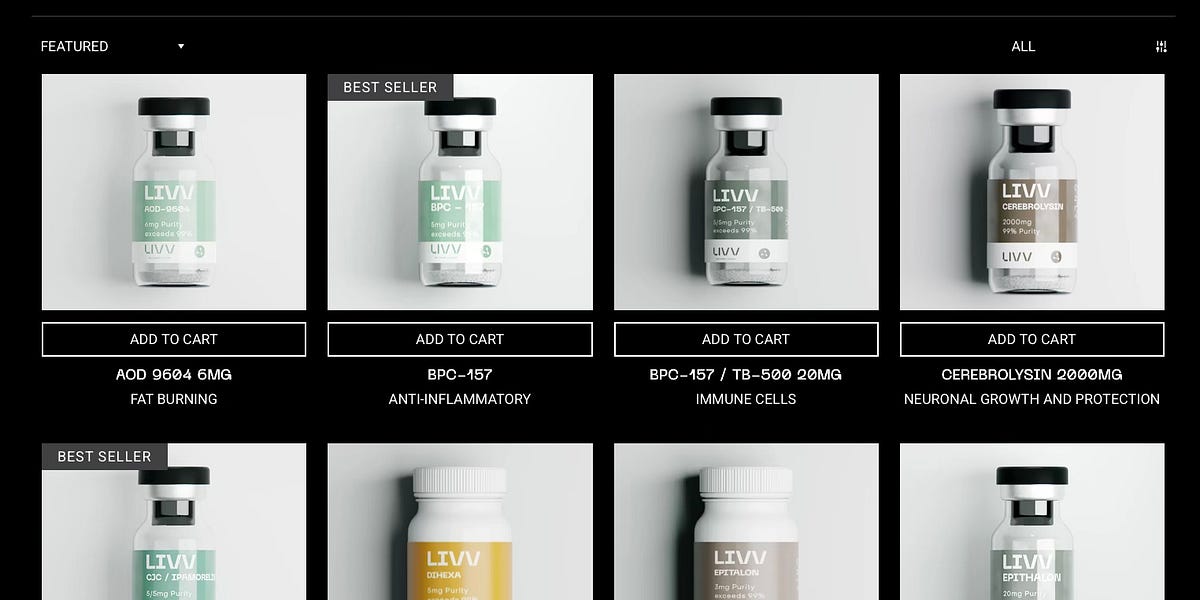

8. Describe images and interactive elements

Many AI assistants can't see images, videos, or interact with your product UI, though multimodal capabilities are emerging. Descriptive text ensures AI assistants can reference visual content effectively. This is especially critical for UI applications where users frequently ask about interface elements and workflows.

Why this matters for AI

When users ask "Where is the export button?" and your documentation only shows a screenshot, AI assistants cannot provide helpful guidance. This creates frustrating dead-ends where users need visual information that AI cannot access. Descriptive text transforms these visual elements into actionable guidance.

Implementation approach

For UI screenshots:

For interactive flows:

9. Tag pages with meaningful metadata

Good metadata helps AI understand the content's purpose, scope, and relationships. This isn't just SEO—it's semantic clarity.

Why this matters for AI

Metadata provides context that helps LLMs understand content scope and relationships before processing the main text. Clear titles, descriptions, and categorization help LLM-based systems select the most relevant content and understand how different pages relate to each other, leading to more accurate and contextual responses.

Implementation approach

How you define metadata depends on your documentation platform. Many platforms generate HTML meta tags from frontmatter, while others require you to configure HTML meta tags manually.

Example metadata elements:

Descriptive titles and headings

Instead of generic:

- "Configuration" → "WebSocket connection configuration"

- "Getting Started" → "Setting up your first Acme integration"

- "Advanced" → "Advanced caching strategies for high-traffic applications"
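A small check can catch pages with missing metadata or generic titles before they are indexed. The sketch below assumes flat `key: value` frontmatter between `---` lines and a hypothetical list of required fields. It is deliberately simplified and is not a YAML parser.

```python
# Hypothetical required fields; adapt to what your platform's frontmatter supports.
REQUIRED_FIELDS = ("title", "description")
GENERIC_TITLES = {"configuration", "getting started", "advanced", "overview"}


def parse_frontmatter(source):
    """Parse flat `key: value` frontmatter between leading '---' lines.

    Simplified on purpose: no nesting, lists, or quoting rules. Use a real
    YAML parser for production content.
    """
    lines = source.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}
    fields = {}
    for line in lines[1:]:
        if line.strip() == "---":
            break
        key, separator, value = line.partition(":")
        if separator:
            fields[key.strip()] = value.strip()
    return fields


def metadata_problems(source):
    """Return human-readable problems found in a page's frontmatter."""
    fields = parse_frontmatter(source)
    problems = [f"missing {name}" for name in REQUIRED_FIELDS if not fields.get(name)]
    if fields.get("title", "").lower() in GENERIC_TITLES:
        problems.append("generic title")
    return problems


page = """---
title: Configuration
---
# Configuration
"""
print(metadata_problems(page))  # ['missing description', 'generic title']
```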

10. Customize the AI prompt for your specific context

Biel.ai provides a robust base prompt optimized for technical documentation, but every product and audience is unique. The custom prompt acts as long-term memory—it's used in every response the chatbot provides. Customize it to reflect your specific domain, common user scenarios, and communication style.

Why this matters for AI

Generic AI assistant responses often miss product-specific context and user needs. A customized prompt ensures LLMs understand your product's unique terminology, common user scenarios, and appropriate communication style. This leads to more relevant, accurate responses that feel native to your product ecosystem.

Implementation approach

For detailed configuration options, see the Biel.ai custom prompt documentation.

Example customizations:

For a developer-focused API:

For a low-code platform:
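To make the contrast between the two audiences concrete, the sketch below assembles a prompt from a product name, an audience, and a few style rules. Everything in it is invented for illustration: the helper, the product names, and the wording are assumptions, not Biel.ai's base prompt or recommended text.

```python
def build_custom_prompt(product, audience, style_rules):
    """Assemble a custom prompt from a product name, audience, and style rules."""
    rules = "\n".join(f"- {rule}" for rule in style_rules)
    return (
        f"You are the documentation assistant for {product}.\n"
        f"Your audience: {audience}.\n"
        f"Follow these rules:\n{rules}"
    )


# Both prompts are invented examples, not recommended wording.
developer_prompt = build_custom_prompt(
    product="Acme API",
    audience="backend developers integrating over REST",
    style_rules=["Include code samples when relevant.", "Name the exact endpoint."],
)
low_code_prompt = build_custom_prompt(
    product="Acme Builder",
    audience="business users with no programming background",
    style_rules=["Avoid jargon.", "Describe each step by its on-screen label."],
)
print(developer_prompt)
```

Keeping the audience and style rules as data makes it easier to review prompt changes the same way you review documentation changes.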

11. Multiple products, separate chatbots

If you maintain documentation for multiple products, consider dedicated AI assistants for each. This prevents cross-product confusion and allows for specialized prompts and content optimization.

Why this matters for AI

Mixed-product documentation confuses LLMs, leading to responses that blend concepts from different products or provide irrelevant solutions. Separate AI assistants ensure users get focused, product-specific answers without cross-contamination from unrelated features or workflows.

Implementation approach

Clear indicators for multiple chatbots:

- Products serve different user types (developers vs. end-users)

- Significantly different terminology or concepts

- Separate branding or go-to-market strategies

- Different support teams or documentation workflows

Example structure:

- docs.acme.com/api/ → API-focused chatbot with developer prompt

- help.acme.com/app/ → End-user focused chatbot with simplified language

- enterprise.acme.com/ → Enterprise-specific chatbot with advanced features
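The example structure above amounts to a mapping from documentation host to assistant. A minimal sketch of that mapping follows. The project identifiers are hypothetical, and how a page actually selects its chatbot depends on your platform.

```python
from urllib.parse import urlparse

# Hypothetical mapping from documentation host to assistant project ID.
PROJECT_BY_HOST = {
    "docs.acme.com": "acme-api",
    "help.acme.com": "acme-app",
    "enterprise.acme.com": "acme-enterprise",
}


def project_for(url):
    """Return the assistant project for a page URL, or None if unmapped."""
    return PROJECT_BY_HOST.get(urlparse(url).hostname)


print(project_for("https://docs.acme.com/api/authentication/"))  # acme-api
print(project_for("https://blog.acme.com/news/"))                # None
```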

Implementation with Biel.ai

With Biel.ai, you can create multiple projects for different documentation sets:

Remember that AI is a powerful tool to enhance human interaction with your documentation, not replace thoughtful content creation. The same principles that made documentation SEO-friendly years ago apply to AI optimization: write for humans first, optimize for machines second.

Guiding principles

- Clarity benefits everyone: Well-structured content helps both AI and human readers

- Context is crucial: Good documentation provides context whether accessed by AI or directly by users

- Quality over quantity: Focus on essential, accurate information rather than comprehensive coverage

- User-first approach: Solve real user problems, and AI will naturally provide better assistance

Conclusion

Optimizing documentation for AI agents isn't about gaming algorithms—it's about creating clear, purposeful content that serves users effectively through any interface. By following these strategies, you'll build documentation that excels in both human-readable clarity and AI-assisted discovery.

The investment in structured, contextual documentation pays dividends across your entire user experience: faster onboarding, reduced support burden, and more confident users who can find answers quickly and accurately.

Start with one or two of these optimizations, measure the impact through your AI assistant's performance and user feedback, and gradually implement additional strategies.
