Hello! We recently hit 2,000 subscribers, so thank you very much for the support! 🎉

Today, let’s dive into the world of testing and explore Property-Based Testing. In this post, we will discuss traditional tests, explore their limitations, and see how Property-Based Testing can help us improve our testing strategy by focusing on the fundamental concept of properties.

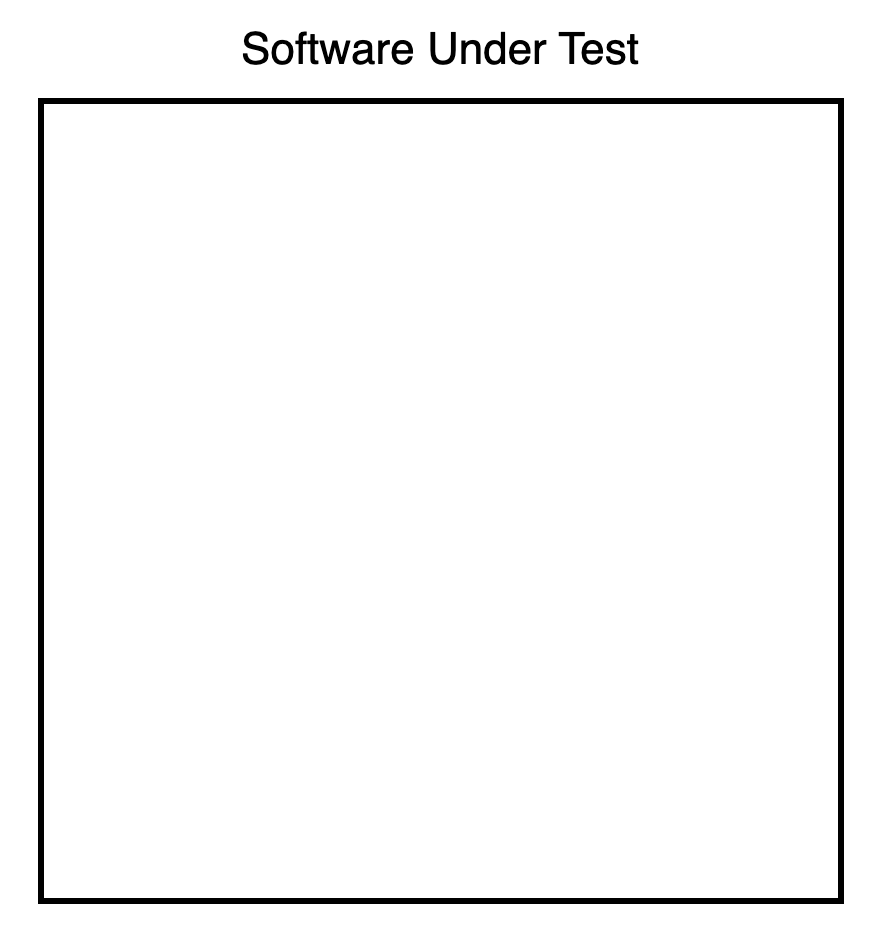

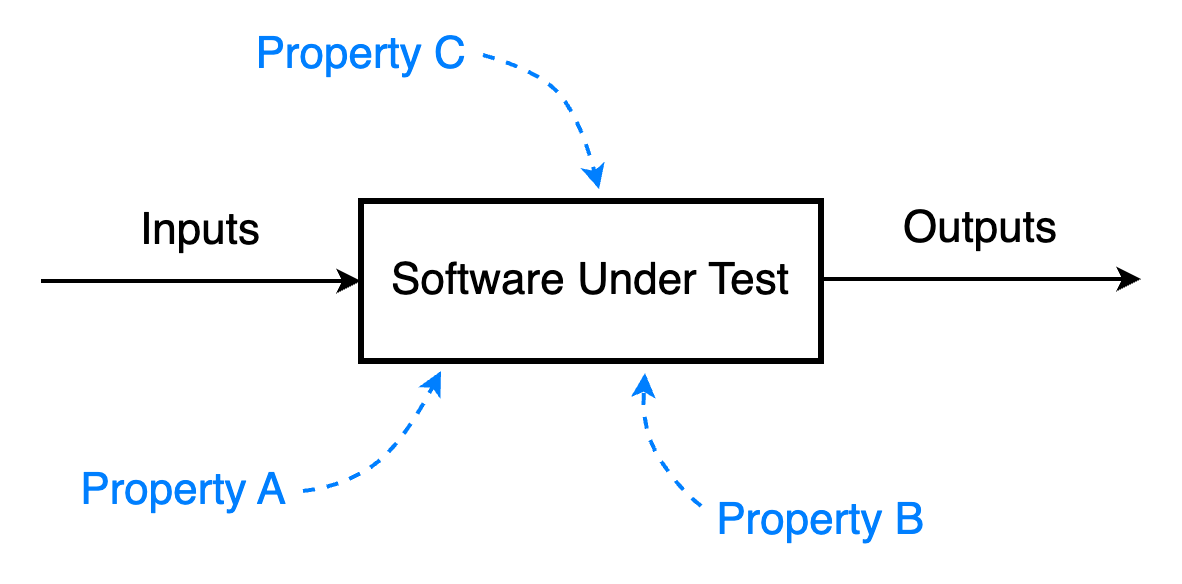

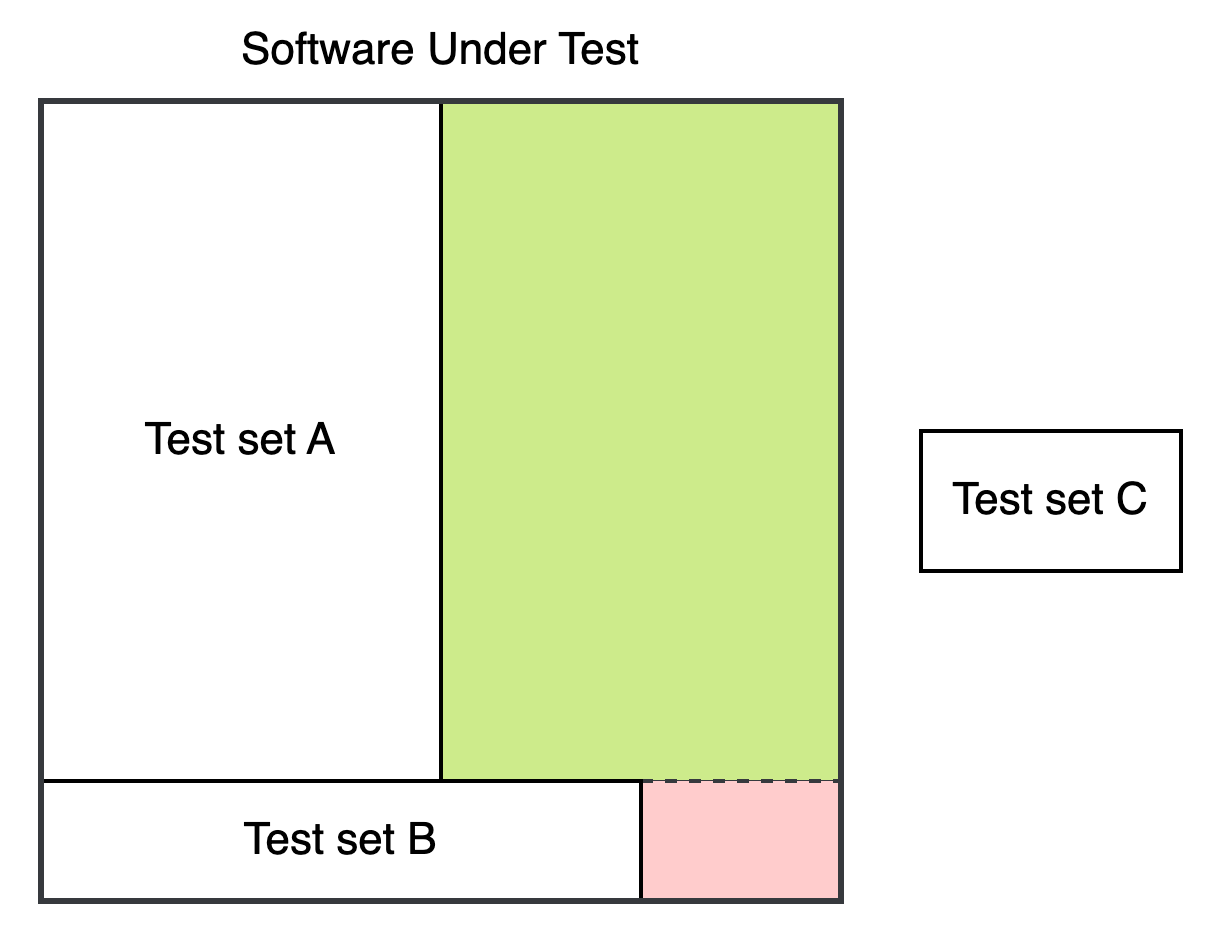

Imagine this white box contains all the behaviors implemented in a piece of software:

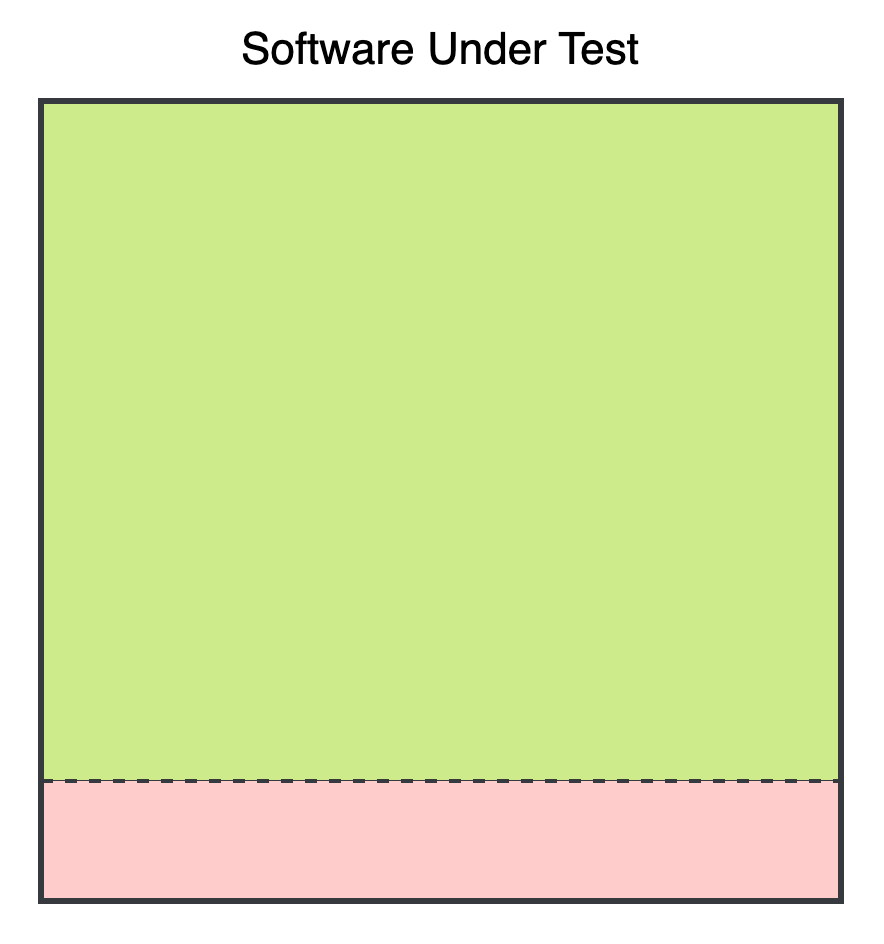

Of course, like any codebase, our software contains bugs (imagine how boring bug-free software would be!). Let’s make the green part represent all the valid behaviors and the red part the invalid ones (the bugs):

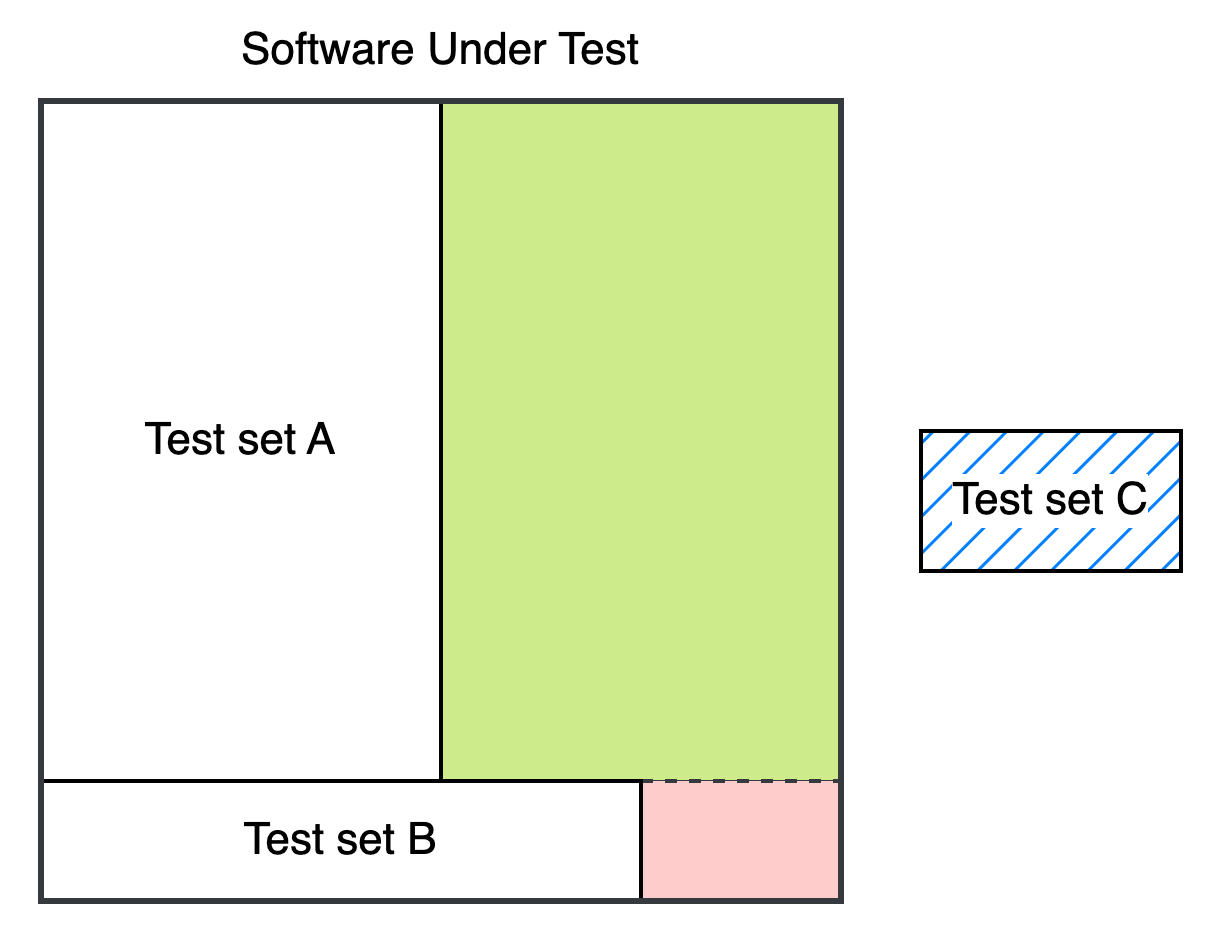

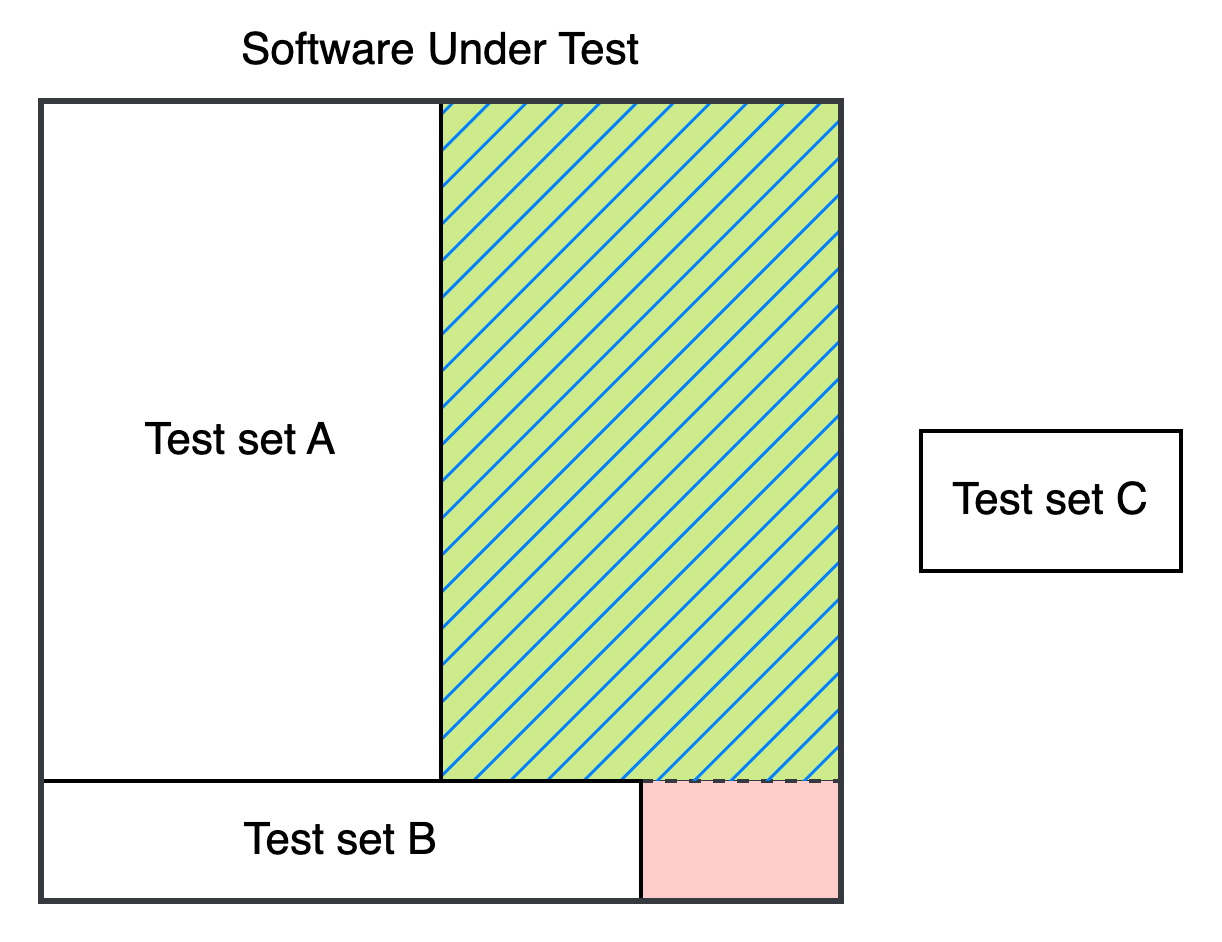

Now, let’s write some tests to validate our code. For the sake of visualizations, we will partition the tests written into three sets: A, B, and C—all of them will be passing:

Test set A: The true positive tests that correctly verify valid behaviors exhibited by the software. The remaining visible green part represents valid behaviors not covered by tests.

Test set B: The false negative tests, which should catch bugs but don’t because they are flawed. The remaining visible red part represents invalid behaviors not covered by tests.

Test set C: The invalid tests that check for behaviors that… don’t even exist. They typically arise from misunderstandings of how a feature is supposed to work, and because the tests themselves are flawed, they fail to reveal this misalignment.

Let’s break down the four main problems in this example, sorted in increasing order of severity:

1. Invalid tests covering nonexistent behavior:

These tests are fundamentally misaligned with reality. They cause unnecessary maintenance overhead and can mislead developers if tests serve as living documentation (see Unit Tests As Documentation).

2. Test coverage gaps:

For now, this is okay. Yet, if something changes later in the uncovered area, regressions may go unnoticed.

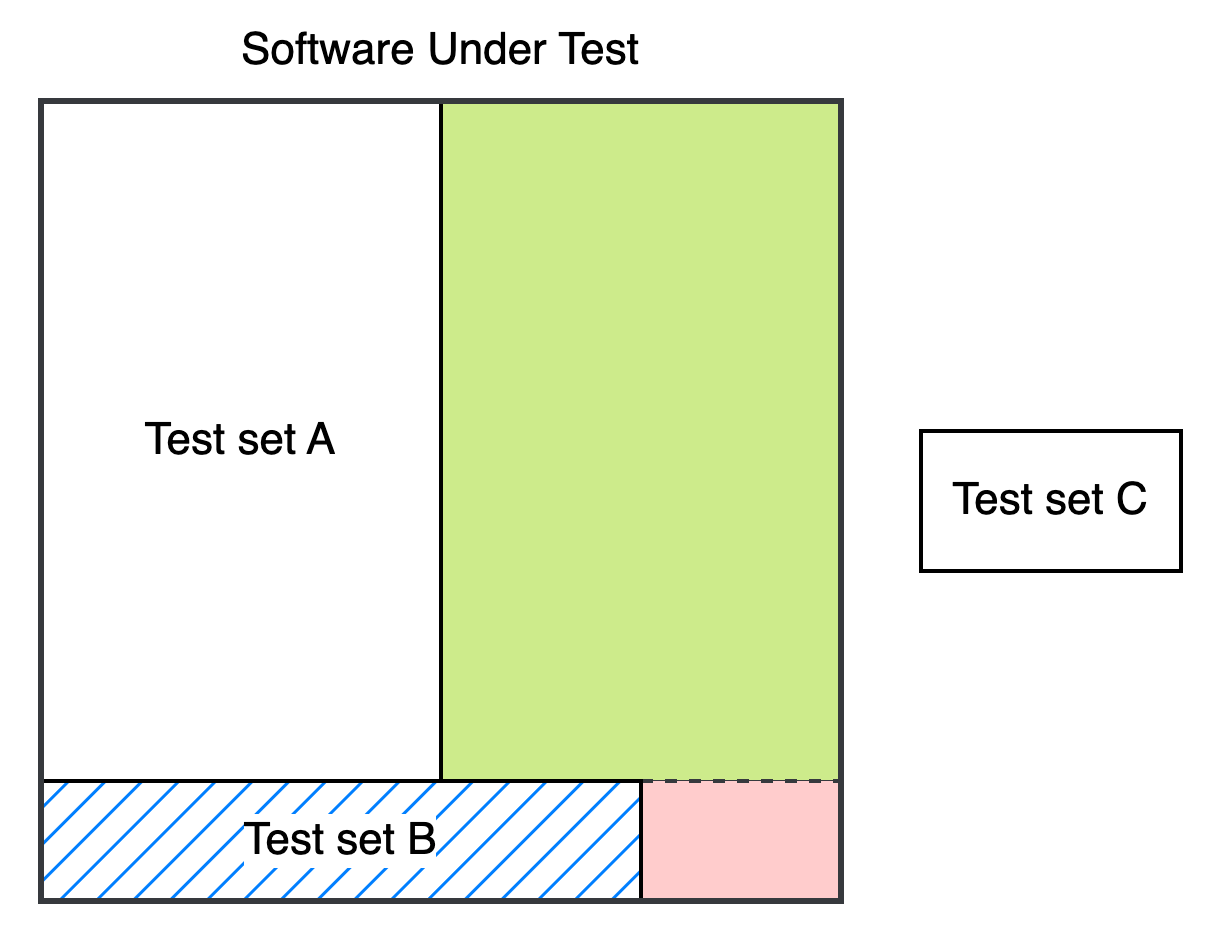

3. Missing tests lead to undetected bugs:

The consequences start to escalate. Here, bugs exist, but as there’s no test for them, they remain invisible.

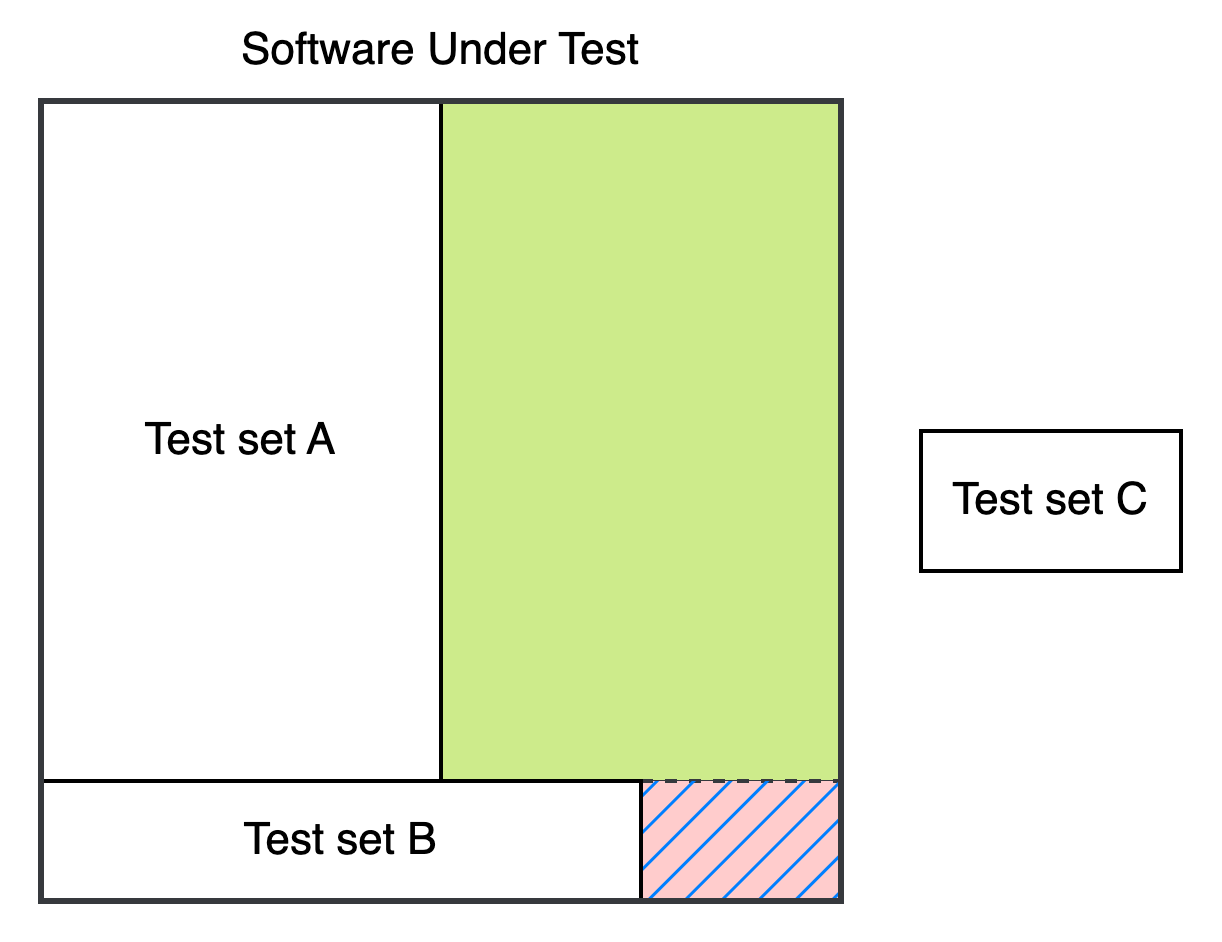

4. Flawed tests hiding bugs:

Here, the tests should detect bugs but they don’t. This is arguably worse than missing tests (problem 3) because it gives us a false sense of security: we think our software works as intended when it doesn’t.

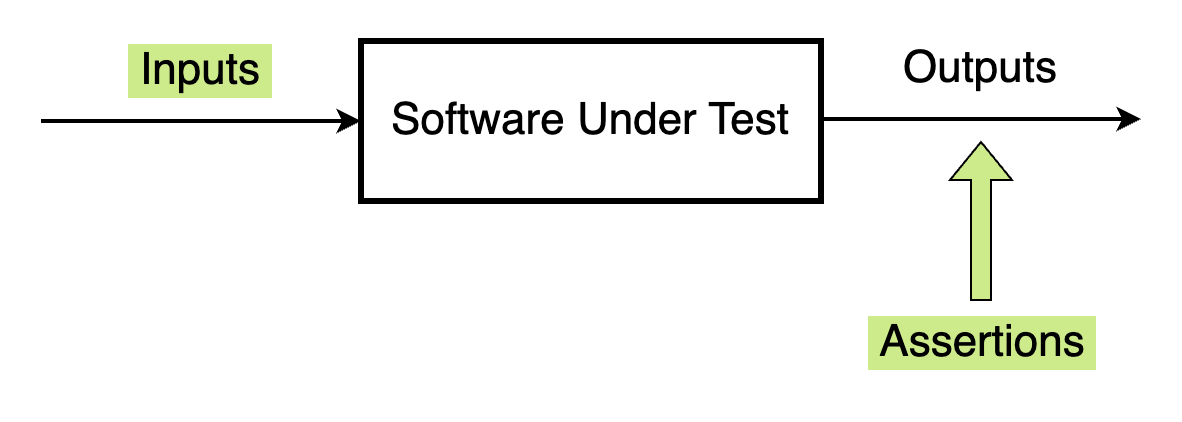

In this example, we rely on traditional tests. Whether we’re talking about unit tests, integration tests, or something else, traditional tests follow a common structure:

Define a starting state.

Apply specific inputs.

Assert that the output matches expectations.
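As a minimal sketch of that structure, here is what such a test could look like in Python. The `Cart` class is hypothetical and exists only for illustration; it isn’t taken from any real library.

```python
class Cart:
    """Hypothetical shopping cart, used only to illustrate the test structure."""

    def __init__(self):
        self.items = {}  # name -> (price, quantity)

    def add(self, name, price, quantity=1):
        _, current_quantity = self.items.get(name, (price, 0))
        self.items[name] = (price, current_quantity + quantity)

    def total(self):
        return sum(price * quantity for price, quantity in self.items.values())


def test_total_with_two_items():
    # 1. Define a starting state.
    cart = Cart()
    # 2. Apply specific inputs.
    cart.add("book", 12, quantity=2)
    cart.add("pen", 3)
    # 3. Assert that the output matches expectations.
    assert cart.total() == 27


test_total_with_two_items()
```

The test only tells us something about this one scenario: two items, these prices, these quantities.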

This method has been proven effective for decades, but it has limitations, including:

Tests are manually designed, so some scenarios may be forgotten. Indeed, since traditional tests are written by humans, they are limited by our own assumptions and understanding of the software. Therefore, we can miss edge cases or unexpected situations.

Passing tests don’t guarantee a bug-free system. A passing test only confirms that the tested scenario behaves as expected, not that the software works as expected. If the test is flawed, bugs can remain unnoticed.

Test maintenance may become a burden over time. In contexts where high coverage is enforced, the more tests and edge cases we add, the more tightly coupled our tests become with the implementation, leading to increasing maintenance effort.

These limitations highlight a key challenge: traditional tests are only as good as the cases we manually define. They check specific scenarios but don’t ensure broader correctness across all possible inputs. This is where a different approach comes in, one that shifts the focus away from manually defining test cases and instead focuses on the fundamental concept of properties.

Instead of defining specific inputs and expected outputs, Property-Based Testing (PBT) focuses on properties, meaning rules that must always hold, regardless of the input.

Rather than asking: “For this input, do I get this expected output?” We’re asking: “Regardless of the input, does this property always hold?”
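To contrast the two questions, here is a rough sketch using only Python’s standard library. The `dedupe` function is a hypothetical example and is deliberately buggy; the random generator is a simple stand-in for what a real PBT library would provide.

```python
import random


def dedupe(items):
    """Hypothetical function under test: should drop every repeated value.

    Deliberately buggy: it only drops duplicates that are adjacent.
    """
    result = []
    for item in items:
        if not result or result[-1] != item:
            result.append(item)
    return result


def test_dedupe_example():
    # Traditional question: "for this input, do I get this expected output?"
    assert dedupe([1, 1, 2, 2, 3]) == [1, 2, 3]


def find_counterexample(trials=500, seed=0):
    # Property question: "regardless of the input, is the output duplicate-free?"
    rng = random.Random(seed)
    for _ in range(trials):
        items = [rng.randint(0, 3) for _ in range(rng.randint(0, 6))]
        output = dedupe(items)
        if len(output) != len(set(output)):
            return items  # the property does not hold for this input
    return None


test_dedupe_example()  # passes, even though the function is wrong
print("counterexample:", find_counterexample())
```

The hand-picked example passes because it happens to contain only adjacent duplicates, whereas the property check isn’t tied to the inputs we thought of.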

A common and concrete form of PBT is fuzzing.

In general, fuzzing tests focus on the property: “My software shouldn’t crash”. For example, imagine we wrote a function that manipulates a string. Instead of manually testing edge cases, we can use a fuzzing library to generate random inputs (including unexpected formats or extreme values) and check that our function doesn’t crash, without having to think of every possible edge case ourselves.
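Here is a toy sketch of that idea, again with the standard library only. Real fuzzing tools are far more sophisticated than this loop, and both `first_word_upper` and `fuzz` are hypothetical names introduced for illustration.

```python
import random


def first_word_upper(text):
    """Hypothetical string function; it silently assumes the text contains a word."""
    return text.split()[0].upper()


def random_text(rng, max_length=5, alphabet="ab \t\n"):
    length = rng.randint(0, max_length)
    return "".join(rng.choice(alphabet) for _ in range(length))


def fuzz(function, trials=1000, seed=0):
    """Return (input, exception) for the first crash, or None if none is found."""
    rng = random.Random(seed)
    for _ in range(trials):
        text = random_text(rng)
        try:
            function(text)
        except Exception as error:  # the only property: "it should not crash"
            return text, error
    return None


print(fuzz(first_word_upper))
```

The loop never states what the correct output is. It only reports inputs that make the function raise, such as an empty or whitespace-only string here.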

So, what’s the difference between fuzzing and property-based testing? To be honest, the boundary isn’t always crystal clear.

My working understanding is based on a blog post from Hypothesis, a property-based testing library for Python (the post is referenced in the Sources section). In a nutshell, we should see fuzzing as a subset of PBT:

Fuzzing: Primarily used to find crashes or unexpected behaviors by feeding the software random inputs. It doesn’t typically require a deep knowledge of the software’s expected properties.

Property-based testing: Instead of just detecting crashes, PBT defines general properties that the software must always satisfy and generates test cases to verify them. This approach goes beyond failure detection and requires more structured thinking about correctness.

Both fuzzing and PBT start with randomized inputs (produced by a generator often called a fuzzer), but while fuzzing primarily focuses on crashes, PBT goes beyond that to check expected system behavior against explicit properties.

So, what kind of properties can we define in property-based tests? Here are some examples:

Structural properties: Ensure an operation preserves certain structural characteristics of the data, such as a length.

Idempotency properties: Ensure that an idempotent function produces the same output when applied multiple times.

Commutativity properties: Ensure that a function is commutative, meaning changing the order of its arguments gives the same result.

Roundtrip properties: For serialization or encoding functions, encoding followed by decoding should return the original value.
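The four kinds of properties above can be sketched with built-in Python functions. The `for_all` helper and the two generators are assumptions made for this illustration, not the API of any PBT library; a real library would also shrink failing inputs and generate far more varied data.

```python
import json
import random


def for_all(generate, holds, trials=200, seed=0):
    """Return the first generated input for which `holds` is false, else None."""
    rng = random.Random(seed)
    for _ in range(trials):
        value = generate(rng)
        if not holds(value):
            return value
    return None


def int_list(rng):
    return [rng.randint(-100, 100) for _ in range(rng.randint(0, 10))]


def int_pair(rng):
    return rng.randint(-100, 100), rng.randint(-100, 100)


# Structural: sorting preserves the length of the list.
assert for_all(int_list, lambda xs: len(sorted(xs)) == len(xs)) is None

# Idempotency: sorting an already sorted list changes nothing.
assert for_all(int_list, lambda xs: sorted(sorted(xs)) == sorted(xs)) is None

# Commutativity: max gives the same result whatever the argument order.
assert for_all(int_pair, lambda p: max(p[0], p[1]) == max(p[1], p[0])) is None

# Roundtrip: decoding an encoded value returns the original value.
assert for_all(int_list, lambda xs: json.loads(json.dumps(xs)) == xs) is None
```

Each property is a single line, yet it is checked against hundreds of generated inputs rather than a handful of hand-written cases.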

These are some classic properties we can think of in the context of PBT. Ultimately, PBT is about viewing code as a whole and identifying the fundamental properties it should always preserve. Instead of verifying isolated cases, we validate broader correctness guarantees.

So far, the properties discussed in this post share similarities with unit tests, as they focus on local functions.

However, nothing in the concept of PBT prevents us from taking a step back and applying it at a broader level, such as at the API level or even at the system level, where multiple applications interact.

Once we extend this perspective, we can consider properties such as:

Temporal properties: “99% of API calls must complete in less than 30ms”.

Consistency properties: “The database must respect causal consistency”.

Application invariants: “Financial transactions must be balanced (debits = credits)”.

Reliability properties: “Retrying failed requests must not result in duplicate transactions”.
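To give a feel for the last two properties, here is a small sketch against a hypothetical in-memory `Ledger`. In a real system, the same checks would run against actual services, and every name below (`Ledger`, `transfer`, `check_ledger_properties`) is an assumption made for this example.

```python
import random


class Ledger:
    """Hypothetical in-memory ledger; a real system would sit behind an API."""

    def __init__(self, accounts):
        self.balances = {account: 0 for account in accounts}
        self.processed = set()  # request ids already applied

    def transfer(self, request_id, source, target, amount):
        if request_id in self.processed:
            return  # a retry of a known request must not be applied twice
        self.processed.add(request_id)
        self.balances[source] -= amount  # debit
        self.balances[target] += amount  # credit


def check_ledger_properties(make_ledger=Ledger, trials=200, seed=0):
    rng = random.Random(seed)
    accounts = ["a", "b", "c"]
    for _ in range(trials):
        ledger = make_ledger(accounts)
        for request_id in range(rng.randint(0, 20)):
            source, target = rng.sample(accounts, 2)
            amount = rng.randint(1, 100)
            ledger.transfer(request_id, source, target, amount)
            before_retry = dict(ledger.balances)
            if rng.random() < 0.3:  # simulate a client retrying the request
                ledger.transfer(request_id, source, target, amount)
            # Reliability: retrying must not create a duplicate transaction.
            assert ledger.balances == before_retry
            # Application invariant: debits and credits always cancel out.
            assert sum(ledger.balances.values()) == 0


check_ledger_properties()
```

We never spell out the expected balance of any account. We only state what must stay true after every random sequence of transfers and retries.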

PBT is not limited to low-level function testing; it can be applied at multiple levels, from local functions to the system level, to assess properties that must always hold for a group of applications.

With PBT, we can take any system, inject randomized inputs, and ensure that it behaves correctly—not by verifying specific test cases, but by enforcing fundamental system properties that must always hold, regardless of the input. This approach moves beyond individual test cases and shifts the focus to system-wide correctness.

PBT changes how we think about testing. Instead of manually defining scenarios, which may lead to issues like false negatives, PBT takes a step back and focuses on defining the fundamental properties or invariants that must always hold.

Beyond improving test coverage, PBT also serves as living documentation, as the properties capture essential rules that must govern our software or systems.

That said, PBT shouldn’t be seen as a replacement for traditional tests; it’s a complementary approach. The real challenge is recognizing that some parts of a system may be too complex to assess manually. In such cases, relying on randomized inputs and predefined properties can be a more effective and simpler way to catch bugs.

💬 I purposely avoided discussing library-specific features because I wanted this post to encourage you to think about whether property-based tests make sense for the systems you manage. Let me know what you think about PBT in the comments!

❤️ If you made it this far and enjoyed the post, please consider giving it a like.

📣 This post is part of a series written in collaboration with Antithesis, an autonomous testing platform. They are not sponsoring this post—I reached out to them because I was genuinely intrigued by what they were building and ended up falling in love with their solution. We will dive deeper into it in an upcoming post titled Deterministic Simulation Testing. In the meantime, feel free to check out their website or their great blog.

What is Property Based Testing? - Hypothesis Blog // The source I referenced in the post.

Software reliability, part 1: What is property-based testing? - Antithesis blog

Property based testing: let your testing library work for you By Magda Stożek - Devoxx
