Welcome to LWN.net

The pedalboard library for Python is aimed at audio processing of various sorts, from converting between formats to adding audio effects. The maintainer of pedalboard, Peter Sobot, gave a talk about audio in Python at PyCon US 2025, which was held in Pittsburgh, Pennsylvania, in May. He started from the basics of digital audio and then moved into working with pedalboard. There were, as might be guessed, audio examples in the talk, along with some visual information; interested readers may want to view the YouTube video of the presentation.

Sobot works for Spotify as a machine-learning engineer in its audio intelligence lab, so he works on a team using machine-learning models to analyze music. The company has various open APIs that can be used to analyze audio tracks, but it has also released code as open-source software; pedalboard is available under the GPLv3. It has also released open models, such as Basic Pitch, which turns audio data into Musical Instrument Digital Interface (MIDI) files; the model can be used on the web or incorporated into other tools, he said.

He noted that he had created a demo for the talk that attendees could try out during his presentation; it used Python and pedalboard in the browser (by way of Pyodide and WebAssembly) to allow users to create audio. "If somebody were to make sound during my talk because they're playing with this REPL, I would be overjoyed." He got his wish a little over halfway into his 30-minute talk.

Digital audio

To understand how digital audio works, you need to understand how sound works, Sobot said. "Sound is really just pressure moving through the air"; his voice is moving the air in front of his mouth, which the microphone picks up and sends to the speakers, which create pressure waves by moving the air there. As with anything physical that can be stored in a computer, the way to get sound into the machine is to measure it.

He showed a simple graph with time on the x-axis and pressure on the y-axis; he then played a short saxophone clip, with each measurement showing up as a dot on the graph. The ten or so dots obviously corresponded to the notes in some fashion, but it was not entirely clear how, in part because a measurement was only taken every 0.4 seconds. One sample per 0.4 seconds is a sample rate of 2.5Hz. Sample rate is important for digital audio; "the faster that you sample, the higher quality audio that you get". More specifically, a higher sampling rate means that more detail, especially high-frequency detail, is captured.
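One standard way to see why a higher sample rate captures more high-frequency detail is aliasing: a sampled pure tone is only represented faithfully up to half the sample rate (the Nyquist frequency), and anything above that folds back to a lower apparent frequency. The helpers below are an illustrative sketch, not code from the talk; apparent_frequency() is a hypothetical name and assumes an idealized pure tone rather than a real saxophone recording.

```python
def nyquist(sample_rate: float) -> float:
    """Highest frequency that can be represented at this sample rate."""
    return sample_rate / 2


def apparent_frequency(freq_hz: float, sample_rate: float) -> float:
    """Frequency a pure tone appears to have after sampling (aliasing).

    The tone folds onto the nearest multiple of the sample rate, so anything
    above the Nyquist frequency shows up as a lower frequency.
    """
    return abs(freq_hz - sample_rate * round(freq_hz / sample_rate))


for rate in (2.5, 44_100):
    print(f"{rate}Hz sampling: Nyquist = {nyquist(rate)}Hz, "
          f"a 174.61Hz tone appears at {apparent_frequency(174.61, rate):.2f}Hz")
```

Sampled at 2.5Hz, the F3 discussed later in the talk (174.61Hz) would show up as a slow wobble of roughly 0.39Hz; at 44,100Hz, whose Nyquist frequency of 22,050Hz is above the upper limit of human hearing, it is captured at its true frequency.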

Looking a little more closely at the graph, he pointed out that silence (0.0) is not at the bottom of the y-axis as might be inferred, but is in the middle; some of the points measured are above that line, some below, because the pressure is a wave that is moving back and forth, he said. The maximum loudness (+/- 1.0) is at both the bottom and the top of the y-axis; our ears do not distinguish between waves moving toward or away from us, he said, but the measurements do. Each of the points on the graph has a pressure value that can be turned into a number, such as 60% amplitude (or 0.60) or 90% amplitude in a negative direction (-0.90).

If you wanted to reproduce that audio clip, though, you would need much higher-fidelity sampling, so he showed the graph that results from sampling at a standard digital-audio rate of 44,100Hz. That graph (or waveform) has so many points that you cannot distinguish individual measurements anymore, though you can still see where the original points came from.

He zoomed in to the millisecond scale, where the graph showed around ten nearly identical waves. Measuring the waves from peak to peak showed that the period was 5.7142ms; the reciprocal of that is about 175Hz, and the closest entry in a table of note frequencies is 174.61Hz, which is an F (specifically F3).
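The table lookup can also be done arithmetically. The sketch below assumes twelve-tone equal temperament tuned to A4 = 440Hz (MIDI note 69), which is the convention behind common note-frequency tables; the function names are hypothetical.

```python
import math
from typing import Tuple

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def period_ms_to_hz(period_ms: float) -> float:
    """Frequency is the reciprocal of the period (converted from ms to s)."""
    return 1000.0 / period_ms


def nearest_note(freq_hz: float) -> Tuple[str, float]:
    """Return the nearest equal-tempered note name and its exact frequency."""
    # Each semitone is a factor of 2**(1/12); A4 = 440Hz is MIDI note 69.
    midi = round(69 + 12 * math.log2(freq_hz / 440.0))
    name = NOTE_NAMES[midi % 12] + str(midi // 12 - 1)  # MIDI 60 is C4
    exact_hz = 440.0 * 2 ** ((midi - 69) / 12)
    return name, exact_hz


freq = period_ms_to_hz(5.7142)
name, exact = nearest_note(freq)
print(f"{freq:.2f}Hz is closest to {name} ({exact:.2f}Hz)")
```

Rounding to the nearest semitone is what makes a measured 175Hz land on F3's 174.61Hz; a real instrument is rarely exactly on pitch.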

Looking at the microsecond scale allows seeing the individual measurements that were made by the analog-to-digital converter when the audio was digitized. That can be looked at as a string of numbers, such as -0.003, 0.018, 0.128, and so on. That is all that digital audio is, Sobot said; it is just a stream of numbers coupled with a sample rate, which is enough information to reproduce the original sound.
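To make the "numbers plus a sample rate" idea concrete, the sketch below synthesizes one second of a 174.61Hz sine wave as a plain Python list, then recovers its duration and pitch from nothing but that list and the sample rate. The pitch estimate counts upward zero crossings, a simple technique that only works on clean, periodic signals; it is an illustration, not how Sobot measured the period in his talk.

```python
import math
from typing import List, Optional


def sine_wave(freq_hz: float, sample_rate: int, seconds: float,
              amplitude: float = 0.5) -> List[float]:
    """Digital audio in its simplest form: a list of numbers in [-1.0, 1.0]."""
    n = int(sample_rate * seconds)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate)
            for i in range(n)]


def estimate_frequency(samples: List[float], sample_rate: int) -> Optional[float]:
    """Estimate the pitch of a clean, periodic signal from upward zero crossings."""
    crossings = [i for i in range(1, len(samples))
                 if samples[i - 1] < 0 <= samples[i]]
    if len(crossings) < 2:
        return None  # not enough full cycles to measure a period
    # The average distance between crossings is the period, in samples.
    period_samples = (crossings[-1] - crossings[0]) / (len(crossings) - 1)
    return sample_rate / period_samples


samples = sine_wave(174.61, 44_100, 1.0)
print(f"duration: {len(samples) / 44_100:.2f}s")
print(f"estimated pitch: {estimate_frequency(samples, 44_100):.1f}Hz")
```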

In order to store that information on disk, it could be written out as, say, 64-bit floating-point numbers, but that takes a lot of space: roughly 21MB per minute per channel. That is not really workable for streaming audio over the network or for storing a music collection. "So, instead, very smart people came up with compression algorithms", such as MP3 or Ogg Vorbis, which can reduce that size for a channel to around 1MB per minute. The algorithms do that "by throwing away parts of the audio that we can't really hear", either because the frequency is outside of our hearing range or because the sound is masked by other parts of the audio.
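The 21MB figure follows directly from the sample rate: 44,100 samples per second, times 60 seconds, times 8 bytes per 64-bit float. The short sketch below (bytes_per_minute() is a hypothetical helper) repeats the arithmetic for a few common sample formats, assuming a 44,100Hz sample rate.

```python
SAMPLE_RATE = 44_100  # assumed: the standard CD-quality rate from the talk
SECONDS_PER_MINUTE = 60


def bytes_per_minute(bytes_per_sample: int, channels: int = 1,
                     sample_rate: int = SAMPLE_RATE) -> int:
    """Uncompressed size of one minute of audio."""
    return sample_rate * SECONDS_PER_MINUTE * channels * bytes_per_sample


for label, width in [("64-bit float", 8), ("32-bit float", 4), ("16-bit integer", 2)]:
    # prints 21.2, 10.6, and 5.3MB respectively
    print(f"{label}: {bytes_per_minute(width) / 1e6:.1f}MB per minute per channel")
```

Against the roughly 1MB per minute that lossy codecs achieve, even 16-bit integer storage is about five times larger.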

Audio in Python

But these compressed representations no longer contain the sample values directly; in order to work with them, the data needs to be decoded using a library of some sort. Since Python is a "batteries included" language, there should be something in the standard library to work with audio data, he said. The wave module is available, but it only handles WAV files and provides a pretty low-level API. He showed how it can be used to retrieve the floating-point values, "but it's kind of a pain", so he would not recommend that approach.
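Sobot's wave code was not published, so the following is only a sketch of why it is "kind of a pain", assuming a 16-bit PCM WAV file; read_wav_as_floats() is a hypothetical helper. The wave module returns raw, interleaved bytes, so the caller has to decode the integers, split out the channels, and scale them to the ±1.0 range by hand.

```python
import array
import sys
import wave


def read_wav_as_floats(path):
    """Return ([samples for each channel], sample_rate) for a 16-bit PCM WAV."""
    with wave.open(path, "rb") as w:
        if w.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        channels = w.getnchannels()
        sample_rate = w.getframerate()
        raw = w.readframes(w.getnframes())  # bytes, not numbers

    ints = array.array("h", raw)  # signed 16-bit integers
    if sys.byteorder == "big":
        ints.byteswap()  # WAV sample data is little-endian

    # Samples are interleaved (L0 R0 L1 R1 ...); take every nth one per channel
    # and scale from the integer range to floats in [-1.0, 1.0).
    per_channel = [[s / 32768.0 for s in ints[c::channels]]
                   for c in range(channels)]
    return per_channel, sample_rate
```

Every one of those steps changes for 24-bit files, and none of it helps with MP3 or Ogg, which wave cannot read at all.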

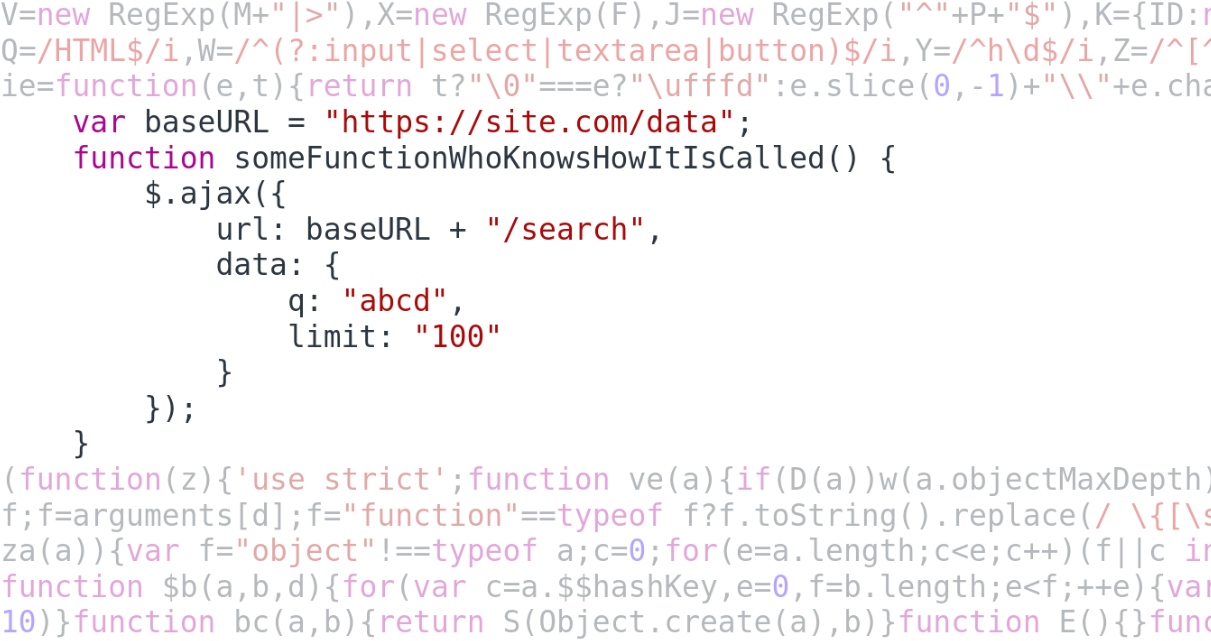

Instead, he showed the pedalboard interface, which is much more natural to use. From his slides (which do not appear to be online):

```python
from pedalboard.io import AudioFile

with AudioFile("my_favourite_song.mp3") as f:
    f.samplerate    # => 44100
    f.num_channels  # => 2
    f.read(3)       # => array of shape (2, 3)
    # array([
    #     [0.01089478, 0.00302124, 0.00738525],
    #     [ ... ]
    # ])
```

The read() function returns a NumPy N-dimensional array (ndarray) with two rows (one for each channel), each having three sample values (as requested by the read(3) call). The first row in the array is the left channel and the other is the right. He then showed ways to use array slices to select various portions of the audio:

```python
with AudioFile("my_favourite_song.mp3") as f:
    audio = f.read(f.frames)  # => shape (2, 1_323_000)
    audio[1]                  # right channel
    ...
    audio[:, :100]                   # first 100 samples, stereo
    audio[:, :f.samplerate * 10]     # first 10 seconds
    audio[:, -(f.samplerate * 10):]  # last 10 seconds
```

In addition, the file does not have to be read into memory all at once, as it is above; the seek() function can be used with read() to extract arbitrary sections of the audio data.
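A minimal sketch of that seek()-then-read() pattern follows. read_window() is a hypothetical helper; it assumes a reader object shaped like pedalboard's AudioFile, with a samplerate attribute, seek() taking a frame index, and read() taking a number of frames, so only the requested window is ever decoded.

```python
def read_window(f, start_seconds: float, duration_seconds: float):
    """Read only [start, start + duration) from an already-open audio file.

    Assumes a pedalboard-style reader: f.samplerate, f.seek(frame_index),
    and f.read(num_frames). Positions are in frames, not bytes.
    """
    start_frame = int(start_seconds * f.samplerate)
    num_frames = int(duration_seconds * f.samplerate)
    f.seek(start_frame)
    return f.read(num_frames)


# Example usage with pedalboard (requires the library and an audio file):
#   from pedalboard.io import AudioFile
#   with AudioFile("my_favourite_song.mp3") as f:
#       clip = read_window(f, 60.0, 10.0)  # ten seconds starting at 1:00
```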

He displayed a small program to add a delay (or echo) effect to the audio, by simply doing math on the sample values and plugging them back into the array:

```python
...
mono = audio[0]  # one channel (a NumPy view, so edits also change audio)
delay_seconds = 0.2  # 1/5 of a second
delay_samples = int(f.samplerate * delay_seconds)
volume = 0.75  # 75% of original volume
for i in range(len(mono) - delay_samples):
    mono[i + delay_samples] += mono[i] * volume
```

He played a short clip of a major scale on the piano, both before and after the effect; the difference was easily noticeable, both audibly and in the graphs of the waveforms that he also displayed. That showed the kinds of effects that a few lines of Python code can create. His next example added some distortion (using the math.tanh() hyperbolic tangent function) to a short guitar riff, once again with just a few lines of Python.
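The sketch below wraps the same delay loop in a function that returns a new list instead of modifying the array in place, and adds a tanh() distortion in the same style. The distortion code from the talk is not reproduced in the article, so distort() and its gain parameter are assumptions. Note that because the delay loop reads samples it has already updated, each echo is itself echoed, producing a decaying series of repeats.

```python
import math


def add_echo(samples, sample_rate, delay_seconds=0.2, volume=0.75):
    """Feed each sample back in delay_seconds later at a reduced volume."""
    out = list(samples)
    delay = int(sample_rate * delay_seconds)
    for i in range(len(out) - delay):
        # out[i] may already contain an earlier echo, so echoes repeat and decay
        out[i + delay] += out[i] * volume
    return out


def distort(samples, gain=10.0):
    """Hypothetical tanh distortion: boost, then squash smoothly into (-1, 1)."""
    return [math.tanh(s * gain) for s in samples]


# A single click at a toy sample rate of 10Hz; 0.2s of delay is 2 samples.
print(add_echo([1.0, 0, 0, 0, 0, 0, 0], sample_rate=10))
```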

Audio problems

There are some pitfalls to working with audio and working with it in Python that he wanted to talk about. He gave an example of a program to increase the volume of an audio file, perhaps as a web service, by reading in all of the samples, then multiplying them by two ("don't do this"). The resulting file can be written out using pedalboard in much the same way:

```python
# num_channels is passed explicitly so stereo input is written as stereo
with AudioFile("out.mp3", "w", f.samplerate, f.num_channels) as o:
    o.write(louder_audio)
```

That program might work for a while, making users happy, until one day when it takes the whole server down by crashing the Python process. The problem, as might be guessed, comes from reading the entire audio file into memory.

He tested his favorite song, which is 3m22s long and takes up 5MB on disk; once it is decoded into memory, it occupies 68MB. That is around 14x compression, which is pretty good, he said, but maybe another user uploads a podcast MP3. That file is 60 minutes long, but podcasts compress well, so it is only 28.8MB on disk; uncompressed, however, it is 1.2GB, for 42x compression. That still would probably not be enough to crash the machine. But when someone uploads "whoops_all_silence.ogg", which is 12 hours of silence ("just zeroes") that only takes up 3.9MB on disk because Ogg has good compression, it turns into 14.2GB in memory (7,772x compression) and crashes the system.
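The in-memory sizes depend only on duration, channel count, and sample format, not on the file size. Assuming 44,100Hz stereo decoded to 32-bit floats, the arithmetic below lines up with the 68MB, 1.2GB, and 14.2GB figures (in binary megabytes and gigabytes). The check_decoded_size() guard and its 2GiB budget are hypothetical; a check like this only limits the damage, and the talk's real advice is to stream.

```python
SAMPLE_RATE = 44_100           # assumed: CD-quality sample rate
BYTES_PER_SAMPLE = 4           # assumed: decoded to 32-bit floats
MAX_DECODED_BYTES = 2 * 2**30  # hypothetical per-request memory budget (2GiB)


def decoded_size_bytes(frames: int, num_channels: int,
                       bytes_per_sample: int = BYTES_PER_SAMPLE) -> int:
    """Bytes needed to hold fully decoded audio as a (channels, frames) array."""
    return frames * num_channels * bytes_per_sample


def check_decoded_size(frames: int, num_channels: int,
                       limit: int = MAX_DECODED_BYTES) -> int:
    """Refuse files whose decoded form would not fit in the memory budget."""
    size = decoded_size_bytes(frames, num_channels)
    if size > limit:
        raise ValueError(f"decoding needs {size / 2**30:.1f}GiB, "
                         f"limit is {limit / 2**30:.1f}GiB")
    return size


for name, seconds in [("favourite song", 3 * 60 + 22),
                      ("podcast", 60 * 60),
                      ("12h of silence", 12 * 60 * 60)]:
    size = decoded_size_bytes(seconds * SAMPLE_RATE, 2)
    print(f"{name}: {size / 2**20:,.0f}MiB decoded")
```

With pedalboard, the f.frames and f.num_channels values used in the talk are available as soon as the file is opened, before any audio is read.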

The important thing to remember, Sobot said, is that the input size does not necessarily predict the output size, so you should always treat the files as if they could have any length. Luckily, that's easy to do in Python. He showed some code to open both the input and output files at the same time and to process the input file in bounded chunks of frames. That way, the program never reads more than a fixed amount at a time, so the code scales to any file size. If there is only one takeaway from his talk, he suggested that attendees should always "think of audio as a stream" that can (and sometimes does) go on forever.
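Sobot's chunked version used pedalboard; as a library-independent sketch of the same pattern, the code below uses the standard library's wave module and assumes 16-bit PCM WAV input. double_volume_streaming() and CHUNK_FRAMES are hypothetical names. Memory use is bounded by the chunk size no matter how long the file is, and samples are clipped rather than allowed to wrap around, which is one more reason naive doubling is risky. The per-sample loop is pure Python, so it is slow.

```python
import array
import sys
import wave

CHUNK_FRAMES = 4096  # bounded chunk size: memory use does not grow with the file


def double_volume_streaming(src_path: str, dst_path: str,
                            chunk_frames: int = CHUNK_FRAMES) -> None:
    """Double the volume of a 16-bit PCM WAV file one chunk at a time."""
    with wave.open(src_path, "rb") as src:
        if src.getsampwidth() != 2:
            raise ValueError("this sketch only handles 16-bit PCM")
        with wave.open(dst_path, "wb") as dst:
            dst.setparams(src.getparams())  # same rate, channels, and width
            while True:
                raw = src.readframes(chunk_frames)
                if not raw:
                    break  # end of input
                samples = array.array("h", raw)
                if sys.byteorder == "big":
                    samples.byteswap()  # WAV data is little-endian
                for i, s in enumerate(samples):
                    # clip to the 16-bit range instead of overflowing
                    samples[i] = max(-32768, min(32767, s * 2))
                if sys.byteorder == "big":
                    samples.byteswap()
                dst.writeframes(samples.tobytes())
```

In pedalboard, the equivalent loop reads with f.read(chunk_size) while f.tell() < f.frames; a Pedalboard can also be called with reset=False so that effect state, such as a reverb tail, carries across chunk boundaries.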

Another thing to keep in mind is the processing speed of Python, he said. For example, the loop in his distortion example takes eight seconds per minute of audio, which is not bad; that is 7.5x faster than realtime, so it could still easily keep up with live audio. But switching the loop to use the NumPy version of tanh() makes it run in 23ms per minute of audio (2,541x faster than realtime). That means using NumPy, which does the looping and calculating in C, is 338x faster than using a regular Python loop; "for audio, pure Python is slow".
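The comparison can be reproduced in spirit with the sketch below, which applies the same tanh() distortion once with a per-sample Python loop and once with numpy.tanh() over the whole array. The drive parameter and the synthetic test tone are assumptions, and actual timings depend heavily on the machine, so none are claimed here.

```python
import math
import time

import numpy as np


def distort_loop(samples: np.ndarray, drive: float = 5.0) -> np.ndarray:
    """Pure-Python loop: one math.tanh() call per sample."""
    out = np.empty_like(samples)
    for i in range(len(samples)):
        out[i] = math.tanh(samples[i] * drive)
    return out


def distort_numpy(samples: np.ndarray, drive: float = 5.0) -> np.ndarray:
    """Vectorized: NumPy runs the loop over the whole array in C."""
    return np.tanh(samples * drive)


def time_it(fn, samples: np.ndarray) -> float:
    start = time.perf_counter()
    fn(samples)
    return time.perf_counter() - start


if __name__ == "__main__":
    sr = 44_100
    t = np.arange(sr * 10) / sr  # ten seconds of sample times
    audio = (0.5 * np.sin(2 * np.pi * 174.61 * t)).astype(np.float32)
    print(f"python loop: {time_it(distort_loop, audio):.3f}s")
    print(f"numpy.tanh:  {time_it(distort_numpy, audio):.4f}s")
```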

pedalboard

He asked how many of the attendees played electric guitar or bass and was pleased to see a lot of them; those folks already know what a pedalboard (in the real world) is. For the rest, pedalboards provide various kinds of effects on the output of a guitar, with configuration settings to change the type and intensity of the effect. pedalboard the library is meant to be a pedalboard in Python.

He showed some effects from pedalboard, including using the pedalboard.Reverb class for easily adding "reverb" (i.e. reverberations) to an audio file. It is rare that only a single effect is desired, he said, so pedalboard makes it easy to put them together:

```python
from pedalboard import Pedalboard, Delay, Distortion, Reverb

board = Pedalboard([
    Distortion(drive_db=25),
    Delay(delay_seconds=0.6, feedback=0.5, mix=0.5),
    Reverb(room_size=0.75),
])
effected = board(audio, f.samplerate)
```

The video, starting at 23:49, demonstrates these effects nicely.
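pedalboard's effects are implemented in C++, so the following is only a toy, pure-Python analogue of the design, not the library's code: a board is a callable that takes audio and a sample rate and runs each effect on the output of the previous one, just as pedals are chained. ToyBoard, gain(), and soft_clip() are hypothetical names.

```python
import math
from typing import Callable, List, Sequence

# An "effect" here is any function taking (samples, sample_rate) -> new samples.
Effect = Callable[[List[float], int], List[float]]


def gain(factor: float) -> Effect:
    """Hypothetical effect: scale every sample by a constant factor."""
    return lambda samples, sample_rate: [s * factor for s in samples]


def soft_clip() -> Effect:
    """Hypothetical effect: tanh waveshaping, a simple form of distortion."""
    return lambda samples, sample_rate: [math.tanh(s) for s in samples]


class ToyBoard:
    """Toy analogue of a pedalboard: applies effects in order, like a pedal chain."""

    def __init__(self, effects: Sequence[Effect]):
        self.effects = list(effects)

    def __call__(self, samples: List[float], sample_rate: int) -> List[float]:
        for effect in self.effects:
            samples = effect(samples, sample_rate)  # output feeds the next effect
        return samples


boosted_then_clipped = ToyBoard([gain(2.0), soft_clip()])([0.0, 0.5], 44_100)
clipped_then_boosted = ToyBoard([soft_clip(), gain(2.0)])([0.0, 0.5], 44_100)
```

As with real pedals, order matters: boosting before the distortion drives it harder, while boosting afterward just makes the distorted signal louder (and able to exceed 1.0).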

pedalboard "ships with a ton of plugins" for effects, Sobot said. But users often have plugins from third parties that they want to use; pedalboard can do that too. The pedalboard.load_plugin() function can be used to access Virtual Studio Technology (VST3) plugins, which are returned as pedalboard.VST3Plugin objects. Those plugins can be configured in Python or by using the user interface of the plugin itself with the show_editor() method. VST3 instruments are supported as well.

Advanced pedalboard

In the waning minutes of the talk, Sobot did a bit of a whirlwind tour through some advanced pedalboard features. For example, audio files can be resampled "in constant time, with constant memory", easily, and on the fly:

```python
with AudioFile("some_file.flac").resampled_to(22050) as f:
    ...
```

The pedalboard.time_stretch() function can change the length of an audio file. The AudioFile.encode() function will convert to different formats, such as MP3, FLAC, and Ogg; the AudioFile interface can be used to write a file in a different format as well. The AudioStream class can be used to send or receive live audio streams (e.g. to speakers or from a microphone), or both while applying some kind of effect in realtime. But, wait, there's more ...
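To show what resampling means at the sample level, here is a deliberately naive linear-interpolation resampler. It is not pedalboard's algorithm: a production resampler also low-pass filters the signal when downsampling to avoid aliasing, which this sketch skips. resample_linear() is a hypothetical name.

```python
from typing import List, Sequence


def resample_linear(samples: Sequence[float], src_rate: float,
                    dst_rate: float) -> List[float]:
    """Naive resampler: estimate each output sample by linear interpolation.

    No anti-aliasing filter is applied, so downsampling can alias.
    """
    if not samples:
        return []
    n_out = int(len(samples) * dst_rate / src_rate)
    step = src_rate / dst_rate  # input samples advanced per output sample
    out = []
    for j in range(n_out):
        pos = j * step
        i = int(pos)
        frac = pos - i
        # Interpolate between neighboring input samples (hold the last one).
        nxt = samples[i + 1] if i + 1 < len(samples) else samples[i]
        out.append(samples[i] * (1 - frac) + nxt * frac)
    return out


print(resample_linear([0, 1, 2, 3], src_rate=2, dst_rate=4))
```

Each output sample depends only on two neighboring input samples, which is why a resampler can work on the fly over a stream with constant memory.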

In the Q&A, an attendee asked about hardware requirements for working with audio using pedalboard. Sobot said that the requirements were modest, since the code is mostly running in C and C++ that has been optimized over the last 30 years or so; people are using Raspberry Pi devices successfully, for example. Another wondered about seeking into the middle of a frame in an MP3 file; Sobot said that pedalboard ensures that any seek lands on a sample boundary, just as if the file had already been decoded in memory.

[Thanks to the Linux Foundation for its travel sponsorship that allowed me to travel to Pittsburgh for PyCon US.]


![Peter Sobot [Peter Sobot]](https://static.lwn.net/images/2025/pycon-sobot-sm.png)