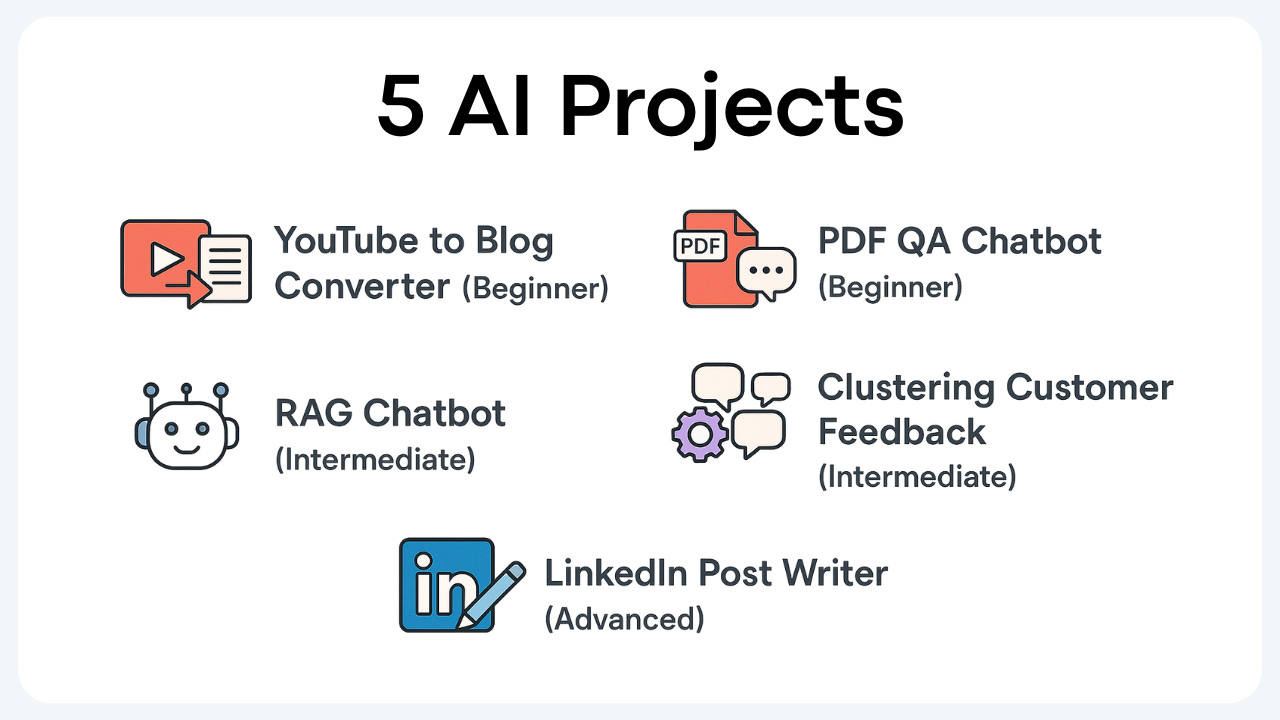

The best way to develop your AI skills is by building projects. However, finding time to sit down and actually code projects can be challenging for busy professionals and leaders. Here, I share 5 AI projects you can build in an hour (or less) at three levels of sophistication. For each idea, I share step-by-step instructions and example code to help you get started.

Shaw Talebi is an ex-Toyota data scientist with over 6 years in AI. Driven by a passion for learning and making AI accessible to all, he founded a YouTube channel and technical blog that now inspire over 75,000 learners. He helps entrepreneurs and tech consultants build AI apps through his 6-week AI cohort.

AI gives us back our most valuable resource: time. This comes from automating and expediting tasks that might otherwise take us hours to complete.

For coders, the best way to tap into this is by using tools like ChatGPT, Cursor, Copilot, Claude, v0 and Windsurf (the list grows longer and longer) to help you write code faster. These tools have compressed my “afternoon projects” into something I can knock out in an hour or less.

Here I share 5 projects I’ve built in less than an hour. You can use these projects directly or (even better) use them as inspiration for automating tasks that you do repeatedly.

Content creation is a big part of my work as an entrepreneur. While it is one of the best ways solo founders can inform customers about their products and services, it is time-consuming.

One automation that has helped me write blog posts 3X faster is translating YouTube video transcripts into articles using GPT-4o. Although this doesn’t automate the entire content creation process, it still saves me hours developing an initial draft.

1. Extract the YouTube video ID from the video link using regex
2. Use the video ID to extract the transcript using youtube-transcript-api
3. Write a prompt that repurposes the video transcript into a blog post
4. Send prompt to GPT-4o using OpenAI’s Python API

Resources: Example code (extracting transcripts) | Example code (OpenAI API call)
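For Step 1, here is a minimal sketch of pulling the video ID out of a link with a regular expression. The pattern and the function name `extract_video_id` are illustrative assumptions: the pattern covers a few common URL shapes (`watch?v=`, `youtu.be/`, `embed/`, `shorts/`) and is not an exhaustive list of YouTube link formats.

```python
import re
from typing import Optional

# Assumed pattern: an 11-character ID that follows one of a few common
# URL markers. Other link formats may need additional alternatives.
VIDEO_ID_PATTERN = re.compile(
    r"(?:v=|youtu\.be/|embed/|shorts/)([A-Za-z0-9_-]{11})"
)


def extract_video_id(url: str) -> Optional[str]:
    """Return the video ID found in a YouTube URL, or None if there is none."""
    match = VIDEO_ID_PATTERN.search(url)
    return match.group(1) if match else None


video_id = extract_video_id("https://www.youtube.com/watch?v=bAe4qwQGxlI")
print(video_id)
```

Returning `None` instead of raising keeps the helper easy to use in a loop over many links, where some may not be video URLs at all.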

```python
# (Step 2) Extracting transcript from a YouTube video
from youtube_transcript_api import YouTubeTranscriptApi

# video ID (from Step 1)
video_id = "bAe4qwQGxlI"

# Fetch the transcript
transcript = YouTubeTranscriptApi.get_transcript(video_id)

# Combine the transcript text
transcript_text = "\n".join([entry["text"] for entry in transcript])
```

P.S. I turned this project into a proper web app at y2b.io.
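Steps 3 and 4 of this project (writing the prompt and sending it to GPT-4o) might look roughly like the sketch below. The prompt wording and the function names `build_blog_prompt` and `transcript_to_blog_post` are assumptions made for illustration, and the API call expects an `OPENAI_API_KEY` environment variable to be set.

```python
def build_blog_prompt(transcript_text: str) -> str:
    """Wrap a raw transcript in instructions for rewriting it as a blog post."""
    # Hypothetical prompt wording; adjust it to your own voice and format.
    return (
        "Rewrite the following YouTube video transcript as a blog post. "
        "Keep the speaker's key points and examples, organize the text "
        "under clear headings, and remove filler words.\n\n"
        f"Transcript:\n{transcript_text}"
    )


def transcript_to_blog_post(transcript_text: str, model: str = "gpt-4o") -> str:
    """Send the prompt to the model and return the drafted post."""
    # Imported here so build_blog_prompt can be used without the package.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": build_blog_prompt(transcript_text)}],
    )
    return response.choices[0].message.content
```

Calling `transcript_to_blog_post(transcript_text)` with the transcript from Step 2 would return a first draft as a string. Keeping the prompt in its own function makes it easy to iterate on the instructions without touching the API code.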

Although ChatGPT is a great tool for quick document question-answering, it is not a suitable solution for documents containing sensitive information (data uploaded to ChatGPT can be used for future model training).

One solution is to set up an LLM chat system on your local machine. Here is a simple way to do that with ollama.

1. Download ollama
2. Extract the text from the PDF using PyMuPDF
3. Pull in your favorite LLM using ollama
4. Write a system message which passes the PDF text into the model’s context
5. Pass user messages with questions about the document
6. (Bonus) Create a simple UI using gradio

Resources: Example code (local chatbot) | Example code (extracting PDF text)
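The PDF-specific steps (extracting the text, placing it in a system message, and asking a question) could be sketched as follows. The function names, the system-message wording, and the default model name are assumptions for illustration. Note that this puts the whole document into the context, so it only suits PDFs short enough to fit the model's context window.

```python
from typing import Optional


def extract_pdf_text(pdf_path: str) -> str:
    """Return the text of every page in the PDF, joined by newlines."""
    import fitz  # PyMuPDF; imported here so the other helpers work without it

    with fitz.open(pdf_path) as doc:
        return "\n".join(page.get_text() for page in doc)


def build_messages(pdf_text: str, question: str,
                   history: Optional[list] = None) -> list:
    """Build the chat messages: system message with the PDF, then the question."""
    system_message = {
        "role": "system",
        # Hypothetical instruction wording; the PDF text is appended to it.
        "content": "Answer questions using only the document below.\n\n" + pdf_text,
    }
    user_message = {"role": "user", "content": question}
    return [system_message, *(history or []), user_message]


def ask(pdf_text: str, question: str, model: str = "llama3.2-vision") -> str:
    """Send one question about the document to a locally running model."""
    import ollama  # requires ollama to be installed and running locally

    response = ollama.chat(model=model, messages=build_messages(pdf_text, question))
    return response["message"]["content"]
```

A typical call would be `ask(extract_pdf_text("report.pdf"), "What are the key findings?")`, where `report.pdf` is a placeholder path. The optional `history` argument lets a UI pass earlier turns back in for follow-up questions.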

```python
# Basic usage of Ollama (note: you need to install ollama locally first)
import ollama
import time

# pull model
ollama.pull('llama3.2-vision')

# interact with model (locally)
stream = ollama.chat(
    model='llama3.2-vision',
    messages=[{
        'role': 'user',
        'content': 'What is an LLM?',
    }],
    stream=True,
)

# stream text
for chunk in stream:
    print(chunk['message']['content'], end='', flush=True)
```

Sometimes you don’t only want to find information in a single PDF, but information dispersed across a range of files. This is where retrieval-augmented generation (RAG) is helpful.

RAG automatically retrieves context relevant to a user query before passing it to an LLM. Here is a simple way to do that with LlamaIndex.

1. Read and store documents in a vector database
2. Create a search function to retrieve context from the database given a query
3. Construct a prompt template to combine a user query and retrieved context, and pass it to an LLM
4. Connect components in a simple chain: query -> retrieval -> prompt -> LLM
5. Write a query and pass it into the chain
6. (Bonus) Create a simple UI using gradio

Resources: Example code (RAG) | Example code (gradio UI)
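To make the chain concrete, here is a dependency-free toy version of the query -> retrieval -> prompt part. It is not LlamaIndex code: word counts stand in for real text embeddings, a plain Python list stands in for the vector database, and the final LLM call is left out. All names, the sample chunks, and the prompt template are made up for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list, top_k: int = 2) -> list:
    """Return the top_k chunks most similar to the query."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:top_k]


PROMPT_TEMPLATE = (
    "Use the context to answer the question.\n\n"
    "Context:\n{context}\n\n"
    "Question: {query}\nAnswer:"
)


def build_prompt(query: str, chunks: list, top_k: int = 2) -> str:
    """Chain retrieval and prompt construction for a single query."""
    context = "\n---\n".join(retrieve(query, chunks, top_k))
    return PROMPT_TEMPLATE.format(context=context, query=query)


chunks = [
    "Invoices are due within 30 days of the billing date.",
    "The office is closed on public holidays.",
    "Late invoices incur a 2 percent monthly fee.",
]
prompt = build_prompt("When are invoices due?", chunks, top_k=1)
print(prompt)
```

In a real build, the embedding model, storage, and similarity search would be handled by the framework and vector database, but the flow stays the same: score chunks against the query, keep the best ones, and paste them into the prompt that goes to the LLM.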

A central component of a RAG system is the vector database, which enables the retrieval process. Specifically, these “vectors” are so-called text embeddings, numerical representations of a chunk’s semantic meaning.

However, RAG isn’t the only use case for embeddings. These vectors can be combined with traditional machine learning approaches to unlock countless applications. I recently used them to analyze and summarize patterns in open-ended survey responses from my customers.

1. Load in survey data
2. Encode open-ended responses using the sentence transformers Python library
3. Cluster responses by similarity using KMeans (scikit-learn)
4. Write a prompt to summarize the responses from a given cluster
5. Summarize responses in each cluster using GPT-4o-mini

Resources: Example code
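Once the responses are encoded, the clustering and prompt-writing steps could look something like the sketch below. The function names, the prompt wording, and the KMeans settings (`n_init=10`, `random_state=0`) are assumptions for illustration; the number of clusters is a choice you would tune for your own data.

```python
from collections import defaultdict


def cluster_embeddings(embeddings, n_clusters: int) -> list:
    """Assign each embedding (one row per response) to a KMeans cluster."""
    # Imported here so the pure-Python helpers below work without scikit-learn.
    from sklearn.cluster import KMeans

    kmeans = KMeans(n_clusters=n_clusters, n_init=10, random_state=0)
    return kmeans.fit_predict(embeddings).tolist()


def group_by_cluster(responses: list, labels: list) -> dict:
    """Map each cluster label to the list of responses assigned to it."""
    groups = defaultdict(list)
    for response, label in zip(responses, labels):
        groups[label].append(response)
    return dict(groups)


def build_summary_prompt(cluster_responses: list) -> str:
    """Build a prompt asking an LLM to summarize one cluster of responses."""
    bullets = "\n".join(f"- {response}" for response in cluster_responses)
    # Hypothetical prompt wording.
    return (
        "The survey responses below were grouped together because they are "
        "similar. Summarize their common theme in two sentences.\n\n" + bullets
    )
```

With `labels = cluster_embeddings(embeddings, n_clusters=5)` and `groups = group_by_cluster(responses, labels)`, each value in `groups` can be passed to `build_summary_prompt` and sent to the model one cluster at a time.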

```python
# Basic usage of the Sentence Transformers library
from sentence_transformers import SentenceTransformer

# load model
model = SentenceTransformer("all-distilroberta-v1")

# compute embeddings
embeddings = model.encode(text_list)
```

A popular AI use case is content marketing. Doing this in practice, however, is tricky since, one, the content often sounds AI-generated, and two, search engines and social media platforms actively check and suppress AI-generated content.

We can mitigate these issues by fine-tuning an LLM on one’s unique writing style and voice. I recently used OpenAI’s fine-tuning API to train GPT-4o on my LinkedIn posts. Here’s how you can do that, too.

1. Create a .csv file consisting of (10–50) idea-post pairs
2. Craft a prompt template that combines response instructions and a LinkedIn post idea
3. Generate message-response examples and store them as a list of dictionaries
4. Split the dataset into training and validation sets (optional)
5. Save data in the .jsonl format expected by OpenAI’s fine-tuning API
6. Upload the .jsonl file(s) to OpenAI
7. Create a fine-tuning job
8. Compare the fine-tuned model to GPT-4o

Resources: Example code
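The data-preparation and job-creation steps might be sketched as below. Each training example is a JSON object with a `messages` list of system, user, and assistant turns, written one per line. The system prompt, the instruction wording, the function names, and the base model snapshot are assumptions for illustration; check which GPT-4o snapshots are currently available for fine-tuning before running the job.

```python
import json

# Hypothetical instructions; replace with your own response guidelines.
SYSTEM_PROMPT = "You write LinkedIn posts in the author's voice."


def build_example(idea: str, post: str) -> dict:
    """Turn one idea-post pair into a chat-format training example."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": f"Write a LinkedIn post based on this idea:\n{idea}"},
            {"role": "assistant", "content": post},
        ]
    }


def to_jsonl(examples: list) -> str:
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(e, ensure_ascii=False) for e in examples) + "\n"


def start_fine_tuning(jsonl_path: str, base_model: str = "gpt-4o-2024-08-06") -> str:
    """Upload the training file and create a fine-tuning job; return the job ID."""
    from openai import OpenAI  # imported here so the helpers above work without it

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    with open(jsonl_path, "rb") as f:
        training_file = client.files.create(file=f, purpose="fine-tune")
    job = client.fine_tuning.jobs.create(
        training_file=training_file.id,
        model=base_model,
    )
    return job.id
```

Writing `to_jsonl(examples)` to a file such as `train.jsonl` (a placeholder name) and passing that path to `start_fine_tuning` covers the save, upload, and job-creation steps. Using the same system prompt at inference time keeps the fine-tuned model's inputs consistent with what it saw in training.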

Today’s AI coding tools (e.g. ChatGPT, Cursor, Windsurf, v0, etc.) have made it easier than ever to build projects fast. The broader impact is that new coders can start building practical projects in just a few hours, and experienced coders can build an entire product in a weekend.

Here I shared 5 AI projects spanning three levels of sophistication. I hope that one of these projects can help break the seal for those stuck on the sidelines when it comes to building with AI.

P.S. Struggling to learn AI on your own? Check out my 6-week AI cohort—a hands-on program where you'll build real projects each week with me and a supportive community of peers :)

Use code PAUL100 to get $100 off.
