The Upshot

$1.8 trillion, with a T.

That’s the size of the market opportunity for AI application startups, according to new research from Redpoint.

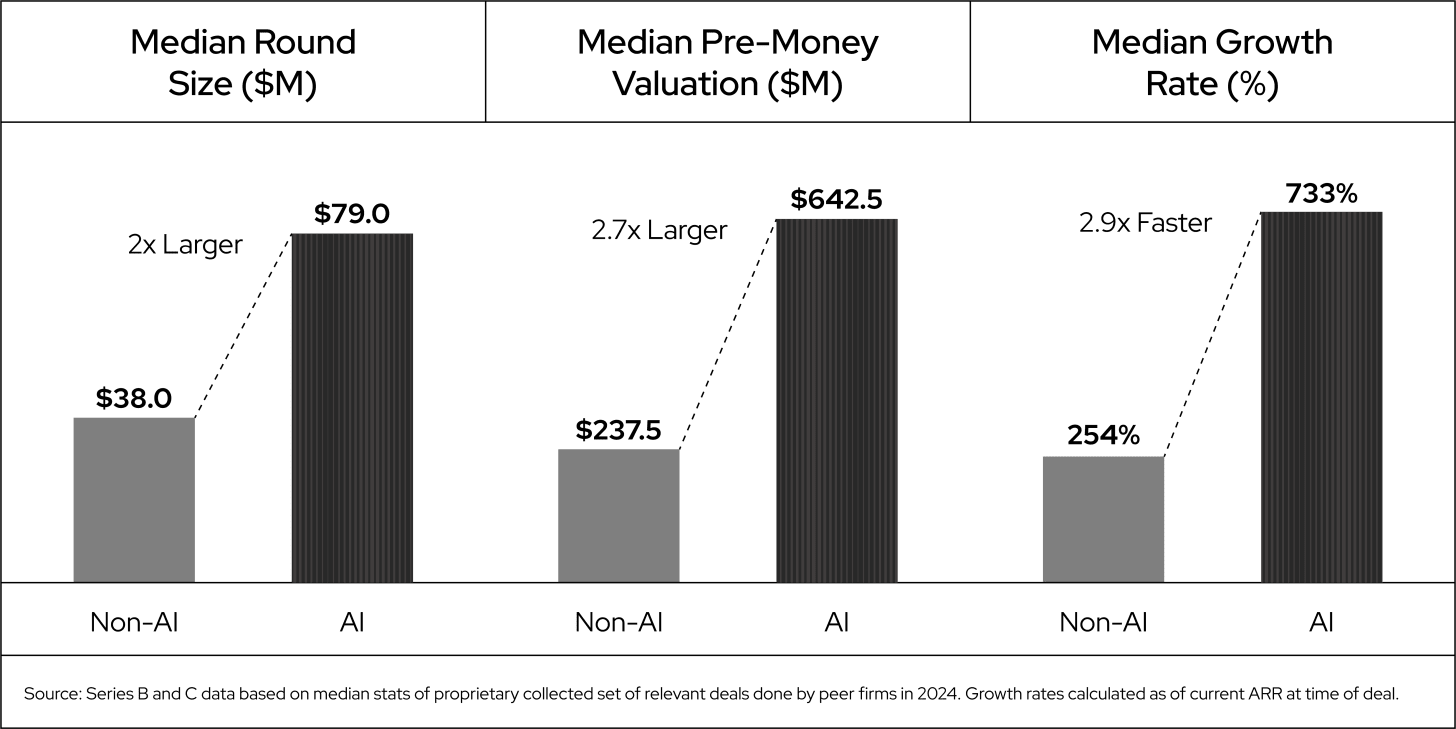

And it’s a good thing, too: that “massive” number is also approximately what is needed for AI apps to pay off the hundreds of billions invested in AI infrastructure today by Nvidia and others, the VC firm has found in a new report launching today, the AI64.

Does it sound frothy? Maybe a little crazy? Yes, freely admits Urvashi Barooah, a Redpoint partner who helped spearhead the project.

But in past tech innovation cycles, the apps layer has caught up to the picks-and-shovels folks underpinning it. And with $10 trillion-plus in knowledge work ripe for AI innovation, Redpoint’s team is confident the current cohort of AI apps standouts can get us there.

In what feels like a fated twist, we’ve partnered with Redpoint to share the AI64 in this, our 64th edition of Upstarts.

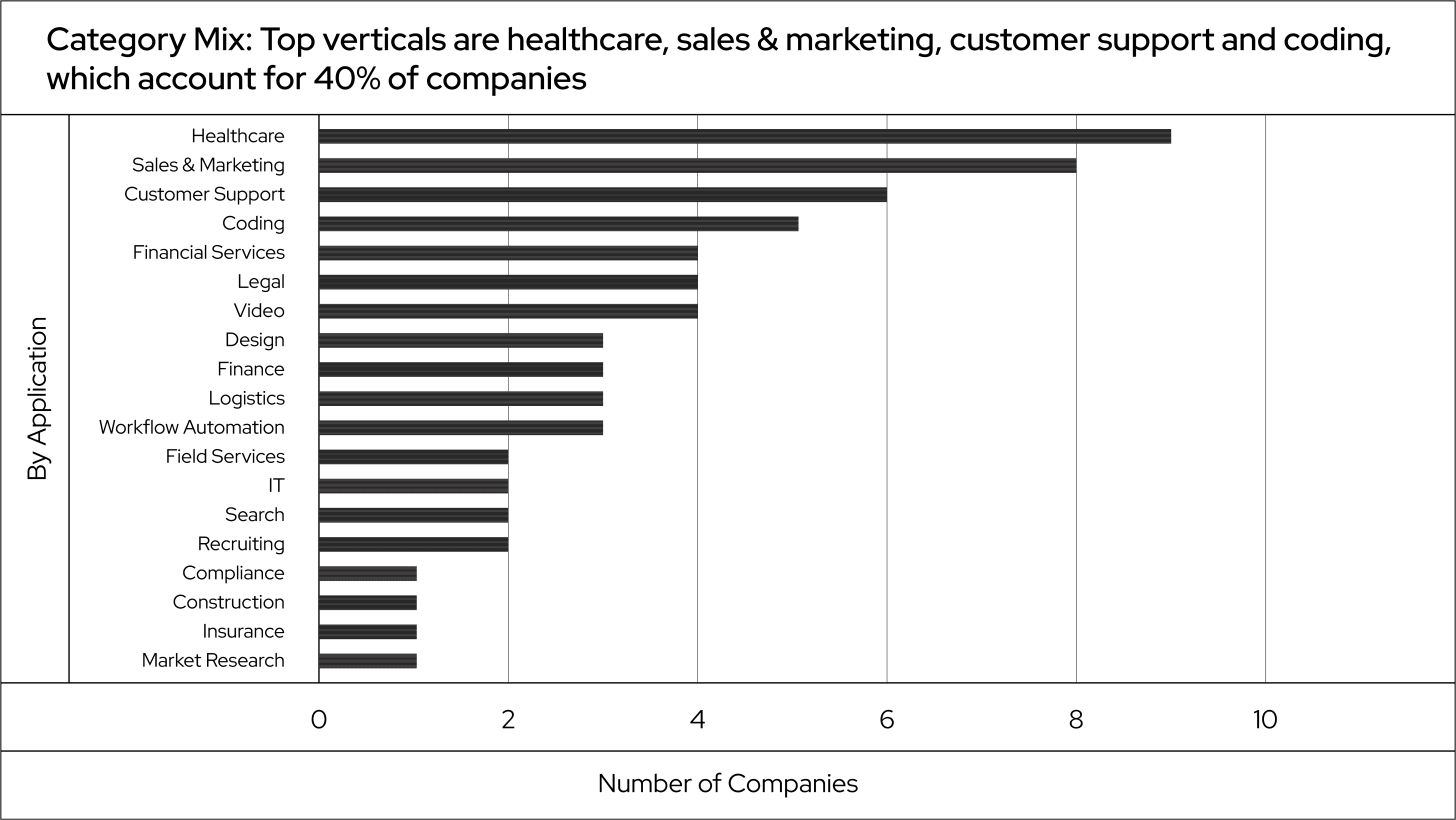

The report includes a deeper breakdown of the investment in AI that’s counting on these apps, as well as the categories that stand to see a breakout winner, or several, from accounting to software development.

A bunch of unicorns are on there that you already know or should, like Lovable, Perplexity and Runway. But about half are newer breakouts that have announced less than $50 million in funding to date, like Latent Health and Maxima.

Combined, the mostly Bay Area bunch have raised $12 billion (that we know of) and reached an aggregate valuation of $72 billion; the median age of a listed company is just three years.

Often launched after the rise of ChatGPT, their pricing matches the new realities of AI inference, too, with just 19% of AI64 companies pricing their software by seat. Nearly half charge by usage, while outcome-based pricing is a smaller but emerging option.

Many have claimed record sprints to reach milestones like $100 million in annualized sales, but even among these companies themselves, there’s debate: how sticky is such revenue? Are the world’s biggest companies really using AI apps at scale? How much of this is easy come, easy go?

All eyes are on Cursor, a code editor startup on AI64 reported to be considering new investment at a $30 billion valuation. Launched just two-plus years ago, Cursor already works with two-thirds of the Fortune 1000 and 80% of the world’s thousand biggest engineering organizations, among one million users overall.

And in the past 90 days, Cursor has held no fewer than 300 C-Suite level conversations with such corporations, chief operating officer Jordan Topoleski tells Upstarts in an interview.

“We like to say ‘fast is fun,’ and it’s truly the case,” Topoleski says. “When people are feeling and loving a tool, it’s amazing to be in a position where you get to be there, working with them.”

To grow into its hype, Cursor’s running a carefully scripted playbook that includes a higher bar for talent, hands-on tactics for winning over prospects, and a different approach to success metrics. Above all, it never loses sight of its core user, its COO says: the professional (10x, or 100x) engineer.

You can check out the complete AI64 report, with a lot more charts and startup takeaways, here.

For more on Cursor’s sales strategy – and how key customer Coinbase is using it today – read on, for our exclusive Upstarts AI64 coverage.

When Topoleski, a former startup founder, joined Cursor nearly two years ago, CEO Michael Truell had already set the tone: Cursor (then known as Anysphere) was up against incumbents like Microsoft with much bigger engineering and sales teams, and baked-in customer bases. The way to win: start with “discerning” developers.

Cursor deliberately targeted the “10x” engineer – a professional already looking to improve their efficiency, who would be likely to code at home on nights and weekends pretty similarly to how they worked in their day job.

To do that, Cursor intentionally sought out and put its product through the wringer with what the company considered “the most distinguished and best engineers” it could find, even if the feedback was rough. “We’d use it as a litmus test of our product’s capabilities,” Topoleski says. “Then if people loved the product, we could let gravity do its work, helping it spread.”

As Cursor reached engineers in bigger companies, the startup would get pulled into adding more features needed by such customers: compliance, finance, procurement and security; plus better ways for users to interact with each other and what they built in Cursor.

While much of Cursor’s story has been one of classic “product-led growth,” or PLG, where its tools land with rank-and-file developers and then expand organically across an organization, Topoleski says the company has always believed that a bottoms-up function needed to be combined as fast as possible with top-down interest from engineering leaders.

“What that requires is a level of being a great educator, a teacher of what this tech is going to mean for an organization,” he says.

For many of Cursor’s big customers – and Topoleski says enterprise clients are “a large part” of Cursor’s revenue, last disclosed in June as having crossed $500 million in ARR – Cursor is the first AI tool they’re paying for at scale.

That means it’s crucial that Cursor employees work hands-on with such customers as much as possible, and get leaders at the customer playing with Cursor, too.

To do that, Cursor hires what it calls ‘technical AI advisors,’ staffers who have worked in technical sales in the past, and who are typically power users of Cursor themselves. Every GTM team member demos the product during the hiring process; in onboarding, they must complete a course on building and shipping a product using Cursor.

In a ‘Built in Cursor’ Slack channel, onboarded employees must share one live, hosted project with the entire company to check out and critique. “We think it’s really important that people are equal parts product expert and enablers,” Topoleski says.

One such leader is Pavan Ravipati, Cursor’s vice president of technical account management. A former software engineer like many of his team, Ravipati spends much of his time effectively consulting big companies, like Adobe, Nvidia and Salesforce, on how to do more with Cursor’s tools.

Cursor’s ‘field engineers’ – something of a hybrid between a traditional sales engineer and a forward-deployed engineer taken from the Palantir playbook – meet with key clients at least once a week, helping them map out projects to try with Cursor, and how to measure progress better.

To start, they’ll look at how many developers shift to using Cursor daily. “Right out of the gate, that’s the first thing to focus on,” Ravipati says. But then it’s about bringing engineers close to product development, and breaking down silos internally, he adds. Cursor, for example, sees its engineers and their job functions as more interchangeable, less specialists than “barrel-shaped” talent that can go deep on a wide range of tasks.

How much a company is using Cursor is important to track – the startup offers dashboards for tracking each developer’s history – but quality is more important.

“We never thought that was the right metric to look at, because you can write a million lines of code, and it could be terrible,” Topoleski says. (Incidentally, Cursor enterprise customers already write about 100 million lines of code each day, the company says.)

“Part of the secret sauce is making sure that we can pull in understanding of a prompt in the context window, then actually improve the quality of the output.”

A more important metric for success, according to Cursor: half life of generated code.

Code that has to be rewritten in a few days or a week isn’t very valuable or helpful. At the bare minimum, inexpert users of Cursor should see at least 20% efficiency improvements or reductions in development cycle times, Ravipati says. But customers that are using Cursor most effectively should be seeing boosts of 2x to 6x in shipping speed (100% to 500%).
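Cursor did not share how it calculates that half-life, so the snippet below is only a rough sketch of one way a team might measure it. It assumes the half-life is the age, in days, by which half of a batch of generated lines has been rewritten or deleted. The function name `code_half_life` and the input format are hypothetical, not anything taken from Cursor's product.

```python
from typing import Optional, Sequence


def code_half_life(lifetimes_days: Sequence[Optional[float]]) -> Optional[float]:
    """Age in days by which half of the generated lines were rewritten or deleted.

    Assumed input (hypothetical format): one entry per generated line, giving
    how many days it survived, or None if it is still in the codebase.
    Returns None when fewer than half of the lines have been removed so far.
    """
    total = len(lifetimes_days)
    if total == 0:
        raise ValueError("need at least one generated line")

    # Lifetimes of lines that are already gone, shortest-lived first.
    removed = sorted(d for d in lifetimes_days if d is not None)

    # Number of removals needed to reach the halfway point (rounded up).
    needed = (total + 1) // 2
    if len(removed) < needed:
        return None  # most of the code is still alive; half-life not reached

    return removed[needed - 1]


# Six generated lines: four were replaced after 1, 2, 5 and 30 days,
# and two are still in place.
print(code_half_life([2, 5, 30, None, None, 1]))
```

By this definition, a longer half-life (or a result of `None`, meaning most lines are still standing) would suggest generated code that sticks, while a half-life of a few days would match the churn described above.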

To get companies to that level, Cursor invests in helping to co-host large hackathons inside customer organizations, as well as reverse demo sessions in which a handful of power users share sessions on tools they’ve built.

Ravipati’s team also works extra closely with companies that are moving over from mainframes or older code, with field engineers spending a week or more on-site setting up.

“One thing that we’re never going to see go away is that human touch,” says Topoleski. “The way we think about stickiness, from a GTM perspective, is to make sure that we form these types of relationships.”

Fellow AI founders and investors tip their cap to Cursor for becoming arguably the most popular tool for developers to code with AI right now, apart from, as one founder put it anonymously, “hardcore bros” who prefer to work directly in Anthropic’s Claude Code or OpenAI’s Codex tools.

The big question everyone seems to have: if Cursor is easy to set up, will it be as easy to drop? Cursor’s core product is already a “fork” of an open-source tool, VS Code, that added a bunch of features and customization. Much of the revenue it takes in, meanwhile, is believed to be sent on to the labs running the models it uses, including Anthropic and OpenAI.

One executive at a leading lab tells Upstarts on condition of anonymity that they believe that Cursor could still be losing money on some, if not all of its use cases. Over the summer, Cursor caused a stir when it changed its pricing to better reflect the costs of using more expensive models, leading Truell to publish a blog post addressing ensuing sticker shock.

Cursor’s still unusual among app layer AI startups in using token-based pricing, but a few startups have followed its example, Ravipati says. “Competing on price alone doesn’t serve developers,” he argues.

As for the sustainability of its business, Cursor says that revenue from existing customers tripled this year. Its business model is “sustainable for users and Cursor,” according to Ravipati.

“It’s really grown like a wildfire over the last year,” says Rob Witoff, head of platform at Coinbase, which we contacted after seeing past comments by CEO Brian Armstrong that he had given all employees just one week to adopt AI code tools or be fired.

(A source inside Coinbase argues that’s not quite what happened, as those who didn’t pick one up met with Armstrong for a Saturday call, and were let go if they signalled they were more resistant to change generally.)

Engineers at Coinbase aren’t required to use Cursor specifically, but about 90% choose it, says Witoff, with the rest using some mix of Microsoft Copilot, Codex, or JetBrains for specific uses and roles. “We’ve just driven Cursor the most because we’ve gotten the best feedback from it,” he says.

And Witoff disputes that using Cursor is like using a thin wrapper on top of Anthropic’s or OpenAI’s models. “That would be like saying that your Mac is just a pass-through for using the internet,” he says. (Upstarts learned a lot more about how Coinbase is using AI tools in our chat – so much so, that we will likely share more of those insights in a follow-up post.)

Back at Cursor, Ravipati says the company is working on a bunch of features to help ensure customer loyalty as well. Many of those involve its agent product announced a few months ago, which can build code behind the scenes, but will prove more useful and cost-effective as it can be better directed and guided.

Bug catching and code review are also high priorities for Cursor, to help corporations trust using its code at scale.

The mission, says Topoleski, stays the same: “Continue to make sure that we have the best product out there for developers, then try to get that product into the hands of as many people as possible.”

Update: This story has been updated with additional info from Cursor on its business.
