The Problem with Traditional Development Workflows

Our traditional SDLC doesn’t make a lot of sense anymore, does it?

Whether you’ve read Accelerate and are a “high-performing team” doing trunk-based development, or are still stuck on GitLab flow or GitHub flow, once you’ve drunk the agentic Kool-Aid it becomes apparent that these workflows no longer cut it.

This is due to a couple of reasons. For starters, since we’re now “orchestrating”, shouldn’t review shift left, earlier in the process? And what about actual PRs? When you’re already reviewing code in real time with AI (much like pair programming), does the traditional post-hoc review still add the same value?

I’ve noticed some interesting shifts in how I work that seem worth sharing.

Let me walk you through my transformation from skeptic to convert, and the practical lessons I’ve learned along the way.

I’ve tried augmented coding in many forms and went through all the stages of grief, convinced it was overhyped and pushed by corporate interests rather than genuine developer need.

GitHub Copilot felt messy; it even got in the way of the LSPs I had configured. Worse, I dreaded the “pause”: I’d start typing, then have to wait for Copilot to fill in the boilerplate.

Cursor and Windsurf… Look, I’m spoiled. As someone who lives and breathes in the terminal, I find these UI-heavy editors painfully slow. It’s like switching from a 120Hz phone display to a 60Hz one: once you’re used to the former, there’s no going back.

Zed is incredibly impressive. I want this editor to succeed, and part of me genuinely wants to be a user of it. But it’s not in my terminal.

Sure, you can open a terminal in Zed, but I want it the other way around. When you use terminal panes as your main tiling “window” manager in a full-screen terminal, a separate editor will always feel alien. I have little appetite for throwing my muscle memory overboard for something that (at least in my opinion) feels inferior.

I tried several Neovim plugins, but none of them stuck.

The Breakthrough Moment

Fatih Arslan’s post “I was wrong about AI Coding” broke through the noise.

It made me try out Claude Code, and all of a sudden, it clicked for me.

What Actually Changed My Mind

What clicked wasn’t the tech itself; it was finally getting a straight answer. For the longest time, upper management kept pushing AI tools down, and I kept asking the same question: ‘It doesn’t work for me, can someone PLEASE just show me, because I don’t see it’. Crickets.

What finally broke through for me was a combination of things:

- Fatih is not a corporate evangelist; he’s someone whose technical judgment I respect

- His post was a genuine “I was wrong” story, not marketing material

- He showed real workflows, not theoretical benefits

I’ve sat through literal hours of AI talks, and until then nobody could actually demonstrate it working in practice. That’s what made me give Claude Code a shot. And remarkably, it actually worked. This completely shifted how I think about development workflows.

I’ve been using Claude Code ever since. I started small: I wrote project commands that fetch GitLab issue details through MCP and let Claude have at it, and gradually got familiar with this new agentic tooling.

Key Learnings from My Workflow Transformation

This experience has led to several key insights about how AI tools are reshaping development workflows:

Learning 1: Context Management is Critical

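I’ve added the following to my global .gitignore:

_md/

This gives every project a scratch folder that stays out of version control. I use it in a few ways:

- Claude Code can write plans to Markdown files in _md/ using literal checklists (- [ ]) and loop over them systematically (preferably test-driven)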

- Leave breadcrumbs for future sessions in organized Markdown summaries

- Export deep research from other tools (e.g. ChatGPT) to Markdown, then ingest in Claude Code for strategic planning (I used this workflow to successfully migrate a family recipe website from Ruby on Rails 2 to Rails 7)
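The checklist format is what makes that loop work: plain - [ ] and - [x] lines are trivial to scan for the next open item. Claude Code does this on its own when you ask it to; the Python sketch below only illustrates the mechanics, and the function names and the sample plan are hypothetical.

```python
from __future__ import annotations

import re
from typing import Optional

# Matches task-list items such as "- [ ] todo" or "- [x] done" (x in either case).
TASK_RE = re.compile(r"^\s*[-*] \[( |x|X)\] (.+)$")


def parse_tasks(markdown: str) -> list[tuple[bool, str]]:
    """Return (done, text) for every checklist item, in document order."""
    tasks = []
    for line in markdown.splitlines():
        match = TASK_RE.match(line)
        if match:
            tasks.append((match.group(1).lower() == "x", match.group(2).strip()))
    return tasks


def next_open_task(markdown: str) -> Optional[str]:
    """The first unchecked item, i.e. what the next loop iteration picks up."""
    for done, text in parse_tasks(markdown):
        if not done:
            return text
    return None


def check_off(markdown: str, task: str) -> str:
    """Mark the first unchecked item whose text equals `task` as done."""
    lines = markdown.splitlines(keepends=True)
    for i, line in enumerate(lines):
        match = TASK_RE.match(line.rstrip("\r\n"))
        if match and match.group(1) == " " and match.group(2).strip() == task:
            # The first "[ ]" on a matching line is always the checkbox itself.
            lines[i] = line.replace("[ ]", "[x]", 1)
            break
    return "".join(lines)


# Hypothetical plan, as it might appear in _md/plan.md.
plan = "# Plan\n- [x] Write a failing test\n- [ ] Make the test pass\n- [ ] Refactor\n"
print(next_open_task(plan))  # Make the test pass
```

Because the plan is just a file on disk, it survives the end of a session: a fresh session can read it and resume at the first unchecked box.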

Context windows will keep getting bigger, but I think this workflow trick is here to stay, at least for a while. I also mean to look into https://www.task-master.dev, which seems to streamline this process even further.

Learning 2: Git Branches Create Workflow Conflicts

The problem with git branches is that checking one out claims the state of your working directory. Two agent sessions can’t safely share that state, as they might get in each other’s way.

There’s already a ready-made solution: Git Worktrees.

Git worktrees let you check out several branches at once, each in its own folder backed by the same repository, so every AI agent session gets an isolated working directory.

It’s not perfect (yet): I had to wrap most worktree actions to handle chores such as copying .envrc around. There might be better ways to do this; IndyDevDan has a YouTube video on the subject: https://www.youtube.com/watch?v=f8RnRuaxee8.
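A minimal Python sketch of such a wrapper, assuming worktrees live next to the main checkout (e.g. ../myrepo-feature-x) and that .envrc is the only untracked file worth carrying over. The add_worktree name, the sibling-folder layout and FILES_TO_COPY are hypothetical choices for illustration, not the script I actually use; the only git command involved is the standard git worktree add -b <branch> <path> <commit-ish>.

```python
import shutil
import subprocess
from pathlib import Path

# Hypothetical: untracked, per-checkout files that a new worktree won't have.
FILES_TO_COPY = (".envrc",)


def add_worktree(repo: Path, branch: str, base: str = "HEAD") -> Path:
    """Create a new branch in a sibling worktree and copy local-only files into it."""
    repo = repo.resolve()
    # Assumed layout: <parent>/<repo>-<branch>, with "/" in branch names flattened.
    target = repo.parent / f"{repo.name}-{branch.replace('/', '-')}"
    # Creates `branch` from `base` and checks it out in `target`.
    subprocess.run(
        ["git", "-C", str(repo), "worktree", "add", "-b", branch, str(target), base],
        check=True,
        capture_output=True,
        text=True,
    )
    # Untracked files are not part of the checkout, so copy them over explicitly.
    for name in FILES_TO_COPY:
        src = repo / name
        if src.is_file():
            shutil.copy2(src, target / name)
    return target
```

Running add_worktree(Path("."), "feature/login") from the main checkout would create a sibling folder on a new feature/login branch, ready for its own Claude Code session.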

Learning 3: IDEs Have Become Precision Tools

Since moving a lot of my work to Claude Code, I’ve gutted my Neovim plugins down to the bare minimum, and I certainly no longer run LLM or autocomplete tools such as GitHub Copilot.

My editor has become a scalpel, reserved for precision work. I only open it for quick edits, to show Claude what I meant, or for small reviews.

Learning 4: Our SDLC Doesn’t Make a Lot of Sense Anymore

This reinforces my opening point: our traditional SDLC patterns are fundamentally misaligned with agentic workflows.

Why am I still orchestrating all of this from my machine? Why isn’t my source code management platform initiating these sessions? I’d rather have a single command center, closer to air traffic control, than kick everything off from my own machine.

This shift requires rethinking fundamental assumptions: if an AI agent can write, test, and deploy code autonomously, do we still need the same approval gates? Should quality control happen during development rather than after?

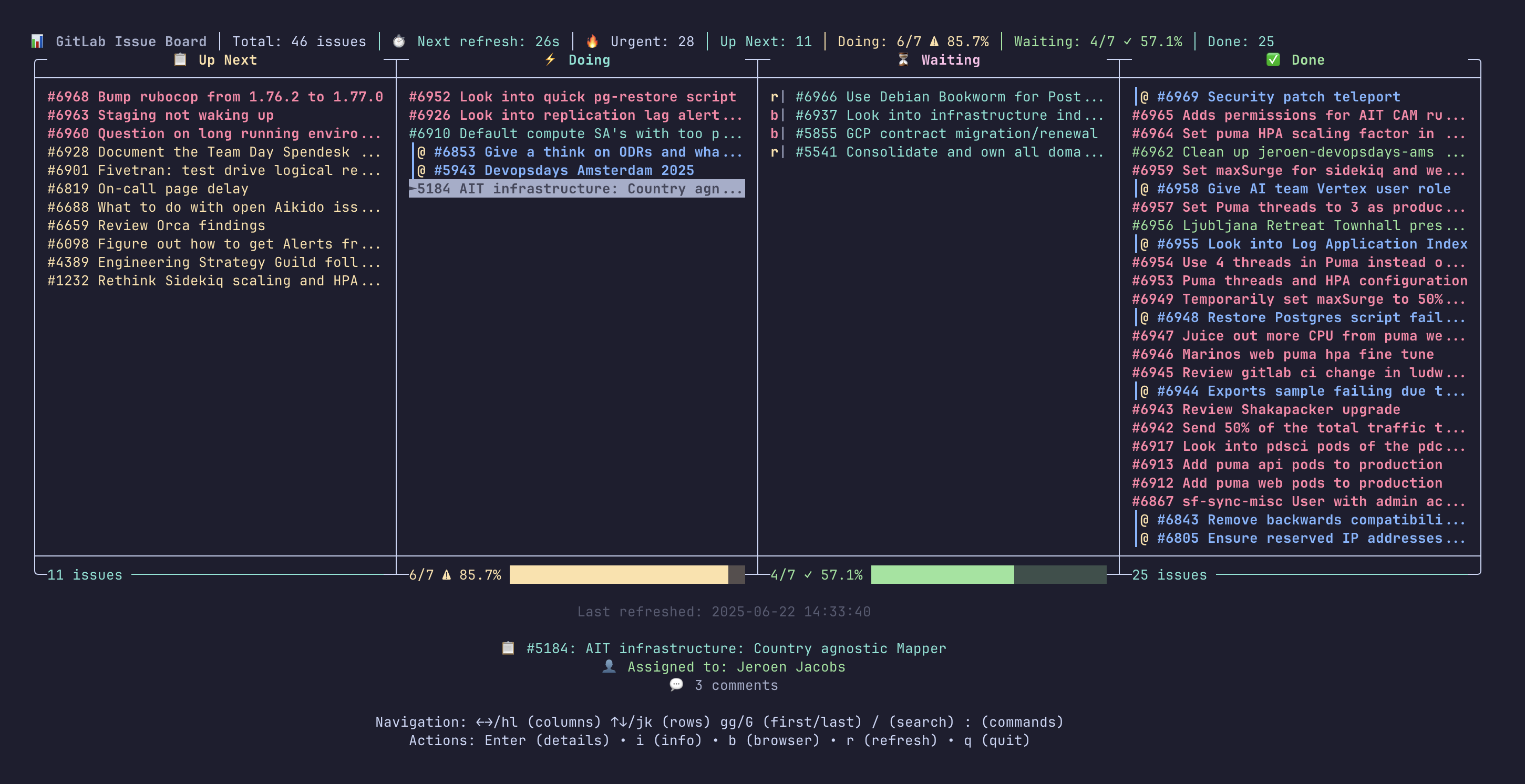

A PoC terminal issue board I’m working on, for centralized orchestration


Where This Leads Us

These learnings point toward a fundamental reimagining of how we build software. I’m unsure what it’ll look like in a couple of years.

We’re moving from being individual developers to being conductors of agents within collaborative workflows. The question isn’t whether this will happen, but how quickly we can adapt our processes to leverage it effectively.

If you’re still skeptical (like I was), I get it. But find someone you trust who’s actually using these tools successfully. Skip the corporate demos and marketing hype. If it helps you get past the hype, just think of it as automation rather than AI. Look for honest, practical accounts of what works and what doesn’t. Skepticism is healthy.

If you want to dip your toes in the water, here’s what worked for me:

Start small. Pick one annoying task (maybe writing boilerplate or documentation) and try Claude Code for just a week. Don’t try to revolutionize your entire workflow on day one.

Set up that _md/ folder and start documenting your experiments, even if they feel silly at first. Future you will thank present you when you’re trying to remember what worked and what didn’t.

Look for developers you already respect who are sharing honest experiences, not the ones pushing tools for affiliate commissions or corporate sponsorships.

Actually measure something. Don’t rely on vibes. Track how long specific tasks take before and after. I was shocked when I realized how much of a force multiplier Claude Code ended up being.
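If you want the measuring to be a little less ad hoc, here’s a minimal Python sketch, assuming you time each task yourself and log it to a hypothetical _md/timings.csv (so it stays out of git with your other notes). The timed and summarize helpers and the manual/claude labels are illustrative names, not part of any tool.

```python
from __future__ import annotations

import csv
import time
from collections import defaultdict
from contextlib import contextmanager
from pathlib import Path
from statistics import mean
from typing import Iterator

LOG = Path("_md/timings.csv")  # hypothetical location


@contextmanager
def timed(task: str, mode: str, log: Path = LOG) -> Iterator[None]:
    """Append a (task, mode, seconds) row when the block exits, even if it raised."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        log.parent.mkdir(parents=True, exist_ok=True)
        with log.open("a", newline="") as f:
            csv.writer(f).writerow([task, mode, f"{elapsed:.1f}"])


def summarize(log: Path = LOG) -> dict[str, float]:
    """Mean seconds per mode across every logged task."""
    by_mode: dict[str, list[float]] = defaultdict(list)
    with log.open(newline="") as f:
        for _task, mode, seconds in csv.reader(f):
            by_mode[mode].append(float(seconds))
    return {mode: mean(values) for mode, values in by_mode.items()}
```

For example, wrapping input("Press Enter when done... ") in with timed("write API docs", "claude"): records how long that task took; once you have rows for both modes, summarize() gives you numbers to compare instead of vibes.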

The landscape is shifting fast. Those who adapt their workflows now will have a significant advantage over those who wait for the tools to accommodate traditional development patterns.
