Say you have a problem in front of you. It doesn’t really matter what the problem is, let’s say the problem is that you have to write out the entire text of Hamlet on a typewriter. This is not a particularly difficult problem to solve in the modern day. Naively, you could just look up the text of Hamlet on some website and then sit down at your nearby typewriter (what, you don’t just have one of those in the house?) and plink out the play character by character. Maybe at first you get a little tripped up on how the typewriter actually works, but within at most an hour you’ll have the whole thing ready to go.

If you were Sam Altman, you might go “No no, that’s silly. What we’ll do instead is grab a ton of monkeys, like infinite monkeys, and we’ll sit all of those monkeys down at infinite typewriters, and eventually between all of the shit flinging and banana eating one of them will produce Shakespeare. (And if you shake a bit of quantum dust on all of this, you will get Shakespeare very quickly).” Naively, you may think that this is a very expensive thing to do. And you’d be right, it is extremely expensive. But, critically, it would work in theory.

Why does this not work in practice? Monkeys are expensive, time is expensive, we just don’t have the resources to actually implement this monkey business in the real world. But this hypothetical example illustrates something rather important: there is no problem that cannot be solved with enough monkeys. If you have the resources for it, you can keep throwing monkeys at a problem until eventually the problem goes away. In fact, a sufficiently large number of monkeys is equivalent to a general purpose problem solver over a long enough time scale.

Replace monkeys with compute (and data) and it turns out that this basic formulation is approximately what is happening in all of the AI labs around the world. It’s not exactly as silly as monkeys at typewriters, of course, but the basic principle is that you really can just keep throwing compute (and data) at a problem until eventually the problem is solved. Richard Sutton, one of the fathers of reinforcement learning, called this ‘the bitter lesson’:

The bitter lesson is based on the historical observations that 1) AI researchers have often tried to build knowledge into their agents, 2) this always helps in the short term, and is personally satisfying to the researcher, but 3) in the long run it plateaus and even inhibits further progress, and 4) breakthrough progress eventually arrives by an opposing approach based on scaling computation by search and learning. The eventual success is tinged with bitterness, and often incompletely digested, because it is success over a favored, human-centric approach.

And actually, it turns out you can go a step further and throw compute (and data) at a problem in a predictable way and get predictable returns from that investment. Very roughly, exponential increases in compute and data result in linear improvements in model performance, following power-law relationships. We call these ‘scaling laws’ — inspired by ‘the laws of physics’.
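To make "exponential in, linear out" concrete, here is a minimal sketch of a power-law curve. The constants `a` and `b` are invented for illustration and are not fitted to any real model; the only point is that every 10x jump in compute buys the same fixed step down in log-loss.

```python
import math


def power_law_loss(compute: float, a: float = 10.0, b: float = 0.1) -> float:
    """Toy scaling law: loss = a * compute^(-b). Both a and b are made up."""
    return a * compute ** (-b)


# Grow compute by 10x per step, i.e. an exponential increase.
budgets = [10.0 ** k for k in range(18, 24)]
losses = [power_law_loss(c) for c in budgets]

# Under a power law, each 10x step multiplies the loss by the same factor...
ratios = [later / earlier for earlier, later in zip(losses, losses[1:])]

# ...which means log-loss drops by the same amount every step (a straight line).
log_steps = [
    math.log10(earlier) - math.log10(later)
    for earlier, later in zip(losses, losses[1:])
]

for c, loss in zip(budgets, losses):
    print(f"compute={c:.0e}  loss={loss:.4f}")
```

On a log-log plot this is a straight line, which is what makes the returns predictable enough to plan a training budget around.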

This is a deeply unintuitive hypothesis. There must be some things that can’t be brute forced, right?

In the mid 2010s, the idea that models get better as they get bigger was called the ‘scaling hypothesis’ and a lot of people wrote a lot about whether or not the scaling hypothesis was a real thing. There were many models, and many of them were quite big. But all of them plateaued eventually. Then, in 2018-2020, OpenAI blew the whole debate sky high. They released GPT-1, GPT-2, and GPT-3 in quick succession. Each model was simply the previous model but bigger, trained with exponentially more data and more compute. The rest, as they say, is history.

For a long time, many people thought that the creation of music and art and writing would never be reduced to a simple search over some hyperparameter space. Turns out those people were wrong, and these days we have image generators and text generators that are so good they’re starting to cause mass psychoses. In fact, it seemed like the only things that couldn’t be brute forced were things that actually occurred in the real world — like plumbing or folding laundry. This prompted many people to rightfully complain that the whole point of AI was to automate boring things, not fun things.

You know what the biggest problem with pushing all-things-AI is? Wrong direction. I want AI to do my laundry and dishes so that I can do art and writing, not for AI to do my art and writing so that I can do my laundry and dishes.

There are lots of reasons why robotics, and especially home robotics, has been difficult, but the TLDR is that there is an impossibly long tail of edge cases. What happens when you get some dust on your camera lens? How do you deal with a dog that is maybe too friendly with your household robot? Maybe you didn’t train on fabric that folds exactly like that. Stairs are hard. Etc etc. This long tail is why you have so much effort spent on seemingly basic tasks like picking things up. When I was at Google, I remember that the X building had a room called ‘the arm farm’, where dozens of robot arms spent all day picking up objects of various sizes and shapes just to get training data. Entire PhDs have been spent on just robot gripping.

All of this to say, reality has a lot more granularity and noise than basically any simulation. There’s also often more cost to getting things wrong: things that break or people that get hurt. If you want to build a useful robot, one that is sufficiently general purpose, you have to deal with all that. As a result, the general feeling is that even as AI takes off and monkeys (sorry, general purpose problem solvers) become more ubiquitous, robotics is still fundamentally unsolved and you have to do all sorts of hand-built things to get your robots to be useful.

But like…maybe we just need more monkeys?

This past week, startup Generalist released a very impressive demo called Gen0. They showed a single robot trained to do a wide range of tasks, many that it had never seen before. I was coincidentally visiting SF around the same time Gen0 dropped, and I’m not exaggerating when I say that every single person I knew even remotely close to robotics was buzzing about this thing. One friend of mine straight up said that this was the GPT-2 moment for robotics.

What was the secret sauce? How did Generalist do something that all of the big labs have spent hundreds of millions of dollars chasing?

GEN-0 is pretrained on our in-house robotics dataset, which includes over 270,000 hours of real-world diverse manipulation data

You’ve gotta be…that’s it??? That’s all it was?!

Technically no, that’s not all. They also talk about something called ‘harmonic reasoning’, which is some kind of ‘fundamentally new approach to train models’ blah blah blah it’s the data. The next biggest dataset comes from the Pi robotics guys, clocking in at a whopping 400 hours. The Generalist guys trained on roughly 700x more data and got fantastic results.

This is absolutely a massive accomplishment for Gen0, and I think everyone at Generalist should take a victory lap. But also, what on earth is going on over at Google / OpenAI / xAI and every other big player in this space? 270,000 hours is a lot of hours, but also, it’s not actually that much! How much does it cost to pay someone to do an hour of teleop? Generously, say it costs $20 per hour. So to collect 270,000 hours, you have to shell out about $5.4 million. Again, that’s a lot, but it’s not actually that much!
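The back-of-the-envelope math is simple enough to check in a few lines. This is a rough sketch only: the $20 per hour rate is the guess from the paragraph above, the function name is made up for illustration, and the million-hour row is a hypothetical larger target rather than a reported figure. It also ignores hardware, shipping, and overhead.

```python
def teleop_cost_usd(hours: int, hourly_rate_usd: int = 20) -> int:
    """Labor-only cost of collecting `hours` of data at a flat hourly rate."""
    return hours * hourly_rate_usd


reported = teleop_cost_usd(270_000)    # the dataset size Generalist quotes
rounded = teleop_cost_usd(250_000)     # a round number in the same ballpark
million = teleop_cost_usd(1_000_000)   # hypothetical million-hour dataset

print(f"270,000 hours:   ${reported:,}")
print(f"250,000 hours:   ${rounded:,}")
print(f"1,000,000 hours: ${million:,}")
```

Even the million-hour case comes out to tens of millions of dollars in labor under these assumptions, which is small next to what the big labs spend on compute.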

As far as I can tell, Generalist literally just sent out a bunch of grippers to a few thousand people and told them to use the grippers in their day-to-day lives in exchange for payment. Boom, hundreds of thousands of hours of teleop data. In the process, Generalist showed that robotics follows the same sort of scaling laws as every other kind of deep neural net. As the compute and data get exponentially bigger in tandem, the models get better, following a power law.

I strongly suspect everyone else is going to try and rapidly catch up on their teleop data hours now that Gen0 has proven what is possible. Expect a race to be the first company to a million hours of data.

It’s worth pausing for a moment to think about how the Generalist story reflects on broader trends in the industry. I’ve said in the past that:

Every AI model, large and small, is dependent on a few basic ingredients.

The first is compute. This is the substrate of intelligence. You need silicon, and you need a lot of it, and it needs to be wired up in a very particular way so that you can do electric sand magic to in turn do a lot of math very quickly. For most people, this means NVIDIA GPUs.

The second is data. Increasingly it seems like you need two different kinds of datasets. The first is just broad-based masses of data, as much as possible, with no care for quality of any kind under any measure. Reddit posts? Yep. Trip Advisor reviews? Into the mix. An Esperanto translation of the Canterbury Tales? Sure, why not. The suffix we are looking for here is -trillions. And the second is extremely tailored highly specific subsets of data that ‘teach’ the model. A few hundred thousand examples of perfect question-answer pairs, or extremely detailed reasoning traces, things like that.

But I should be more explicit: you need the compute and data to go hand in hand. You can’t really replace data with compute and vice versa, they need to scale in tandem. So if you have too much compute and not enough data, you might as well not have bothered with the compute. Sell some GPUs and go hire some labellers.
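One way to see why the two have to scale together is a toy loss with a separate term for each ingredient. The additive form and the exponents below are assumptions picked purely for illustration, not measurements from any real system: one term shrinks with compute, the other with data, and whichever you neglect ends up setting the floor.

```python
def toy_loss(compute: float, data: float,
             alpha: float = 0.3, beta: float = 0.3) -> float:
    """Hypothetical loss: one term falls with compute, the other with data."""
    return compute ** (-alpha) + data ** (-beta)


baseline = toy_loss(1.0, 1.0)

# A million times more compute, same data: the data term never moves.
compute_only = toy_loss(1e6, 1.0)

# Same total multiplier, split evenly between compute and data.
in_tandem = toy_loss(1e3, 1e3)

print(f"baseline:      {baseline:.3f}")
print(f"compute only:  {compute_only:.3f}")
print(f"in tandem:     {in_tandem:.3f}")
```

In this sketch the compute-only run can never get below the data term, no matter how many GPUs you add, while splitting the same multiplier across both keeps pushing the loss down.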

For a while, the industry as a whole has been compute capped. The big story of 2024 was how everyone and their mother was hoarding GPUs because NVIDIA literally could not pump them out fast enough.

That is no longer the case. The data centers have come online. All of the big players have at least one data center with a few hundred thousand GPUs wired together. That means the focus has shifted back to data. And if we’re going to talk about data, we’re going to have to talk about a startup called Mercor.

I suspect most people haven’t heard of this particular company. In fact, I really think that the only people who know about it are folks in the startup world. But man, people in the startup world hear about this company a lot. Mercor’s origins are a bit hazy — a group of Thiel fellows who all sorta personally know Sam Altman just happened to land OpenAI as their first big client and raise hundreds of millions of dollars seemingly overnight? Skeptical. Still, they really did become a unicorn seemingly overnight, and their most recent valuation was at $10 billion within 2 years of founding. The rapid growth of Mercor has turned them into a kind of gold-standard company that VCs love to talk about (and compare their companies to). Rapid growth, crazy valuation, and super young kids running the show? It’s made for Silicon Valley.

What does Mercor actually do? Originally they were just a hiring platform of the garden variety. You would use them to post job openings for things, and they would hire contractors in India to do those things. Supposedly they would use ‘AI’ to do things, but I think in practice this was mostly just a hiring board. At some point they created a video recording platform for their hiring tool. The idea was that you could record a video of yourself to help hiring managers (and maybe also the “AI”) evaluate and match you better. So they started collecting a bunch of video data of people doing things.

You probably see where I’m going with this.

Reading between the lines, I think at some point one of the big AI companies (OpenAI?) tapped Mercor on the shoulder and said, “Hey, like, it’s great that you’re sending us all these contractors to fill jobs and stuff. Good job, love that. But actually, we noticed that you’re also collecting all this video data of people doing stuff. And, like, we would pay you way way more for just that video data.” It’s not quite right that Mercor turned into a way for the big AI companies to get people to give them video data for free. But, also, that description is not quite inaccurate either.

In any case, Mercor eventually pivoted fully into the data brokerage space. They now hire contractors to put together bespoke datasets for the big AI players. I don’t blame you if you feel like you’re getting déjà vu. We’ve seen this company before, it was called ScaleAI and it just got bought by Meta for a lot of money.

Tech Things: Meta spends $14b to hire a single guy

[UPDATE: apparently Scale.AI employees received a dividend equal to a premium on the value of all of their stock. In other words, all of the employees were effectively bought out, but still retain their stock AND still have any upside if the company continues to do well. This neatly explains why the price tag was essentially the valuation of the company…

Unlike ScaleAI, Mercor is mostly now hiring white collar first-world experts — lawyers, doctors, researchers — to construct these datasets. The datasets aren’t cheap. One of my friends contracted for Mercor and was paid something like $100 per hour. Which should give you a sense of how much OpenAI is paying on the backend for Mercor to still be turning a profit.

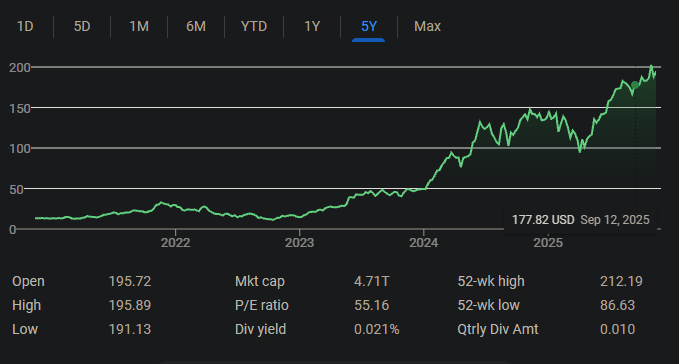

I’ll be honest, for a long time none of this made much sense to me. I was always surprised by ScaleAI’s success and equally surprised by Mercor’s success. Even though I just said that we are pivoting back to data, I really thought that we more or less had the data thing locked up. After all, we’ve indexed all of the world’s text, images, and video. And while we sometimes may need to get a few hundred thousand hours of some really specific data format — like teleop data or some kind of health data — I was under the impression that we already had pretty large datasets of those things. Eventually, though, something clicked. Google has indexed all of the world’s text, images, and video. Google never really needed ScaleAI, it had an internal equivalent called HComp. And Google is probably not using Mercor. My time at Google Research made me forget that the rest of the world exists and does not have the entire world’s data and has distinctly non-Google problems. Everyone else that is competing with Google is desperately trying to catch up to Google’s data reserves. Thus the money being thrown around to build these datasets. Yet another reason why I am bullish on Google.

Tech Things: Gemini 2.5 and The Bull Case for Google

Now, look, I have to admit up front that I'm biased here. I worked at Google for 4 years, and I loved it, and also I still have a little tiny bit of their stock (read: all of it), so, you know, feel free to critique my incentives if you want.

Anyway, data itself does have limitations, and I expect the scaling laws to kick back in on the compute side eventually. But as we saw with Gen0 above, we may be entering a brief window of a few months / years where everyone is scrambling to get some very bespoke datasets. Expect to see a raft of startups coming to meet that demand, with niche focus on creatives, aesthetics, and specific industries.
