
JavaScript-native AI agent orchestration via containerization. Deploy anywhere, scale infinitely.

Quick Start

Get started in 3 steps

From installation to running your first agent in under 5 minutes.

1. Install Dank

   Install Dank globally with a single command. Dank detects whether Docker is installed and installs it if needed.

2. Initialize Project

   Create your agent configuration and project structure with built-in templates.

3. Run Agents

   Deploy and manage your agents with Docker orchestration and real-time monitoring.

Why Dank

Built for the modern developer

JavaScript-powered, Docker-native, and open-source from day one.

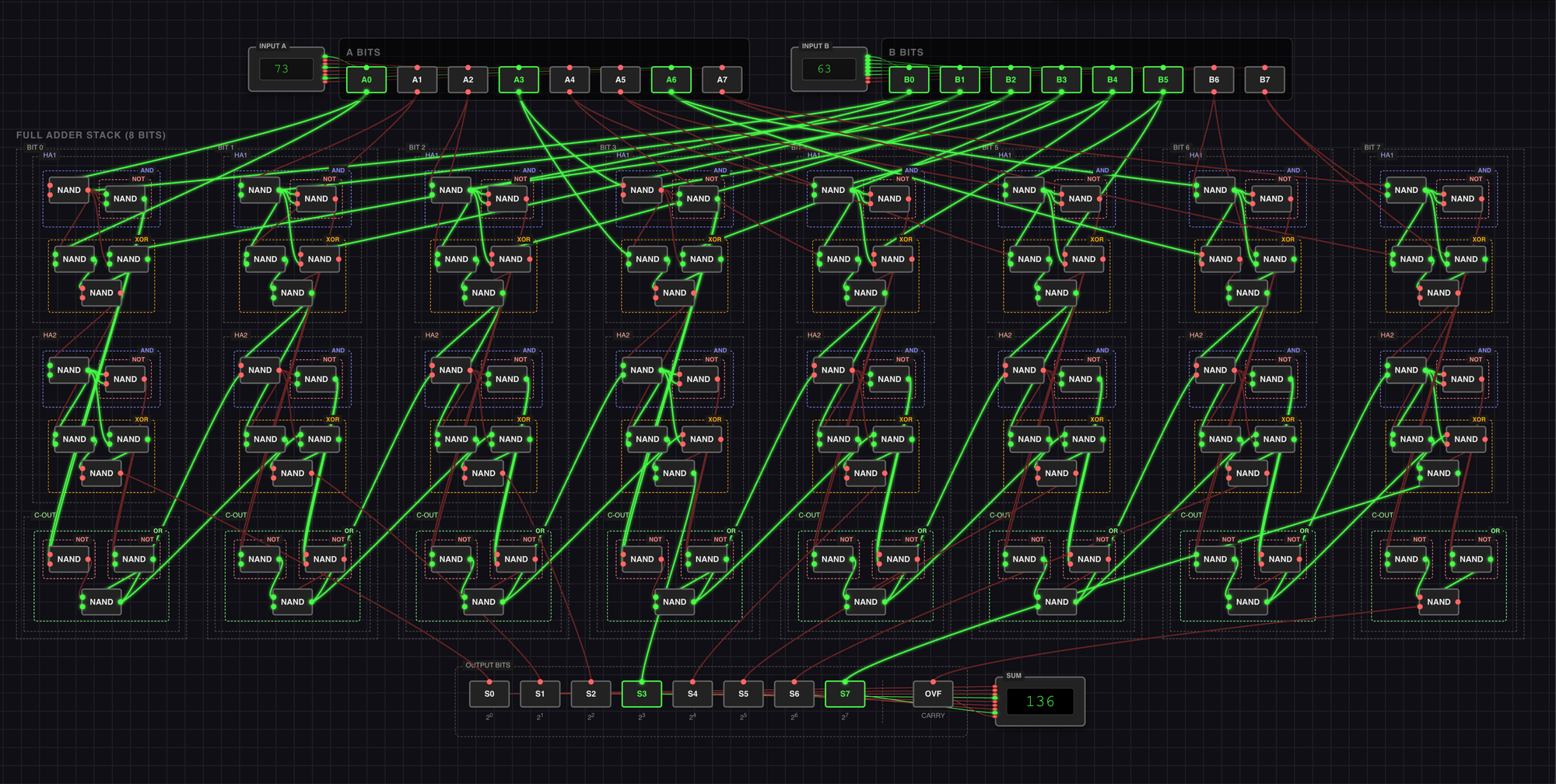

Multi-Agent Orchestration

Deploy and manage multiple AI agents as containerized services


JavaScript Native

Built on the world's most popular programming language. No Python dependencies, no complex setup—just familiar JavaScript that every developer knows.

JavaScript runs on roughly 98% of websites

Universal Deployment

Docker containers work everywhere—AWS, GCP, Azure, Kubernetes, or your own servers. Deploy to any stack, any cloud, any infrastructure.

100% infrastructure-agnostic

Open Source & Extensible

MIT licensed and community-driven. Add custom LLM providers, extend functionality, or contribute back to the ecosystem.

MIT licensed • Community driven

CI/CD Made Simple

Docker-native architecture means your agents build, test, and deploy like any other containerized application.

Production-Ready Images

Dank automatically builds optimized Docker images of your agents with all dependencies included. No more "works on my machine" issues.

dank build --push registry.com/my-agent:v1.0

Universal Compatibility

Deploy to any container orchestration platform—Kubernetes, Docker Swarm, AWS ECS, or your own infrastructure. Same agent, anywhere.

Kubernetes • Docker Swarm • AWS ECS • Azure ACI

Configuration

Define agents in seconds

Simple JavaScript configuration with powerful Docker orchestration under the hood.

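    const { createAgent } = require('dank-ai');

    module.exports = {
      agents: [
        createAgent('customer-service')
          // The wrapping calls were lost from this snippet; the method names
          // setLLM and setResources and the 'openai' provider are assumed.
          .setLLM('openai', {
            model: 'gpt-4',
            temperature: 0.7
          })
          .setResources({
            memory: '1g',
            cpu: 2
          })
          .setPrompt(`You are a helpful customer service agent...`)
          .addHandler('output', console.log)
      ]
    };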

Simple Configuration

Define your AI agents with clean, readable JavaScript. No complex YAML files or obscure configuration syntax.

1. Choose Your LLM

   OpenAI, Anthropic, Cohere, or Ollama. You can also add your own custom provider with a few lines of code.

2. Configure Resources

   Set memory, CPU, and timeout limits. Dank handles the Docker container configuration automatically.

3. Add Event Handlers

   Define prompts, add event handlers, and customize behavior with familiar JavaScript patterns.
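The resource step uses Docker-style values such as a memory limit of '1g'. As a rough illustration of what such a value means, the sketch below converts a Docker-style memory string into bytes. The helper name `parseMemory` is hypothetical and is not part of Dank; how Dank itself interprets these values is not shown on this page.

```javascript
// Hypothetical helper (not part of Dank): convert a Docker-style memory
// string such as '512m' or '1g' into a number of bytes.
const UNITS = { b: 1, k: 1024, m: 1024 ** 2, g: 1024 ** 3 };

function parseMemory(value) {
  const match = /^(\d+)([bkmg])?$/i.exec(String(value).trim());
  if (!match) {
    throw new Error(`Invalid memory value: ${value}`);
  }
  const amount = Number(match[1]);
  const unit = (match[2] || 'b').toLowerCase(); // no suffix means bytes
  return amount * UNITS[unit];
}

console.log(parseMemory('1g'));   // 1073741824
console.log(parseMemory('512m')); // 536870912
```

Validating the string up front like this makes a typo in a resource limit fail at configuration time rather than at container start.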

Management

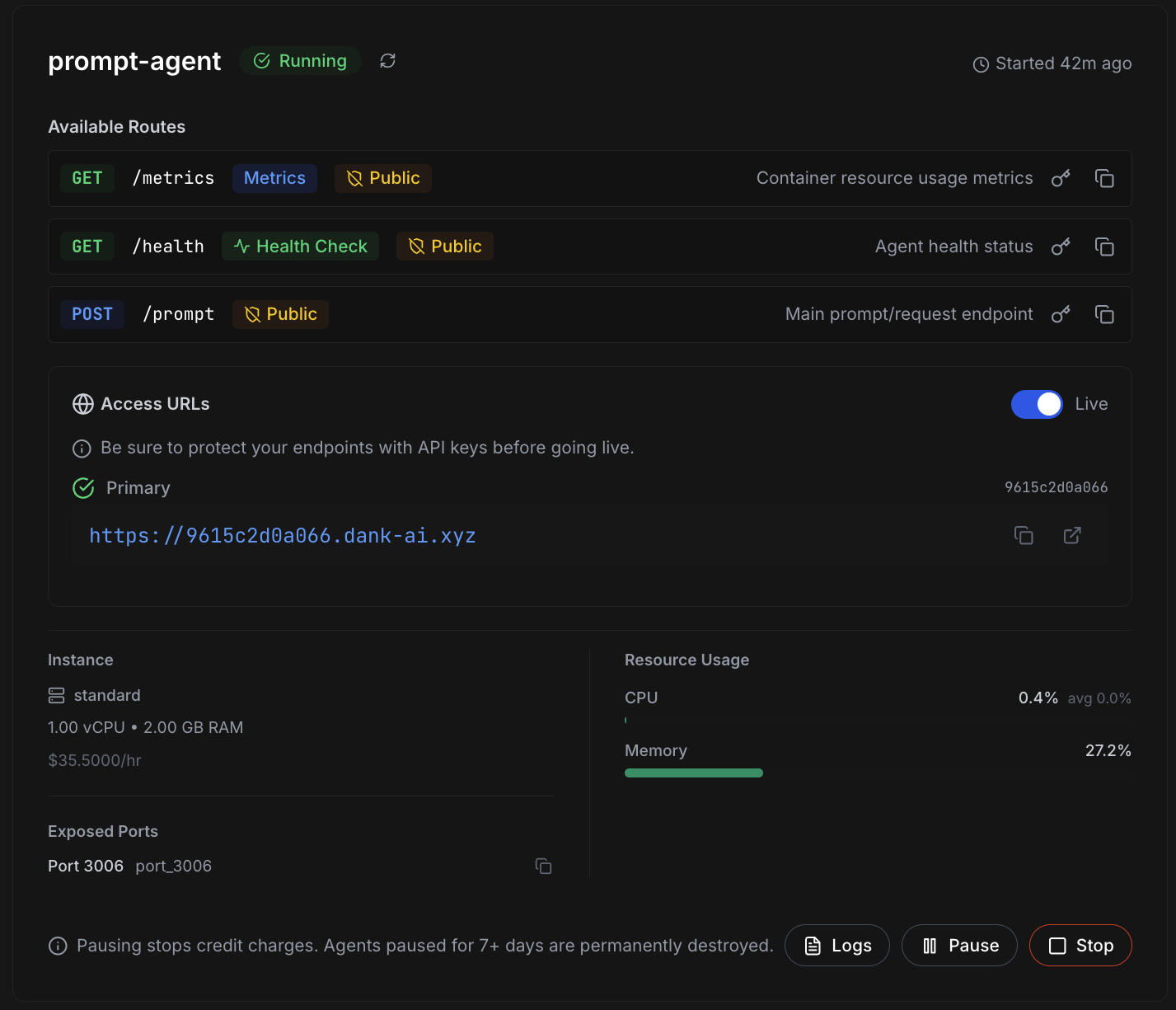

Direct Agent Control

Manage every aspect of your containerized agents through an intuitive interface. Monitor performance, configure resources, secure endpoints, and optimize utilization in real-time.

Resource Management

Allocate CPU, memory, and storage per agent. Set resource limits and requests to ensure optimal performance without over-provisioning.

- Dynamic resource scaling

- Real-time utilization metrics

- Cost optimization insights

Endpoint Configuration

Configure HTTP endpoints, webhooks, and API routes for each agent. Manage request routing, rate limiting, and response handling.

- Custom endpoint routing

- Request/response logging

- Load balancing controls

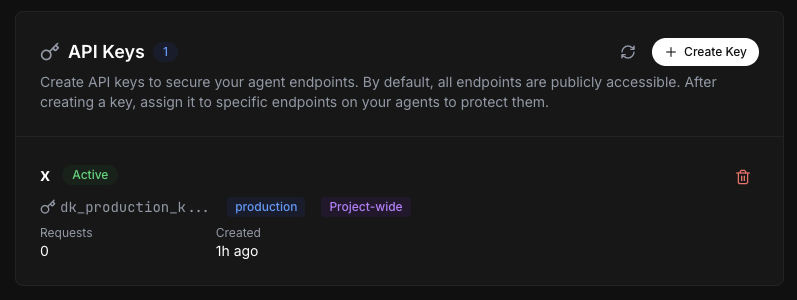

Security & Access Control

Implement authentication, authorization, and encryption at the agent level. Define access policies and manage API keys securely.

- API key management

- Role-based access control

- TLS/SSL encryption

Performance Monitoring

Track agent performance with detailed metrics and logs. Monitor response times, error rates, and resource consumption in real-time.

- Live performance dashboards

- Historical analytics

- Alerting and notifications

Full control, zero complexity. Manage agents like containers, deploy like microservices.

Built on Docker standards

Production Ready

Deploy with Confidence

Enterprise-grade security, dedicated endpoints, and secure configuration management built in from day one.

Custom API Keys

Protect endpoints with custom API keys. Expose public endpoints for customers while securing internal endpoints for your team with granular access control.

- Per-endpoint authentication

- Team vs customer access

- Key rotation and management
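To make per-endpoint authentication concrete, here is a minimal sketch of a key check that separates customer-facing and team-only endpoints. The endpoint paths, key values, and function names are all placeholders for illustration; this is not Dank's implementation.

```javascript
const crypto = require('node:crypto');

// Hypothetical policy table: which API keys may call which endpoint.
const endpointKeys = new Map([
  ['/public/chat', ['customer-key-123']],
  ['/internal/admin', ['team-key-456']],
]);

// Compare two strings in constant time. Hashing first gives both sides
// the same length, which timingSafeEqual requires.
function safeEqual(a, b) {
  const ha = crypto.createHash('sha256').update(a).digest();
  const hb = crypto.createHash('sha256').update(b).digest();
  return crypto.timingSafeEqual(ha, hb);
}

// Return true when the presented key is allowed for the endpoint.
function isAuthorized(endpoint, presentedKey) {
  const allowed = endpointKeys.get(endpoint) ?? [];
  return allowed.some((key) => safeEqual(key, String(presentedKey)));
}

console.log(isAuthorized('/public/chat', 'customer-key-123'));    // true
console.log(isAuthorized('/internal/admin', 'customer-key-123')); // false
```

Key rotation fits the same shape: add the new key to an endpoint's list, migrate callers, then remove the old key.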

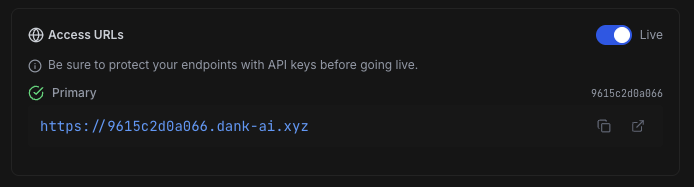

Dedicated Hostnames

Deploy and go straight to production with operational agent addresses. Each agent gets its own dedicated hostname for immediate accessibility.

- Instant production URLs

- Custom domain support

- SSL/TLS by default

Environment Variables

Parameterize your agent configuration with environment variables. Update settings without redeploying code for flexible, maintainable agents.

- Runtime configuration

- Environment-specific values

- No code changes needed
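A minimal sketch of runtime configuration through environment variables follows. The variable names `AGENT_MODEL` and `AGENT_TEMPERATURE` are hypothetical, chosen only for this example; substitute whatever names your deployment uses.

```javascript
// Read agent settings from the environment, falling back to defaults.
// AGENT_MODEL and AGENT_TEMPERATURE are hypothetical variable names.
function loadAgentSettings(env = process.env) {
  const temperature = Number(env.AGENT_TEMPERATURE ?? '0.7');
  if (Number.isNaN(temperature)) {
    throw new Error('AGENT_TEMPERATURE must be a number');
  }
  return {
    model: env.AGENT_MODEL ?? 'gpt-4',
    temperature,
  };
}

// The same code yields different settings per environment.
console.log(loadAgentSettings({ AGENT_MODEL: 'gpt-4', AGENT_TEMPERATURE: '0.2' }));
console.log(loadAgentSettings({})); // falls back to the defaults
```

Passing `env` as a parameter keeps the function easy to test without mutating `process.env`.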

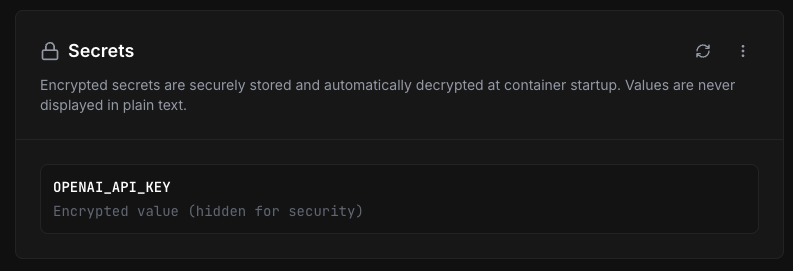

Secure Secrets

Store API keys, tokens, and sensitive credentials securely. Encrypted at rest with access controls to protect your production deployments.

- Encrypted storage

- Audit logging

- Role-based access
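For readers curious what encryption at rest can look like, the sketch below encrypts and decrypts a secret with AES-256-GCM using Node's built-in `crypto` module. It is a generic illustration under the assumption of a 32-byte key held outside the stored data; the page does not say which scheme Dank uses, and the function names are hypothetical.

```javascript
const crypto = require('node:crypto');

// Encrypt a secret with AES-256-GCM. The key must be 32 bytes.
function encryptSecret(plaintext, key) {
  const iv = crypto.randomBytes(12); // 96-bit nonce, fresh for every encryption
  const cipher = crypto.createCipheriv('aes-256-gcm', key, iv);
  const ciphertext = Buffer.concat([cipher.update(plaintext, 'utf8'), cipher.final()]);
  return { iv, ciphertext, tag: cipher.getAuthTag() };
}

// Decrypt and verify integrity; throws if the data or tag was altered.
function decryptSecret({ iv, ciphertext, tag }, key) {
  const decipher = crypto.createDecipheriv('aes-256-gcm', key, iv);
  decipher.setAuthTag(tag);
  return Buffer.concat([decipher.update(ciphertext), decipher.final()]).toString('utf8');
}

const key = crypto.randomBytes(32); // in practice, loaded from a key store
const stored = encryptSecret('sk-example-not-a-real-key', key);
console.log(decryptSecret(stored, key)); // sk-example-not-a-real-key
```

GCM is used here because it authenticates the ciphertext as well as encrypting it, so tampering is detected at decryption time.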

Production-ready from the start. Security, scalability, and reliability built into every deployment.

CLI Commands

Powerful command-line interface

Deploy, build, and manage your agents with simple commands.

Dank automatically builds Docker images, manages containers, and provides real-time monitoring with zero configuration.

Create optimized Docker images with all dependencies included. Perfect for production deployment and CI/CD pipelines.

Build and push your agent images to any Docker registry. Integrates seamlessly with GitHub Actions and GitLab CI.

Event-Driven Architecture

Event-Triggered Architecture

Loose coupling, flexible development, and patterns every developer already knows.

    const { createAgent } = require('dank-ai');

    const agent = createAgent('smart-assistant');

    // Preprocess requests before they reach the LLM.
    // getUserHistory, searchRAG, and the other helpers below are your own application code.
    agent.addHandler('request_input', async (context) => {
      const { input, userId } = context;

      // Fetch user context from database
      const userHistory = await getUserHistory(userId);
      const ragContext = await searchRAG(input);

      // Enhance context before LLM
      context.enhancedInput = {
        original: input,
        history: userHistory,
        ragContext: ragContext
      };
    });

    // Process LLM responses
    agent.addHandler('request_output', async (response) => {
      // Save conversation to database
      await saveConversation(response);

      // Update user preferences
      await updateUserPreferences(response);
    });

    // Handle errors gracefully
    agent.addHandler('request_error', async (error) => {
      await logError(error);
      await notifyAdmin(error);
    });

Stateless runtime wrapped with stateful event handlers

Intercept requests before they hit the LLM to add database calls, RAG systems, and user context. Make your agents stateful and context-aware with familiar JavaScript patterns.

Familiar Patterns

Event listeners, async/await, and standard JavaScript patterns. No new syntax to learn—just the patterns you already use.

Loose Coupling

Handlers are independent and composable. Add, remove, or modify logic without affecting other parts of your system.

Easy Testing

Test individual handlers in isolation. Mock events, verify outputs, and ensure reliability with standard testing practices.

Built-in Events

- request_input
- request_output
- request_error
- agent_start
- agent_stop
- agent_output
- custom-event
- any-event

Preprocess requests, enhance responses, and add stateful behavior with database calls and RAG systems.
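To show why independent handlers compose cleanly, here is a small stand-in for a handler registry in which several handlers share one event name. The `HandlerRegistry` class is an illustration only; it is an assumption about the general pattern and does not reflect Dank's internals.

```javascript
// Minimal stand-in for an agent's handler registry (illustration only).
class HandlerRegistry {
  constructor() {
    this.handlers = new Map();
  }

  // Register a handler; several handlers may share one event name.
  addHandler(eventName, handler) {
    const list = this.handlers.get(eventName) ?? [];
    list.push(handler);
    this.handlers.set(eventName, list);
    return this; // allow chaining
  }

  // Run every handler for the event in registration order.
  async emit(eventName, payload) {
    for (const handler of this.handlers.get(eventName) ?? []) {
      await handler(payload);
    }
  }
}

const registry = new HandlerRegistry();
const seen = [];
registry
  .addHandler('request_output', async (res) => seen.push(`saved:${res.text}`))
  .addHandler('request_output', async (res) => seen.push(`logged:${res.text}`));

registry.emit('request_output', { text: 'hi' }).then(() => console.log(seen));
// [ 'saved:hi', 'logged:hi' ]
```

Removing or replacing one handler leaves the others untouched, which is the loose coupling described above.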

Distributed Runtime

Each agent gets its own runtime

Deploy agents across disparate cloud services with independent scaling and resource allocation.

Independent Agent Runtimes

Each agent runs in its own isolated container

Runtime: Node.js 18 • Memory: 1GB • CPU: 2 cores

Runtime: Node.js 18 • Memory: 2GB • CPU: 4 cores

Content Generation Agent

Azure Container Instances

Runtime: Node.js 18 • Memory: 512MB • CPU: 1 core

True Horizontal Scaling

Each agent is packaged as an independent Docker container that can be deployed to any cloud provider, scaled independently, and managed separately from other agents.

Independent Scaling

Scale each agent based on its specific workload. High-traffic agents get more resources while low-usage agents stay cost-effective.

Multi-Cloud Deployment

Deploy different agents to different cloud providers. Use AWS for some, GCP for others, Azure for compliance requirements.

Isolated Resources

Each agent gets its own memory, CPU, and network resources. No resource contention between agents, ensuring consistent performance.

Registry Agnostic

Commit agent runtimes to any container registry—Docker Hub, AWS ECR, GCP Artifact Registry, or Azure Container Registry.
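Each of these registries expects a slightly different image reference. The sketch below builds the reference for the four registries named above; the function name `imageRef` and every account ID, region, project, and repository value are placeholders.

```javascript
// Build a fully qualified image reference for a few common registries.
// All identifiers passed in are placeholders supplied by the caller.
function imageRef(registry, opts) {
  const { name, tag = 'latest' } = opts;
  switch (registry) {
    case 'dockerhub':
      return `docker.io/${opts.user}/${name}:${tag}`;
    case 'ecr':
      return `${opts.accountId}.dkr.ecr.${opts.region}.amazonaws.com/${name}:${tag}`;
    case 'gar':
      return `${opts.region}-docker.pkg.dev/${opts.project}/${opts.repository}/${name}:${tag}`;
    case 'acr':
      return `${opts.registryName}.azurecr.io/${name}:${tag}`;
    default:
      throw new Error(`Unknown registry: ${registry}`);
  }
}

console.log(imageRef('ecr', {
  accountId: '123456789012',
  region: 'us-east-1',
  name: 'my-agent',
  tag: 'v1.0',
}));
// 123456789012.dkr.ecr.us-east-1.amazonaws.com/my-agent:v1.0
```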

Serverless for Agents

Deploy with Dank Cloud

As easy as Vercel, but for AI agents. Deploy in seconds, scale automatically, pay only for what you use.

Zero configuration required

$10 Free Credits

New users get $10 in free credits to explore Dank Cloud. No credit card required to start.

Simple, Usage-Based Pricing

Choose the right instance type for your workload. Pricing is based on actual usage time.

| Instance | CPU | Memory | Price per hour |
| --- | --- | --- | --- |
| Small | 0.25 vCPU | 1 GB | $0.02 |
| Medium | 0.5 vCPU | 2 GB | $0.03 |
| Large (Popular) | 1 vCPU | 4 GB | $0.06 |
| XLarge | 2 vCPU | 8 GB | $0.13 |
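As a worked example of usage-based pricing, the sketch below estimates cost from the hourly rates listed above and shows how far the $10 free credit stretches. It assumes billing in whole hours for simplicity; the page does not state the billing granularity, and the function names are hypothetical.

```javascript
// Hourly rates from the pricing list, in cents to keep the math in integers.
const RATES_CENTS = { small: 2, medium: 3, large: 6, xlarge: 13 };

function rateFor(size) {
  if (!Object.hasOwn(RATES_CENTS, size)) {
    throw new Error(`Unknown instance size: ${size}`);
  }
  return RATES_CENTS[size];
}

// Cost in cents of running one instance for a number of whole hours.
function costCents(size, hours) {
  return rateFor(size) * hours;
}

// Whole hours of runtime that a credit balance (in cents) covers.
function hoursCovered(size, creditCents) {
  return Math.floor(creditCents / rateFor(size));
}

console.log(hoursCovered('small', 1000));       // 500 hours on the $10 credit
console.log(hoursCovered('xlarge', 1000));      // 76
console.log(costCents('large', 24 * 30) / 100); // 43.2 dollars for 30 days
```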

Deploy AI agents with confidence.

Runtime-independent AI agent framework.

Creator

Built in the shadows

Delta-Darkly

An elusive figure who emerges from the digital shadows, crafting tools that exist in the liminal space between human creativity and artificial intelligence. Delta-Darkly operates in the interstices of code and consciousness, building bridges between worlds that were never meant to meet.

Like a digital phantom, they appear only when the code compiles and the containers run. Their presence is felt in the elegant simplicity of solutions that others find impossibly complex.

Support

Keep Dank Open Source

Help keep Dank free and open source. Your donations support development, infrastructure, and the continued evolution of containerized AI agent orchestration.

Donation Address

0xF4E97E86c4e2a7CF60bAa3Fae78F80a4CDE86fCD

Accepts ETH and ERC-20 tokens

Works on any EVM-compatible network

Supports development and infrastructure
