Automatic performance capture and analytics for production applications in Python using a custom columnar database written in Rust.

View Kronicler on UseKronicler.com, PyPi.org, Crates.io and GitHub.

Important

Kronicler is still early in development. I'd appreciate any feedback to help make kronicler more useful for you!

- Automatic performance capturing

- Lightweight and concurrent*

- One Python dependency

- Works out-of-the-box without configuration

* concurrency is in development but not fully implemented as of version 0.1.1. Track concurrency in issue #123.

If you want to monitor the performance of a production application, kronicler offers efficient and lightweight logging with a single library. Kronicler lets you view runtime statistics for functions like mean and median as well as statistics for different percentiles.

A use-case for these statistics is to find functions that occasionally operate much slower than they do on average. By looking at the "slowest" speed, the standard error, and the mean, you can find functions that occasionally run much slower than expected. Sometimes it's hard to find and replicate these issues in a test environment, so keeping logs in your production application can improve your ability to find these issues.

| Kronicler (Python) | A Python library that provides the @kronicler.capture decorator to save performance logs to a database. | More about Python |

| Kronicler (Database) | Custom columnar database designed for log capturing and performance analytics. | More about Database |

| Kronicler (Rust) | A Rust library for accessing the columnar database and capture functions for Rust-based log capturing tasks. | More about Rust |

| Kronicler (CLI) | Rust-based CLI tool for analyzing performance logs captured with the capture methods. | More about CLI |

| Kronicler (Web) | Prototype web portal for remotely viewing performance logs. | More about Web |

You may need to use a virtual environment.

Kronicler provides a Python decorator called capture that will calculate the time it takes to run the function.

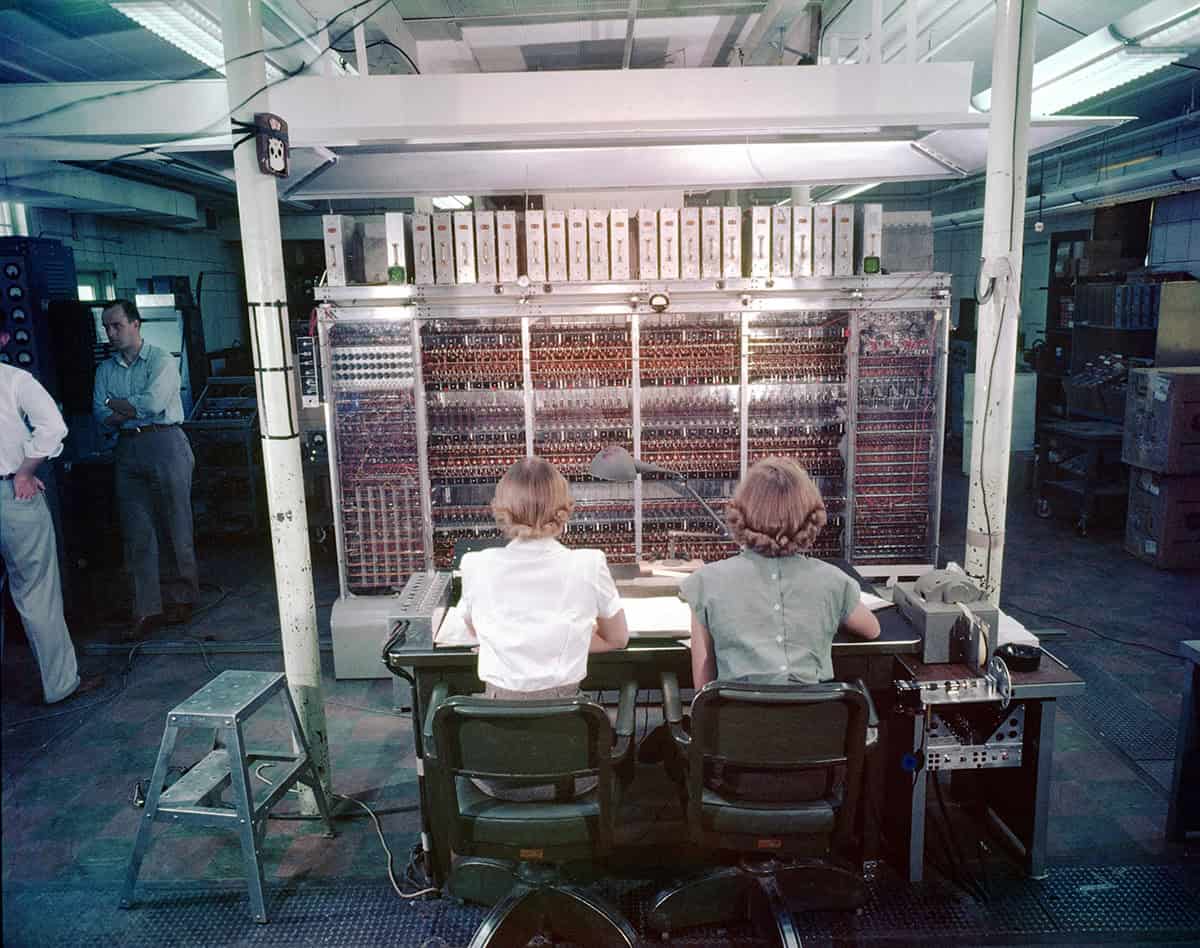

Simplified version of the package and database architecture. The data is passed from the Python decorator called capture to the database's queue. It then consumes that queue to insert each field into its respective column. The column uses the bufferpool to operate on pages.

This does not include details on:

- How the bufferpool manages pages.

- How pages operate.

- capture, index, row, or saving and loading with metadata.

The columnar database is somewhat inspired by my previous database called RedoxQL. A lot of the structure and architecture is different as well as how data is stored.

The bufferpool is based on my bufferpool project. I had to modify it to work with the rest of this database.

Install and Usage for Rust is coming soon...

I plan to implement the Rust version as an attribute to be used like the following:

Here is the most basic usage of Kronicler. Use it to capture the runtime of functions by adding the @kronicler.capture decorator.

With just two lines of code, you can add Kronicler to your FastAPI server.

For FastAPI, to capture each route, you can use the KroniclerMiddleware that will be included in v0.1.2. This allows you to capture every route that gets called.

If you want to capture functions manually, you can still do that with @kronicler.capture as normal. This is helpful if you want to benchmark functions that are not routes.

Code from tests/fastapi-test/main.py.

It's recommended to first use Kronicler's build-in capture decorator. However, if you want to write your own capture statements and fetch data yourself, you can use the functions in that come with the Python library.

More functionality will be added to the Python library in future releases.

If you're interested in using Kronicler's database directly in Rust to add custom logging functions (or just to use a columnar database), the library is published to crates.io.

Add as a dependency in your Cargo.toml.

To get a good idea of how to use Kronicler's internal Rust database, I'd recommended looking at some of the tests in the Rust files. You can also look at the source code for the kr binary in main.rs.

Here is an example of a function that fetches data based on index. It creates a Database from the new_reader trait.

You can also make a database that writes new capture data with the new trait.

Kronicler is designed to be as lightweight as possible. By adding logs to a queue concurrently*, Kronicler doesn't affect performance by much [PR #73, PR #76].

For accessing logs and running calculations, Kronicler uses a columnar database design to optimize file operations when looking at lots of data from only a few columns typical of analytics tasks.

* concurrency is in development but not fully implemented as of version 0.1.1. Track concurrency in issue #123.

I implemented a test version of Kronicler logging that uses SQLite under the hood to benchmark against.

Here is the function that we capture with kronicler_sqlite.capture defined by our test package that uses SQLite.

Here is where we call the function CAPTURE_COUNT times. Which in this case is 1000.

We do the same for the Kronicler DB version with kronicler.capture.

We then run each of those functions 50 times and log the results:

This test is called simple_sync.py. Note: concurrent mode is turned off as of v0.1.1, so those tests will not run. The concurrent version of the test is simple_concurrent.py and will exit with an error message explaining the lack of support in v0.1.1.

The data can be found at sync_insert_data.json.

Inserting is 7.71x faster than SQLite.

It's still unknown why SQLite has large latency spikes, but information about that will be mentioned in issue #101 if found.

The data can be found at sync_avg_data.json.

Kronicler uses amortized constant calculations to keep an average runtime for functions. This causes the mean calculation to be 837.24x faster than the average function in SQLite.

Here is how it's done in SQLite:

Kronicler keeps an updated mean on insert. This same approach can be done with SQLite by having your own index on function names and a table to keep track of the mean function time the same way Kronicler does it, however that's not the default and you'd have to build that into your implementation.

This script is called run_large_avg.sh.

Importantly, we test that the average is correct using a "ground truth" calculation.

This is from large_average.py.

As you can see, in the first test the kronicler_data amortized const average took 2.1696090698242188e-05 seconds but the ground truth written in Python 0.0007288455963134766 seconds. The Rust amortized constant is faster.

The Analytics Web Dashboard is still under construction. This feature will let you remotely view the logs collected from Kronicler.

You can view all of your data by running kr in the directory of your data:

You should see the data collected:

In the future, there will be many options for sorting, filtering, and viewing specific statistics.

By adding the capture decorator to your code (as seen below), Kronicler will automatically test the runtime of your function when it gets called. The results of the test get added to the database. This data can later be viewed in the Analytics Web Dashboard or the Analytics CLI.

To temporarily turn off logging, you can set KRONICLER_ENABLED=false. The default for KRONICLER_ENABLED is true, so it does not need to be set to make logging work.

You will need maturin installed, see Install Maturin.

You can run the scripts in tests/ to test out the functionality of Kronicler.

You can run the testing suite with the following command:

We use cargo t as an alias to run the tests in one thread.

The tests should all succeed

Check that only the needed Rust files are included.

Use maturin to publish the package

You may need to get a new API token from PyPi.org.

Create the virtual environment

Enter the virtual environment

I usually call my virtual environment either .venv or more frequently just venv

For more info, see the maturin docs.

Kronicler uses env_logger to log internals. To view these logs, you add the RUST_LOG env var.

For instance, you can include the logger for cargo run with the fetch argument.

This will print the logs:

You can add logs with the info! macro. There are also debug!, warn!, trace!, and error! variants.

You only need to call try_init once, and that's already done in lib.rs and main.rs.

Kronicler uses Ruff for formatting.

You can format all Python files with:

You can also check that they have the right format with:

Kronicler uses cargo fmt

.png)