Press shortcut → speak → get text. Free and open source ❤️

Whispering is a voice-to-text app that turns your speech into text with a single keyboard shortcut. It works anywhere on your desktop—in any app, any text field—giving you instant transcription without switching windows or clicking buttons.

Quick Links: Watch Overview (5 min) | Install (2 min) | Why I Built This | FAQ | Architecture

Unlike subscription services that charge $15-30/month, Whispering lets you bring your own API key and pay providers directly—as little as $0.02/hour. Or go completely free with local transcription. Your audio, your choice, your data.

Note: Whispering is designed for quick transcriptions, not long recordings. For extended recording sessions, use a dedicated recording app.

Want to see the voice coding workflow? Check out this 3-minute demo showing how I use Whispering with Claude Code for faster development.

- 🎯 Multiple Transcription Providers - Choose from Groq, OpenAI, ElevenLabs, or local options → See providers

- 🤖 AI-Powered Transformations - Automatically format, translate, or summarize your transcriptions → Learn more

- 🎙️ Voice Activity Detection - Hands-free recording that starts when you speak

- ⌨️ Custom Shortcuts - Set any keyboard combination for recording → Customize shortcuts

- 💾 Local-First Storage - Your data stays on your device with IndexedDB → Data privacy

- 🏗️ Modern Architecture - Clean, testable code with extensive documentation → Architecture deep dive

Get transcribing in 2 minutes → Download, install, speak

Choose your operating system below and click the download link:

🍎 macOS

Not sure which Mac you have? Click the Apple menu → About This Mac. Look for "Chip" or "Processor":

- Apple M1/M2/M3 → Use Apple Silicon version

- Intel Core → Use Intel version

- Download the .dmg file for your architecture

- Open the downloaded file

- Drag Whispering to your Applications folder

- Open Whispering from Applications

- "Unverified developer" warning: Right-click the app → Open → Open

- "App is damaged" error (Apple Silicon): Run xattr -cr /Applications/Whispering.app in Terminal

Windows

- Download the .msi installer (recommended)

- Double-click to run the installer

- If Windows Defender appears: Click "More Info" → "Run Anyway"

- Follow the installation wizard

Whispering will appear in your Start Menu when complete.

🐧 Linux

AppImage (Universal)

Debian/Ubuntu

Fedora/RHEL

Links not working? Find all downloads at GitHub Releases

Try in Browser (No Download)

No installation needed! Works in any modern browser.

Note: The web version doesn't have global keyboard shortcuts, but otherwise works great for trying out Whispering before installing.

Right now, I personally use Groq for almost all my transcriptions.

💡 Why Groq? The fastest models, super accurate, generous free tier, and unbeatable price (as cheap as $0.02/hour using distil-whisper-large-v3-en)

- Visit console.groq.com/keys

- Sign up → Create API key → Copy it

🙌 That's it! No credit card required for the free tier. You can start transcribing immediately.

- Open Whispering

- Click Settings (⚙️) → Transcription

- Select Groq → Paste your API key where it says Groq API Key

- Click the recording button (or press Cmd+Shift+; anywhere) and say "Testing Whispering"

🎉 Success! Your words are now in your clipboard. Paste anywhere!

Having trouble? Common issues & fixes

- No transcription? → Double-check API key in Settings

- Shortcut not working? → Bring Whispering to foreground (see macOS section below)

- Wrong provider selected? → Check Settings → Transcription

On macOS, global shortcuts can stop working while Whispering is in the background. This happens due to macOS App Nap, which suspends background apps to save battery.

Quick fixes:

- Bring Whispering to the foreground briefly to restore shortcuts

- Keep the app window in the foreground (even as a smaller window)

- Use Voice Activated mode for hands-free operation

Best practice: Keep Whispering in the foreground in front of other apps. You can resize it to a smaller window or use Voice Activated mode for minimal disruption.

Accidentally rejected microphone permissions?

If you accidentally clicked "Don't Allow" when Whispering asked for microphone access, here's how to fix it:

- Open System Settings → Privacy & Security → Privacy → Microphone

- Find Whispering in the list

- Toggle the switch to enable microphone access

- If Whispering isn't in the list, reinstall the app to trigger the permission prompt again

On Windows, if you accidentally blocked microphone permissions, use the Registry solution:

Registry Cleanup (Recommended)

- Close Whispering

- Open Registry Editor (Win+R, type regedit)

- Use Find (Ctrl+F) to search for "Whispering"

- Delete all registry folders containing "Whispering"

- Press F3 to find next, repeat until all instances are removed

- Uninstall and reinstall Whispering

- Accept permissions when prompted

Delete App Data: Navigate to %APPDATA%\..\Local\com.bradenwong.whispering and delete this folder, then reinstall.

Windows Settings: Settings → Privacy & security → Microphone → Enable "Let desktop apps access your microphone"

See Issue #526 for more details.

Take your transcription experience to the next level with these advanced features:

🎯 Custom Transcription Services

Choose from multiple transcription providers based on your needs for speed, accuracy, and privacy:

Groq

- API Key: console.groq.com/keys

- Models: distil-whisper-large-v3-en ($0.02/hr), whisper-large-v3-turbo ($0.04/hr), whisper-large-v3 ($0.06/hr)

- Why: Fastest, cheapest, generous free tier

OpenAI

- API Key: platform.openai.com/api-keys (Enable billing)

- Models: whisper-1 ($0.36/hr), gpt-4o-transcribe ($0.60/hr), gpt-4o-mini-transcribe ($0.18/hr)

- Why: Industry standard

ElevenLabs

- API Key: elevenlabs.io/app/settings/api-keys

- Models: scribe_v1, scribe_v1_experimental

- Why: High-quality voice AI

Local (Speaches)

- API Key: None needed!

- Why: Complete privacy, offline use, free forever

Transform your transcriptions automatically with custom AI workflows:

Quick Example - Format Text:

- Go to Transformations (📚) in the top bar

- Click "Create Transformation" → Name it "Format Text"

- Add a Prompt Transform step:

- Model: Claude Sonnet 3.5 (or your preferred AI)

- System prompt: `You are an intelligent text formatter specializing in cleaning up transcribed speech. Your task is to transform raw transcribed text into well-formatted, readable content while maintaining the speaker's original intent and voice.

Core Principles:

- Preserve authenticity - Keep the original wording and phrasing as much as possible

- Add clarity - Make intelligent corrections only where needed for comprehension

- Enhance readability - Apply proper formatting, punctuation, and structure

Formatting Guidelines:

Punctuation & Grammar:

- Add appropriate punctuation (periods, commas, question marks)

- Correct obvious transcription errors while preserving speaking style

- Fix run-on sentences by adding natural breaks

- Maintain conversational tone and personal speaking patterns

Structure & Organization:

- Create paragraph breaks at natural topic transitions

- Use bullet points or numbered lists when the speaker is listing items

- Add headings if the content has clear sections

- Preserve emphasis through italics or bold when the speaker stresses words

Intelligent Corrections:

- Fix homophones (e.g., "there/their/they're")

- Complete interrupted thoughts when the intention is clear

- Remove excessive filler words (um, uh) unless they add meaning

- Correct obvious misspeaks while noting significant ones in [brackets]

Special Handling:

- Technical terms: Research and correct spelling if unclear

- Names/places: Make best guess and mark uncertain ones with [?]

- Numbers: Convert spoken numbers to digits when appropriate

- Time references: Standardize format (e.g., "3 PM" not "three in the afternoon")

Preserve Original Intent:

- Keep colloquialisms and regional expressions

- Maintain the speaker's level of formality

- Don't "upgrade" simple language to sound more sophisticated

- Preserve humor, sarcasm, and emotional tone

Output Format: Return the formatted text with:

- Clear paragraph breaks

- Proper punctuation and capitalization

- Any structural elements (lists, headings) that improve clarity

- [Bracketed notes] for unclear sections or editorial decisions

- Original meaning and voice intact

Remember: You're a translator from spoken to written form, not an editor trying to improve the content. Make it readable while keeping it real.`

- User prompt: `Here is the text to format:

{{input}}`

- Save and select it in your recording settings

What can transformations do?

- Fix grammar and punctuation automatically

- Translate to other languages

- Convert casual speech to professional writing

- Create summaries or bullet points

- Remove filler words ("um", "uh")

- Chain multiple steps together

Example workflow: Speech → Transcribe → Fix Grammar → Translate to Spanish → Copy to clipboard
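A chained workflow like the one above can be pictured as a list of steps folded over the transcript. The sketch below is illustrative only: the `Step` type, `runSteps`, and the `fakeModel` stub (which stands in for a real LLM call) are hypothetical names, not Whispering's actual implementation. The only detail taken from the text is the `{{input}}` placeholder in the prompt template.

```typescript
// Hypothetical step shapes: a plain find-and-replace, or a prompt sent to a model.
type Step =
  | { kind: "find_replace"; find: string; replace: string }
  | { kind: "prompt"; template: string; run: (prompt: string) => string };

// Feed the output of each step into the next one.
function runSteps(input: string, steps: Step[]): string {
  return steps.reduce((text, step) => {
    if (step.kind === "find_replace") {
      return text.split(step.find).join(step.replace);
    }
    // Substitute the current text for every {{input}} placeholder.
    return step.run(step.template.split("{{input}}").join(text));
  }, input);
}

// Stub standing in for an LLM: drops the instruction line, capitalizes, adds a period.
const fakeModel = (prompt: string): string => {
  const text = prompt.split("\n").slice(1).join("\n").trim();
  return text.charAt(0).toUpperCase() + text.slice(1) + ".";
};

const steps: Step[] = [
  { kind: "find_replace", find: "um ", replace: "" },
  { kind: "prompt", template: "Here is the text to format:\n{{input}}", run: fakeModel },
];

const formatted = runSteps("um hello um world", steps);
```

Because every step takes a string and returns a string, steps can be reordered or chained freely.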

Setting up AI providers for transformations

You'll need additional API keys for AI transformations. Choose from these providers based on your needs:

OpenAI

- API Key: platform.openai.com/api-keys

- Models: gpt-4o, gpt-4o-mini, o3-mini and more

- Why: Most capable, best for complex text transformations

Anthropic

- API Key: console.anthropic.com/settings/keys

- Models: claude-opus-4-0, claude-sonnet-4-0, claude-3-7-sonnet-latest

- Why: Excellent writing quality, nuanced understanding

Google Gemini

- API Key: aistudio.google.com/app/apikey

- Models: gemini-2.5-pro, gemini-2.5-flash, gemini-2.5-flash-lite

- Why: Free tier available, fast response times

Groq

- API Key: console.groq.com/keys

- Models: llama-3.3-70b-versatile, llama-3.1-8b-instant, gemma2-9b-it, and more

- Why: Lightning fast inference, great for real-time transformations

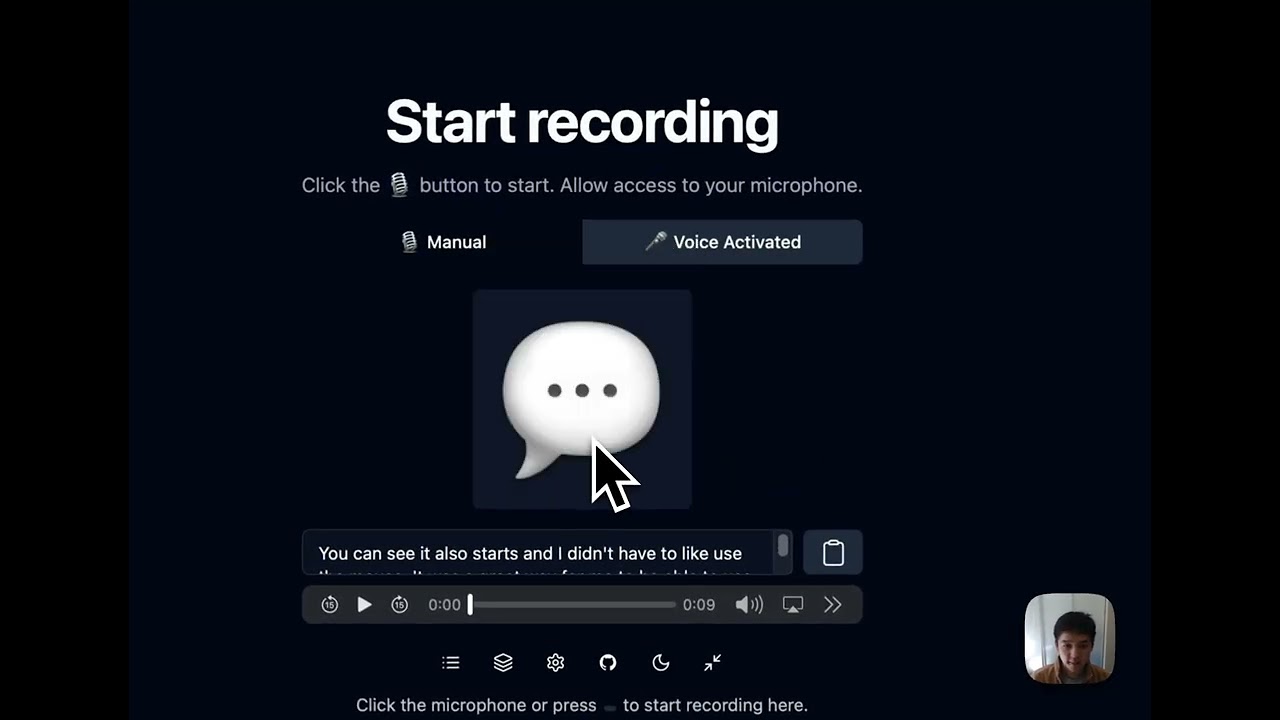

Hands-free recording that starts when you speak and stops when you're done.

Two ways to enable VAD:

Option 1: Quick toggle on homepage

- On the homepage, click the Voice Activated tab (next to Manual)

Option 2: Through settings

- Go to Settings → Recording

- Find the Recording Mode dropdown

- Select Voice Activated instead of Manual

How it works:

- Press shortcut once → VAD starts listening

- Speak → Recording begins automatically

- Stop speaking → Recording stops after a brief pause

- Your transcription appears instantly

Perfect for dictation without holding keys!
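The listen → record → stop flow can be modeled as a small state machine. This is a conceptual sketch only and does not reflect the detector Whispering actually uses: it assumes a simple loudness threshold per audio frame, and `runVad`, `threshold`, and `maxSilentFrames` are hypothetical names.

```typescript
type VadState = "listening" | "recording" | "done";

// frameLevels: one loudness value per audio frame (assumed to be in 0..1).
function runVad(
  frameLevels: number[],
  threshold: number,
  maxSilentFrames: number,
): { state: VadState; recorded: number[] } {
  let state: VadState = "listening";
  let silentFrames = 0;
  const recorded: number[] = [];

  for (const level of frameLevels) {
    if (state === "done") break;
    const isSpeech = level >= threshold;

    if (state === "listening") {
      // Speech detected: start recording automatically.
      if (isSpeech) {
        state = "recording";
        recorded.push(level);
      }
    } else {
      // Recording: keep frames and count consecutive silent ones.
      recorded.push(level);
      silentFrames = isSpeech ? 0 : silentFrames + 1;
      if (silentFrames >= maxSilentFrames) state = "done";
    }
  }
  return { state, recorded };
}

// Two quiet frames, speech, a pause of two frames, then more sound that is ignored.
const vadResult = runVad([0.01, 0.02, 0.5, 0.6, 0.02, 0.01, 0.7], 0.1, 2);
```

The `maxSilentFrames` parameter plays the role of the "brief pause": a larger value tolerates longer gaps between words before the recording stops.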

⌨️ Custom Shortcuts

Change the recording shortcut to whatever feels natural:

- Go to Settings → Recording

- Click on the shortcut field

- Press your desired key combination

- Popular choices: F1, Cmd+Space+R, Ctrl+Shift+V

I was tired of the usual SaaS problems:

- The pricing was nuts. Most transcription services charge $15-30/month for what should cost at most $2. You're paying for their profit margin.

- You have no idea what happens to your recordings. Your recordings get uploaded to someone else's servers, processed by their systems, and stored according to their privacy policy.

- Limited options. Most services use OpenAI's Whisper behind the scenes anyway, but you can't switch providers, can't use faster models, and can't go local when you need privacy.

- Things just disappear. Companies pivot, get acquired, or shut down. Then you're stuck migrating your workflows and retraining your muscle memory.

So I built Whispering the way transcription should work:

- No middleman - Your audio goes straight to the provider you choose (or stays fully local)

- Your keys, your costs - Pay OpenAI/Groq/whoever directly at actual rates: $0.02-$0.18/hour instead of $20/month

- Actually yours - Open source means no one can take it away, change the pricing, or sunset the service

With Whispering, you pay providers directly instead of marked-up subscription prices:

| Service | Cost per hour | 10 hours/month | 30 hours/month | 90 hours/month | Typical subscription |
|---|---|---|---|---|---|
| distil-whisper-large-v3-en (Groq) | $0.02 | $0.20/month | $0.60/month | $1.80/month | $15-30/month |
| whisper-large-v3-turbo (Groq) | $0.04 | $0.40/month | $1.20/month | $3.60/month | $15-30/month |
| gpt-4o-mini-transcribe (OpenAI) | $0.18 | $1.80/month | $5.40/month | $16.20/month | $15-30/month |
| Local (Speaches) | $0.00 | $0.00/month | $0.00/month | $0.00/month | $15-30/month |
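The monthly figures in the table are consistent with 10, 30, and 90 hours of transcription per month at each hourly rate. A small sketch to reproduce them, or to plug in your own usage (`monthlyCost` and `usageLevels` are illustrative names):

```typescript
// Hourly rates copied from the table (USD per hour of audio).
const hourlyRates: Array<[string, number]> = [
  ["distil-whisper-large-v3-en (Groq)", 0.02],
  ["whisper-large-v3-turbo (Groq)", 0.04],
  ["gpt-4o-mini-transcribe (OpenAI)", 0.18],
  ["Local (Speaches)", 0.0],
];

// Monthly cost is rate x hours, formatted to cents.
function monthlyCost(ratePerHour: number, hoursPerMonth: number): string {
  return `$${(ratePerHour * hoursPerMonth).toFixed(2)}/month`;
}

const usageLevels = [10, 30, 90]; // hours of audio per month

const costRows = hourlyRates.map(([service, rate]) => ({
  service,
  costs: usageLevels.map((hours) => monthlyCost(rate, hours)),
}));
```

Swap in your own values for `usageLevels` to estimate what your usage would cost with each provider.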

Whispering stores as much data as possible locally on your device, including recordings and text transcriptions. This approach ensures maximum privacy and data security. Here's an overview of how data is handled:

- Local Storage: Voice recordings and transcriptions are stored in IndexedDB, which serves both as blob storage and as the store for the rest of your data, such as text and transcriptions.

- Transcription Service: The only data sent elsewhere is your recording, and only if you choose an external transcription service. You have the following options:

- External services like OpenAI, Groq, or ElevenLabs (with your own API keys)

- A local transcription service such as Speaches, which keeps everything on-device

- Transformation Service (Optional): Whispering includes configurable transformation settings that allow you to pipe transcription output into custom transformation flows. These flows can leverage:

- External Large Language Models (LLMs) like OpenAI's GPT-4, Anthropic's Claude, Google's Gemini, or Groq's Llama models

- Hosted LLMs within your custom workflows for advanced text processing

- Simple find-and-replace operations for basic text modifications

When using AI-powered transformations, your transcribed text is sent to your chosen LLM provider using your own API key. All transformation configurations, including prompts and step sequences, are stored locally in your settings.

You can change both the transcription and transformation services in the settings to ensure maximum local functionality and privacy.

The main difference is philosophy. Whispering is 100% free and open source. You bring your own API key, so you pay cents directly to providers instead of monthly subscriptions. Your data never touches our servers—it goes straight from your device to your chosen transcription service (or stays completely local).

No catch. I built this for myself and use it daily. I believe essential tools should be free and transparent. The code is open source so you can verify everything yourself. There's no telemetry, no data collection, and no premium tiers. It's just a tool that does one thing well.

Built with Svelte 5 (using new runes) + Tauri for native performance. ~50MB download, instant startup, low memory usage. The codebase showcases modern patterns and is great for learning. For a deep dive into the architecture, see the Architecture section.

Yes! Choose the Speaches provider for completely local transcription. No internet required, no API keys, and your audio never leaves your device.

With your own API key:

- Groq: $0.02-$0.06/hour

- OpenAI: $0.18-$0.36/hour

- Local (Speaches): Free forever

Compare that to subscription services charging $15-30/month!

Yes. Whispering stores recordings locally in IndexedDB. When using external transcription services, your audio goes directly to them using your API key—there's no middleman server. For maximum privacy, use the local Speaches provider.

Absolutely! Use AI-powered transformations to automatically format, translate, or summarize your transcriptions. See AI-Powered Transformations for details.

Desktop: macOS (Intel & Apple Silicon), Windows, Linux

Web: Any modern browser at whispering.bradenwong.com

Please open an issue on GitHub or join our Discord. I actively maintain this project and love hearing from users!

Whispering showcases the power of modern web development as a comprehensive example application:

- Svelte 5: The UI reactivity library of choice with cutting-edge runes system

- SvelteKit: For routing and static site generation

- Tauri: The desktop app framework for native performance

- WellCrafted: Lightweight type-safe error handling

- Svelte Sonner: Toast notifications for errors

- TanStack Query: Powerful data synchronization

- TanStack Table: Comprehensive data tables

- IndexedDB & Dexie.js: Local data storage

- shadcn-svelte: Beautiful, accessible components

- TailwindCSS: Utility-first CSS framework

- Turborepo: Monorepo management

- Rust: Native desktop features

- Vercel: Hosting platform

- Zapsplat.com: Royalty-free sound effects

- React: UI library

- shadcn/ui: Component library

- Chrome API: Extension APIs

Note: The browser extension is temporarily disabled while we stabilize the desktop app.

- Service Layer: Platform-agnostic business logic with Result types

- Query Layer: Reactive data management with caching

- RPC Pattern: Unified API interface (rpc.recordings.getAllRecordings)

- Dependency Injection: Clean separation of concerns

Whispering uses a clean three-layer architecture that achieves extensive code sharing between the desktop app (Tauri) and web app. This is possible because of how we handle platform differences and separate business logic from UI concerns.

Quick Navigation: Service Layer | Query Layer | Error Handling

The service layer contains all business logic as pure functions with zero UI dependencies. Services don't know about reactive Svelte variables, user settings, or UI state—they only accept explicit parameters and return Result<T, E> types for consistent error handling.

The key innovation is build-time platform detection. Services automatically choose the right implementation based on the target platform:
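A minimal sketch of the idea, assuming a hypothetical clipboard service and a plain boolean standing in for whatever flag the build exposes. None of the names below are taken from the Whispering codebase, and the `Result` type is a stand-in rather than WellCrafted's own.

```typescript
// Stand-in Result type for the sketch.
type Result<T, E> = { ok: true; value: T } | { ok: false; error: E };

// Every platform implements the same interface, so callers never branch.
type ClipboardService = {
  platform: "desktop" | "web";
  writeText(text: string): Result<string, string>;
};

// Hypothetical desktop implementation (would call native APIs in practice).
function createClipboardServiceDesktop(): ClipboardService {
  return {
    platform: "desktop",
    writeText: (text) => ({ ok: true, value: `native:${text}` }),
  };
}

// Hypothetical web implementation (would call browser APIs in practice).
function createClipboardServiceWeb(): ClipboardService {
  return {
    platform: "web",
    writeText: (text) =>
      text.length > 0
        ? { ok: true, value: `browser:${text}` }
        : { ok: false, error: "Nothing to copy" },
  };
}

// Assumption: the flag is fixed when the bundle is built. Here it is a plain
// parameter so the sketch runs anywhere.
function selectClipboardService(isDesktop: boolean): ClipboardService {
  return isDesktop ? createClipboardServiceDesktop() : createClipboardServiceWeb();
}

const clipboard = selectClipboardService(false);
const copied = clipboard.writeText("hello");
```

The rest of the app depends only on `ClipboardService`, so the choice of implementation is made once and stays invisible to callers.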

This design enables extensive code sharing between desktop and web versions. The vast majority of the application logic is platform-agnostic, with only the thin service implementation layer varying between platforms. Services are incredibly testable (just pass mock parameters), reusable (work identically anywhere), and maintainable (no hidden dependencies).

→ Learn more: Services README | Constants Organization

The query layer is where reactivity gets injected on top of pure services. It wraps service functions with TanStack Query and handles two key responsibilities:

Runtime Dependency Injection - Dynamically switching service implementations based on user settings:
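As a rough illustration of runtime injection, the wrapper below reads a setting at call time and picks the matching service. The provider map, the `settings` object, and `transcribeWithSelectedProvider` are hypothetical. In the real app the setting would be reactive and the calls asynchronous.

```typescript
type ProviderId = "groq" | "openai" | "speaches";

type TranscriptionService = {
  transcribe(audio: Uint8Array): string;
};

// Hypothetical implementations; real ones would call each provider's API.
const transcriptionServices: Record<ProviderId, TranscriptionService> = {
  groq: { transcribe: (audio) => `groq transcribed ${audio.length} bytes` },
  openai: { transcribe: (audio) => `openai transcribed ${audio.length} bytes` },
  speaches: { transcribe: (audio) => `speaches transcribed ${audio.length} bytes` },
};

// Hypothetical settings object; in the app this would be a reactive store.
const settings: { selectedProvider: ProviderId } = { selectedProvider: "groq" };

// Query-layer wrapper: reads the setting on every call and injects it,
// so the services themselves never look at user settings.
function transcribeWithSelectedProvider(audio: Uint8Array): string {
  return transcriptionServices[settings.selectedProvider].transcribe(audio);
}

const sampleAudio = new Uint8Array(4);
const beforeSwitch = transcribeWithSelectedProvider(sampleAudio);
settings.selectedProvider = "speaches";
const afterSwitch = transcribeWithSelectedProvider(sampleAudio);
```

Because the lookup happens on each call, changing the setting takes effect on the next transcription without touching any service code.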

Optimistic Updates - Using the TanStack Query client to manipulate the cache for optimistic UI. By updating the cache, reactivity automatically kicks in and the UI reflects these changes, giving you instant optimistic updates.

It's often unclear where to mutate the cache with the query client: sometimes at the component level, sometimes elsewhere. A dedicated query layer settles the question by co-locating three things in one place: (1) the service call, (2) runtime settings injection based on reactive variables, and (3) cache manipulation (also reactive). This bridges reactivity with services in an intuitive way and cleans up components significantly, because all cache manipulation lives in the query folder and developers know exactly where to find and add this kind of logic.
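The co-location described above can be sketched without any library. `MiniCache` below is a tiny stand-in for a query cache and is not TanStack Query's API. `saveRecording` and `createRecording` are hypothetical names, and the real calls would be asynchronous.

```typescript
type Recording = { id: string; title: string };

// Tiny stand-in for a query cache: stores values and notifies subscribers.
class MiniCache {
  private store = new Map<string, unknown>();
  private listeners: Array<() => void> = [];

  get<T>(key: string): T | undefined {
    return this.store.get(key) as T | undefined;
  }

  set<T>(key: string, updater: (old: T | undefined) => T): void {
    this.store.set(key, updater(this.get<T>(key)));
    this.listeners.forEach((notify) => notify());
  }

  subscribe(listener: () => void): void {
    this.listeners.push(listener);
  }
}

// Hypothetical pure service: knows nothing about the cache or the UI.
function saveRecording(recording: Recording): { ok: boolean } {
  return { ok: recording.title.trim().length > 0 };
}

const cache = new MiniCache();
const RECORDINGS_KEY = "recordings";

// Query-layer function: the service call and cache manipulation live together.
function createRecording(recording: Recording): boolean {
  const previous = cache.get<Recording[]>(RECORDINGS_KEY) ?? [];
  // 1. Optimistically show the new recording right away.
  cache.set<Recording[]>(RECORDINGS_KEY, () => [...previous, recording]);
  // 2. Call the service.
  const result = saveRecording(recording);
  // 3. Roll back if the service reports a failure.
  if (!result.ok) cache.set<Recording[]>(RECORDINGS_KEY, () => previous);
  return result.ok;
}
```

Components only call `createRecording` and subscribe to the cache. They never decide when or how the cache changes.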

This design keeps all reactive state management isolated in the query layer, allowing services to remain pure and platform-agnostic while the UI gets dynamic behavior and instant updates.

→ Learn more: Query README | RPC Pattern Guide

The query layer also transforms service-specific errors into WhisperingError types that integrate seamlessly with the toast notification system. This happens inside resultMutationFn or resultQueryFn, creating a clean boundary between business logic errors and UI presentation:
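A simplified picture of that boundary, with assumed shapes: `RecorderServiceError`, `UiError`, and both functions are hypothetical, and the real `WhisperingError` may carry different fields. The sketch does not use `resultMutationFn` or `resultQueryFn`. It only shows the mapping that would happen inside them.

```typescript
type Result<T, E> = { ok: true; value: T } | { ok: false; error: E };

// Hypothetical service-level error, kept as a plain serializable object.
type RecorderServiceError = { name: "RecorderServiceError"; message: string };

// Hypothetical UI-facing shape with a title and description for a toast.
type UiError = { name: "WhisperingError"; title: string; description: string };

// Hypothetical pure service.
function startRecording(deviceId: string): Result<string, RecorderServiceError> {
  if (deviceId === "") {
    return {
      ok: false,
      error: { name: "RecorderServiceError", message: "No input device selected" },
    };
  }
  return { ok: true, value: `recording on ${deviceId}` };
}

// Query-layer boundary: service errors go in, toast-ready errors come out.
function startRecordingForUi(deviceId: string): Result<string, UiError> {
  const result = startRecording(deviceId);
  if (result.ok) return result;
  return {
    ok: false,
    error: {
      name: "WhisperingError",
      title: "Could not start recording",
      description: result.error.message,
    },
  };
}

const failure = startRecordingForUi("");
```

The service never mentions toasts, and the UI never sees a raw service error.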

Whispering uses WellCrafted, a lightweight TypeScript library I created to bring Rust-inspired error handling to JavaScript. I built WellCrafted after using the effect-ts library when it first came out in 2023—I was very excited about the concepts but found it too verbose. WellCrafted distills my takeaways from effect-ts and makes them better by leaning into more native JavaScript syntax, making it perfect for this use case. Unlike traditional try-catch blocks that hide errors, WellCrafted makes all potential failures explicit in function signatures using the Result<T, E> pattern.

Key benefits in Whispering:

- Explicit errors: Every function that can fail returns Result<T, E>, making errors impossible to ignore

- Type safety: TypeScript knows exactly what errors each function can produce

- Serialization-safe: Errors are plain objects that survive JSON serialization (critical for Tauri IPC)

- Rich context: Structured TaggedError objects include error names, messages, context, and causes

- Zero overhead: ~50 lines of code, < 2KB minified, no dependencies

This approach ensures robust error handling across the entire codebase, from service layer functions to UI components, while maintaining excellent developer experience with TypeScript's control flow analysis.
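To make the pattern concrete, here is a minimal sketch that does not import WellCrafted. The `Result` and `TaggedError` types are simplified stand-ins whose fields mirror the list above (name, message, context, cause), and `requireApiKey` is a hypothetical function.

```typescript
type Result<T, E> = { ok: true; value: T } | { ok: false; error: E };

// Stand-in for a structured tagged error.
type TaggedError<Name extends string> = {
  name: Name;
  message: string;
  context: Record<string, unknown>;
  cause: unknown;
};

type MissingApiKeyError = TaggedError<"MissingApiKeyError">;

// The signature states the failure mode; callers must check before using the value.
function requireApiKey(provider: string, key: string): Result<string, MissingApiKeyError> {
  if (key.trim() === "") {
    const error: MissingApiKeyError = {
      name: "MissingApiKeyError",
      message: `No API key configured for ${provider}`,
      context: { provider },
      cause: null,
    };
    return { ok: false, error };
  }
  return { ok: true, value: key.trim() };
}

const missing = requireApiKey("Groq", "  ");

// Plain objects survive a JSON round trip, whereas an Error instance
// would lose its message and prototype.
const roundTripped: Result<string, MissingApiKeyError> = JSON.parse(JSON.stringify(missing));
```

TypeScript narrows the union after the `ok` check, so the value cannot be read on the failure branch by accident.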

- Clone the repository: git clone https://github.com/braden-w/whispering.git

- Change into the project directory: cd whispering

- Install the necessary dependencies: pnpm i

To run the desktop app and website:

If you have concerns about the installers or want more control, you can build the executable yourself. This requires more setup, but it ensures that you are running the code you expect. Such is the beauty of open-source software!

Find the executable in apps/app/target/release

We welcome contributions! Whispering is built with care and attention to clean, maintainable code.

- Follow existing TypeScript and Svelte patterns throughout

- Use Result types from the WellCrafted library for all error handling

- Follow WellCrafted best practices: explicit errors with Result<T, E>, structured TaggedError objects, and comprehensive error context

- Study the existing patterns in these key directories:

- Services Architecture - Platform-agnostic business logic

- Query Layer Patterns - RPC pattern and reactive state

- Constants Organization - Type-safe configuration

→ New to the codebase? Start with the Architecture Deep Dive to understand how everything fits together.

Note: WellCrafted is a TypeScript utility library I created to bring Rust-inspired error handling to JavaScript. It makes errors explicit in function signatures and ensures robust error handling throughout the codebase.

- Fork the repository

- Create a feature branch: git checkout -b feature/your-feature-name

- Make your changes and commit them

- Push to your fork: git push origin your-branch-name

- Create a pull request

- UI/UX improvements and accessibility enhancements

- Performance optimizations

- New transcription or transformation service integrations

Feel free to suggest and implement any features that improve usability—I'll do my best to integrate contributions that make Whispering better for everyone.

Whispering is released under the MIT License. Use it, modify it, learn from it, and build upon it freely.

If you encounter any issues or have suggestions for improvements, please open an issue on the GitHub issues tab or contact me via [email protected]. I really appreciate your feedback!

- Community Chat: Discord

- Issues and Bug Reports: GitHub Issues

- Feature Discussions: GitHub Discussions

- Direct Contact: [email protected]

This project is supported by amazing people and organizations:

Transcription should be free, open, and accessible to everyone. Join us in making it so.

Thank you for using Whispering and happy writing!
