This work presents a modified version of the card game Monopoly Deal. It serves as a platform for ongoing independent research on systems and algorithms for sequential decision-making under imperfect information, focusing on classical and modern approaches from game theory and reinforcement learning. It includes models, training pipelines, experiment tracking, and a full web application for human-AI interaction, providing a practical testbed for studying complex games.

Play against the AI: monopolydeal.ai

The platform is designed to be modular, extensible, and interactive. It consists of the following components:

- Model Training: Training loops and experiment tracking via Weights & Biases

- Backend API: FastAPI-based service managing game state

- Frontend Web App: React/Next.js interface for human-AI gameplay with real-time state visualization

- Database: PostgreSQL for game metadata and individual game tracking

- Deployment: Docker-based deployment to Google Cloud Run

Before getting started, ensure you have the following installed:

- uv for Python package management: docs.astral.sh/uv/

- npm for Node.js package management: nodejs.org

- PostgreSQL 15+: postgresql.org

- dbmate for database management: github.com/amacneil/dbmate

- just command runner: github.com/casey/just

- Docker & Docker Compose: docs.docker.com

- direnv for environment variable management: direnv.net

For development with hot reloads (and non-trivial setup):

Access the application:

- Frontend: http://localhost:3000

- Backend API docs: http://localhost:8000/docs

For development without hot reloads but with easier setup:

Access the application:

- Frontend: http://localhost:3000

- Backend API docs: http://localhost:8000/docs

- models/cfr/ - Counterfactual regret minimization implementation and training tools

- app/ - Backend API service and database models

- frontend/ - React web application

- game/ - Core game logic and state management

- db/ - Database schema and migrations

The web application uses a standard microservices architecture with a FastAPI backend, a React frontend, and a PostgreSQL database:

- FastAPI Backend: RESTful API handling game state and model inference

- React/Next.js Frontend: Type-safe web interface for human-AI interaction

- PostgreSQL Database: Stores game metadata and individual game results

- Docker Containerization: Multi-stage builds with single container per service

- Google Cloud Run: Deployment and liveness probes

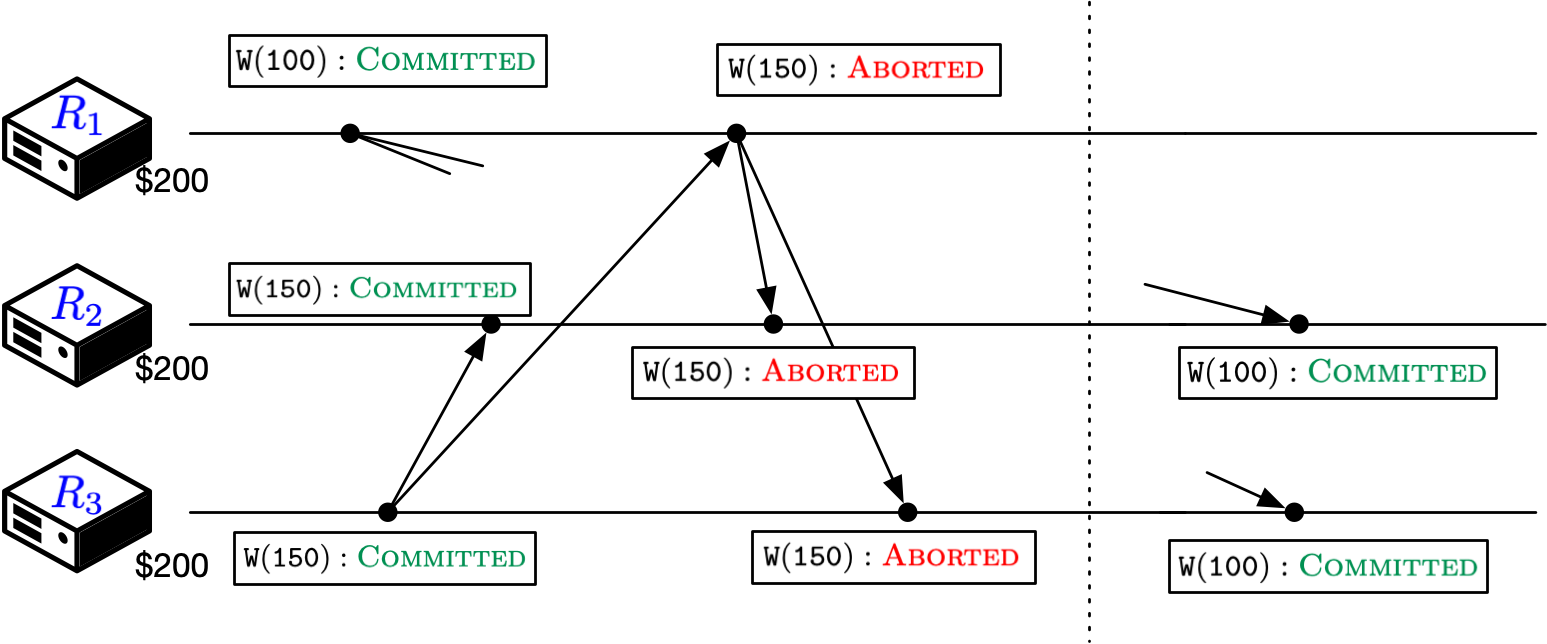

- Fault Tolerance: Reconstruct game state from database logs when cache fails
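The fault-tolerance idea above can be illustrated with a minimal sketch: on a cache miss, the backend replays the logged actions for a game to rebuild its state. Everything here is hypothetical and simplified for illustration (the `GameState` class, the action dictionary shape, and the in-memory `cache` and `action_log` stand-ins for the real cache and PostgreSQL tables); the project's actual models and queries may look quite different.

```python
from dataclasses import dataclass, field


@dataclass
class GameState:
    """Hypothetical, heavily simplified game state (not the project's real model)."""

    turn: int = 0
    hands: dict[str, list[str]] = field(default_factory=dict)

    def apply(self, action: dict) -> None:
        """Apply one logged action in place."""
        player, card = action["player"], action["card"]
        if action["type"] == "draw":
            self.hands.setdefault(player, []).append(card)
        elif action["type"] == "play":
            self.hands[player].remove(card)
        self.turn += 1


def replay(actions: list[dict]) -> GameState:
    """Rebuild a state by applying every logged action in order."""
    state = GameState()
    for action in actions:
        state.apply(action)
    return state


def get_state(
    game_id: str,
    cache: dict[str, GameState],
    action_log: dict[str, list[dict]],
) -> GameState:
    """Return the cached state, or reconstruct it from the log on a cache miss."""
    state = cache.get(game_id)
    if state is None:
        # Assumption: the database stores an ordered action log per game.
        state = replay(action_log[game_id])
        cache[game_id] = state
    return state
```

Replaying an ordered action log keeps the cache disposable: losing it costs recomputation time, not game data.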

The CFR learning pipeline combines parallelized CFR training, experiment tracking, and checkpoint management:

- Ray: Multi-core parallelization with shared memory for CFR self-play rollouts

- Weights & Biases: Experiment tracking with metrics logging

- Kubernetes Jobs: Training infrastructure on GKE with configurable CPU/memory resources

- Checkpoint Management: Model checkpointing to GCS

- Evaluation: Model evaluation against different opponents (random, risk-aware)

- State Abstraction: Configurable abstractions to reduce game tree complexity

- Reproducibility: Git commit tracking, random seeds, and deterministic training
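For readers new to CFR, the core update that self-play rollouts repeat at each decision point is regret matching. The sketch below is a generic textbook version, not the code in `models/cfr/`; the function names are illustrative, and it omits the reach-probability weighting that a full CFR implementation applies to regret updates.

```python
def regret_matching(cumulative_regrets: list[float]) -> list[float]:
    """Turn cumulative regrets into a strategy (probabilities over actions)."""
    positive = [max(r, 0.0) for r in cumulative_regrets]
    total = sum(positive)
    if total > 0:
        # Play each action in proportion to its positive regret.
        return [p / total for p in positive]
    # No action has positive regret: fall back to the uniform strategy.
    n = len(cumulative_regrets)
    return [1.0 / n] * n


def update_regrets(
    cumulative_regrets: list[float],
    action_values: list[float],
    strategy: list[float],
) -> list[float]:
    """Add each action's regret: its value minus the strategy's expected value.

    Simplification: full CFR weights this term by the opponents' reach
    probability; that factor is left out here for brevity.
    """
    node_value = sum(p * v for p, v in zip(strategy, action_values))
    return [r + (v - node_value) for r, v in zip(cumulative_regrets, action_values)]
```

In CFR it is the average of these per-iteration strategies, not the final one, that converges toward equilibrium in two-player zero-sum games.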

- Designing a System for Counterfactual Regret Minimization in Monopoly Deal (in progress)

This project is licensed under the Apache License 2.0. See LICENSE for details.

This project implements a modified version of Monopoly Deal for research purposes. "Monopoly Deal" is a trademark of Hasbro. This is not an official product.

If you use this work in your research, please cite:
