Introduction

Artificial intelligence (AI) draws its foundational concepts from the brain. Within the brain, intricate neurobiological circuits process vast amounts of incoming spike signals in parallel, generating synaptic currents that trigger output spikes in analog parallel mode1,2,3,4,5. Concurrently, the synaptic weights (conductances) can be dynamically modified by the processed signals through learning mechanisms such as spike-timing-dependent plasticity (STDP)3,5. Memories in the human brain can be continuously modified over time, enabling humans to navigate changes and respond effectively to novel situations6,7. In stark contrast, computers operating on the Turing model excel at executing pre-programmed inference algorithms, whether human-designed or derived from machine learning8,9,10,11,12,13,14,15,16,17,18. While AI systems, such as self-driving cars14 and large language models16, can surpass human performance in specific, well-defined domains, their inability to learn during inference renders them vulnerable to environmental changes, hardware errors, or task modifications. Although the human brain can also operate on fixed, pre-learned algorithms (Turing mode)19,20, its unique ability to infer and learn concurrently, termed super-Turing computing19,20, distinguishes it from computers. For instance, computers can derive algorithms to optimize wing shapes through off-site machine learning, but they cannot continuously adapt wing shapes in flight, as a bird does, in complex and rapidly changing aerodynamic environments21,22,23,24. Similarly, human intervention becomes necessary when self-driving cars encounter unforeseen scenarios14,25. Computer-based AI systems currently require extensive data and energy-intensive off-site learning to broaden their operational domains, in contrast to the brain’s efficient and continuous adaptation. 
Consequently, AI inference algorithms trained on limited datasets often struggle with the unbounded complexity and unpredictable dynamics of the real world. Because of this “Turing constraint”, computers must expand their learning domains to cover various conditions using “big data” and “deep-learning” technology, resulting in longer learning latency and higher energy consumption than the human brain8,9,10,11,12,13,14,15,16. As a result, the computationally demanding learning processes typically take place on large, energy-intensive remote computers to derive inference algorithms, which are subsequently deployed on power-constrained edge computers8,9,10,11,12,13,16,25,26,27,28.

The super-Turing computing model has been postulated theoretically19,20, but it has not been established in terms of the concurrent inference and learning functionalities of neurobiological circuits. Neuromorphic computing circuits, built with digital transistors10,11,12,13,25,26,28 or analog devices such as floating-gate transistors29,30, memristors31,32,33,34,35, and phase-change memory resistors36, aim to mimic biological neural networks for energy-efficient in-memory computing and in-situ learning. While learning algorithms such as STDP have been successfully implemented in both digital and analog circuits, the resulting inference algorithms can only be executed after the learning phase is complete10,11,12,13,25,26,28,29,30,31,32,33,34,35,36. Consequently, these neuromorphic circuits are constrained to sequential learning and inference, which prevents them from achieving super-Turing computing with simultaneous inference and learning10,11,12,13,25,26,28,29,30,31,32,33,34,35,36. The challenge remains: how can we design an electronic circuit capable of super-Turing computing, one that performs concurrent learning and inference, achieves high energy efficiency, learns rapidly, and adapts to dynamic environments?

In this article, we present a synaptic resistor (synstor)37,38,39,40,41 circuit capable of operating in super-Turing mode, with concurrent inference and learning functionalities, to control a morphing wing in a wind tunnel, a complex and dynamic setting distinct from conventional AI test environments. We first introduce a super-Turing computing model based on a synstor circuit. Next, we describe a fabricated synstor circuit designed to mimic synapses by integrating inference, memory, and learning capabilities within each synstor, enabling concurrent inference and learning in analog parallel mode. By circumventing the sequential inference-learning and iterative learning-testing processes required in circuits built from other neuromorphic devices, the synstor circuit accelerates learning, improves adaptability to dynamically changing environments, spontaneously corrects device conductance errors, and reduces its operational conductance and power consumption. We then conducted experiments using the synstor circuit, humans, and a computer-based artificial neural network (ANN) to control a morphing wing. The objective was to minimize the drag-to-lift force ratio, reduce its fluctuation, and recover the wing from stall conditions by optimizing its shape in complex aerodynamic environments within a wind tunnel. Our results demonstrate that the synstor circuit and humans, operating in super-Turing mode, outperform the ANN, operating in Turing mode, in learning speed, performance, power consumption, and adaptability to changing environments.

Synstor circuit for intelligent systems

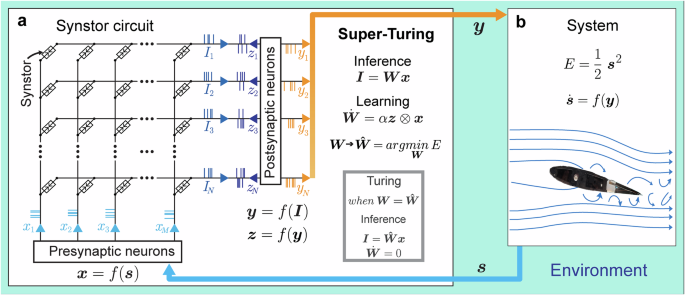

To emulate a neurobiological network, a circuit with \(M\) input and \(N\) output electrodes linked through \(M\times N\) synstors is illustrated in Fig. 1a. When voltage pulses (\({{\bf{x}}}\)) are applied to the input electrodes, they generate currents (\({{\bf{I}}}\)) through the synstors at the output electrodes, implementing an inference algorithm:

$${{\bf{I}}}={{\bf{W}}}\,{{\bf{x}}} \tag{1}$$

where \({{\bf{W}}}\) represents the synstor conductance matrix. The excitatory or inhibitory currents (\({{\bf{I}}}\)) stimulate or suppress voltage pulses (\({{\bf{y}}}\)) from neuron and interface circuits, leading to changes in the system state (\({{\bf{s}}}\)), such as the configuration of a morphing wing (Fig. 1b). Sensors monitor the system state \({{\bf{s}}}\) (e.g., the drag-to-lift force ratio), and this feedback is converted back into input signals (\({{\bf{x}}}\)) by the interface and neuron circuits. The control objective is to minimize the objective function \(E=\frac{1}{2}{{{\bf{s}}}}^{2}\).
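The inference step in Eq. 1 is a matrix-vector product that the crossbar evaluates physically: each output electrode sums the currents flowing through the synstors attached to it, so \(I_n=\sum_m w_{nm}x_m\). The Python sketch below reproduces only that arithmetic. It assumes each synstor behaves as an ideal linear conductance and each input pulse as a constant voltage, which ignores the Schottky-contact physics described later. The function name `crossbar_currents` is hypothetical.

```python
from typing import List, Sequence


def crossbar_currents(W: Sequence[Sequence[float]], x: Sequence[float]) -> List[float]:
    """Return I = W x for an N x M conductance matrix W and M input voltages x.

    Idealization (assumption): every synstor is a linear conductance, so the current
    on output electrode n is the sum of w_nm * x_m over all input electrodes m.
    """
    if any(len(row) != len(x) for row in W):
        raise ValueError("each row of W needs one entry per input electrode")
    return [sum(w_nm * x_m for w_nm, x_m in zip(row, x)) for row in W]


# 2 x 2 example: conductances in nS and pulse amplitudes in V give currents in nA.
W = [[10.0, 2.0],
     [5.0, 20.0]]
x = [1.0, 0.5]
print(crossbar_currents(W, x))  # [11.0, 15.0]
```

Because the summation happens in the wiring, all N outputs are produced in one step rather than N×M sequential multiply-accumulates. That parallelism is what the sketch's list comprehension stands in for.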

a A crossbar circuit is depicted, featuring synstors connecting presynaptic and postsynaptic neuron circuits. The input voltage pulses applied to the input electrodes are represented by the vector \({{\bf{x}}}\). The output voltage pulses from the postsynaptic neuron circuit form the vector \({{\bf{y}}}\), while the voltage pulses applied to the output electrodes form the vector \({{\bf{z}}}\). The resulting currents at the output electrodes form the vector \({{\bf{I}}}\), whose element \({I}_{n}\) is the current at the \({n}^{{th}}\) output electrode; \({{\bf{I}}}\) induces \({{\bf{y}}}\) and \({{\bf{z}}}\) from the postsynaptic neuron circuit. b System states (\({{\bf{s}}}\)) are detected by sensors and converted into input voltage pulses (\({{\bf{x}}}\)) through the presynaptic neuron circuit. These input pulses drive the synstor circuit, which operates in super-Turing mode, concurrently executing an inference algorithm (\({{\bf{I}}}={{\bf{W}}}\,{{\bf{x}}}\)) and modifying its conductance matrix (\({{\bf{W}}}\)) according to a learning rule (\(\dot{{{\bf{W}}}}=\alpha \,{{\bf{z}}}\otimes {{\bf{x}}}\)). The output (\({{\bf{y}}}\)) of the synstor circuit controls actuators that modify the system states (\({{\bf{s}}}\)). The goal is to minimize an objective function (\(E=\frac{1}{2}{{{\bf{s}}}}^{2}\)). When \(E\) reaches its minimum, \({{\bf{W}}}=\hat{{{\bf{W}}}}\) and \(\dot{{{\bf{W}}}}=0\); under this condition, the circuit executes only the inference algorithm \({{\bf{I}}}=\hat{{{\bf{W}}}}{{\bf{x}}}\) in Turing mode.

In contrast to Turing-mode computing circuits, in which inference algorithms (typically represented by \({{\bf{W}}}\)) remain static during inference, the synaptic weights (\({{\bf{W}}}\)) in our synstor circuit, as in biological neural networks, can adapt dynamically through a concurrent correlative learning rule4:

$${\dot{{\bf{W}}}}= \alpha \,{{\bf{z}}}\,\otimes \,{{\bf{x}}} \tag{2}$$

where \(\alpha \) denotes a learning coefficient, \({{\bf{z}}}\) denotes voltage pulses triggered at the output electrodes of the circuit, and \({{\bf{z}}}\,\otimes \,{{\bf{x}}}\) represents the outer product of \({{\bf{z}}}\) and \({{\bf{x}}}\) (i.e., \(\frac{d{w}_{nm}}{dt}=\alpha \,{z}_{n}{x}_{m}\)). The STDP learning rule3,5 is also a correlative learning rule4 and can be represented by Eq. 2 with a temporal mean \(\overline{{{\bf{z}}}}=0\) (Methods, Eq. 4) and a covariance between \({z}_{n}\) and \({y}_{{n}^{\prime}}\) of \(\langle {z}_{n},{y}_{{n}^{\prime}}\rangle ={\eta }_{n}\,{\delta }_{n{n}^{\prime}}\) with \({\eta }_{n}\le 0\) (Methods, Eq. 5). Under these conditions, the concurrent execution of inference (\({{\bf{I}}}={{\bf{W}}}\,{{\bf{x}}}\), Eq. 1) and learning (\(\dot{{{\bf{W}}}}=\alpha \,{{\bf{z}}}\otimes {{\bf{x}}}\), Eq. 2) in a synstor circuit ensures that the time-averaged objective function \(\overline{E}\) does not increase (Methods):
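Element by element, Eq. 2 changes each conductance in proportion to the product of the pulse on its output electrode and the pulse on its input electrode. The sketch below applies one forward-Euler step of \(\dot{w}_{nm}=\alpha z_n x_m\) over a hypothetical time step `dt`. It treats \(\alpha\) as a single constant, whereas in the fabricated device the sign of the change follows the pulse polarity. Clipping to the 1–60 nS window reported for the synstors is an added assumption. The example reproduces the property the hardware exploits: a row whose output electrode is grounded (\(z_n=0\)) keeps its conductances, so inference on that row continues without learning.

```python
from typing import List, Sequence

G_MIN_NS, G_MAX_NS = 1.0, 60.0  # assumed clipping window (nS), from the reported tuning range


def correlative_update(W: Sequence[Sequence[float]], z: Sequence[float],
                       x: Sequence[float], alpha: float, dt: float) -> List[List[float]]:
    """One Euler step of dW/dt = alpha * outer(z, x), clipped to [G_MIN_NS, G_MAX_NS]."""
    new_W = []
    for w_row, z_n in zip(W, z):
        new_row = []
        for w_nm, x_m in zip(w_row, x):
            w = w_nm + alpha * z_n * x_m * dt   # dw_nm = alpha * z_n * x_m * dt
            new_row.append(min(max(w, G_MIN_NS), G_MAX_NS))
        new_W.append(new_row)
    return new_W


W = [[10.0, 2.0], [5.0, 20.0]]
x = [1.0, 0.0]   # pulse on input electrode 1 only
z = [1.0, 0.0]   # pulse on output electrode 1; output electrode 2 grounded
print(correlative_update(W, z, x, alpha=2.0, dt=0.5))  # [[11.0, 2.0], [5.0, 20.0]]
```

Only \(w_{11}\) changes, because it is the only synstor that sees pulses on both of its electrodes.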

$$\frac{d\overline{E}}{dt}\le 0 \tag{3}$$

Under this condition, \(\overline{E}\) functions as a Lyapunov function for \({{\bf{W}}}\). When \(\overline{E}\) reaches its minimum value, \(\frac{d\overline{E}}{dt}=0\), and \({{\bf{W}}}=\hat{{{\bf{W}}}}=\arg {\min }_{{{\bf{W}}}}E\) remains unchanged (\(\dot{{{\bf{W}}}}=0\)); the circuit then operates in Turing mode, executing the inference algorithm \({{\bf{I}}}=\hat{{{\bf{W}}}}{{\bf{x}}}\) (Fig. 1). When \(\frac{d\overline{E}}{dt} < 0\) and \(\dot{{{\bf{W}}}}\ne 0\), the circuit operates in super-Turing mode, simultaneously performing inference and learning to adjust \({{\bf{W}}}\) toward \(\hat{{{\bf{W}}}}\) and reduce \(E\). A synstor circuit can thus operate as a conventional computing circuit in Turing mode, executing a fixed optimal inference algorithm. When the inference algorithm deviates from its optimal form because of environmental changes, task modifications, conductance errors, or other factors, the synstor circuit can spontaneously switch to super-Turing mode, simultaneously executing and optimizing the inference algorithm through learning to reduce \(E\).
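The mode switching can be illustrated with a deliberately simple toy plant. This is not the wing experiment. It uses a single weight \(w\), a constant input \(x\), and a state \(s=b-wx\) that should be driven to zero, with \(E=\frac{1}{2}s^2\). In place of the pulse statistics of Eqs. 4 and 5, the sketch sets \(z=s\). That choice turns Eq. 2 into the delta rule \(\dot w=\alpha s x=-\alpha\,\partial E/\partial w\), so for \(\alpha>0\) it gives \(dE/dt=-\alpha s^2x^2\le 0\), mirroring Eq. 3. The target \(b\), the gains, the Euler step, and the tolerance used to label a step "Turing" are all hypothetical. A jump in \(b\) halfway through stands in for an environmental change.

```python
from typing import List, Sequence, Tuple


def simulate(b_schedule: Sequence[float], x: float = 1.0, w0: float = 0.0,
             alpha: float = 2.0, dt: float = 0.01,
             tol: float = 1e-6) -> List[Tuple[float, str]]:
    """Euler-integrate dw/dt = alpha * z * x with the toy choice z = s = b - w * x.

    Returns (E, mode) for every step: "super-Turing" while the weight is still
    changing (|dw/dt| >= tol), "Turing" once learning has effectively stopped.
    """
    w = w0
    trace = []
    for b in b_schedule:
        s = b - w * x            # inference: plant state produced by the current weight
        E = 0.5 * s * s          # objective function E = s^2 / 2
        w_dot = alpha * s * x    # learning: Eq. 2 with z = s (delta-rule stand-in)
        mode = "Turing" if abs(w_dot) < tol else "super-Turing"
        trace.append((E, mode))
        w += w_dot * dt          # learning acts while inference keeps running
    return trace


steps = 1500
b_schedule = [1.0] * steps + [3.0] * steps   # hypothetical environmental change halfway
trace = simulate(b_schedule)
energies = [E for E, _ in trace]
print(trace[steps - 1][1], trace[steps][1], trace[-1][1])  # Turing super-Turing Turing
```

Within each half, \(E\) decreases monotonically and learning dies out. The jump in \(b\) raises \(E\), and the nonzero gradient then restarts learning without any external trigger.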

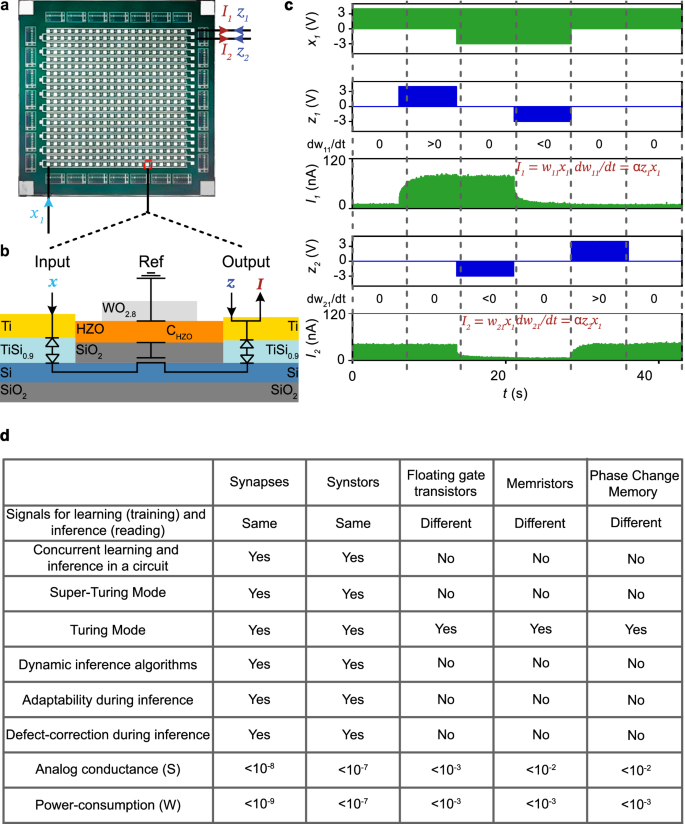

A synstor circuit (Fig. 2a) was fabricated following the methods detailed and illustrated in Fig. S1. Each synstor comprises a Si channel with Schottky contacts formed by Ti input and output electrodes via a metallic TiSi0.9 layer42. Each synstor also incorporates a vertical heterojunction stacked on the Si channel, consisting of a SiO2 dielectric, a ferroelectric Hf0.5Zr0.5O2 layer, and a WO2.8 conductive reference electrode (Fig. 2b). During all electrical tests of this circuit (Methods), the reference electrodes were grounded. The electrical tests demonstrated the circuit's unique ability to execute both the inference (\({I}_{n}={\sum}_{m}{w}_{nm}{x}_{m}\), Eq. 1) and learning (\({\dot{w}}_{nm}=\alpha {z}_{n}{x}_{m}\), Eq. 2) algorithms concurrently in super-Turing mode using the same type of signals. As shown in Fig. 2c, when voltage pulses (\({x}_{m}\)) are applied to the \({m}^{{th}}\) input electrode, they induce currents that flow through the synstors to grounded output electrodes (\({z}_{n}=0\)), while the conductance of these synstors remains unchanged; that is, they simultaneously execute the inference (\({I}_{n}={\sum}_{m}{w}_{nm}{x}_{m}\)) and learning (\({\dot{w}}_{nm}=\alpha {z}_{n}{x}_{m}=0\)) algorithms. Concurrently, the conductance of other synstors experiencing positive or negative voltage pulses of the same amplitude on both electrodes (i.e., \({x}_{m}={z}_{n} > 0\) or \({x}_{m}={z}_{n} < 0\)) is modified according to the learning algorithm \({\dot{w}}_{nm}=\alpha {z}_{n}{x}_{m}\) with \(\alpha > 0\) or \(\alpha < 0\), respectively, so that positive pulse pairs increase the conductance and negative pulse pairs decrease it (Fig. 2c). As shown in experiments (Fig. S2a), device modeling (Methods), and an equivalent circuit for a synstor (Fig. 2b), when a single voltage pulse (\({x}_{m}\)) is applied to the input electrode with respect to the grounded output electrode (\({z}_{n}=0\)), the voltage primarily drops across the Schottky contact between the input electrode and the Si channel42. As a result, the Hf0.5Zr0.5O2 layer beyond the Schottky contact experiences a small electric field that is insufficient to alter the ferroelectric domains or the synstor conductance. However, when voltage pulses (\({x}_{m}={z}_{n}\)) are simultaneously applied to the input and output electrodes of a synstor, there is no voltage drop across the Schottky contacts or the Si channel. Instead, the voltage primarily drops across the Hf0.5Zr0.5O2 layer, generating a large electric field that progressively switches individual ferroelectric domains within the layer43. The switched domains attract or repel holes in the p-type Si channel, increasing or decreasing the synstor conductance in analog mode. Oxygen vacancies in the Hf0.5Zr0.5O2 layer, which have higher defect energy there, tend to diffuse toward the WO2.8 reference electrode, where their defect energy is lower; this effectively enhances the quality of the Hf0.5Zr0.5O2 ferroelectric layer and improves device performance. Initially, the fabricated devices exhibited conductance variability, with an average conductance of \(2.7\,{{\rm{nS}}}\) and a standard deviation of \(2.1\,{{\rm{nS}}}\). This variation was reduced to a standard deviation of \(0.015\,{{\rm{nS}}}\) (Fig. S2) after the devices were tuned to a target conductance value using a train of paired \({x}_{m}={z}_{n}\) pulses with a duration of \(10\,\mu {{\rm{s}}}\) and an amplitude of \(-4\,{{\rm{V}}}\) (or \(4\,{{\rm{V}}}\)). The synstor conductance can be precisely modified across 1000 analog conductance levels within a range of \(1\)–\(60\,{{\rm{nS}}}\) (Fig. S2b), with a tuning accuracy of ~0.1 nS (Figs. S2c, d), an endurance of \(1.6\times {10}^{11}\) tuning cycles, and a projected nonvolatile conductance retention of over a year (Fig. S2e). 
During learning processes for various applications, we applied voltage pulses in the same manner to ensure precise tuning of device conductance and accurate execution of algorithms. The materials characterization and device properties of Hf0.5Zr0.5O2 synstors will be detailed in a separate report. Compared with other neuromorphic circuits composed of analog devices such as floating-gate transistors29,30, memristors31,32,33,34,35, and phase-change memory resistors36, the synstor circuit has the unique capability to execute inference and learning algorithms concurrently in analog parallel mode using pulse signals (\({{\bf{x}}}\) and \({{\bf{z}}}\)) of the same amplitude (Fig. 2d). This enables the synstor circuit to operate in both super-Turing and Turing modes with very low conductance and power consumption. It can dynamically modify the inference algorithm while executing it, adapt to dynamically changing environments, and correct conductance errors in the circuit. In the nonvolatile conductance retention test (Fig. S2e), the synstors were tuned to distinct analog conductance levels, and their conductance was monitored over \({10}^{6}\,{{\rm{s}}}\) at room temperature. Although the projected conductance levels remained distinct without overlap for a year, gradual conductance shifts were observed over time. In Turing mode, where the synstor circuit operates without real-time learning, these conductance shifts can lead to computational errors. In contrast, in super-Turing mode, where real-time learning is enabled, the circuit can dynamically adjust its conductance to adapt to changing environments and correct errors. This makes synstor circuits better suited for super-Turing computing than for traditional Turing computing.
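The write behavior demonstrated in Fig. 2c can be summarized as a small rule. A pulse on only one electrode (\(z_n=0\)) leaves the conductance unchanged. Equal-amplitude pulse pairs raise the conductance when positive and lower it when negative. The sketch below encodes that rule and uses it in a write-and-verify loop that tunes one synstor toward a target conductance, in the spirit of the pulse-train tuning described above. Only the 1–60 nS range, the ±4 V amplitude, and the 2.7 nS starting mean come from the text. The fixed step per pulse pair (`step_ns`), the tolerance, the pulse budget, and the treatment of unequal pulse pairs are hypothetical choices for illustration, not measured device behavior.

```python
from typing import Tuple

G_MIN_NS, G_MAX_NS = 1.0, 60.0   # analog tuning range reported for the synstors (nS)


def conductance_change(x_v: float, z_v: float, step_ns: float) -> float:
    """Idealized pulse rule read off Fig. 2c (the step size is hypothetical).

    A pulse on only one electrode (x or z equal to 0) leaves w unchanged; an
    equal-amplitude pair raises w if positive and lowers it if negative.
    Unequal pairs are not characterized in the text and are treated as no-ops here.
    """
    if x_v == 0.0 or z_v == 0.0 or x_v != z_v:
        return 0.0
    return step_ns if x_v > 0 else -step_ns


def tune_to_target(g_ns: float, target_ns: float, amplitude_v: float = 4.0,
                   step_ns: float = 0.05, tol_ns: float = 0.1,
                   max_pulses: int = 10_000) -> Tuple[float, int]:
    """Hypothetical write-and-verify loop: read g, apply one +/- pulse pair, repeat."""
    if not G_MIN_NS <= target_ns <= G_MAX_NS:
        raise ValueError("target outside the 1-60 nS tuning range")
    pulses = 0
    while abs(g_ns - target_ns) > tol_ns and pulses < max_pulses:
        v = amplitude_v if g_ns < target_ns else -amplitude_v   # x_m = z_n = v
        g_ns += conductance_change(v, v, step_ns)
        g_ns = min(max(g_ns, G_MIN_NS), G_MAX_NS)
        pulses += 1
    return g_ns, pulses


g, n = tune_to_target(2.7, 30.0)   # start from the reported as-fabricated mean
print(f"{g:.2f} nS after {n} pulse pairs")
```

Keeping the step smaller than twice the tolerance ensures the loop cannot oscillate around the target without ever settling inside the window.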

a An optical image shows a 20\(\times \)20 synstor crossbar, composed of 20 rows of Ti input electrodes and 20 columns of Ti output electrodes. b A schematic illustration of a synstor composed of a vertical heterojunction of a Si channel, a SiO2 dielectric layer, a ferroelectric Hf0.5Zr0.5O2 layer, and a WO2.8 conductive reference (Ref) electrode. The Si channel connects with a TiSi0.9 layer and Ti input and output electrodes. An equivalent circuit for the synstor is also shown. It features diodes representing the Schottky contacts between the Si channel and the Ti input/output electrodes via the TiSi0.9 layer, a transistor formed by the Si channel and SiO2 dielectric layer, and a capacitor (\({C}_{{\rm{HZO}}}\)) representing the Hf0.5Zr0.5O2 ferroelectric layer beneath the WO2.8 reference electrode. c Voltage pulses applied on the first input electrode (\({x}_{1}\)) and on the first (\({z}_{1}\)) and second (\({z}_{2}\)) output electrodes, and currents flowing on the first (\({I}_{1}\)) and second (\({I}_{2}\)) output electrodes, are shown versus time. The inference (\({I}_{1}={w}_{11}{x}_{1}\) and \({I}_{2}={w}_{21}{x}_{1}\)) and learning algorithms (\(d{w}_{11}/dt=\alpha {z}_{1}{x}_{1}\) and \(d{w}_{21}/dt=\alpha {z}_{2}{x}_{1}\)) are executed concurrently in the circuit in parallel analog mode under various conditions: (1) \({x}_{1}=4.2\,{{\rm{V}}}\), \({z}_{1}={z}_{2}=0\), with \(d{w}_{11}/dt=d{w}_{21}/dt=0\); (2) \({x}_{1}=4.2\,{{\rm{V}}}\), \({z}_{1}=4.2\,{{\rm{V}}}\), \({z}_{2}=0\), with \(d{w}_{11}/dt > 0\) and \(d{w}_{21}/dt=0\); (3) \({x}_{1}=-2.1\,{{\rm{V}}}\), \({z}_{1}=0\), \({z}_{2}=-2.1\,{{\rm{V}}}\), with \(d{w}_{11}/dt=0\) and \(d{w}_{21}/dt < 0\); (4) \({x}_{1}=-2.1\,{{\rm{V}}}\), \({z}_{1}=-2.1\,{{\rm{V}}}\), \({z}_{2}=0\), with \(d{w}_{11}/dt < 0\) and \(d{w}_{21}/dt=0\); and (5) \({x}_{1}=4.2\,{{\rm{V}}}\), \({z}_{1}=0\), \({z}_{2}=4.2\,{{\rm{V}}}\), with \(d{w}_{11}/dt=0\) and \(d{w}_{21}/dt > 0\). 
d Comparative analysis of biological synapses3, and analog neuromorphic devices including synstors (this work), floating-gate transistors29,30, memristors31,32,33,34,35, and phase-change memory resistors36.

Morphing wing controlled by a synstor circuit

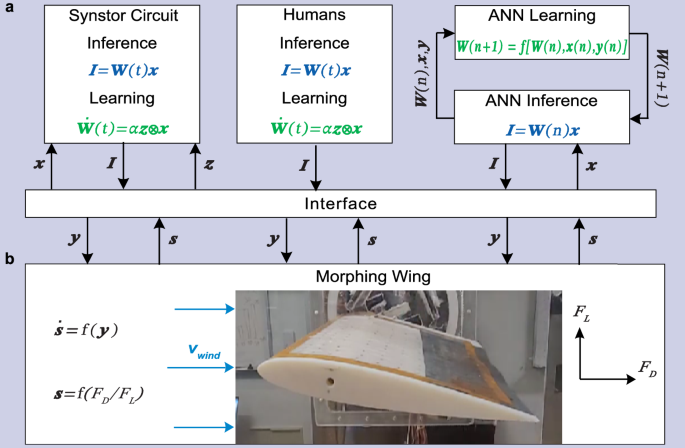

A morphing wing in a wind tunnel, as described in previous studies44,45, was controlled by the synstor circuit (Fig. 3, Methods). The lift force (\({F}_{L}\)) and drag force (\({F}_{D}\)) on the wing were detected by strain gauges, with the data processed by a computer (Fig. S3a). The sensing signals (\({{\bf{s}}}\)) were the drag-to-lift force ratio (\({s}_{1}={F}_{D}/{F}_{L}\)) and the magnitude of its fluctuation (\({s}_{2}\)). They were transformed into voltage pulses (\({x}_{1}\) and \({x}_{2}\)) and fed into the synstor circuit. The firing rate of the \({{\bf{x}}}\) pulses increased monotonically with the amplitude of \({{\bf{s}}}\). A \(2\times 2\) synstor circuit, composed of two input electrodes, two output electrodes, and four synstors, processed the input voltage pulses (\({x}_{1}\) and \({x}_{2}\)) applied to the input electrodes. These pulses generated currents (\({I}_{1}\) and \({I}_{2}\)) through the synstors at the output electrodes, implementing the inference algorithm (\({{\bf{I}}}={{\bf{W}}}\,{{\bf{x}}}\), Eq. 1). The currents (\({I}_{1}\) and \({I}_{2}\)) triggered voltage pulses (\({y}_{1}\) or \({y}_{2}\)) through neuron circuits to increase or decrease the actuation voltage (\({V}_{a}\)) applied on macro fiber composite (MFC) piezoelectric actuators44,45. This adjusted the shape and states (\({s}_{1}\) and \({s}_{2}\)) of the wing (Methods, Figs. S4, S5a, S6a). The experiments were conducted in two settings. The first was at a low angle of attack (\({8}^{\circ }\)) with the wing in a pre-stall condition (Fig. S5a). The second was at a high angle of attack (\({18}^{\circ }\)), exceeding the stall angle of the wing and thus introducing a chaotic aerodynamic environment at the stall condition (Fig. S6a). The conductance matrix (\({{\bf{W}}}\)) of the synstor circuit was initialized to random values before each experiment to ensure that the circuit had no prior learning experience or predefined algorithm. When \({{\bf{y}}}\) pulses were triggered, \({{\bf{z}}}\) pulses were also triggered at the output electrodes of the synstor circuit. These \({{\bf{z}}}\) pulses satisfied the conditions \(\overline{{{\bf{z}}}}=0\) (Eq. 4) and \(\overline{{z}_{n}{y}_{{n}^{\prime}}}={\eta }_{n}\,{\delta }_{n{n}^{\prime}}\) (Eq. 5) with \({\eta }_{n}\le 0\), and they modified \({{\bf{W}}}\) according to the correlative learning rule \(\dot{{{\bf{W}}}}=\alpha \,{{\bf{z}}}\otimes {{\bf{x}}}\) (Eq. 2) in super-Turing mode. The experiments had two objectives. The first was to minimize the objective function \(E=\frac{1}{2}{{{\bf{s}}}}^{2}\), which accounts for both the drag-to-lift force ratio (\({s}_{1}={F}_{D}/{F}_{L}\)) and its fluctuation (\({s}_{2}\)). The second was to recover the wing from the stall state.
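The signal chain in this paragraph (sensing, rate coding, crossbar inference, actuation) can be condensed into one control step. In the hypothetical helpers below, each state \(s_i\) is mapped to a pulse firing rate that increases with its magnitude. The text only states that this mapping is monotonic; a clipped linear map is assumed here. The 2×2 conductance matrix then converts rates into currents via Eq. 1. A \(y_1\) pulse raises \(V_a\) and a \(y_2\) pulse lowers it. The winner-take-all neuron model, the rate ceiling, the voltage step, and the voltage limits are all illustrative assumptions, not the interface and neuron circuits used in the experiments.

```python
from typing import List, Sequence, Tuple

F_MAX_HZ = 100.0              # hypothetical maximum pulse rate
S_FULL_SCALE = 1.0            # hypothetical state magnitude that saturates the rate
DV_STEP = 10.0                # hypothetical change in V_a per y pulse (V)
VA_MIN, VA_MAX = 0.0, 1000.0  # hypothetical actuator voltage limits (V)


def encode_rates(s: Sequence[float]) -> List[float]:
    """Rate coding: firing rate rises monotonically with |s_i| (clipped linear map assumed)."""
    return [F_MAX_HZ * min(abs(s_i) / S_FULL_SCALE, 1.0) for s_i in s]


def control_step(W: Sequence[Sequence[float]], s: Sequence[float],
                 va: float) -> Tuple[float, Tuple[int, int]]:
    """One sensing -> inference -> actuation step for the 2 x 2 circuit.

    Hypothetical winner-take-all neuron: the output with the larger current fires
    (y1 raises V_a, y2 lowers it); equal currents fire neither.
    """
    x = encode_rates(s)
    I = [sum(w_nm * x_m for w_nm, x_m in zip(row, x)) for row in W]   # Eq. 1: I = W x
    if I[0] == I[1]:
        return va, (0, 0)
    y = (1, 0) if I[0] > I[1] else (0, 1)
    va = va + DV_STEP * (y[0] - y[1])
    return min(max(va, VA_MIN), VA_MAX), y


va, y = control_step([[2.0, 0.0], [0.0, 1.0]], s=[0.5, 0.5], va=100.0)
print(va, y)  # 110.0 (1, 0)
```

In the experiments, \(\mathbf{W}\) is also updated by Eq. 2 between such steps. The sketch isolates the inference path only.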

a A schematic shows the experimental settings, in which a synstor circuit (left), human operators (middle), and an ANN (right) all receive sensing signals of wing states (\({{\bf{s}}}\)) from the wing. These signals include the drag-to-lift force ratio (\({s}_{1}\)) and its fluctuation magnitude (\({s}_{2}\)). The wing shape and its states (\({{\bf{s}}}\)) are modified by actuation signals (\({{\bf{y}}}\)). b An image of the morphing wing used in the wind tunnel experiments. During inference, the sensing signals \({{\bf{s}}}\) are converted to input signals (\({{\bf{x}}}\)), which in turn trigger output currents \({{\bf{I}}}\) according to the inference algorithm \({{\bf{I}}}={{\bf{W}}}\,{{\bf{x}}}\). In the synstor or human neurobiological circuits, the conductance matrices (\({{\bf{W}}}\)) of synstors or synapses, and hence the inference algorithm \({{\bf{I}}}={{\bf{W}}}(t){{\bf{x}}}\), can be concurrently modified following the correlative learning rule \(\dot{{{\bf{W}}}}(t)=\alpha \,{{\bf{z}}}\otimes {{\bf{x}}}\). In the sequential inference and learning processes of the ANN, the inference data, including \({{\bf{x}}}\), \({{\bf{y}}}\), and \({{\bf{W}}}(n)\) from the \({n}^{{th}}\) inference episode, are sent to a computer. Subsequently, \({{\bf{W}}}(n)\) is modified to \({{\bf{W}}}(n+1)\) in the \({n}^{{th}}\) learning episode according to a reinforcement learning algorithm \({{\bf{W}}}(n+1)=f[{{\bf{W}}}(n),\,{{\bf{x}}}(n),\,{{\bf{y}}}(n)]\). The inference algorithm \({{\bf{I}}}={{\bf{W}}}(n+1){{\bf{x}}}\) is then executed iteratively in the \({(n+1)}^{{th}}\) inference episode in Turing mode.

Morphing wing controlled by human operators

In the experiments involving a morphing wing controlled by human operators (Fig. 3, Methods), operators with no prior knowledge of the wing or its control system viewed the sensing signals (\({{\bf{s}}}\)) on a computer monitor. They were tasked with minimizing the objective function \(E=\frac{1}{2}{{{\bf{s}}}}^{2}\) (Fig. S3b). These experiments were performed with the wing under the same pre-stall and stall conditions as those used for the synstor circuit. The \({{\bf{s}}}\) signals were processed by the human neurobiological circuits for inference (\({{\bf{I}}}={{\bf{W}}}\,{{\bf{x}}}\), Eq. 1). This prompted the operators to generate actuation signals (\({{\bf{y}}}\)) by pressing two keys on a keyboard to adjust the actuation voltage \({V}_{a}\), thereby modifying the wing shape and its states \({{\bf{s}}}\). The firing rates of the \({{\bf{y}}}\) pulses corresponded to the duration of keystrokes (Figs. S5b, S6b). The conductance matrices (\({{\bf{W}}}\)) of synapses in the human neurobiological circuits could be concurrently adjusted according to the STDP learning rule \(\dot{{{\bf{W}}}}=\alpha \,{{\bf{z}}}\otimes {{\bf{x}}}\).

Morphing wing controlled by ANN

In the experiments in which the morphing wing was controlled by a state-of-the-art ANN with optimized structure and learning parameters (Fig. 3, Methods, Figs. S3c, S7), a computer received the sensing signals (\({{\bf{s}}}\)) and executed the inference algorithm (\({{\bf{I}}}={{\bf{W}}}\,{{\bf{x}}}\), Eq. 1) within the ANN. It then triggered actuation pulses (\({{\bf{y}}}\)) to adjust the actuation voltage \({V}_{a}\), thereby modifying the shape and states (\({{\bf{s}}}\)) of the wing. These experiments were performed with the wing under the same pre-stall and stall conditions as those used for the synstor circuit and human operators (Figs. S5c, S6c). To ensure a fair comparison, we used a policy gradient-based reinforcement learning (RL) algorithm, Monte-Carlo Policy Gradient with baseline, as the benchmark. Because the actions were discrete, we did not use RL algorithms designed for continuous actions, such as deep deterministic policy gradient (DDPG). As in the synstor trials, the synaptic weight matrices (\({{\bf{W}}}\)) in the ANN were initialized to random values before the learning experiment began. Because of the large data size, executing the learning algorithm took longer than executing the inference algorithm; therefore, inference and learning were executed sequentially in Turing mode. In the offline learning process, the inference data from the \({n}^{{th}}\) inference episode, including \({{\bf{x}}}\), \({{\bf{y}}}\), and \({{\bf{W}}}(n)\), were saved in the computer. The weight matrix \({{\bf{W}}}(n)\) was then modified to \({{\bf{W}}}(n+1)\) in the \({n}^{{th}}\) learning episode according to a reinforcement learning algorithm46 (Methods, Supplementary Materials). The inference algorithm (\({{\bf{I}}}={{\bf{W}}}\,{{\bf{x}}}\)) was subsequently executed iteratively based on \({{\bf{W}}}(n+1)\) in the \({(n+1)}^{{th}}\) episode.
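The episodic Turing-mode loop described here can be contrasted with the concurrent rule. Each iteration runs an inference episode with frozen weights \(\mathbf{W}(n)\), stores the data, and only then computes \(\mathbf{W}(n+1)\). The sketch below implements this loop with a textbook Monte-Carlo policy gradient (REINFORCE) using a per-time-step running-average baseline. It is a minimal sketch and not the benchmark network used in the experiments. The linear-softmax policy without a bias term, the two discrete actions (raise or lower \(V_a\) by one unit), the integer toy plant, the reward \(-E\), and all hyperparameters are hypothetical.

```python
import math
import random
from typing import List, Tuple

S_SCALE = 4.0        # hypothetical normalization of the toy state
ACTIONS = (+1, -1)   # action 0 raises V_a one unit, action 1 lowers it (toy units)


def features(s: float) -> List[float]:
    return [s / S_SCALE]   # no bias term: keeps the toy problem symmetric about s = 0


def policy(W: List[List[float]], s: float) -> List[float]:
    """Softmax over linear logits W[a] . phi(s)."""
    phi = features(s)
    logits = [sum(w * f for w, f in zip(row, phi)) for row in W]
    m = max(logits)
    exps = [math.exp(lg - m) for lg in logits]
    total = sum(exps)
    return [e / total for e in exps]


def run_episode(W, rng, horizon) -> Tuple[List[int], List[int], List[float]]:
    """Inference episode with frozen W(n): returns states, actions, rewards."""
    s = rng.randint(-4, 4)   # toy offset of V_a from an unknown optimum
    states, actions, rewards = [], [], []
    for _ in range(horizon):
        a = 0 if rng.random() < policy(W, s)[0] else 1
        states.append(s)
        actions.append(a)
        s += ACTIONS[a]
        rewards.append(-0.5 * (s / S_SCALE) ** 2)   # reward = -E for the toy plant
    return states, actions, rewards


def reinforce(episodes: int = 2000, horizon: int = 8, lr: float = 0.05,
              beta: float = 0.05, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    W = [[0.0] * len(features(0.0)) for _ in ACTIONS]
    baseline = [0.0] * horizon   # running mean of the return at each time step
    for _ in range(episodes):
        states, actions, rewards = run_episode(W, rng, horizon)
        returns, G = [], 0.0
        for r in reversed(rewards):   # undiscounted Monte-Carlo return G_t
            G += r
            returns.append(G)
        returns.reverse()
        # Learning episode: accumulate (G_t - b_t) * grad log pi(a_t | s_t), then W(n) -> W(n+1).
        grads = [[0.0] * len(row) for row in W]
        for t, (s, a, G_t) in enumerate(zip(states, actions, returns)):
            adv = G_t - baseline[t]
            probs = policy(W, s)
            phi = features(s)
            for k in range(len(W)):
                coeff = adv * ((1.0 if k == a else 0.0) - probs[k])
                for i, f in enumerate(phi):
                    grads[k][i] += coeff * f
        for k in range(len(W)):
            for i in range(len(W[k])):
                W[k][i] += lr * grads[k][i]
        baseline = [(1 - beta) * b + beta * g for b, g in zip(baseline, returns)]
    return W


W = reinforce()
print(policy(W, 4), policy(W, -4))
```

The baseline is built only from earlier episodes, so it does not bias the gradient. Even in this tiny problem, the weights change only once per completed episode, which is the sequential structure the text describes.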

Results

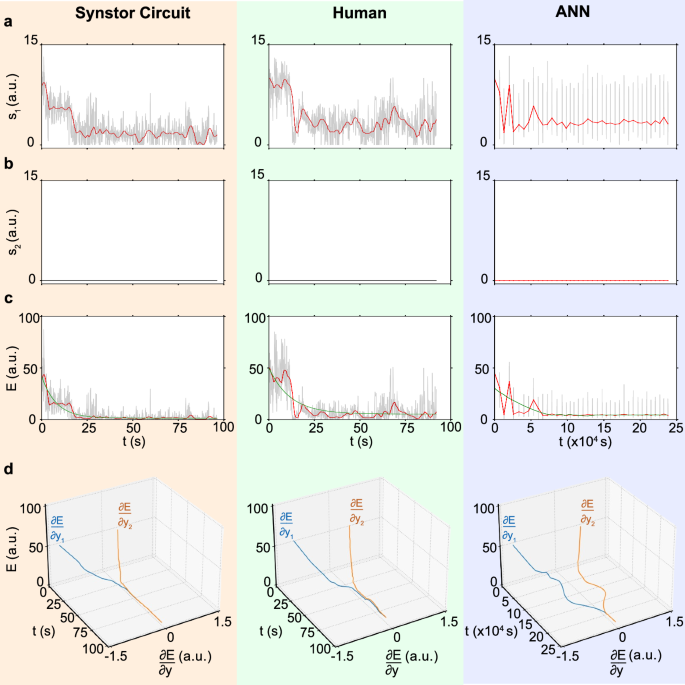

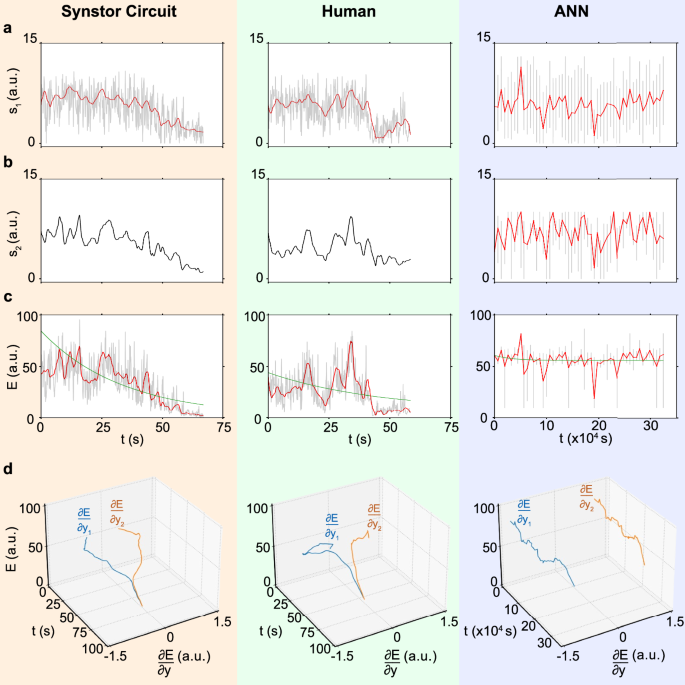

In the pre-stall condition with an \({8}^{\circ }\) angle of attack, the synstor circuit, human operators, and ANN all successfully learned to adjust the wing shape. Each minimized the drag-to-lift force ratio (\({s}_{1}\)) and the objective function \(E=\frac{1}{2}{{{\bf{s}}}}^{2}\) (Fig. 4 and Supplementary Materials Movie S1). The fluctuation in the drag-to-lift force ratio (\({s}_{2}\)) is inherently small under the pre-stall condition, and its change remains negligible throughout the experiment. When the wing was set in a stall condition with an \({18}^{\circ }\) angle of attack, the synstor circuit and a few human operators successfully learned to adjust the wing shape, minimizing \({s}_{1}\), \({s}_{2}\), and \(E\) and thus recovering the wing from stall (Fig. 5 and Supplementary Materials Movie S2). However, the ANN was unable to reduce \({s}_{1}\), \({s}_{2}\), or \(E\) in sequential inference and learning trials under the stall condition.

Pre-stall condition with the wing at an \({8}^{\circ }\) angle of attack. The panels show a the drag-to-lift force ratio, \({s}_{1}={F}_{D}/{F}_{L}\); b the magnitude of the fluctuation of the drag-to-lift force ratio, \({s}_{2}\); c the objective function, \(E=\frac{1}{2}{{{\bf{s}}}}^{2}\) (arbitrary unit); and d \(E\) and \(\frac{\partial E}{\partial {y}_{n}}\) (arbitrary unit, Methods). Each is displayed versus time \(t\) (gray and black lines) for the wing controlled by a synstor circuit (left), a human (middle), and an ANN (right). Here \(t\) represents the concurrent inference and learning time in the synstor and human neurobiological circuits, and the cumulative time for sequential inference and learning in the ANN. The average values of \({s}_{1}\) and \(E\) are also shown versus time \(t\) (red lines). The \(E-t\) curves are best fitted by \(E(t)=(E(0)-{E}_{e})\,{e}^{-t/{T}_{L}}+{E}_{e}\) (green lines).

Stall condition with the wing at an \({18}^{\circ }\) angle of attack. The panels show a the drag-to-lift force ratio, \({s}_{1}={F}_{D}/{F}_{L}\); b the magnitude of the fluctuation of the drag-to-lift force ratio, \({s}_{2}\); c the objective function, \(E=\frac{1}{2}{{{\bf{s}}}}^{2}\) (arbitrary unit); and d \(E\) and \(\frac{\partial E}{\partial {y}_{n}}\) (arbitrary unit, Methods). Each is displayed versus time \(t\) (gray and black lines) for the wing controlled by a synstor circuit (left), a human (middle), and an ANN (right). Here \(t\) represents the concurrent inference and learning time in the synstor and human neurobiological circuits, and the cumulative time for sequential inference and learning in the ANN. The average values of \({s}_{1}\) and \(E\) are also shown versus time \(t\) (red lines). The \(E-t\) curves are best fitted by \(E(t)=(E(0)-{E}_{e})\,{e}^{-t/{T}_{L}}+{E}_{e}\) (green lines).

\(\frac{\partial E}{\partial {{\bf{y}}}}\) can be estimated from the experimental data of \(E\) and \({{\bf{y}}}\) using Eq. 10 (Methods). \(\frac{\partial E}{\partial {{\bf{y}}}}\) and \(\overline{E}\) are displayed versus time for the synstor and human neurobiological circuits in both pre-stall and stall conditions (Figs. 4d, 5d). At the initial stage of the experiments, \(\frac{\partial E}{\partial {{\bf{y}}}}\ne 0\). The rate of change of \(\overline{E}\) due to learning is therefore \({\left(\frac{\partial \overline{E}}{\partial t}\right)}_{L}=\overline{\frac{\partial E}{\partial {{\bf{W}}}}\dot{{{\bf{W}}}}}={\sum}_{n}2\left|\alpha \right|{\eta }_{n}\left(\frac{\partial E}{\partial {E}_{{{\bf{x}}}}}\right){\left(\frac{\partial {E}_{{{\bf{x}}}}}{\partial {y}_{n}}\right)}^{2}\left(\frac{\partial {y}_{n}}{\partial {I}_{n}}\right)={\sum}_{n}2\left|\alpha \right|{\eta }_{n}\left(\frac{\partial E}{\partial {y}_{n}}\right)\left(\frac{\partial {E}_{{{\bf{x}}}}}{\partial {y}_{n}}\right)\left(\frac{\partial {y}_{n}}{\partial {I}_{n}}\right) < 0\) (Methods, Eq. 9), resulting in \(\frac{d\overline{E}}{dt} < 0\) and \(\dot{{{\bf{W}}}}\ne 0\). The synstor and human neurobiological circuits operate in super-Turing mode, simultaneously performing inference and learning. They adjust \({{\bf{W}}}\) toward \(\hat{{{\bf{W}}}}=\arg {\min }_{{{\bf{W}}}}E\), reducing \(\left|\frac{\partial E}{\partial {y}_{n}}\right|\) and \(E\). At the late stage of the experiments, \(\frac{\partial E}{\partial {{\bf{y}}}}\approx 0\) and \({\left(\frac{\partial \overline{E}}{\partial t}\right)}_{L}\approx 0\), and \(\overline{E}\) reaches its minimum value with \(\frac{d\overline{E}}{dt}\approx 0\). 
Under this condition, \(\dot{{{\bf{W}}}}\approx 0\) and \({{\bf{W}}}\approx \hat{{{\bf{W}}}}\), at which point the synstor and neurobiological circuits operate in Turing mode, executing only the inference algorithm \({{\bf{I}}}=\hat{{{\bf{W}}}}{{\bf{x}}}\). At the initial stage of the ANN experiment in the pre-stall condition, \(\frac{\partial E}{\partial {{\bf{y}}}}\ne 0\) (Fig. 4d), \(\frac{\partial E}{\partial {{\bf{W}}}}\ne 0\) (Fig. S8a), and \(\frac{d\overline{E}}{dt} < 0\). The computer sequentially executes the inference and learning algorithms in Turing mode. It adjusts \({{\bf{W}}}\) toward \(\hat{{{\bf{W}}}}=\arg {\min }_{{{\bf{W}}}}E\) during learning and reduces \(\left|\frac{\partial E}{\partial {y}_{n}}\right|\) and \(E\) during inference. At the late stage of the experiment, \(\frac{\partial E}{\partial {{\bf{y}}}}\), \(\frac{\partial E}{\partial {{\bf{W}}}}\), and \(\frac{d\overline{E}}{dt}\) approach zero, \(\overline{E}\) reaches its minimum value, and \({{\bf{W}}}\approx \hat{{{\bf{W}}}}\). In the stall condition, the wing experiences a chaotic aerodynamic environment, resulting in larger fluctuations in \({s}_{1}\), \({s}_{2}\), and \(E\) (Fig. 5) than in the pre-stall condition (Fig. 4). Because of these chaotic environmental changes, \(\hat{{{\bf{W}}}}=\arg {\min }_{{{\bf{W}}}}E\) varies dynamically, but the ANN cannot adjust \({{\bf{W}}}\) in real time during inference to track these changes. As a result, sequential inference and learning fail to adjust \({{\bf{W}}}\) toward \(\hat{{{\bf{W}}}}\) or to reduce \(E\) (Fig. S8b).

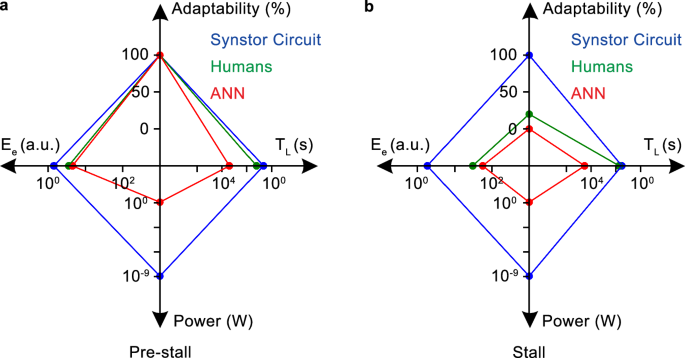

The curves of \(E\) versus \(t\) are best fitted by \(E(t)=\left(E(0)-E_{e}\right)e^{-t/T_{L}}+E_{e}\) (Eq. 12), from which the learning time \(T_{L}\) and the equilibrium objective function \(E_{e}\), reached when \(t\gg T_{L}\) and \(\dot{E}\approx 0\), are extracted. At this equilibrium, both synstor circuits and human neurobiological circuits operate in Turing mode, with \(\dot{\mathbf{W}}\approx 0\) and \(\mathbf{W}\approx \hat{\mathbf{W}}=\arg\min_{\mathbf{W}}E\). In the pre-stall condition with the wing at an \(8^{\circ}\) angle of attack, the average \(T_{L}\) of the synstor circuit over multiple trials (\(4.6\,\mathrm{s}\), standard deviation \(\sigma=0.5\,\mathrm{s}\)) is shorter than that of the human operators (\(16.8\,\mathrm{s}\), \(\sigma=2.2\,\mathrm{s}\)) and far shorter than that of the ANN (\(2656\,\mathrm{s}\), \(\sigma=192\,\mathrm{s}\)) (Fig. 6a). The average \(E_{e}\) of the synstor circuit (\(1.4\) a.u., \(\sigma=0.2\)) and of the humans (\(3.7\) a.u., \(\sigma=0.8\)) over multiple trials is lower than that of the ANN (\(4.3\) a.u., \(\sigma=0.9\)) (Fig. 6a). Adaptability to the environment is quantified as the success rate in minimizing \(E\) toward \(E_{e}\) over multiple trials. In the pre-stall condition, the adaptability of the synstor circuit, human operators, and ANN is \(100\%\) with \(\sigma=0\) (Fig. 6a).
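The fitting routine behind Eq. 12 is not specified here. As a minimal sketch, assuming a least-squares criterion is acceptable, \(T_L\) can be scanned on a log-spaced grid; for each candidate the model is linear in \(E(0)-E_e\) and \(E_e\), so those two parameters follow from ordinary least squares. The function name `fit_learning_curve`, the grid bounds, and the synthetic data below are illustrative assumptions, not the authors' code or measurements.

```python
import math


def fit_learning_curve(t, E, tl_min=0.01, tl_max=1.0e5, n_grid=2000):
    """Fit E(t) = (E0 - Ee) * exp(-t / TL) + Ee  (Eq. 12).

    For a fixed TL the model is linear in a = E0 - Ee and b = Ee, so (a, b)
    come from ordinary least squares; TL itself is scanned on a log grid.
    Returns (TL, E0, Ee) for the grid point with the smallest squared error.
    """
    n = len(t)
    best = None
    for k in range(n_grid):
        tl = tl_min * (tl_max / tl_min) ** (k / (n_grid - 1))
        u = [math.exp(-ti / tl) for ti in t]  # decaying basis function
        su = sum(u)
        suu = sum(ui * ui for ui in u)
        se = sum(E)
        sue = sum(ui * ei for ui, ei in zip(u, E))
        det = n * suu - su * su
        if abs(det) < 1e-12:
            continue  # basis is numerically constant for this TL; skip it
        a = (n * sue - su * se) / det  # E0 - Ee
        b = (se - a * su) / n          # Ee
        sse = sum((a * ui + b - ei) ** 2 for ui, ei in zip(u, E))
        if best is None or sse < best[0]:
            best = (sse, tl, a + b, b)
    _, tl, e0, ee = best
    return tl, e0, ee


# Synthetic, noise-free curve (illustrative values only, not experimental data)
t = [0.5 * i for i in range(121)]
E = [(10.0 - 1.4) * math.exp(-ti / 4.6) + 1.4 for ti in t]
print(fit_learning_curve(t, E))
```

On noise-free synthetic data the grid scan recovers \(T_L\) to within the grid spacing (under 1% here); a nonlinear optimizer could refine the estimate if higher precision were needed.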

The average learning time (\(T_{L}\)), equilibrium objective function (\(E_{e}\)), adaptability to the changing environment, and power consumption across multiple trials for the wing controlled by the synstor circuit (blue), human operators (green), and ANN (red): (a) in the pre-stall condition with the wing at an \(8^{\circ}\) angle of attack and (b) in the stall condition with the wing at an \(18^{\circ}\) angle of attack.

In the stall condition with the wing at an \(18^{\circ}\) angle of attack, the average \(T_{L}\) of the synstor circuit across multiple trials (\(33.2\,\mathrm{s}\), \(\sigma=2.5\,\mathrm{s}\)) is shorter than that of the human operators (\(55.8\,\mathrm{s}\), \(\sigma=7.5\,\mathrm{s}\)) and far shorter than that of the ANN (\(>34000\,\mathrm{s}\)) (Fig. 6b). The average \(E_{e}\) of the synstor circuit (\(1.9\) a.u., \(\sigma=0.5\)) across multiple trials is lower than those of the humans (\(30.0\) a.u., \(\sigma=7.1\)) and the ANN (\(55.4\) a.u., \(\sigma=2.5\)) (Fig. 6b). Here, adaptability is measured as the success rate in recovering the wing from stall and minimizing \(E\) toward \(E_{e}\) across multiple trials. The adaptability of the synstor circuit to the aerodynamic environment (\(100\%\), \(\sigma=0\)) exceeds that of the humans (\(20\%\), \(\sigma=17\%\)) and that of the ANN (\(0\%\), \(\sigma=0\)) (Fig. 6b). The power consumption of the synstor circuit for concurrent execution of the inference and learning algorithms (28 nW, Methods) was eight orders of magnitude lower than the aggregate power consumption of the computer executing the learning and inference algorithms sequentially (5.0 W, Methods). The power consumed by the human brain for this inference and learning task is difficult to estimate.
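The "eight orders of magnitude" figure follows directly from the two reported power values. The short check below makes the arithmetic explicit; the variable names are our own.

```python
import math

P_SYNSTOR_W = 28e-9  # synstor + neuron circuits: 28 nW (reported above)
P_COMPUTER_W = 5.0   # computer running the ANN: 5.0 W (reported above)

ratio = P_COMPUTER_W / P_SYNSTOR_W
orders = math.floor(math.log10(ratio))
print(f"ratio = {ratio:.2e}, i.e. {orders} orders of magnitude")
# prints: ratio = 1.79e+08, i.e. 8 orders of magnitude
```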

Discussions and conclusions

We have created a synstor circuit model that mimics a neurobiological circuit by simultaneously executing inference (\(\mathbf{I}=\mathbf{W}\,\mathbf{x}\), Eq. 1) and learning (\(\dot{\mathbf{W}}=\alpha\,\mathbf{z}\otimes\mathbf{x}\), Eq. 2) algorithms in super-Turing mode. Theoretical analysis shows that this concurrent operation can minimize the objective function of the system, \(E=\frac{1}{2}\mathbf{s}^{2}\), where \(\mathbf{s}\) represents the state of the system. When the inference algorithm deviates from its optimal form because of environmental shifts, conductance inaccuracies, or other influences, the synstor circuit actively corrects and optimizes it through simultaneous learning, driving the conductance matrix \(\mathbf{W}\) toward its ideal state \(\hat{\mathbf{W}}=\arg\min_{\mathbf{W}}E\) and minimizing \(E\). Once the inference algorithm approaches its optimal configuration (i.e., \(\mathbf{W}=\hat{\mathbf{W}}\)), the circuit can operate in Turing mode, functioning as a conventional neuromorphic circuit that executes a fixed, optimized inference algorithm (\(\mathbf{I}=\hat{\mathbf{W}}\,\mathbf{x}\)).
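To make Eqs. 1 and 2 concrete, the sketch below advances one discrete time step of concurrent inference and learning. It is a software illustration under stated assumptions, not a model of the synstor device physics: the analog, continuous-time update is approximated by a forward-Euler step of length `dt`, and `synstor_step` is a hypothetical helper name. With every \(z_n=0\), \(\mathbf{W}\) is left unchanged and only the inference algorithm is executed.

```python
from typing import List

Matrix = List[List[float]]


def synstor_step(W: Matrix, x: List[float], z: List[float],
                 alpha: float, dt: float):
    """One forward-Euler step of concurrent inference and learning.

    Inference (Eq. 1): I_n = sum_m w_nm * x_m, using the current W.
    Learning  (Eq. 2): dw_nm/dt = alpha * z_n * x_m.
    Returns (I, W_next); the input matrix W is left unmodified.
    """
    I = [sum(w_nm * x_m for w_nm, x_m in zip(row, x)) for row in W]
    W_next = [
        [w_nm + alpha * z_n * x_m * dt for w_nm, x_m in zip(row, x)]
        for row, z_n in zip(W, z)
    ]
    return I, W_next


W = [[1.0, 2.0], [3.0, 4.0]]
x = [1.0, 0.5]

# No z pulses: inference only, W stays fixed.
I, W_same = synstor_step(W, x, z=[0.0, 0.0], alpha=0.1, dt=1.0)
# With z pulses: the same step also updates every w_nm in parallel.
I2, W_new = synstor_step(W, x, z=[1.0, -1.0], alpha=0.1, dt=1.0)
```

Both outputs come from the same step: the current \(\mathbf{I}\) is computed with the pre-update \(\mathbf{W}\) while the outer-product update is applied, mirroring the concurrent (rather than sequential) execution described above.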

A synstor circuit was fabricated with a vertical stack comprising a Si channel, an SiO2 dielectric layer, a ferroelectric Hf0.5Zr0.5O2 layer, and a WO2.8 reference electrode. Each synstor also features lateral Schottky contacts between the Si channel and TiSi0.9/Ti input/output electrodes. Applying paired voltage pulses (\(x_m\) and \(z_n\)) to these electrodes progressively tunes the ferroelectric domains in the Hf0.5Zr0.5O2 layer, enabling analog conductance adjustment according to a correlative learning rule (\(\dot{w}_{nm}=\alpha z_n x_m\)). Conversely, a single input voltage pulse (\(x_m\)) alone induces a current following the inference algorithm (\(I_n=\sum_m w_{nm}x_m\)) while leaving the conductance unchanged, consistent with the learning rule (\(\dot{w}_{nm}=0\) when \(z_n=0\)).

Under this condition, the voltage drops primarily laterally across the Schottky junction and does not modify the ferroelectric domains within the Hf0.5Zr0.5O2 layer or the synstor conductance (i.e., \(\dot{w}_{nm}=0\) when \(x_m\ne 0\) and \(z_n=0\)), as per the learning rule \(\dot{w}_{nm}=\alpha z_n x_m\). Unlike other neuromorphic circuits, this synstor circuit uniquely enables concurrent execution of inference and learning algorithms in analog parallel mode, allowing it to operate in both super-Turing and Turing modes. During inference, it can dynamically optimize the inference algorithm via learning, adapt to environmental changes, and correct errors in its conductance matrix.

Experiments were conducted in which a morphing wing in a wind tunnel was controlled by a synstor circuit, human operators, or a computer-based ANN in both the pre-stall (\(8^{\circ}\) angle of attack) and stall (\(18^{\circ}\) angle of attack) conditions. The experimental objective was to minimize the drag-to-lift force ratio (\(s_1=F_D/F_L\)), its fluctuation (\(s_2\)), and the objective function \(E=\frac{1}{2}\mathbf{s}^{2}\), and to recover the wing from stall, by optimizing the shape of the morphing wing. Without prior learning, the synstor circuit and humans executed learning and inference concurrently in super-Turing mode, whereas the ANN executed inference and learning sequentially in Turing mode. In a synstor or neurobiological circuit, the conductance of each synstor or synapse can be dynamically adjusted and optimized in analog parallel mode to adapt to environmental changes. In contrast, an ANN cannot adjust its \(\mathbf{W}\) matrix during inference in response to environmental changes; it requires sequential inference and learning to determine the statistically optimal \(\mathbf{W}\) matrix across all conditions. Consequently, in the pre-stall condition, the synstor circuit and humans exhibited learning times (\(T_L\)) more than two orders of magnitude shorter than that of the ANN. In the stall condition, the wing faces a chaotic aerodynamic environment. During inference, both the synstor circuit and human neurobiological circuits can adjust and optimize their \(\mathbf{W}\) matrices in response to these chaotic changes; the synstor circuit and a few of the human operators thereby optimized the wing shape, adapted to the chaotic environment, and recovered the wing from stall.
In contrast, the ANN cannot adapt its \(\mathbf{W}\) matrix during inference and fails to derive a statistically optimal \(\mathbf{W}\) matrix across the chaotic environment, so it failed to recover the wing from stall. For the same reasons, the wing performance, measured by the post-learning equilibrium objective function (\(E_e\)), was better for both the synstor circuit and humans than for the ANN. The single-layer synstor circuit executes learning and inference concurrently in real time, dynamically optimizing its \(\mathbf{W}\) matrix and inference algorithm and triggering optimal output actuation signals (\(\mathbf{y}\)) to minimize the objective function (\(E\)). Conversely, the ANN and other neuromorphic circuits require additional time and energy for sequential data storage, learning-algorithm execution, and data transfer between circuits. Moreover, the conductance (\(<60\,\mathrm{nS}\)) and power consumption (\(<100\,\mathrm{nW}\)) of synstors are lower than those of transistors (\(<1\,\mathrm{mS}\) and \(<1\,\mathrm{mW}\))11,12,13,47, memristors (\(<10\,\mathrm{mS}\) and \(<1\,\mathrm{mW}\))31,32,33,34,35, and phase-change memory resistors (\(<10\,\mathrm{mS}\) and \(<1\,\mathrm{mW}\))36. Consequently, the power consumption of the synstor and neuron circuits for concurrent inference and learning (28 nW) is eight orders of magnitude lower than the aggregate power consumption of the computer executing the ANN learning and inference algorithms sequentially (5.0 W). The speed of executing these algorithms in analog parallel mode scales linearly with the number of synstors (\(MN\)) in an \(M\times N\) synstor circuit37,38,41. Synstor circuits thus offer a brain-inspired super-Turing computing platform for AI systems with extremely low power consumption, high-speed real-time learning and inference, self-correction of errors, and agile adaptability to dynamic, complex environments.

Methods

Theoretical analysis of synstor circuits in super-Turing mode

The temporal mean of the \(\mathbf{z}\) voltage pulses applied to the output electrodes of the synstor circuit is zero:

$$\overline{{{\bf{z}}}}=0$$

(4)

and the covariance between \(z_n\) and \(y_{n'}\) is

$$\overline{z_n y_{n'}}=\langle z_n, y_{n'}\rangle=\eta_n\,\delta_{nn'}$$

(5)

where \(\langle z_n, y_{n'}\rangle=\overline{(z_n-\overline{z_n})(y_{n'}-\overline{y_{n'}})}=\overline{z_n(y_{n'}-\overline{y_{n'}})}=\overline{z_n y_{n'}}\) because \(\overline{z_n}=0\); \(\delta_{nn'}\) denotes the Kronecker delta, with \(\delta_{nn'}=\begin{cases}0 & n'\ne n\\ 1 & n'=n\end{cases}\); and \(\eta_n\) is a parameter with \(\eta_n\le 0\).
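Conditions (4) and (5) can be checked on recorded pulse trains by estimating temporal means and covariances. The sketch below assumes that \(\mathbf{z}\) and \(\mathbf{y}\) have been sampled on a common uniform time grid, one list per neuron; the helper names, the tolerance, and the toy traces are hypothetical.

```python
from typing import List, Tuple


def temporal_mean(v: List[float]) -> float:
    return sum(v) / len(v)


def covariance(a: List[float], b: List[float]) -> float:
    """<a, b> = temporal mean of (a - mean(a)) * (b - mean(b))."""
    ma, mb = temporal_mean(a), temporal_mean(b)
    return sum((ai - ma) * (bi - mb) for ai, bi in zip(a, b)) / len(a)


def pulse_statistics(z: List[List[float]], y: List[List[float]]
                     ) -> Tuple[List[float], List[List[float]]]:
    """Return the mean of each z_n and the matrix C[n][k] = <z_n, y_k>."""
    z_means = [temporal_mean(zn) for zn in z]
    C = [[covariance(zn, yk) for yk in y] for zn in z]
    return z_means, C


def satisfies_eqs_4_5(z, y, tol=1e-9) -> bool:
    """Check Eq. 4 (zero-mean z_n) and Eq. 5 (diagonal <z_n, y_k>, eta_n <= 0)."""
    z_means, C = pulse_statistics(z, y)
    if any(abs(m) > tol for m in z_means):
        return False
    for n, row in enumerate(C):
        if any(abs(c) > tol for k, c in enumerate(row) if k != n):
            return False
        if row[n] > tol:  # eta_n = C[n][n] must be non-positive
            return False
    return True


# Toy traces: z_n is the negated, mean-removed y_n, so eta_n = -var(y_n) < 0.
y = [[1.0, 0.0, 1.0, 0.0], [1.0, 1.0, 0.0, 0.0]]
z = [[-(v - temporal_mean(yn)) for v in yn] for yn in y]
```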

The learning rule observed in synapses within neurobiological circuits, known as STDP3,5, can also be formulated as \(\frac{d\mathbf{W}}{dt}=\alpha\,\mathbf{z}\otimes\mathbf{x}\) (Eq. 2), or \(\frac{dw_{nm}}{dt}=\alpha\,z_n x_m\), with \(z_n(t)=\begin{cases}A_{-}e^{(t-t_n^y)/\tau_{-}} & t<t_n^y\\ -A_{+}e^{-(t-t_n^y)/\tau_{+}} & t\ge t_n^y\end{cases}\), where \(t_n^y\) denotes the moment when a pulse (\(\mathbf{y}\)) is triggered at the \(n^{\mathrm{th}}\) postsynaptic neuron, \(A_{+}>0\) and \(A_{-}>0\) are amplitude constants, and \(\tau_{+}>0\) and \(\tau_{-}>0\) are time constants. In STDP, \(\mathbf{z}\) also satisfies the conditions \(\overline{\mathbf{z}}=0\) (Eq. 4) and \(\overline{z_n y_{n'}}=\eta_n\,\delta_{nn'}\) (Eq. 5), with \(\eta_n\ge 0\) for STDP and \(\eta_n\le 0\) for anti-STDP5.
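Whether the STDP kernel above satisfies Eq. 4 depends on its constants. Integrating \(z_n(t)\) around a single isolated spike gives \(A_{-}\tau_{-}-A_{+}\tau_{+}\), so (by our own derivation, not a statement from the source) the kernel has zero temporal mean when \(A_{-}\tau_{-}=A_{+}\tau_{+}\). The midpoint-rule sketch below checks this numerically; the function names and parameter values are arbitrary illustrations.

```python
import math


def stdp_z(t, t_spike, a_minus, tau_minus, a_plus, tau_plus):
    """z_n(t) for the STDP form given in the text (single spike at t_spike)."""
    dt = t - t_spike
    if dt < 0:
        return a_minus * math.exp(dt / tau_minus)
    return -a_plus * math.exp(-dt / tau_plus)


def stdp_integral(a_minus, tau_minus, a_plus, tau_plus, steps=100_000):
    """Midpoint-rule integral of z_n(t) over a window wide enough for the
    exponential tails to be negligible; analytically A-*tau- - A+*tau+."""
    half = 20.0 * max(tau_minus, tau_plus)
    h = 2.0 * half / steps  # even `steps` puts t_spike = 0 on a cell boundary
    return h * sum(
        stdp_z(-half + (k + 0.5) * h, 0.0, a_minus, tau_minus, a_plus, tau_plus)
        for k in range(steps)
    )


# Balanced kernel: A-*tau- = A+*tau+, so the integral (and the mean of z) vanishes.
balanced = stdp_integral(1.0, 0.02, 0.5, 0.04)
# Unbalanced kernel: integral is close to 0.02 - 0.04 = -0.02.
unbalanced = stdp_integral(1.0, 0.02, 1.0, 0.04)
```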

The rate of change of the objective function \(E\) due to learning (i.e., modification of \(\mathbf{W}\)) is

$${\left(\frac{\partial E}{\partial t}\right)}_{L}=\frac{\partial E}{\partial {{\bf{W}}}}\dot{{{\bf{W}}}}={\sum}_{n\,}\left(\frac{\partial E}{\partial {E}_{{{\boldsymbol{x}}}}}\right)\left(\frac{\partial {E}_{{{\boldsymbol{x}}}}}{\partial {y}_{n}}\right)\left(\frac{\partial {y}_{n}}{\partial {I}_{n}}\right)2\left|\alpha \right|{z}_{n}{E}_{{{\boldsymbol{x}}}}$$

(6)

where \(E=\frac{1}{2}{\sum}_{{m}}{s}_{m}^{2}\), \({E}_{{{\boldsymbol{x}}}}=\frac{1}{2}{\sum}_{{m}}{x}_{m}^{2}\), \({\left(\frac{\partial E}{\partial t}\right)}_{L}=\frac{\partial E}{\partial {{\boldsymbol{W}}}}\dot{{{\boldsymbol{W}}}}={\sum}_{n,{m}}\left(\frac{\partial E}{\partial {w}_{{nm}}}\right){\dot{w}}_{{nm}}={\sum}_{n,{m}}\left(\frac{\partial E}{\partial {E}_{{{\boldsymbol{x}}}}}\right)\left(\frac{\partial {E}_{{{\boldsymbol{x}}}}}{\partial {y}_{n}}\right)\left(\frac{\partial {y}_{n}}{\partial {I}_{n}}\right)\left(\frac{\partial {I}_{n}}{\partial {w}_{{nm}}}\right){\dot{w}}_{{nm}}={\sum}_{n,{m}}\left(\frac{\partial E}{\partial {E}_{{{\boldsymbol{x}}}}}\right)\left(\frac{\partial {E}_{{{\boldsymbol{x}}}}}{\partial {y}_{n}}\right)\left(\frac{\partial {y}_{n}}{\partial {I}_{n}}\right){x}_{m}(\alpha {z}_{n}{x}_{m})={\sum}_{{n}}\left(\frac{\partial E}{\partial {E}_{{{\boldsymbol{x}}}}}\right)\left(\frac{\partial {E}_{{{\boldsymbol{x}}}}}{\partial {y}_{n}}\right)\left(\frac{\partial {y}_{n}}{\partial {I}_{n}}\right)2\left|\alpha \right|{z}_{n}{E}_{{{\boldsymbol{x}}}}\), with \(\frac{\partial {I}_{n}}{\partial {w}_{{nm}}}={x}_{m}\) due to \({I}_{n}={\sum}_{{m}}{w}_{{nm}}{x}_{m}\) (Eq. 1), and \({\dot{w}}_{{nm}}=\alpha {z}_{n}{x}_{m}\) (Eq. 2). \({E}_{{{\boldsymbol{x}}}}\) and \({y}_{n}\) are discontinuous pulse functions and not differentiable; thus, \(\frac{\partial E}{\partial {E}_{{{\boldsymbol{x}}}}}\), \(\frac{\partial {E}_{{{\boldsymbol{x}}}}}{\partial {y}_{n}}\), and \(\frac{\partial {y}_{n}}{\partial {I}_{n}}\) in Eq. 6 need to be derived through fitting experimental data. The change of \({E}_{{{\boldsymbol{x}}}}\) within a learning period can be expressed in a linear model as:

$$\delta {E}_{{{\boldsymbol{x}}}}={\sum}_{{n}^{{\prime} }\,}\left(\frac{\partial {E}_{{{\boldsymbol{x}}}}}{\partial {y}_{{n}^{{\prime} }}}\right)\delta {y}_{{n}^{{\prime} }}+\delta {E}_{{{\boldsymbol{x}}}}^{0}$$

(7)

where \({\sum}_{{n}^{{\prime} }\,}\left(\frac{\partial {E}_{{{\boldsymbol{x}}}}}{\partial {y}_{{n}^{{\prime} }}}\right)\delta {y}_{{n}^{{\prime} }}\) represents the change in \({E}_{{{\boldsymbol{x}}}}\) due to \(\delta {y}_{{n}^{{\prime} }}\) with \(\left(\frac{\partial {E}_{{{\boldsymbol{x}}}}}{\partial {y}_{{n}^{{\prime} }}}\right)\) as coefficients in the model, and \(\delta {E}_{{{\boldsymbol{x}}}}^{0}\) represents the part of \(\delta {E}_{{{\boldsymbol{x}}}}\) unrelated to \(\delta {y}_{{n}^{{\prime} }}\). By multiplying both sides of Eq. 7 by \({z}_{n}\) and then taking the temporal average over the learning period, we get

$$\overline{{z}_{n}{E}_{{{\boldsymbol{x}}}}}=\left(\frac{\partial {E}_{{{\boldsymbol{x}}}}}{\partial {y}_{n}}\right){\eta }_{n}$$

(8)

where \(\overline{z_n E_{\boldsymbol{x}}}=\overline{z_n\,\delta E_{\boldsymbol{x}}}=\sum_{n'}\left(\frac{\partial E_{\boldsymbol{x}}}{\partial y_{n'}}\right)\overline{z_n\,\delta y_{n'}}+\overline{z_n\,\delta E_{\boldsymbol{x}}^{0}}=\sum_{n'}\left(\frac{\partial E_{\boldsymbol{x}}}{\partial y_{n'}}\right)\overline{z_n y_{n'}}=\left(\frac{\partial E_{\boldsymbol{x}}}{\partial y_{n}}\right)\eta_n\). Here \(\overline{z_n\,\delta E_{\boldsymbol{x}}^{0}}=0\) because \(\delta E_{\boldsymbol{x}}^{0}\), being unrelated to \(\delta\mathbf{y}\), is taken to be uncorrelated with \(z_n\); \(\overline{z_n\,\delta E_{\boldsymbol{x}}}=\overline{z_n(E_{\boldsymbol{x}}-E_{\boldsymbol{x}}(0))}=\overline{z_n E_{\boldsymbol{x}}}\) and \(\overline{z_n\,\delta y_{n'}}=\overline{z_n(y_{n'}-y_{n'}(0))}=\overline{z_n y_{n'}}\) because \(\overline{z_n}=0\) (Eq. 4); and the sum over \(n'\) collapses to \(\left(\frac{\partial E_{\boldsymbol{x}}}{\partial y_{n}}\right)\eta_n\) because \(\overline{z_n y_{n'}}=\eta_n\,\delta_{nn'}\) (Eq. 5). The partial derivative \(\frac{\partial E}{\partial E_{\boldsymbol{x}}}\) can be derived as a coefficient of a linear model, \(\delta E=\left(\frac{\partial E}{\partial E_{\boldsymbol{x}}}\right)\delta E_{\boldsymbol{x}}+\delta E^{0}\), where \(\left(\frac{\partial E}{\partial E_{\boldsymbol{x}}}\right)\delta E_{\boldsymbol{x}}\) represents the change in \(E\) due to \(\delta E_{\boldsymbol{x}}\), and \(\delta E^{0}\) represents the part of \(\delta E\) unrelated to \(\delta E_{\boldsymbol{x}}\).
By multiplying both sides of the equation by \(E_{\boldsymbol{x}}-\overline{E_{\boldsymbol{x}}}\) and taking the temporal average over the learning period, \(\langle E,E_{\boldsymbol{x}}\rangle=\langle \delta E,E_{\boldsymbol{x}}\rangle=\left(\frac{\partial E}{\partial E_{\boldsymbol{x}}}\right)\langle \delta E_{\boldsymbol{x}},E_{\boldsymbol{x}}\rangle+\langle \delta E^{0},E_{\boldsymbol{x}}\rangle=\left(\frac{\partial E}{\partial E_{\boldsymbol{x}}}\right)\langle E_{\boldsymbol{x}},E_{\boldsymbol{x}}\rangle\); thus \(\frac{\partial E}{\partial E_{\boldsymbol{x}}}=\langle E,E_{\boldsymbol{x}}\rangle/\langle E_{\boldsymbol{x}},E_{\boldsymbol{x}}\rangle\). In the circuit, \(E\) increases monotonically with increasing \(E_{\boldsymbol{x}}\); thus \(\frac{\partial E}{\partial E_{\boldsymbol{x}}}\ge 0\). Similarly, the partial derivative \(\frac{\partial y_n}{\partial I_n}\) can be derived as a coefficient of a linear model, \(\delta y_n=\left(\frac{\partial y_n}{\partial I_n}\right)\delta I_n+\delta y_n^{0}\), where \(\left(\frac{\partial y_n}{\partial I_n}\right)\delta I_n\) represents the change in \(y_n\) due to \(\delta I_n\), and \(\delta y_n^{0}\) represents the part of \(\delta y_n\) unrelated to \(\delta I_n\). By multiplying both sides of the equation by \(I_n-\overline{I_n}\) and taking the temporal average over the learning period, \(\langle y_n,I_n\rangle=\langle \delta y_n,I_n\rangle=\left(\frac{\partial y_n}{\partial I_n}\right)\langle \delta I_n,I_n\rangle+\langle \delta y_n^{0},I_n\rangle=\left(\frac{\partial y_n}{\partial I_n}\right)\langle I_n,I_n\rangle\); thus \(\frac{\partial y_n}{\partial I_n}=\langle y_n,I_n\rangle/\langle I_n,I_n\rangle\). In the circuit, \(y_n\) increases monotonically with increasing \(I_n\); thus \(\frac{\partial y_n}{\partial I_n}\ge 0\). Based on Eq.
6, and treating the fitted partial derivatives as constant within the learning period, the rate of change of \(\overline{E}\) due to learning within that period is \(\left(\frac{\partial \overline{E}}{\partial t}\right)_{L}=\overline{\left(\frac{\partial E}{\partial t}\right)_{L}}=\sum_{n}\overline{\left(\frac{\partial E}{\partial E_{\boldsymbol{x}}}\right)\left(\frac{\partial E_{\boldsymbol{x}}}{\partial y_n}\right)\left(\frac{\partial y_n}{\partial I_n}\right)2\left|\alpha\right|z_n E_{\boldsymbol{x}}}=\sum_{n}\left(\frac{\partial E}{\partial E_{\boldsymbol{x}}}\right)\left(\frac{\partial E_{\boldsymbol{x}}}{\partial y_n}\right)\left(\frac{\partial y_n}{\partial I_n}\right)2\left|\alpha\right|\overline{z_n E_{\boldsymbol{x}}}=\sum_{n}2\left|\alpha\right|\eta_n\left(\frac{\partial E}{\partial E_{\boldsymbol{x}}}\right)\left(\frac{\partial E_{\boldsymbol{x}}}{\partial y_n}\right)^{2}\left(\frac{\partial y_n}{\partial I_n}\right)\), where \(\overline{z_n E_{\boldsymbol{x}}}=\left(\frac{\partial E_{\boldsymbol{x}}}{\partial y_n}\right)\eta_n\) (Eq. 8). During the learning process, \(\eta_n\le 0\) (Eq. 5), \(\frac{\partial E}{\partial E_{\boldsymbol{x}}}\ge 0\), and \(\frac{\partial y_n}{\partial I_n}\ge 0\); thus

$$\,{\left(\frac{\partial \overline{E}}{\partial t}\right)}_{L}=\overline{\frac{\partial E}{\partial {{\bf{W}}}}\dot{{{\bf{W}}}}}={\sum}_{n\,}2\left|\alpha \right|{\eta }_{n}\left(\frac{\partial E}{\partial {E}_{{{\boldsymbol{x}}}}}\right){\left(\frac{\partial {E}_{{{\boldsymbol{x}}}}}{\partial {y}_{n}}\right)}^{2}\left(\frac{\partial {y}_{n}}{\partial {I}_{n}}\right)\le 0$$

(9)

The overall rate of change of \(\overline{E}\) is \(\frac{d\overline{E}}{dt}=\left(\frac{\partial \overline{E}}{\partial t}\right)_{L}+\left(\frac{\partial \overline{E}}{\partial t}\right)_{0}\), where \(\left(\frac{\partial \overline{E}}{\partial t}\right)_{0}\) represents the rate of change of \(\overline{E}\) unrelated to learning. When the system and learning parameters, such as \(\alpha\), \(\eta_n\), \(\left|\frac{\partial E_{\boldsymbol{x}}}{\partial y_n}\right|\), and \(\frac{\partial y_n}{\partial I_n}\), are adjusted to meet the condition \(\left(\frac{\partial \overline{E}}{\partial t}\right)_{L}\le -\left(\frac{\partial \overline{E}}{\partial t}\right)_{0}\), then \(\frac{d\overline{E}}{dt}\le 0\) (Eq. 3).
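The estimators in this subsection reduce to a few covariance ratios. Below is a minimal sketch, assuming sampled time series over one learning period: the slope of a linear model is taken as \(\langle \text{dep},\text{indep}\rangle/\langle \text{indep},\text{indep}\rangle\) (as for \(\partial E/\partial E_{\boldsymbol{x}}\) and \(\partial y_n/\partial I_n\)), \(\partial E_{\boldsymbol{x}}/\partial y_n\) is recovered from Eq. 8 as \(\overline{z_n E_{\boldsymbol{x}}}/\eta_n\), and Eq. 9 is then evaluated. All function names are hypothetical, and the coefficients are treated as constant over the period.

```python
from typing import List


def _mean(v: List[float]) -> float:
    return sum(v) / len(v)


def cov(a: List[float], b: List[float]) -> float:
    """<a, b>: temporal covariance over the learning period."""
    ma, mb = _mean(a), _mean(b)
    return sum((ai - ma) * (bi - mb) for ai, bi in zip(a, b)) / len(a)


def linear_coefficient(dep: List[float], indep: List[float]) -> float:
    """Slope k in dep = k * indep + (unrelated part): k = <dep, indep> / <indep, indep>.
    Used for dE/dE_x (dep=E, indep=E_x) and dy_n/dI_n (dep=y_n, indep=I_n)."""
    return cov(dep, indep) / cov(indep, indep)


def dEx_dy_from_eq8(z_n: List[float], E_x: List[float], eta_n: float) -> float:
    """Eq. 8 rearranged: dE_x/dy_n = mean(z_n * E_x) / eta_n (requires eta_n != 0)."""
    return _mean([zi * ei for zi, ei in zip(z_n, E_x)]) / eta_n


def learning_rate_of_mean_E(alpha, eta, dE_dEx, dEx_dy, dy_dI) -> float:
    """Eq. 9: sum_n 2|alpha| eta_n (dE/dE_x) (dE_x/dy_n)^2 (dy_n/dI_n)."""
    return sum(2.0 * abs(alpha) * e * dE_dEx * g * g * h
               for e, g, h in zip(eta, dEx_dy, dy_dI))


# Toy check: E_x = 1 + 2*y_0 with z_0 = -(y_0 - mean(y_0)), so eta_0 = -0.25
y0 = [1.0, 0.0, 1.0, 0.0]
z0 = [-(v - _mean(y0)) for v in y0]
Ex = [1.0 + 2.0 * v for v in y0]
print(dEx_dy_from_eq8(z0, Ex, eta_n=cov(z0, y0)))  # recovers the slope 2.0
```

Because every \(\eta_n\le 0\) while the other factors are non-negative (or squared), each term returned by `learning_rate_of_mean_E` is non-positive, which is the sign argument of Eq. 9.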

Synstor circuit fabrications and characterization

The synstor circuit was fabricated on a silicon-on-insulator wafer featuring a 220-nm-thick p-doped Si layer on a 3-µm-thick buried silicon oxide layer. Ultraviolet (UV) photolithography and reactive ion etching (RIE; Oxford Plasmalab 80 Plus RIE) were used to fabricate 5 µm × 40 µm Si channels. After thermal oxidation to form a 3.5-nm-thick SiO2 layer and subsequent etching to define contact areas, 300-nm-thick Ti input/output electrodes were fabricated by UV photolithography, e-beam evaporation, and a lift-off process. The chip was annealed in forming gas (5% H2 in N2) at 460 °C for 30 min to form a titanium silicide layer sandwiched between the Si channel and the Ti input/output electrodes. A 12.6-nm-thick Hf0.5Zr0.5O2 layer was deposited on the chip by atomic layer deposition (Fiji Ultratech ALD) at 200 °C using tetrakis(dimethylamino)hafnium(IV) and tetrakis(dimethylamino)zirconium(IV) precursors. An 80-nm-thick W oxide layer was deposited on the Hf0.5Zr0.5O2 layer by magnetron sputtering (Denton Discovery), and W oxide reference electrodes were patterned by lifting off a photoresist layer. A rapid thermal anneal (Modular Process Technology RTP-600xp) at 500 °C crystallized the Hf0.5Zr0.5O2 layer and formed a WO2.8 reference electrode. Finally, the Hf0.5Zr0.5O2 layer was etched from the contact pads. The synstor features an active area of 200 µm2. Based on our device simulations, an HfZrO-based synstor can be miniaturized to an active area of ~0.002 µm2 using 28 nm fabrication techniques developed for HfZrO-based ferroelectric transistors48,49. Structural and material composition profiles of the synstor chip were characterized using STEM (scanning transmission electron microscopy), EDX (energy-dispersive X-ray spectroscopy), and EELS (electron energy loss spectroscopy). EDX analysis was performed using a JEOL JEM-2800 TEM operated at 200 kV.
Atomic-resolution STEM and EELS analyses were performed with a JEOL Grand ARM TEM operated at 300 kV and equipped with a spherical aberration corrector.

Computer-aided device simulation

Based on the properties of synstors and their circuits, we designed and simulated synstors using a technology computer-aided design (TCAD) simulator (Sentaurus Device, Synopsys). The simulator computed the device physics numerically by solving Poisson's equation for the electrostatics together with the drift-diffusion equations for carrier transport, under boundary conditions defined by the device structure. Quasi-stationary simulations were conducted under various voltage biases on the input/output electrodes of the synstors with respect to the grounded reference electrodes. The band diagrams of the synstors were extracted from these simulations, their electronic properties were analyzed, and an equivalent circuit (Fig. 2b) was established to emulate these properties.

Electrical tests of synstor circuits

During the electrical tests, the reference electrodes of the synstors were always grounded. Current-voltage characteristics were measured with a Keithley 4200 semiconductor parameter analyzer. The voltage pulses applied to the input and output electrodes of the devices and circuits were generated by an FPGA (National Instruments, cRIO-9063), computer-controlled modules (National Instruments, NI-9264), and a Tektronix AFG3152C waveform/function generator. Currents flowing through the synstors were measured by a semiconductor parameter analyzer, computer-controlled circuit modules (National Instruments, NI-9205 and NI-9403), and an oscilloscope (Tektronix TDS 3054B). Testing protocols were programmed in NI LabVIEW and implemented in an embedded FPGA (Xilinx), a microcontroller, and a reconfigurable I/O interface (NI CompactRIO).

Neuron circuits

Neuron circuits were designed in our laboratory to emulate the functions of biological neurons and were fabricated by Taiwan Semiconductor Manufacturing Company (TSMC) according to our design. The structure and properties of the neuron circuit will be detailed in a separate report. Currents from excitatory (or inhibitory) synstors, \(I\), are mirrored by transistors and charge the gate capacitor of a transistor, thereby increasing (decreasing) its potential \(V_m\). A leakage current, \(I_b\), controlled by the voltage \(V_b\), reduces \(V_m\). When \(V_m\) reaches a threshold value, an output \(y\) pulse is triggered. The neuron circuit fabricated by TSMC was tested by injecting a current \(I\) into it and measuring the output voltage pulses. As shown in Fig. S4, when \(I<I_{th}\) (a threshold current), the neuron circuit outputs no pulses. When \(I\ge I_{th}\), the frequency of the output pulses (\(f_{out}\)) increases with increasing \(I\). Both \(I_{th}\) and the dependence of \(f_{out}\) on \(I\) can be tuned via the control voltage \(V_b\). A digital circuit processes the \(\mathbf{y}\) pulses and generates \(\mathbf{z}\) pulses, with level shifters converting logic signals from the digital circuit to the voltage levels required by the neuron circuit. As shown in Figs. S5a and S6a, when a \(y_n\) pulse is triggered at \(t=t_n\) by the \(n^{th}\) neuron circuit, an 80-ms-wide \(-2.1\,\mathrm{V}\) (\(4.2\,\mathrm{V}\)) \(z_n\) pulse is triggered simultaneously at the \(n^{th}\) (\({n'}^{th}\)) output electrode connected to the excitatory (inhibitory) synstors, and an 80-ms-wide \(4.2\,\mathrm{V}\) (\(-2.1\,\mathrm{V}\)) \(z_n\) pulse is triggered at the \({n'}^{th}\) (\(n^{th}\)) output electrode connected to the inhibitory (excitatory) synstors at \(t=t_n+t_d\), with \(t_d=1.2\,\mathrm{s}\).
The \({{\boldsymbol{y}}}\) and \({{\boldsymbol{z}}}\) pulses satisfy the conditions \(\overline{{{\boldsymbol{z}}}}\,=0\) (Eq. 4) and\(\,\overline{{z}_{n}{y}_{{n}^{{\prime} }}}={\eta }_{n}\,{\delta }_{n{n}^{{\prime} }}\) (Eq. 5) with \({\eta }_{n}\le 0\). To minimize power consumption, all the analog transistors in the neuron circuit are operated in their subthreshold region. The circuit simulations indicate that the average power consumption of the neuron circuit (including z pulse generation) is 0.27 nW.
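Because the neuron circuit design is deferred to a separate report, the following is only a generic integrate-and-fire sketch under assumed dynamics: a constant leak current \(I_b\), a capacitance \(C\), a threshold \(V_{th}\), reset to zero after each output pulse, and a potential clipped at zero. Under these assumptions the threshold current is \(I_{th}=I_b\) and, above it, \(f_{out}\approx (I-I_b)/(C\,V_{th})\), which reproduces the qualitative behavior described for Fig. S4. The function name and the normalized units are hypothetical.

```python
def firing_rate(I, I_b, C, V_th, t_end=10.0, dt=1e-3):
    """Simulated output-pulse rate of a toy integrate-and-fire neuron.

    Assumed dynamics (illustrative, not the fabricated circuit):
      C dV_m/dt = I - I_b, with V_m clipped at 0 and reset to 0 after a pulse.
    """
    v, pulses = 0.0, 0
    for _ in range(round(t_end / dt)):
        v = max(v + (I - I_b) / C * dt, 0.0)  # integrate input minus leakage
        if v >= V_th:                          # threshold reached: emit a y pulse
            pulses += 1
            v = 0.0
    return pulses / t_end


# Normalized units: I_b = C = V_th = 1, so I_th = 1 and f_out is about I - 1 above it.
rates = {I: firing_rate(I, I_b=1.0, C=1.0, V_th=1.0) for I in (0.5, 2.0, 3.0)}
```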

Morphing wing and wind tunnel

During reinforcement learning, the discrete action space comprised 500 distinct voltage levels used to either raise or lower the actuation voltage applied to the piezoelectric actuators of the morphing wing. The wing has a rectangular planform with a NACA 0012 airfoil, a 12-inch chord, and a 15-inch span. Its morphing trailing edge consisted of two macro fiber composite (MFC) piezoelectric actuators, each bonded to a 0.001-inch stainless steel shim to produce bending. Because of the antagonistic design of the morphing tail, two voltages of opposite sign and proportional magnitude were supplied to the dual-MFC system so that both MFCs actuate the tail in the same direction. Therefore, although we report only the actuation voltage \(V_a\) for one MFC, the second MFC received a correspondingly scaled voltage. Through a flexure box interface, the two MFCs act antagonistically to smoothly and rapidly deflect the trailing edge and modify the camber of the airfoil, providing a multifunctional system that acts as both skin and actuator44,45. An increase in actuation voltage deflects the trailing edge of the morphing wing upward, whereas a decrease deflects it downward. The design is scalable to multiple piezoelectric actuators along the span (a spanwise morphing trailing edge) to achieve a continuous change in wing shape. The morphing wing experiments were performed in the open-loop wind tunnel facility at Stanford University, which has a 33 inch × 33 inch square test section. The wind speed was set to \(10.0\,\mathrm{m/s}\). The lift (\(F_L\)) and drag (\(F_D\)) forces on the wing were measured with strain gauges (OMEGA SGD-5/350-LY13) attached to the wing's mounting shaft.

Experimental setup for a morphing wing controlled by a synstor circuit

As shown in Figs. S5a and S6a, the lift and drag forces on the wing were measured by the strain gauges (OMEGA SGD-5/350-LY13), whose signals were digitized by an NI-9236 DAQ and processed by a PC. The sensing signals \(\mathbf{s}\), comprising the drag-to-lift force ratio (\(s_1=F_D/F_L\)) and the magnitude of its fluctuation (\(s_2\)), were converted to voltage pulses \(\mathbf{x}\) with an amplitude of \(-2.1\,\mathrm{V}\) or \(4.2\,\mathrm{V}\) and a duration of \(10\,\mathrm{ns}\). The firing rate of the \(\mathbf{x}\) pulses increased monotonically with increasing \(\mathbf{s}\). The \(\mathbf{x}\) pulses were input to the synstor circuit via an interface circuit (FPGA, Xilinx, Kintex-7) and generated currents through the synstor circuit following the inference algorithm \(\mathbf{I}=\mathbf{W}\,\mathbf{x}\) (Eq. 1). The currents \(\mathbf{I}\) flowed through the synstors to the neuron/interface circuits, triggering the actuation pulses \(\mathbf{y}\) that modify the shape of the wing. When \(\mathbf{y}\) pulses were triggered, \(\mathbf{z}\) pulses satisfying \(\overline{\mathbf{z}}=0\) (Eq. 4) and \(\overline{z_n y_{n'}}=\eta_n\,\delta_{nn'}\) (Eq. 5) with \(\eta_n\le 0\) were also triggered at the output electrodes of the synstor circuit via the neuron circuits, modifying \(\mathbf{W}\) according to the learning rule \(\dot{\mathbf{W}}=\alpha\,\mathbf{z}\otimes\mathbf{x}\) (Eq. 2) during real-time learning. The output pulses from the neuron circuits were converted to the actuation voltage \(V_a\) via an interface circuit (FPGA, Xilinx, Kintex-7) to control the wing.
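The signal chain from measured forces to the objective function can be sketched as follows. The text does not define \(s_2\) or the exact rate-coding map, so this example assumes that \(s_1\) is the mean of \(F_D/F_L\) over a sampling window, \(s_2\) is its standard deviation over the same window, and the \(\mathbf{x}\) pulse rate is a clipped linear function of \(s_i\); all function names are hypothetical.

```python
import math
from typing import List, Tuple


def sensing_signals(F_D: List[float], F_L: List[float]) -> Tuple[float, float]:
    """s1: mean drag-to-lift ratio over a window; s2: its standard deviation
    (assumed definition of the fluctuation magnitude)."""
    ratios = [d / l for d, l in zip(F_D, F_L)]
    s1 = sum(ratios) / len(ratios)
    s2 = math.sqrt(sum((r - s1) ** 2 for r in ratios) / len(ratios))
    return s1, s2


def objective(s: Tuple[float, ...]) -> float:
    """E = (1/2) * sum_i s_i^2."""
    return 0.5 * sum(si * si for si in s)


def pulse_rate(s_i: float, s_max: float, r_max: float) -> float:
    """Assumed monotonic rate code: linear in s_i, clipped to [0, r_max]."""
    return r_max * min(max(s_i / s_max, 0.0), 1.0)


s = sensing_signals([1.0, 3.0], [10.0, 10.0])  # ratios 0.1 and 0.3
E = objective(s)
```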

Experimental setup for a morphing wing controlled by human operators

In the experiments with the morphing wing controlled by humans under the same conditions as the synstor circuit experiments (Figs. S5b, S6b), human operators without any prior knowledge of the morphing wing or its control system viewed the sensing signals (\(\mathbf{s}\)) displayed on a computer monitor and were instructed to minimize \(\mathbf{s}\) or \(E=\frac{1}{2}\mathbf{s}^{2}\). The operators triggered actuation pulses \(\mathbf{y}\) to change the wing shape by pressing two keys on a keyboard. The firing rates of the \(\mathbf{y}\) pulses were proportional to the keystroke times.

Experimental setup for a morphing wing controlled by ANN

In the experiments in which an ANN controlled the morphing wing in the same environment as the synstor circuit experiments (Figs. S5c, S6c), a Dell computer with an Intel i7-8700 CPU received the \(\mathbf{s}\) signals, executed the inference algorithm (\(\mathbf{I}=\mathbf{W}\,\mathbf{x}\), Eq. 1) in an ANN with three layers comprising nine neurons and 20 synapses (Fig. S7), and triggered actuation pulses, \(\mathbf{y}\), to change the wing shape. We tested ANNs with various structures and learning parameters and selected for the experiments the structure (nine neurons and 20 synapses) and learning parameters (a learning rate of \(5\times10^{-9}\) a.u. and a discount factor of 0.99995 a.u.) that gave the shortest learning time and the lowest objective function. Before each experiment, the synaptic weight matrix, \(\mathbf{W}\), in the ANN was set to random values, as in the synstor trials. Because of the large data size, executing the learning algorithm took much longer than executing the inference algorithm. In its \(n^{\mathrm{th}}\) round of trials, the ANN controller with synaptic weight matrix \(\mathbf{W}(n)\) controlled the wing for 30 s in the same environment as the synstor circuit, and the data collected in this inference experiment were then used to execute a policy gradient-based deep reinforcement learning algorithm46 for about 600 s, modifying \(\mathbf{W}(n)\) to \(\mathbf{W}(n+1)\). The modified \(\mathbf{W}(n+1)\) was then sent back for the \((n+1)^{\mathrm{th}}\) round of experiments to control the wing iteratively. Under the pre-stall condition, after 40 iterations of offline learning, the ANN weights gradually evolved from uncorrelated random values to stable correlated values, the ANN controller progressively learned to control the wing, and the objective function \(E=\frac{1}{2}\mathbf{s}^{2}\) gradually decreased to a stable minimum.
Under the highly chaotic stall condition, however, the ANN weights oscillated continuously and never stabilized over the 57 iterations of offline learning performed in the experiments. Without real-time learning, the computer could not dynamically optimize \(\mathbf{W}\) in the ANN to control the wing under the chaotic stall condition, and the ANN controller failed to recover the wing from the stall or to minimize the objective function \(E=\frac{1}{2}\mathbf{s}^{2}\).
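
For a rough sense of the latency of this sequential scheme, the nominal durations above (30 s of inference per round followed by about 600 s of offline learning) can be multiplied by the number of rounds. The sketch assumes that every round, including those under the stall condition, took exactly these nominal times and ignores data-transfer overhead, so its output is an estimate rather than a measured value; the function name is hypothetical.

```python
# Nominal per-round durations quoted in the text; treating them as exact
# (and applying them to the stall trials too) is an assumption.
INFERENCE_S = 30.0
LEARNING_S = 600.0

def sequential_training_time(rounds, inference_s=INFERENCE_S, learning_s=LEARNING_S):
    """Wall-clock time (s) for `rounds` cycles of online inference followed by offline learning."""
    return rounds * (inference_s + learning_s)

for label, rounds in [("pre-stall", 40), ("stall", 57)]:
    t = sequential_training_time(rounds)
    print(f"{label}: {rounds} rounds -> {t:.0f} s ({t / 3600:.1f} h)")
```

Since learning occupies about 95% of each round, the wall-clock time is dominated by the offline learning steps rather than by the inference experiments.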

Analysis of \(\left(\frac{\partial E}{\partial {y}_{n}}\right)\) during learning processes

During the learning processes of the synstor circuits, humans, and ANN, \(\frac{\partial E}{\partial y_{n}}\) can be derived from the linear model \(\delta E=\sum_{n'}\left(\frac{\partial E}{\partial y_{n'}}\right)\delta y_{n'}+\delta E^{0}\), where \(\sum_{n'}\left(\frac{\partial E}{\partial y_{n'}}\right)\delta y_{n'}\) represents the change in \(E\) due to learning (\(\delta y_{n'}\)), with \(\left(\frac{\partial E}{\partial y_{n'}}\right)\) as the model coefficients, and \(\delta E^{0}\) represents the part of \(\delta E\) unrelated to \(\delta y_{n'}\). Multiplying both sides of the equation by \(y_{n}-\overline{y}_{n}\) and taking the temporal average over a learning period gives \(\langle E,y_{n}\rangle=\langle\delta E,y_{n}\rangle=\sum_{n'}\left(\frac{\partial E}{\partial y_{n'}}\right)\langle\delta y_{n'},y_{n}\rangle+\langle\delta E^{0},y_{n}\rangle=\left(\frac{\partial E}{\partial y_{n}}\right)\langle y_{n},y_{n}\rangle\), where \(\langle a,b\rangle\) denotes the temporal covariance of \(a\) and \(b\). The last equality holds because \(\langle\delta E^{0},y_{n}\rangle=0\) (\(\delta E^{0}\) is unrelated to \(y_{n}\)) and \(\langle\delta y_{n'},y_{n}\rangle=\langle y_{n'},y_{n}\rangle=\langle y_{n},y_{n}\rangle\delta_{nn'}\) (i.e., the outputs of different channels are uncorrelated), so that \(\sum_{n'}\left(\frac{\partial E}{\partial y_{n'}}\right)\langle\delta y_{n'},y_{n}\rangle=\sum_{n'}\left(\frac{\partial E}{\partial y_{n'}}\right)\langle y_{n},y_{n}\rangle\delta_{nn'}=\left(\frac{\partial E}{\partial y_{n}}\right)\langle y_{n},y_{n}\rangle\). Thus

$$\left(\frac{\partial E}{\partial y_{n}}\right)=\langle E,y_{n}\rangle/\langle y_{n},y_{n}\rangle$$

(10)
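
Eq. 10 amounts to a per-channel regression slope of \(E\) on \(y_{n}\). The NumPy sketch below estimates it from sampled time series. The function name `sensitivity_from_covariance` and the synthetic data are hypothetical, and, as in the derivation, the estimate is only reliable when the output channels are mutually uncorrelated and \(\delta E^{0}\) is uncorrelated with every \(y_{n}\).

```python
import numpy as np

def sensitivity_from_covariance(E, Y):
    """Estimate dE/dy_n = <E, y_n> / <y_n, y_n> (Eq. 10) for every channel.

    E: shape (T,) samples of the objective function over a learning period.
    Y: shape (T, N) samples of the N output signals y_n.
    <a, b> is the temporal covariance over the T samples.
    """
    E = np.asarray(E, dtype=float)
    Y = np.asarray(Y, dtype=float)
    dE = E - E.mean()                 # fluctuation of E
    dY = Y - Y.mean(axis=0)           # y_n minus its temporal mean
    cov_Ey = dY.T @ dE / len(E)       # <E, y_n>
    var_y = (dY ** 2).mean(axis=0)    # <y_n, y_n>
    return cov_Ey / var_y

# Synthetic check (hypothetical data): uncorrelated outputs, a known linear
# sensitivity, and an additive term dE0 that is unrelated to y.
rng = np.random.default_rng(0)
T = 200_000
Y = rng.normal(size=(T, 3))
true_grad = np.array([0.8, -0.3, 0.0])
E = Y @ true_grad + 0.5 * rng.normal(size=T)
print(sensitivity_from_covariance(E, Y))  # approximately equal to true_grad
```

Because each ratio ignores cross-channel terms, correlated outputs would leak into each other's estimates; in that case a joint least-squares fit over all channels would be needed instead.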

When \(\frac{\partial E}{\partial\mathbf{y}}\ne 0\) and \(\frac{\partial E}{\partial\mathbf{W}}=\frac{\partial E}{\partial\mathbf{y}}\frac{\partial\mathbf{y}}{\partial\mathbf{W}}\ne 0\), the circuit operates in the super-Turing mode, simultaneously performing inference and learning to adjust \(\mathbf{W}\) toward \(\hat{\mathbf{W}}=\arg\min_{\mathbf{W}}E\), while \(\left|\frac{\partial E}{\partial\mathbf{W}}\right|\) gradually decreases toward 0. When \(\overline{E}\) reaches its minimum, \(\frac{\partial E}{\partial\mathbf{y}}=0\) and \(\frac{\partial E}{\partial\mathbf{W}}=\frac{\partial E}{\partial\mathbf{y}}\frac{\partial\mathbf{y}}{\partial\mathbf{W}}=0\); then \(\dot{\mathbf{W}}=0\), \(\mathbf{W}=\hat{\mathbf{W}}=\arg\min_{\mathbf{W}}E\) remains unchanged, and the circuit operates in the Turing mode (Figs. 4d, 5d).

Analysis of \(\left(\frac{\partial E}{\partial {{\bf{W}}}}\right)\) during ANN learning processes

During the ANN learning processes, \(\frac{\partial E}{\partial\mathbf{W}}\) can be derived from the linear model \(\delta E=\sum_{n',m'}\left(\frac{\partial E}{\partial w_{n'm'}}\right)\delta w_{n'm'}+\delta E^{0}\), where \(\sum_{n',m'}\left(\frac{\partial E}{\partial w_{n'm'}}\right)\delta w_{n'm'}\) represents the change in \(E\) due to learning (\(\delta w_{n'm'}\)), with \(\left(\frac{\partial E}{\partial w_{n'm'}}\right)\) as the model coefficients, and \(\delta E^{0}\) represents the part of \(\delta E\) unrelated to \(\delta w_{n'm'}\). Multiplying both sides of the equation by \(w_{nm}-\overline{w}_{nm}\) and taking the temporal average over a learning period gives \(\langle E,w_{nm}\rangle=\langle\delta E,w_{nm}\rangle=\sum_{n',m'}\left(\frac{\partial E}{\partial w_{n'm'}}\right)\langle\delta w_{n'm'},w_{nm}\rangle+\langle\delta E^{0},w_{nm}\rangle=\left(\frac{\partial E}{\partial w_{nm}}\right)\langle w_{nm},w_{nm}\rangle\), where the covariance \(\langle\delta E^{0},w_{nm}\rangle=0\), \(\langle\delta w_{n'm'},w_{nm}\rangle=\langle w_{n'm'},w_{nm}\rangle=\langle w_{nm},w_{nm}\rangle\delta_{nn'}\delta_{mm'}\), and hence \(\sum_{n',m'}\left(\frac{\partial E}{\partial w_{n'm'}}\right)\langle\delta w_{n'm'},w_{nm}\rangle=\left(\frac{\partial E}{\partial w_{nm}}\right)\langle w_{nm},w_{nm}\rangle\), leading to:

$$\left(\frac{\partial E}{\partial w_{nm}}\right)=\langle E,w_{nm}\rangle/\langle w_{nm},w_{nm}\rangle$$

(11)

During the ANN learning process, \(\left(\frac{\partial E}{\partial w_{nm}}\right)\) can be derived from the \(E\) and \(\mathbf{W}\) data based on Eq. 11 (Fig. S8). However, during the learning processes of the synstor and human neurobiological circuits, \(\mathbf{W}\) was neither measured nor recorded, so \(\frac{\partial E}{\partial w_{nm}}\) cannot be derived.

Analysis of the objective function \(E\) during learning processes

During the learning processes of the synstor circuits, humans, and ANN, the average rate of change of the objective function, \(\langle\dot{E}\rangle\), is a nonlinear function of time, but it is best fitted by the linear dynamic model \(\frac{d\langle E\rangle}{dt}=-(\langle E\rangle-E_{e})/T_{L}\), whose solution over the learning processes is

$$\left\langle E\left(t\right)\right\rangle =\left(E\left(0\right)-{E}_{e}\right)\,{e}^{-t/{T}_{L}}+{E}_{e}$$

(12)

where the fitting parameter \(T_{L}\) represents the average learning time, and \(E_{e}\) represents the equilibrium value of the objective function reached when \(t\gg T_{L}\) and \(\frac{d\langle E\rangle}{dt}\approx 0\) (Figs. 4c, 5c). Under this condition, after the learning processes conclude, both the synstor circuits and the human neurobiological circuits operate in the Turing mode, with \(\dot{\mathbf{W}}\approx 0\) and \(\mathbf{W}\approx\hat{\mathbf{W}}=\arg\min_{\mathbf{W}}E\).
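
Fitting Eq. 12 to a learning curve can be sketched with nonlinear least squares via SciPy's `curve_fit`. The text does not state which fitting routine was used, so this choice is an assumption, as are the synthetic learning curve and its parameter values (\(E(0)=1\), \(E_{e}=0.2\), \(T_{L}=8\) s, Gaussian noise of standard deviation 0.01).

```python
import numpy as np
from scipy.optimize import curve_fit

def learning_curve(t, E0, Ee, TL):
    """Eq. 12: <E(t)> = (E(0) - E_e) * exp(-t / T_L) + E_e."""
    return (E0 - Ee) * np.exp(-t / TL) + Ee

# Hypothetical noisy learning curve (synthetic, not experimental data).
rng = np.random.default_rng(1)
t = np.linspace(0.0, 60.0, 601)                       # time in seconds
E_obs = learning_curve(t, 1.0, 0.2, 8.0) + 0.01 * rng.normal(size=t.size)

# Rough initial guess: first sample, last sample, a fifth of the time span.
p0 = (E_obs[0], E_obs[-1], (t[-1] - t[0]) / 5)
(E0_fit, Ee_fit, TL_fit), _ = curve_fit(learning_curve, t, E_obs, p0=p0)
print(f"T_L = {TL_fit:.2f} s, E_e = {Ee_fit:.3f}")
```

Seeding \(E(0)\) and \(E_{e}\) from the first and last samples keeps the optimizer near the physically meaningful solution; the recording must extend well beyond \(T_{L}\) for \(E_{e}\) to be well constrained.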

Power consumption of inference and learning by the synstor circuit

During learning in the synstor circuit, the conductance was tuned by simultaneously applying a pair of voltage pulses with identical amplitudes to the input and output electrodes. Unlike the pulses used during inference, these pulses charged the capacitor formed between the reference electrode and the silicon channel but did not drive current through the Si channel. The average power consumption for learning in the synstor circuit can therefore be estimated as \(P_{L}\approx c_{T}\,V_{a}^{2}\,f_{p}\), where \(c_{T}\) is the total capacitance of the synstors in the circuit, \(V_{a}\) is the magnitude of the pulses, and \(f_{p}\) is the average frequency of the pulses applied for learning. In the learning process of the synstor circuit, \(c_{T}\approx 3.5\,\mathrm{pF}\), \(V_{a}=4.2\,\mathrm{V}\), and \(f_{p}\approx 0.6\,\mathrm{Hz}\), resulting in \(P_{L}\approx 37\,\mathrm{pW}\).

During inference in the synstor circuit, voltage pulses were applied to the input electrodes of the synstors, while their output electrodes were held at ground potential. The average power consumption of the circuit for inference,

$$P=\overline{\sum_{n,m}w_{nm}\,x_{m}^{2}}\approx w_{T}\,V_{a}^{2}\,D_{p}$$

(13)

where \(w_{T}\) denotes the total conductance of the synstors in the circuit, \(V_{a}\) the magnitude of the pulses, and \(D_{p}\) the average duty cycle of the pulses. During inference in the synstor circuit controlling the wing, \(w_{T}\approx 40\,\mathrm{nS}\), \(V_{a}=4.2\,\mathrm{V}\), and \(D_{p}=0.04\), so \(P\approx 28\,\mathrm{nW}\) (Fig. 6). The power consumed for learning is therefore substantially lower than that required for inference, and inference power dominates the overall power consumption of the synstor circuit.
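
Both synstor power estimates are simple products and can be evaluated directly from the quoted parameter values. The sketch below does this in SI units; the function names are hypothetical, and the formulas are the approximations stated in the text (capacitive charging for learning, Eq. 13 for inference), not a circuit simulation.

```python
def learning_power(c_total, v_pulse, f_pulse):
    """Capacitive charging power of the learning pulses: P_L ~ c_T * V_a**2 * f_p."""
    return c_total * v_pulse ** 2 * f_pulse

def inference_power(g_total, v_pulse, duty_cycle):
    """Eq. 13: resistive power during inference, P ~ w_T * V_a**2 * D_p."""
    return g_total * v_pulse ** 2 * duty_cycle

P_L = learning_power(3.5e-12, 4.2, 0.6)     # farads, volts, hertz
P_I = inference_power(40e-9, 4.2, 0.04)     # siemens, volts, dimensionless
print(f"learning  P_L = {P_L * 1e12:.1f} pW")
print(f"inference P   = {P_I * 1e9:.1f} nW")
print(f"ratio P / P_L = {P_I / P_L:.0f}")
```

Since both expressions share the factor \(V_{a}^{2}\), their ratio reduces to \(w_{T}D_{p}/(c_{T}f_{p})\), which makes clear why a low learning-pulse frequency keeps the learning power small.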

Power consumption of sequential inference and learning in ANN by the computer

According to analyses with the Python toolkits “keras_flops” and “pyperf”, the computing rates for sequentially executing the inference and learning programs of the ANN on the computer were estimated to be \(1.24\,\mathrm{kFLOPS}\) and \(2.0\,\mathrm{GFLOPS}\), respectively. With a computing energy efficiency of \(0.4\,\mathrm{GFLOPS/W}\) 50, the power consumption for executing the inference and learning programs on the computer was \(3.1\,\mu\mathrm{W}\) and \(5.0\,\mathrm{W}\), respectively, so the aggregate power consumption of the ANN for sequential inference and learning is \(5.0\,\mathrm{W}\) (Fig. 6).
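
The ANN power figures follow from dividing each computing rate by the quoted energy efficiency; the short sketch below reproduces that arithmetic. The helper name is hypothetical, and applying a single efficiency value to both the inference and learning workloads follows the text's own assumption.

```python
def power_from_flops(flops_per_s, flops_per_watt):
    """Power (W) = computing rate (FLOP/s) / energy efficiency (FLOP/s per W)."""
    return flops_per_s / flops_per_watt

EFFICIENCY = 0.4e9  # 0.4 GFLOPS/W, the efficiency quoted in the text

p_inf = power_from_flops(1.24e3, EFFICIENCY)   # inference program
p_learn = power_from_flops(2.0e9, EFFICIENCY)  # learning program
print(f"inference {p_inf * 1e6:.1f} uW, learning {p_learn:.1f} W, "
      f"total {p_inf + p_learn:.1f} W")
```

The inference term is about six orders of magnitude smaller than the learning term, which is why the aggregate is effectively the learning power alone.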
