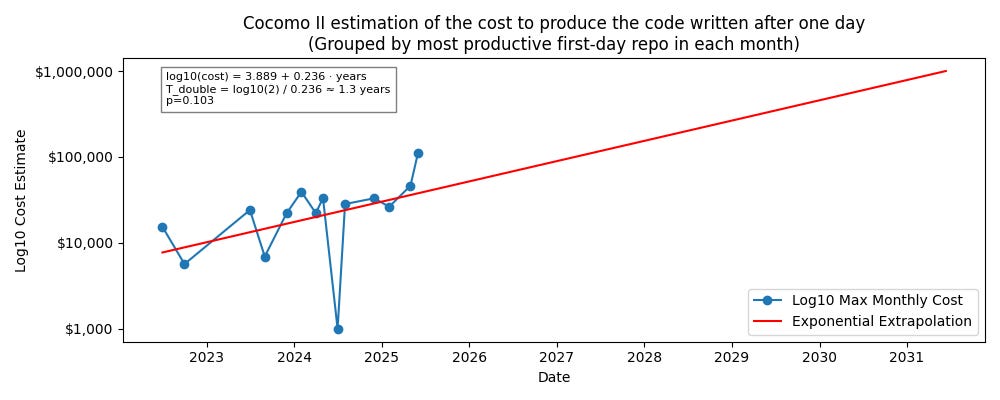

By 2031, I predict that it will be possible in a single day to produce software that would have taken $1,000,000 worth of development effort in 2021. I base it on the chart above, where I have extrapolated data from my last few years of programming.

Let's pull it apart to explain what's going on.

For the last 40 to 50 years, one of the interesting areas of software engineering research has been estimating how long it will take to develop a piece of software, and consequently how much it will cost. We have some pretty good formulae that take into account the programming language, the style of development, and other factors.

Occasionally when I'm working with an investment company, we run this in reverse: we look at the existing code that a startup has and estimate how much time (and therefore how much money) it would have taken to produce that software. That gives you some idea of how hard it would be for a competitor to appear on the scene with a clone of the startup's software.

So I applied the COCOMO II technique to software code repositories that I have created over the last few years, with a twist. The twist is that I didn't look at the present-day value of these repositories (because things that I've been working on for 4 or 5 years with customers are obviously going to be much bigger and more valuable than something I started a month ago). Instead, I looked at how much I got done in one day at the start of each project. I identified 26 repositories, each representing a customer project (academic or commercial) where development began simultaneously with project kickoff.

The reason I decided to do this was an experience I had this week working with a new customer. By the end of the day, I had written 1,110 lines of code plus some documentation. Not that long ago, that would have been extraordinarily productive. But in truth, I did not do much of the code writing. I just used Anthropic's Claude and burned through about $5 worth of credits. I reviewed a lot of code, made two tiny fixes manually, and designed and wrote the database schema by hand, but the rest was me just prompting a bot to write the code.

When I applied the COCOMO II technique to that one day of work, it reported that it would have taken $100,000 worth of development effort.
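For readers who have not met COCOMO II, the core of the calculation is small enough to sketch. The block below is a minimal illustration, not the tool or settings behind the numbers in this post. `A` and `B` are the published COCOMO II.2000 calibration constants; the scale-factor sum (all five factors assumed nominal), the neutral effort multiplier, and the `hypothetical_monthly_cost` figure are assumptions chosen for the example, so its output should not be expected to reproduce the $100,000 figure.

```python
# Sketch of a COCOMO II-style estimate with nominal settings only.
# Everything marked "assumed" is an illustrative choice, not from the post.

A = 2.94                  # productivity constant (COCOMO II.2000 calibration)
B = 0.91                  # base exponent (COCOMO II.2000 calibration)
SCALE_FACTOR_SUM = 18.97  # assumed: all five scale factors rated "nominal"


def effort_person_months(sloc: int, effort_multiplier: float = 1.0) -> float:
    """Estimated effort in person-months for `sloc` source lines of code."""
    ksloc = sloc / 1000.0
    exponent = B + 0.01 * SCALE_FACTOR_SUM  # slightly above 1
    return A * (ksloc ** exponent) * effort_multiplier


def cost_dollars(sloc: int, monthly_cost: float) -> float:
    """Convert effort to money at an assumed fully loaded monthly cost."""
    return effort_person_months(sloc) * monthly_cost


if __name__ == "__main__":
    lines = 1_110                       # the day's output mentioned in the post
    hypothetical_monthly_cost = 15_000  # assumed figure, purely illustrative
    print(f"{effort_person_months(lines):.2f} person-months")
    print(f"${cost_dollars(lines, hypothetical_monthly_cost):,.0f}")
```

The dollar figure is very sensitive to the monthly cost and to the effort multipliers, which is one reason different COCOMO-based tools can report quite different values for the same repository.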

From this, we can take a few observations:

We need to update COCOMO II, because I didn't earn $100,000 for one day's work. My consulting fees are actually quite affordable — did you like how I managed to insert that unashamed advertisement for my services into my Substack?

We can look at how these estimates of how much I got done in one day have changed over time. I'm fairly confident that after a 30-year career in software, any improvements are not coming from my abilities; they're coming from the tools and infrastructure surrounding me.

As we have migrated from auto-completers to chatbots writing more code, the productivity growth appears to be exponential: when I plot the estimated cost to develop and take logs, a straight line through it does seem to reflect the data reasonably well.

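A caveat: the fit is not conclusive. If there were no underlying trend at all, there is roughly a 10% chance that data like mine would show a trend this strong by coincidence. That is suggestive evidence of an exponential productivity improvement over time (a straight line on the log scale), but it falls short of the conventional 5% threshold for statistical significance.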

The value is doubling every 1.3 years. Extrapolating that trend is how I arrive at my prediction for 2031: I will be working with a client, and on my first day with them I will write some programs that, if I had tried to write them in 2021, would have cost $1 million (and obviously taken far longer than a day).
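The extrapolation itself is just repeated doubling. A minimal sketch, assuming the trend line passes through $1,000,000 in 2031 and doubles every 1.3 years (both numbers are taken from the text; the function names are hypothetical):

```python
import math

DOUBLING_TIME_YEARS = 1.3  # from the text
ANCHOR_YEAR = 2031.0       # from the text
ANCHOR_VALUE = 1_000_000   # dollars of 2021-era effort per day, from the text


def trend_value(year: float) -> float:
    """Value of one day's output on the trend line in the given year."""
    doublings = (year - ANCHOR_YEAR) / DOUBLING_TIME_YEARS
    return ANCHOR_VALUE * 2.0 ** doublings


def year_reaching(value: float) -> float:
    """Year in which the trend line reaches `value` dollars per day."""
    return ANCHOR_YEAR + DOUBLING_TIME_YEARS * math.log2(value / ANCHOR_VALUE)


if __name__ == "__main__":
    for year in (2021, 2026, 2031):
        print(year, f"${trend_value(year):,.0f}")
    print(f"$2,000,000 per day reached in {year_reaching(2_000_000):.1f}")
```

This only describes the fitted line. Individual days, including the one described above, can sit well above or below it.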

My projections are a bit slower than those from METR (https://metr.org/blog/2025-03-19-measuring-ai-ability-to-complete-long-tasks/), which finds that the length of tasks AI can complete is doubling roughly every 7 months.

Two things could be going on here. First, I started my trend back in 2021 and there really wasn't a lot of significant improvement in software development in that first year or two. Things have only really ramped up in the last year. By including that longer-term trend, I will underestimate a phase change like the one we're going through at the moment.

Second, software complexity isn't linear. It takes more than twice as much effort to write a program that's twice as long, because there is a slowdown associated with having to interface with the rest of the program. Making a change to a big program takes longer than making an otherwise-similar change to a smaller one. Therefore, we would expect the effort and cost involved in working with larger programs to be disproportionately higher, and it's possible that this is already starting to come into effect at the size of programs I'm writing. Perhaps this is nudging the doubling time in my data somehow.
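One common way to model this is a power law with an exponent slightly above 1, which is the general form COCOMO-style formulae take. A tiny sketch, where the exponent of 1.1 is an assumed illustrative value rather than anything measured from the repositories in this post:

```python
def effort_ratio(size_ratio: float, exponent: float) -> float:
    """How much effort grows when program size grows by `size_ratio`,
    under the power-law model effort ~ size ** exponent."""
    return size_ratio ** exponent


if __name__ == "__main__":
    assumed_exponent = 1.1  # illustrative assumption, not a fitted value
    # With exponent 1.0 doubling the size doubles the effort;
    # with 1.1 it multiplies the effort by about 2.14.
    print(effort_ratio(2.0, 1.0))
    print(round(effort_ratio(2.0, assumed_exponent), 2))
```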

What this implies economically depends on how elastic the demand for software development is. I believe that if software can be developed for half as much money (as I see happening every 16 months in the future), the number of things we will want to automate using software will more than double. There have always been projects that businesses wanted to do but could not justify because of the large development effort involved. I believe there is probably a very large backlog of such projects. As that backlog gets drained, more people will have more ideas about what could be automated by software, and so the backlog will actually fill faster than it drains.

Not everyone agrees with me on this. There are certainly people who believe that there is a fixed quantity of integration and automation in the world economy that could be done and that we may be nearing saturation point for that. There is some merit in that argument in that eventually we need to interface to the physical world and that the constraints on diffusion of technology and deployment of technology in the physical world are serious.

If we're bumping up against that soon, then that will put the brakes on what software could be developed, and the demand for new software could therefore hit some limits.

In the scenario where the backlog of software development projects never drains, there will continue to be demand for software developers.

While most of the tasks that I did this week could have been done by somebody without software development experience, there were just a few places where my experience meant that I was able to describe the problem more succinctly, or identify the problem that needed to be fixed faster, or simply just have the aptitude and interest to spend a day talking to an AI software engineering chatbot.

So there will continue to be software developers working, but they may not be editing all that much code directly. They might be reading a lot of code and directing bots to do certain kinds of code review.

The world economy is not doubling every 16 months, but software developer productivity is. If the demand for software developers increases because there is staggeringly more software to develop, and that software has some value, then software developers will take a larger portion of the value created. That suggests software developer salaries are going to rise quite rapidly.

By the end of Wednesday, the program was getting 99% accuracy on the task that was required to solve the business problem. The stretch goal was 99.9%, which I think is achievable, but that will require another day or two of experimentation to figure out how to do it.

It was sufficient for what was needed: it was enough for my client to be able to move forward with the business discussions he needed to have with his clients to say “yes, I can honestly say that this is possible” and hopefully win some new business. So I expect that we’ll talk again in a few weeks once he has bagged that contract. Since the whole thing he was aiming to do would have been impossible prior to GPT-3, these are all new software goods and services that expand the size of the market. One way or another, I’ll have plenty of work to do, if not for him then for someone else.

But also: that meant that I effectively had a one-day contract which achieved the business goal. In the past that might have been a week-long contract. This shifts the balance between how much effort I will need to spend finding new clients and how much I spend servicing existing ones.

What I find odd is that it is increasingly difficult for junior developers to get jobs. I have several very strong Master’s-level students from last semester who are still looking for jobs 6 months later. (Ping me if you are hiring and I’ll introduce you.) This is a sharp contrast to my experience: I graduated into a recession with a Bachelor’s and had jobs lined up within a few weeks, in a world where software could do much less.

It’s a bit of a paradox: there’s an increasing backlog of software that could be developed economically, that would have a positive return on investment. It's now possible to hire someone on a junior developer wage who can write the backend, the frontend, the mobile app, and also do some data science, AI, and cryptography if that’s needed to make a project viable. That would have commanded a rock-star premium wage 2 to 3 years ago. So there’s an increasing demand, and the input costs are getting cheaper, but we’re not seeing this in the lived experiences of junior developers.

Why not?

I have a suspicion that the problem is that a lot of companies have been burned and are afraid of software development projects. A lot of consultancies can’t deliver; managing software projects in-house is hard work; in the past software projects were big-ticket line items that needed to be budgeted for. So it’s easier politically to start no ambitious software projects.

If it’s just fear and politics that are driving the lack of consequent software development activity, this means that the companies that have the skills and courage to execute on software development projects are going to reap a lot of benefits.

This is one of the reasons why I'm tracking which boards of directors contain people who could themselves write a piece of software: https://boards.industrial-linguistics.com/ . A company whose board consists entirely of people who cannot themselves write a program is going to be much more reluctant to launch into a major initiative automating portions of its work through software than a company that has software-capable board members who could do it themselves if they had to.

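An interesting side observation: if the trend continues beyond the $1 million mark (which is perhaps extrapolating too far), then another thousandfold increase takes about ten more doublings. At one doubling every 1.3 years that is roughly 13 years, so by around 2044 a single person in a day would be able to write $1 billion worth of software.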

At that point, even having one technically capable software person in the organization would be enough to transform an entire 2021-era enterprise's IT, replacing everything from mainframes to routers, desktops, and sensors.

From an accounting perspective, the rapid decrease in software development costs suggests software assets should depreciate by at least 50% every 16 months, effectively reaching zero value within a few years. This sounds about right and it matches established practice, but the interesting thing is that previously (say prior to 2020) software should not have been depreciated quite so quickly. Have we been accounting for software intellectual property incorrectly all this time?
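The arithmetic behind that depreciation schedule, as a small sketch (the 16-month halving period is from the text; the function name is hypothetical):

```python
def remaining_fraction(months: float, half_life_months: float = 16.0) -> float:
    """Fraction of the original replacement value left after `months`,
    if the cost of rebuilding the software halves every `half_life_months`."""
    return 0.5 ** (months / half_life_months)


if __name__ == "__main__":
    # After 48 months (three halvings) one eighth of the value remains.
    for months in (16, 32, 48, 64, 80):
        print(f"{months:>3} months: {remaining_fraction(months):.1%}")
```

On this schedule the value never quite reaches zero, but after five or six years it is small enough to treat as fully written off.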

It also means that startups that are relying on the difficulty of software implementation as part of their moat are in for a bit of a shock.

Given this rapid decrease in software costs, I’m uncertain about the ideal balance between software developers and business developers for startups. Perhaps we need fewer software-focused employees, or perhaps the opposite.

Should we update away from the idea that the right balance for an early-stage startup is one software developer for every two business developers? If software is less valuable per unit, then this would argue we need more focus on the business side of the startup; on the other hand, if this now means that the startup can achieve more and shoot for more ambitious targets, then it should be the other way around.

I still don't have clear thoughts about this.

This has been a busy week for software developers.

I did the development work on Wednesday, so I was using Claude Sonnet 3.7. By Thursday I was using OpenAI Codex to write the code that analysed the repositories (it’s OK — it takes a bit of getting used to the way you have to structure your code to make the best of it). By Thursday night Google had announced Jules, so I could have used that. I’ll have to give it a try soon.

It’s Friday in Sydney, so I’ve just heard the announcement of Claude Sonnet 4.0 and updated my systems to use it. If it’s better than what I was using on Wednesday (which it surely will be), then that number of “$100,000 worth of 2021-era software written in one day” is already outdated.

I’ll have to wait for a customer who wants something AI-ish built to try out those other tools. I might be waiting days for that.
